What skills should a good Apache Spark freelancer have?

A good Apache Spark freelancer should have strong skills in big data processing and analytics. They should know at least one of the languages commonly used with Spark: Python, Scala, or Java. Experience with data transformation, machine learning integration, and real-time data streaming is also important.

How do I decide the project scope for an Apache Spark expert?

First, think about what you need. Do you want data analysis, a machine learning model, or something else? Make a list of specific tasks and the outcomes you want. This helps the expert know exactly what to do.

What deliverables should I expect from an Apache Spark project?

Deliverables can include data pipelines, machine learning models, and analytics reports. Make sure the freelancer knows exactly what you want at the start. This way, they can provide the right results for your needs.

How do I check the expertise of a freelancer in Apache Spark?

Look at their previous projects and reviews. Ask to see examples of their work, like data processing tasks they've done. This can show you their ability to handle complex data workflows.

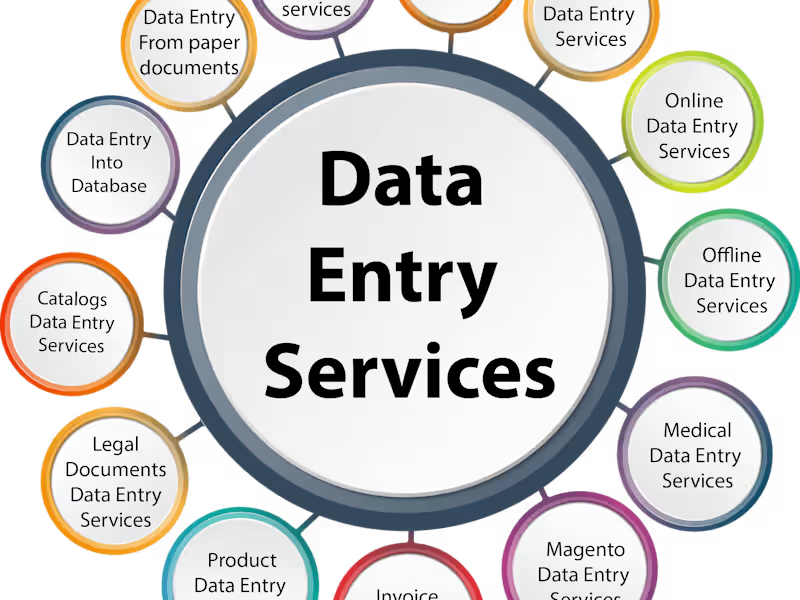

What tools or platforms work well with Apache Spark?

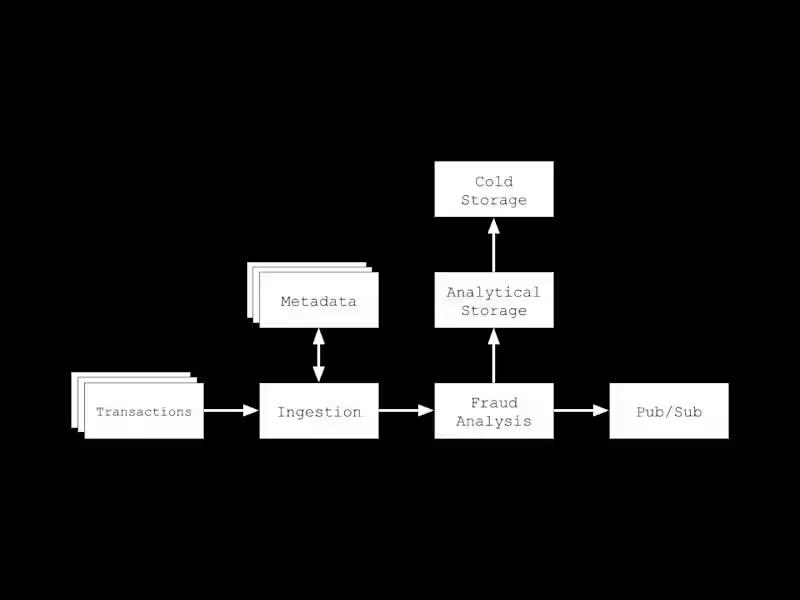

Apache Spark works well with Hadoop clusters, cloud platforms, and data storage solutions like HDFS. It can also integrate with tools like Kafka for real-time data processing. Make sure your freelancer is familiar with the integrations your project needs.
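As a rough illustration of what a Kafka integration can look like, here is a minimal PySpark sketch that reads a Kafka topic with Structured Streaming and writes it out as Parquet files. The broker address, topic name, and paths are hypothetical placeholders, and the sketch assumes the Spark Kafka connector package is available on the cluster. A real pipeline would likely add schema parsing and error handling.

```python
def kafka_source_options(bootstrap_servers, topic, starting_offsets="latest"):
    """Build the option map for Spark's Kafka source."""
    return {
        "kafka.bootstrap.servers": bootstrap_servers,
        "subscribe": topic,
        "startingOffsets": starting_offsets,
    }


def stream_kafka_to_parquet(spark, options, output_path, checkpoint_path):
    """Start a streaming query that copies Kafka records to Parquet.

    `spark` is an existing SparkSession. Both paths are assumptions; they
    could point at HDFS (hdfs://...) or cloud object storage.
    """
    events = (
        spark.readStream.format("kafka")
        .options(**options)
        .load()
        # Kafka delivers keys and values as bytes, so cast them to strings.
        .selectExpr(
            "CAST(key AS STRING) AS key",
            "CAST(value AS STRING) AS value",
            "timestamp",
        )
    )
    return (
        events.writeStream.format("parquet")
        .option("path", output_path)
        .option("checkpointLocation", checkpoint_path)
        .start()
    )


# Hypothetical broker and topic names, for illustration only.
options = kafka_source_options("broker1:9092", "events")
```

Asking a candidate to walk through a snippet like this (what the checkpoint location is for, how they would parse the message value) is one way to gauge their familiarity with the integration.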

How do I set clear goals with a freelance Apache Spark expert?

Discuss what your business needs at the start. Share your vision and any specific metrics or outcomes you aim to achieve. This helps the expert align their work with your expectations.

How important is communication before starting an Apache Spark project?

Communication helps everyone understand the project goals and tasks clearly. Talk about timelines, expectations, and any special needs. This makes sure both sides are on the same page before work begins.

What timeline is realistic for an Apache Spark project?

Timelines depend on the project's size and complexity. A small data task might take a few days, while larger projects can take weeks. Discuss and agree on a timeline early with the freelancer.

What are key challenges an Apache Spark expert might face?

Challenges can include handling very large data volumes and optimizing processing speed. Integrating other tools and ensuring data quality can also be tricky. Knowing about these in advance helps you and the freelancer plan for them.
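To make the "optimizing processing speed" point concrete, one common tuning question is how many partitions to split a dataset into. The sketch below is a back-of-the-envelope estimate, not a rule: it assumes a target of about 128 MiB per partition, which matches the default of Spark's `spark.sql.files.maxPartitionBytes` setting. The function name is hypothetical, and the right number for a given job depends on the cluster and workload.

```python
import math

# Assumed target size per partition (128 MiB); tune for your workload.
DEFAULT_TARGET_BYTES = 128 * 1024 * 1024


def suggest_partition_count(total_bytes, target_bytes=DEFAULT_TARGET_BYTES):
    """Estimate a starting partition count for a dataset of `total_bytes`."""
    if total_bytes <= 0:
        return 1
    return max(1, math.ceil(total_bytes / target_bytes))


ten_gib = 10 * 1024**3
print(suggest_partition_count(ten_gib))  # 80
```

An experienced freelancer would treat an estimate like this as a starting point and then adjust it based on what they observe in the Spark UI.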

Why is project documentation important with Apache Spark?

Documentation makes sure the work can be understood and maintained later. It should explain how the data processing was done and describe any models that were created. This is valuable for ongoing use and for future projects.

Who is Contra for?

Contra is designed for both freelancers (referred to as "independents") and clients. Freelancers can showcase their work, connect with clients, and manage projects commission-free. Clients can discover and hire top freelance talent for their projects.

What is the vision of Contra?

Contra aims to revolutionize the world of work by providing an all-in-one platform that empowers freelancers and clients to connect and collaborate seamlessly, eliminating traditional barriers and commission fees.

Explore Apache Spark projects on Contra

Top services from Apache Spark freelancers on Contra

Apache Spark

Database Engineer

Data Scientist

+5

Data Science and Data Visualization for Optimal Business Growth

Contact for pricing

Apache Spark

Database Engineer

Backend Engineer

+3

Empowering Businesses with Data-Driven Backend Solutions

Contact for pricing

Apache Spark

Data Engineer

Database Engineer

+3

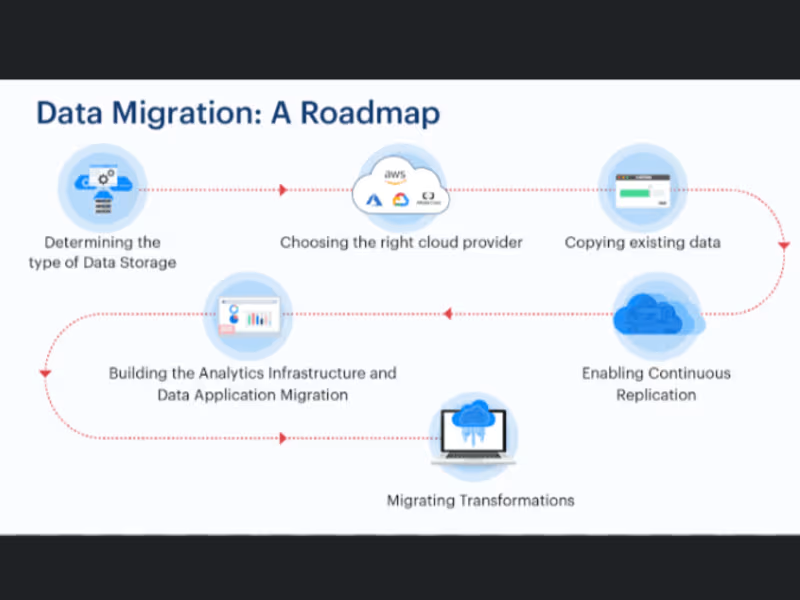

Cloud Migration

$1,600