Google Data Pipeline

Problem Statement

The client's team had been manually searching Google, copying the results into a spreadsheet for list building, and then cleaning and verifying each record by hand before using the data for campaigns and business decisions. This process yielded only 1,000 to 1,500 records per day, which fell short of what the client's business needed.

My Solution

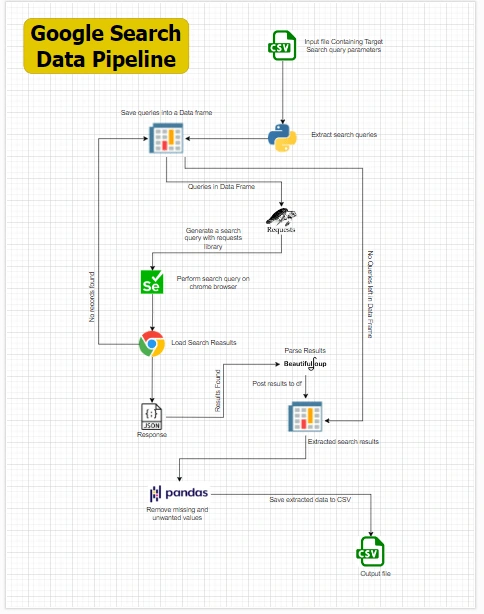

I built a data pipeline that generates customized queries from predefined data tables and scrapes the matching Google search results pages. The pipeline then transforms the scraped results and exports them to a CSV file that is ready to use for campaigns.
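To illustrate the query-building step, here is a minimal sketch. It assumes the predefined tables are simple lists of roles and cities and that a query is the quoted combination of one value from each. The names `ROLES`, `CITIES`, `build_queries` and `to_search_url` are hypothetical and are not taken from the actual project.

```python
from itertools import product
from urllib.parse import urlencode

# Hypothetical lookup tables; the real pipeline's tables are not shown here.
ROLES = ["marketing manager", "founder"]
CITIES = ["Austin", "Denver"]


def build_queries(roles, cities, site=None):
    """Combine every role with every city into one quoted search query."""
    queries = []
    for role, city in product(roles, cities):
        parts = [f'"{role}"', f'"{city}"']
        if site:
            # Optionally restrict results to a single site (assumed feature).
            parts.append(f"site:{site}")
        queries.append(" ".join(parts))
    return queries


def to_search_url(query, base="https://www.google.com/search"):
    """URL-encode the query into a search URL the scraper can request."""
    return f"{base}?{urlencode({'q': query})}"
```

Calling `build_queries(ROLES, CITIES)` gives one query per role and city pair (four in this case), and `to_search_url` turns each query into a URL for the automated search step.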

The key features of the pipeline are:

Query Building

Automated Google Search

Scraping of Google Search Results

Data Processing (handling missing values, removing outliers, deleting unwanted results)

Export data to CSV
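The data processing and export features listed above could look roughly like the following pandas sketch. It assumes scraped rows carry `title`, `url` and `snippet` columns, treats empty or overly long titles as outliers, and filters by a blocklist of domains. All of these choices, along with the names `clean_results` and `export_csv`, are illustrative assumptions and not the project's actual rules.

```python
from urllib.parse import urlparse

import pandas as pd


def _domain(url):
    """Return the host of a URL without a leading 'www.'."""
    host = urlparse(url).netloc.lower()
    return host[4:] if host.startswith("www.") else host


def clean_results(raw, blocked_domains=("example-ads.com",), max_title_len=120):
    """Clean scraped rows; column names and thresholds are assumptions."""
    df = raw.copy()
    # Missing values: blank out missing text fields, drop rows without a URL.
    df["title"] = df["title"].fillna("").str.strip()
    df["snippet"] = df["snippet"].fillna("")
    df = df.dropna(subset=["url"])
    # Outliers (assumed definition): empty or implausibly long titles.
    title_len = df["title"].str.len()
    df = df[(title_len > 0) & (title_len <= max_title_len)]
    # Unwanted results: blocked domains and duplicate URLs.
    df = df[~df["url"].map(_domain).isin(blocked_domains)]
    df = df.drop_duplicates(subset="url")
    return df.reset_index(drop=True)


def export_csv(df, path=None):
    """Write the frame to `path`, or return the CSV text when path is None."""
    return df.to_csv(path, index=False)
```

In practice, the cleaned frame would be written with something like `export_csv(clean_results(raw), "leads.csv")`, where the file name is a placeholder.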

Tools and Technologies

Python

Pandas

Excel

Beautiful Soup

Selenium

Requests

Results

The pipeline generates 5,000 to 7,000 records per execution. The team no longer spends time searching for and copying data from Google. The data produced by the pipeline is filtered, structured, and ready to use for campaigns and analysis.

Conclusion

The data pipeline addressed the inefficiencies and limitations of the manual data collection process previously used by the client's team. By automating search and data collection from Google, the pipeline yields between 5,000 and 7,000 records per execution, compared with the previous 1,000 to 1,500 records per day. This streamlined approach saves time and effort and delivers cleaner, more consistent data, helping the client's team make better-informed business decisions and run more effective campaigns.

Data Pipeline Structure

Posted Oct 7, 2024

Data enrichment for list building, email marketing and data entry