Llama 3 Fine-Tuning and AWQ Quantization

Project Overview

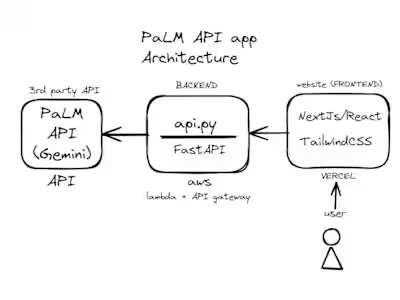

I fine-tuned the Llama 3 8B base model into a conversational model using Nvidia's ChatQA dataset, then quantized it to 4-bit with AWQ (Activation-aware Weight Quantization). This project showcases my experience with model fine-tuning, efficient quantization techniques, and preparing models for deployment.
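The core idea behind AWQ can be illustrated with a simplified, pure-Python sketch. It is not the AutoAWQ implementation and not necessarily the exact tooling used for this model. The function names, the toy weight matrix and calibration activations, the group size of 4, and the grid search over the exponent `alpha` are illustrative assumptions. The sketch measures each input channel's mean activation magnitude, scales the matching weight columns by that magnitude raised to `alpha` before group-wise 4-bit round-to-nearest (RTN) quantization, divides the scale back out, and keeps the `alpha` that minimizes output error on the calibration data. Because `alpha = 0` reproduces plain RTN, the search can never do worse than RTN on that data.

```python
import random


def quantize_group(values, bits=4):
    """Asymmetric round-to-nearest quantization of one group; returns dequantized values."""
    qmax = 2 ** bits - 1
    lo, hi = min(values), max(values)
    scale = max(hi - lo, 1e-5) / qmax  # guard against constant groups
    zero = min(max(round(-lo / scale), 0), qmax)  # zero point must fit in `bits` bits
    return [(min(max(round(v / scale) + zero, 0), qmax) - zero) * scale for v in values]


def quantize_weight(W, group_size, bits=4):
    """Quantize each row of W (out_features x in_features) in groups along the input dimension."""
    return [
        [q for start in range(0, len(row), group_size)
         for q in quantize_group(row[start:start + group_size], bits)]
        for row in W
    ]


def matvec(W, x):
    return [sum(w * xi for w, xi in zip(row, x)) for row in W]


def output_error(W, W_hat, X):
    """Mean squared error between original and quantized layer outputs over calibration inputs X."""
    total = 0.0
    for x in X:
        total += sum((a - b) ** 2 for a, b in zip(matvec(W, x), matvec(W_hat, x)))
    return total / len(X)


def awq_search(W, X, group_size=4, bits=4, grid=20):
    """Grid-search the exponent alpha for per-channel scales s = mean|x| ** alpha (hypothetical helper)."""
    n_in = len(W[0])
    act_mag = [sum(abs(x[j]) for x in X) / len(X) for j in range(n_in)]
    best = None
    for i in range(grid + 1):
        alpha = i / grid
        s = [max(m, 1e-8) ** alpha for m in act_mag]
        # Scale weight columns by s before quantizing, so salient channels lose less precision.
        q = quantize_weight([[w * s[j] for j, w in enumerate(row)] for row in W], group_size, bits)
        # Fold the scale back out: Q(W * s) applied to x / s equals (Q(W * s) / s) applied to x.
        W_hat = [[w / s[j] for j, w in enumerate(row)] for row in q]
        err = output_error(W, W_hat, X)
        if best is None or err < best[0]:
            best = (err, alpha, W_hat)
    return best


random.seed(0)
W = [[random.gauss(0, 1) for _ in range(8)] for _ in range(4)]
# Toy calibration activations in which input channels 0 and 5 are much larger ("salient").
X = [[random.gauss(0, 10 if j in (0, 5) else 1) for j in range(8)] for _ in range(32)]

rtn_err = output_error(W, quantize_weight(W, group_size=4), X)
awq_err, alpha, _ = awq_search(W, X)
print(f"RTN error: {rtn_err:.4f}  AWQ-style error: {awq_err:.4f} (alpha={alpha})")
```

In AWQ itself the inverse scale is typically folded into the preceding operation rather than applied at runtime, so the protection of salient channels comes without extra inference cost.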

Details

Model: Llama 3 8B

Quantization: AWQ 4-bit

Dataset: Nvidia ChatQA Training Data

Frameworks and Tools: PyTorch, Hugging Face, Safetensors

Capabilities: Conversational QA, tabular and arithmetic calculations, retrieval-augmented generation

Quantization Benefits: Efficient low-bit, weight-only quantization that cuts weight memory roughly fourfold versus FP16 and offers faster inference than GPTQ, making it suitable for high-throughput, concurrent inference in multi-user server scenarios.

Model Repository: Sreenington/Llama-3-8B-ChatQA-AWQ (Hugging Face)
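A back-of-the-envelope estimate shows why 4-bit weights matter when serving an 8B model. This sketch assumes a nominal 8.0 billion parameters, all of them quantized, a group size of 128 (a common AWQ setting), and one 16-bit scale plus one packed 4-bit zero point per group. These figures are assumptions for illustration, not measurements of this checkpoint, and `awq_weight_bytes` is a hypothetical helper.

```python
def awq_weight_bytes(n_params, bits=4, group_size=128, scale_bytes=2, zero_bits=4):
    """Rough storage estimate for group-wise quantized weights (hypothetical helper).

    Assumes every parameter is quantized, with one 16-bit scale and one
    packed zero point per group of `group_size` weights.
    """
    n_groups = n_params / group_size
    packed = n_params * bits / 8                       # the 4-bit weights themselves
    metadata = n_groups * (scale_bytes + zero_bits / 8)  # per-group scale + zero point
    return packed + metadata


N_PARAMS = 8.0e9  # nominal "8B"; the real parameter count differs slightly

fp16_gb = N_PARAMS * 2 / 1e9          # 2 bytes per FP16 weight
awq_gb = awq_weight_bytes(N_PARAMS) / 1e9

print(f"FP16 weights:      ~{fp16_gb:.1f} GB")
print(f"AWQ 4-bit weights: ~{awq_gb:.2f} GB")
print(f"Reduction:         ~{fp16_gb / awq_gb:.1f}x")
```

The estimate covers weights only. The KV cache and activations add to memory at inference time, and layers that are commonly kept in 16-bit (such as the embeddings and output head) make the real checkpoint larger than this figure. The memory freed by quantization is what leaves room for larger batches and more concurrent requests on a single GPU.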


Posted Jul 7, 2024

I fine-tuned the Llama 3 8B base model using Nvidia's ChatQA dataset and applied AWQ 4-bit quantization for efficient inference. The model is available on Hugging Face.
