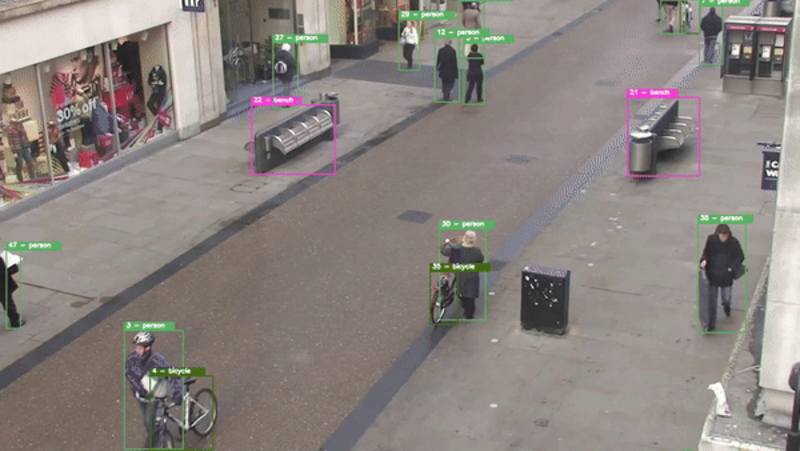

What Is an AI Model Developer?

Core Responsibilities in AI Development

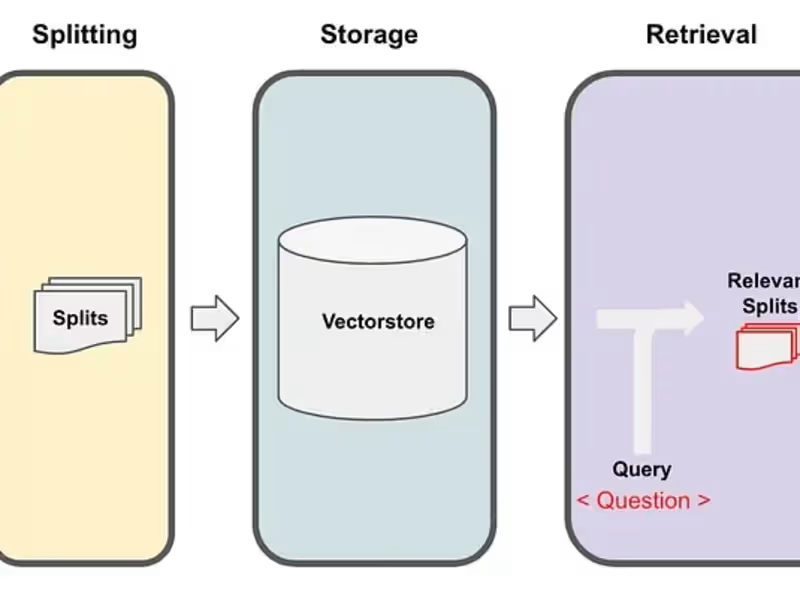

Full-Stack AI Integration Skills

Cross-Functional Collaboration Requirements

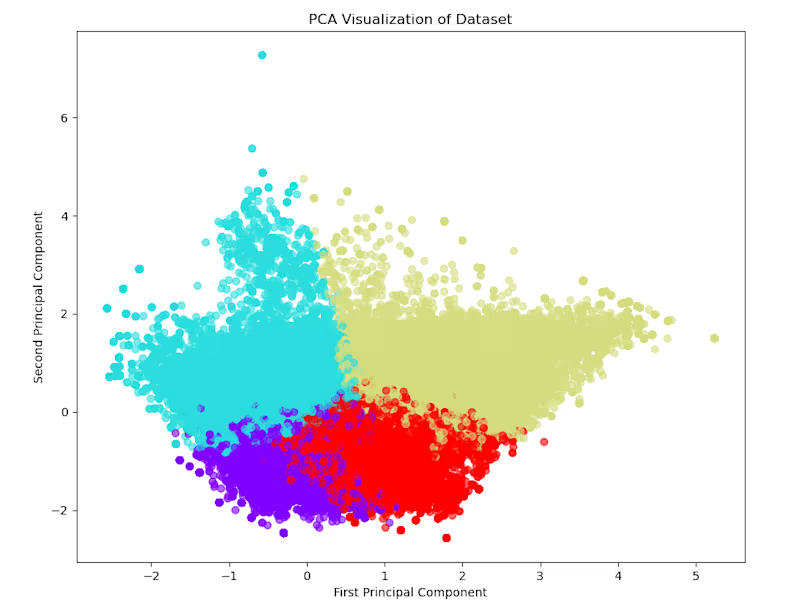

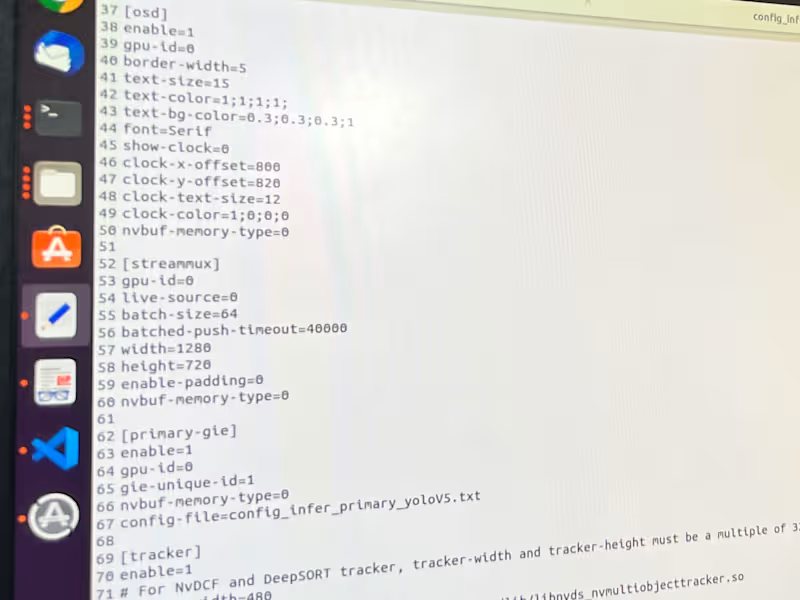

Essential Technical Skills for AI Model Developers

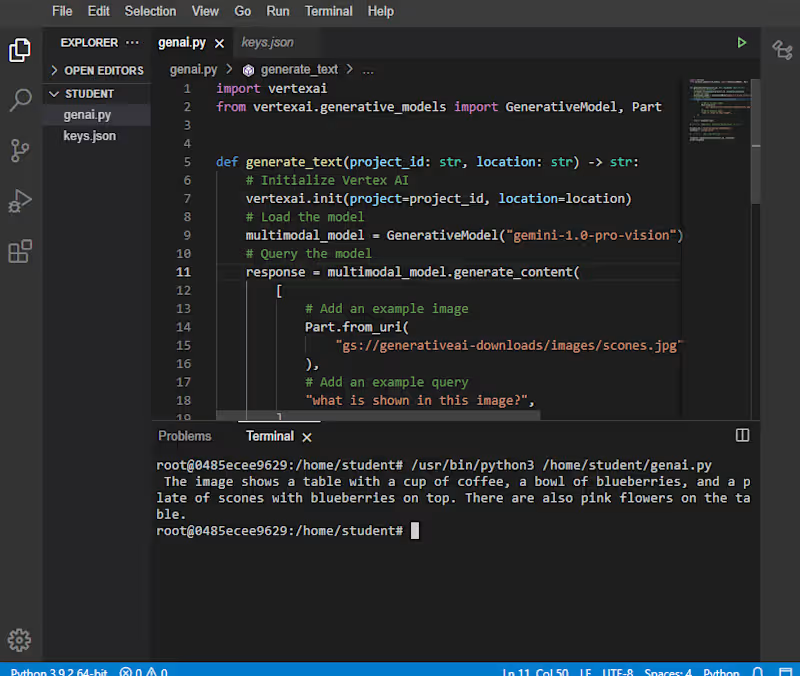

Core Programming Languages

Machine Learning Frameworks

Cloud AI Services Expertise

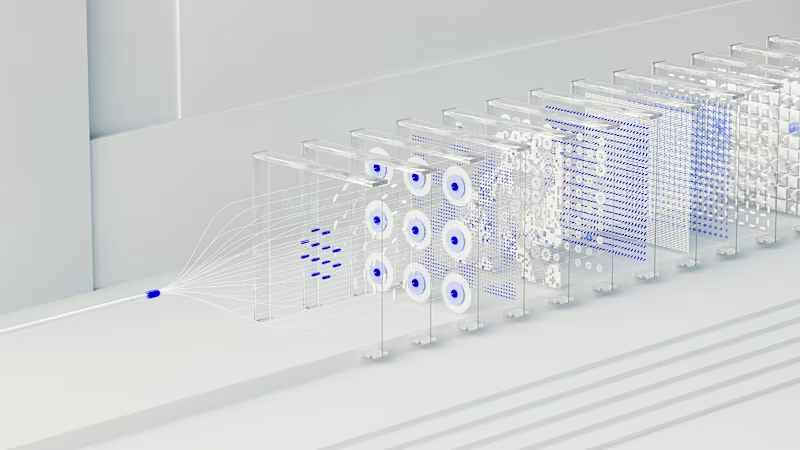

Advanced Neural Network Knowledge

Where to Find AI Model Developers

Specialized AI Talent Platforms

Academic Partnerships

Global Remote Talent Pools

Professional AI Communities

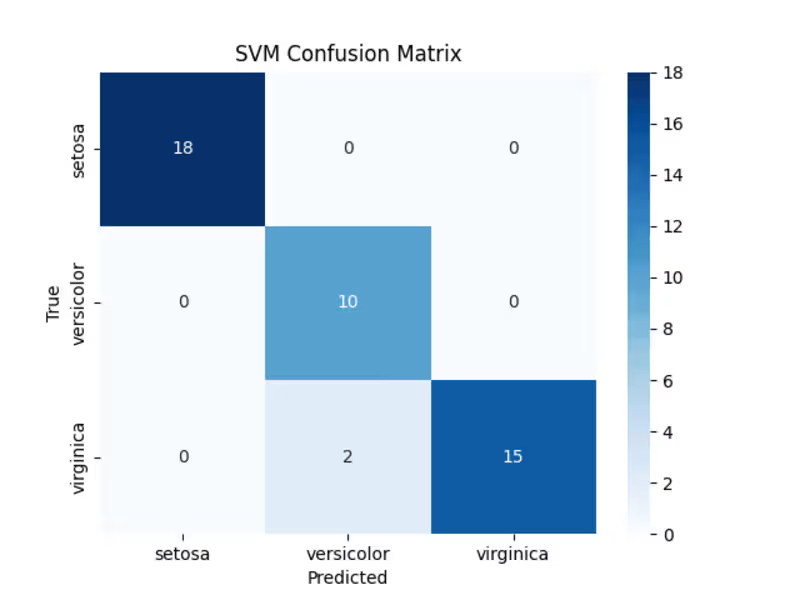

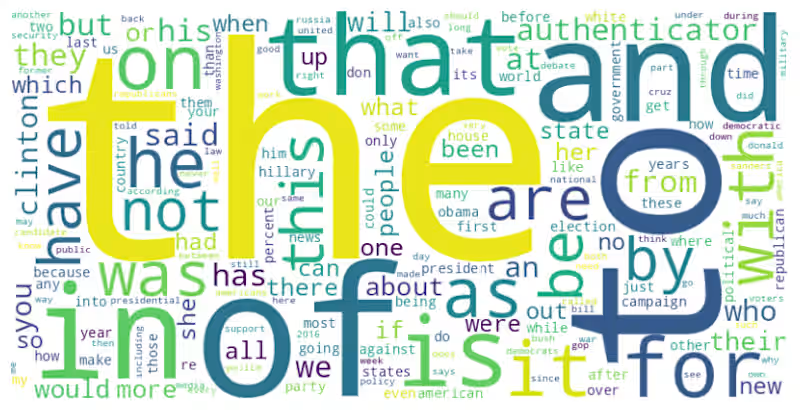

How to Assess AI Developer Candidates

Technical Screening Methods

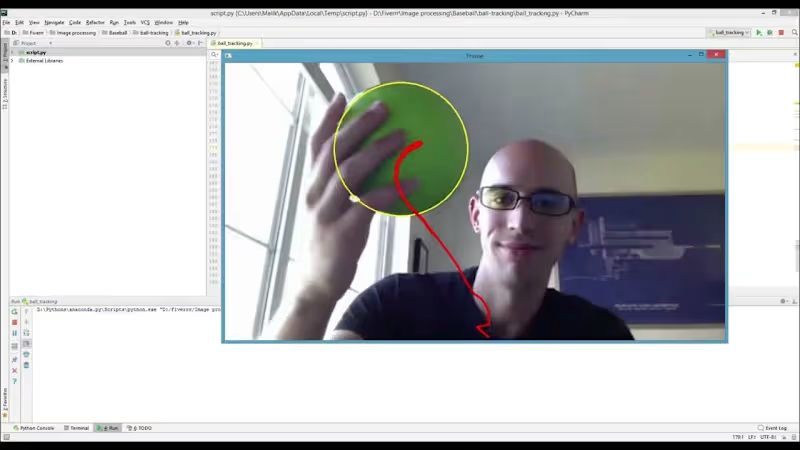

Live Coding Challenges

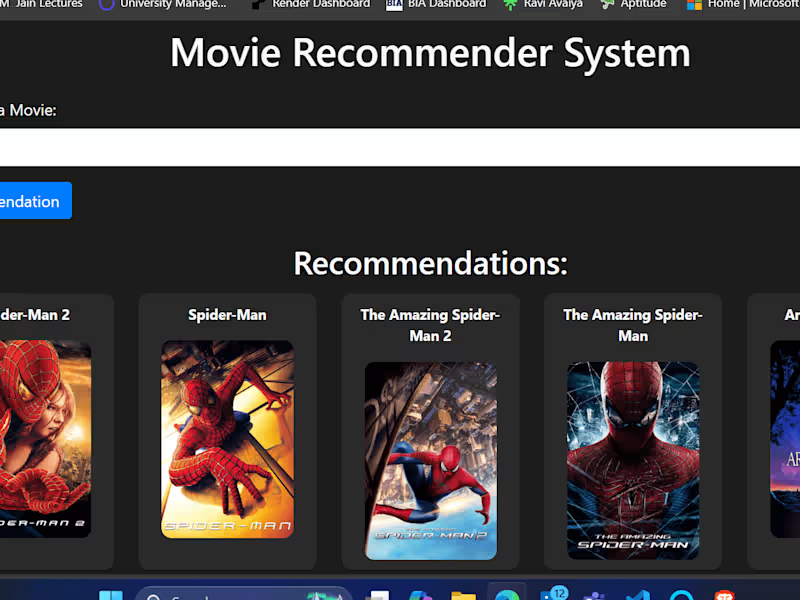

Portfolio Evaluation Criteria

AI Ethics Assessment

Compensation Strategies for AI Model Developers

Global Salary Benchmarks

Equity and Stock Options

Performance-Based Incentives

Education and Training Budgets

Building Your AI Development Team Structure

Team Composition Models

Remote vs On-Site Considerations

Collaboration Framework Setup

7 Steps to Hire AI Model Developers

Step 1: Define Project Requirements

Step 2: Create Detailed Job Descriptions

Step 3: Set Up Technical Assessments

Step 4: Conduct Structured Interviews

Step 5: Evaluate Cultural Fit

Step 6: Negotiate Terms

Step 7: Onboard Successfully

Common Hiring Mistakes to Avoid

Rushing the Screening Process

Overlooking Soft Skills

Inadequate Technical Vetting

Misaligned Expectations

Retaining Your AI Development Talent

Career Development Pathways

Research Freedom Allocation

Continuous Learning Programs

Work-Life Balance Initiatives

Legal and Compliance Considerations

AI Ethics Requirements

Data Privacy Regulations

Intellectual Property Protection

Contractual Obligations

Managing AI Development Projects

Agile Methodologies for AI

Documentation Standards

Quality Assurance Processes

Performance Monitoring Systems