Aatif Mohd - SEO Consultant on LinkedIn: Website Hosting & Craw…

SEOs, how often do you check websites for host issues that could affect SEO performance? 🤔

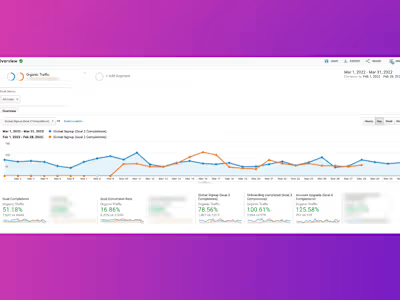

I'm working on a website that earlier had a ton of indexing issues: a huge chunk of its newly published content was falling into one of two buckets:

a) Discovered - currently not indexed

b) Crawled - currently not indexed

Digging deeper into the Crawl Stats report in GSC (Google Search Console),

I was able to find that the website host was unresponsive from May 10 to May 12 and no crawl requests were made during this time.
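If you export daily crawl request counts, a gap like this is easy to spot programmatically. Here is a minimal Python sketch; the `days_without_crawls` helper, the year, and the counts are all hypothetical placeholders for illustration, not the actual export from this site.

```python
from datetime import date, timedelta


def days_without_crawls(requests_by_day: dict[date, int], start: date, end: date) -> list[date]:
    """Return every day in [start, end] with zero (or no recorded) crawl requests."""
    missing = []
    day = start
    while day <= end:
        # A day absent from the export is treated the same as a day with 0 requests.
        if requests_by_day.get(day, 0) == 0:
            missing.append(day)
        day += timedelta(days=1)
    return missing


# Made-up counts for illustration only (year assumed).
sample = {
    date(2022, 5, 9): 350,
    date(2022, 5, 10): 0,
    date(2022, 5, 12): 0,
    date(2022, 5, 13): 290,
}

gaps = days_without_crawls(sample, date(2022, 5, 9), date(2022, 5, 13))
```

Treating a missing day as zero is a design choice here; if your export can skip days for other reasons, you may want to handle those separately.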

Looking over the Host status report, I could see the host wasn't performing at its best, and Googlebot wasn't able to crawl during the period noted above. 🧐

Remember the indexing issues that I discussed earlier?

The server connectivity graph made it clear: the share of failed crawl requests from Google was, at one point, over 52.6%.
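For anyone who wants to track this number themselves, the failure share is simply failed crawl requests divided by total crawl requests. Below is a rough Python sketch; the `failed_share` function, the 5% alert threshold, and the daily numbers are made up for illustration and are not taken from this site's report.

```python
def failed_share(total_requests: int, failed_requests: int) -> float:
    """Return failed crawl requests as a percentage of all crawl requests."""
    if total_requests == 0:
        # No requests at all: nothing to divide, report 0% here.
        return 0.0
    return 100.0 * failed_requests / total_requests


# Hypothetical (total, failed) crawl request counts per day.
daily = {
    "day 1": (400, 12),
    "day 2": (380, 190),
    "day 3": (410, 8),
}

THRESHOLD = 5.0  # hypothetical alert level, in percent

# Keep only the days whose failure share exceeds the threshold.
flagged = {
    day: round(failed_share(total, failed), 1)
    for day, (total, failed) in daily.items()
    if failed_share(total, failed) > THRESHOLD
}
```

Note that a day with zero requests returns 0% in this sketch, so it won't be flagged here even though a total crawl blackout is a problem in its own right.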

Why is this concerning?

If the crawler consistently encounters issues for a long period of time,

URLs can drop out of Google’s index.📈

The backbone of any online business is organic traffic,

but you won't get any if Google doesn't index your content.

Think about how much time & money having a sub-par hosting provider can really cost you. 😱

The efforts of writers, designers, developers & SEO managers all go down the drain when your pages aren't indexed. 🤯

Posted Jun 5, 2022

Web hosting & Crawl Requests Report Analysis