Transformer-Based Brainwave Image Reconstruction (2022)

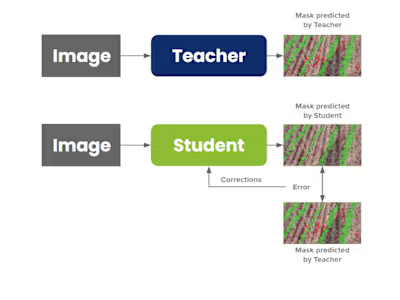

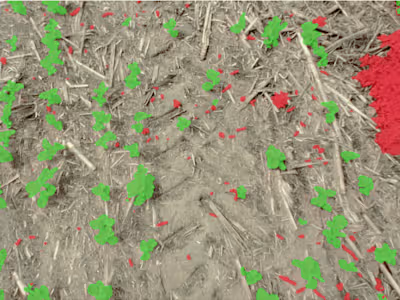

Reconstructing images from brainwave data is a hard problem, and progress on it could reveal deeper insights into brain function. Advances in transformers, diffusion models, and variational autoencoders have transformed art and image generation, as seen in tools like DALL-E, Parti, and Stable Diffusion. Because these text-to-image models treat text as a sequence of tokens, much like a time series, the same idea can be extended to other sequential data such as audio or brainwave recordings. In this project, I implemented a minimal version of the transformer-based Parti architecture to convert time series data into images (ts2img). I built autoencoders (AE), variational autoencoders (VAE), and vector-quantized VAEs (VQ-VAE) from scratch, explored multivariate time-series transformers, and tested the pix2pix architecture by first converting time series into spectrogram images.
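To make the spectrogram step concrete, here is a minimal sketch of how a single-channel time series could be turned into a grayscale spectrogram image suitable as input to an image-to-image model such as pix2pix. The project's actual preprocessing is not described here, so the function name `spectrogram_image`, the frame length, the hop size, the Hann window, and the log scaling are all assumptions chosen for illustration.

```python
import numpy as np

def spectrogram_image(signal, frame_len=64, hop=16):
    """Convert a 1-D time series into a uint8 log-magnitude spectrogram image.

    Hypothetical helper: frame_len and hop are illustrative defaults,
    not values taken from the project.
    """
    signal = np.asarray(signal, dtype=np.float64)
    n_frames = 1 + (len(signal) - frame_len) // hop
    window = np.hanning(frame_len)

    # Index matrix that slices overlapping frames: shape (n_frames, frame_len)
    idx = np.arange(frame_len)[None, :] + hop * np.arange(n_frames)[:, None]
    frames = signal[idx] * window

    # Magnitude of the real FFT: frame_len // 2 + 1 frequency bins per frame
    mag = np.abs(np.fft.rfft(frames, axis=1))
    log_mag = np.log1p(mag)  # compress the dynamic range

    # Rescale to 0..255 so the result can be saved or fed to a model as an image
    lo, hi = log_mag.min(), log_mag.max()
    scaled = (log_mag - lo) / (hi - lo + 1e-12)
    img = np.round(255 * scaled).astype(np.uint8)

    # Rows = frequency (lowest at the bottom), columns = time
    return img.T[::-1]

# Usage: a 10 Hz sine sampled at an assumed 128 Hz for 4 seconds
t = np.arange(512) / 128.0
img = spectrogram_image(np.sin(2 * np.pi * 10 * t))
print(img.shape)  # (33, 29): 33 frequency bins by 29 time frames
```

For multichannel recordings, one option under the same assumptions would be to compute one such image per channel and stack them along a channel axis.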

Posted Sep 16, 2024

Built a transformer-based architecture that converts time series into images using autoencoders, and tested pix2pix on spectrogram images generated from time series.
