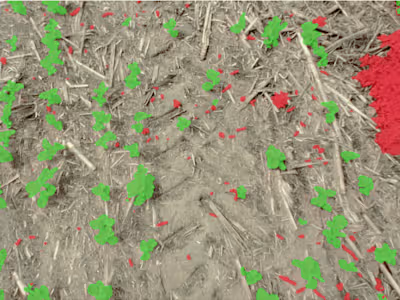

Knowledge Distillation for Crop/Weed Image Segmentation (2022)

My primary task during the internship was to conduct knowledge distillation experiments to enhance the performance of BRT’s production model. I first trained a highly accurate model to generate labels, which were then applied to an unlabeled dataset. After combining these predictions with the human-labeled data, I fine-tuned the less accurate production model. This approach resulted in a statistically significant performance improvement, confirming that knowledge distillation works for our dataset.

Like this project

Posted Sep 16, 2024

Conducted knowledge distillation, combining model-generated and human-labeled data to improve BRT's production model performance significantly.

Likes

0

Views

8

Clients

Blue River Technology