MLOps API: Sentiment Analysis with DistilBERT

Posted Apr 6, 2025

Exploring simple emotion classification of text with DistilBERT and enabling MLOps with Colab, MLflow, wandb, FastAPI, Docker, and GitHub Actions - mlops-sen…

Timeline

Apr 4, 2025 - Apr 6, 2025

Emotion Classification API

A production-ready machine learning service that classifies text into emotions using a fine-tuned DistilBERT model with full CI/CD pipeline integration.

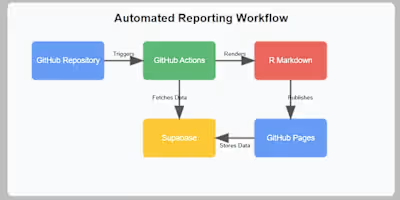

Architecture Overview

This MLOps solution consists of:

Fine-tuned DistilBERT model hosted on Hugging Face Hub

FastAPI service for low-latency model serving

Automated CI/CD pipeline with GitHub Actions

Containerized deployment on Render

Getting Started

Prerequisites

Python 3.11+

Docker (for local container testing)

Hugging Face account (for model hosting)

Local Development

Clone the repository:

Install dependencies:

Run the API locally:

Access the interactive API documentation at http://localhost:8000/docs

Running Tests

CI/CD Pipeline

The automated GitHub Actions workflow:

Test: Runs unit tests and code quality checks

Build: Packages the application into a Docker container

Deploy: Pushes to Docker Hub and triggers deployment on Render

Model Information

The emotion classification model is fine-tuned on [dataset description] and detects six emotions: anger, fear, joy, love, sadness, and surprise.

Training metrics and experiment tracking are available in the training notebook.
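As a rough illustration of how a six-way classifier output maps onto these emotions, the sketch below picks the highest-scoring label. The `EMOTIONS` list and the `top_emotion` helper are hypothetical names, and the label order is an assumption taken from the order listed above; the order actually used by the model is defined by the `id2label` mapping in its Hugging Face config.

```python
# Assumption: labels appear in the order listed in this README.
# The real index order comes from the model config (id2label).
EMOTIONS = ["anger", "fear", "joy", "love", "sadness", "surprise"]


def top_emotion(scores):
    """Return the (label, score) pair with the highest score."""
    if len(scores) != len(EMOTIONS):
        raise ValueError(f"expected {len(EMOTIONS)} scores, got {len(scores)}")
    # Index of the largest score; ties resolve to the first occurrence.
    best_index = max(range(len(scores)), key=lambda i: scores[i])
    return EMOTIONS[best_index], scores[best_index]
```

For example, `top_emotion([0.05, 0.05, 0.7, 0.1, 0.05, 0.05])` returns `("joy", 0.7)` under the assumed label order.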

The CI/CD pipeline automatically deploys the application to Render:

Making Predictions

cURL Example

Making Prediction Calls

With the project deployed, you can make API calls to the deployed Render service, e.g.:

Python Example
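A minimal client sketch using only the Python standard library is shown here. The base URL, the `/predict` route, and the `{"text": ...}` payload shape are assumptions for illustration, not confirmed details of this service; check the interactive docs at `/docs` for the actual route and request schema.

```python
import json
import urllib.request

# Hypothetical values: replace with your own Render service URL and route.
BASE_URL = "https://your-service.onrender.com"
PREDICT_PATH = "/predict"


def build_prediction_request(text, base_url=BASE_URL):
    """Build a JSON POST request for the (assumed) prediction endpoint."""
    payload = json.dumps({"text": text}).encode("utf-8")
    return urllib.request.Request(
        url=base_url.rstrip("/") + PREDICT_PATH,
        data=payload,
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def predict(text, base_url=BASE_URL, timeout=30):
    """Send the request and return the decoded JSON response."""
    request = build_prediction_request(text, base_url)
    with urllib.request.urlopen(request, timeout=timeout) as response:
        return json.loads(response.read().decode("utf-8"))
```

Calling `predict("I am so happy today!")` would then return whatever JSON the service responds with. Building the request in a separate function keeps it easy to inspect or test without touching the network.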

Sample Response

Other Notes

Softmax Calculation
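A small sketch of the softmax calculation in plain Python follows. It assumes the list [0.1, 0.2, 0.7] in this note is a vector of raw model scores (logits); the `softmax` helper is illustrative and is not taken from the project code.

```python
import math


def softmax(values):
    """Exponentiate each value and normalize so the results sum to 1."""
    # Subtracting the maximum leaves the result unchanged but avoids overflow.
    shift = max(values)
    exps = [math.exp(v - shift) for v in values]
    total = sum(exps)
    return [e / total for e in exps]


probs = softmax([0.1, 0.2, 0.7])
print([round(p, 3) for p in probs])  # [0.255, 0.281, 0.464]

# Closer inputs give a flatter distribution.
closer = softmax([0.1, 0.2, 0.3])
print([round(p, 3) for p in closer])  # [0.301, 0.332, 0.367]
```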

[0.1, 0.2, 0.7]

The softmax calculation takes the exponential of each value and then normalizes the results so that they sum to 1. When the differences between values are small, the probabilities are more evenly distributed.

Technical Details

Model Architecture: DistilBERT (a faster, lighter version of BERT)

Inference Optimization: Batched inference, model quantization

Monitoring: Basic metrics via Render dashboard

Security: API secret key authentication (optional)
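One way the optional API secret key check could look is sketched below, using only the standard library. The header name `X-API-Key`, the environment variable `API_SECRET_KEY`, and the `is_authorized` helper are all hypothetical; the project may implement authentication differently.

```python
import hmac
import os

# Hypothetical names: the header and environment variable are illustrative.
API_KEY_HEADER = "X-API-Key"
API_KEY_ENV_VAR = "API_SECRET_KEY"


def is_authorized(headers, expected_key=None):
    """Check a request's API key; allow all requests if no key is configured."""
    if expected_key is None:
        expected_key = os.environ.get(API_KEY_ENV_VAR)
    if not expected_key:
        return True  # authentication is optional: no key configured
    provided = headers.get(API_KEY_HEADER, "")
    # compare_digest compares in constant time to avoid timing leaks.
    return hmac.compare_digest(
        provided.encode("utf-8"), expected_key.encode("utf-8")
    )
```

Treating a missing key as "authentication disabled" mirrors the "optional" wording above; a stricter deployment might reject requests instead when no key is configured.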

Flowchart

Training to Deployment

CI/CD Workflow

Model Serving

Monitoring (wandb/MLflow)
