AI System Optimization with TensorRT Engines

Real-world problem solution for Autonomous Driving

Brief

Introduced TensorRT framework into the company's workflow, optimizing all the company's neural network graphs and achieving a 20%-40% increase in frame rate.

Developed a C++ API for loading and initializing the engines and orchestrating the input, inference, and output during runtime.

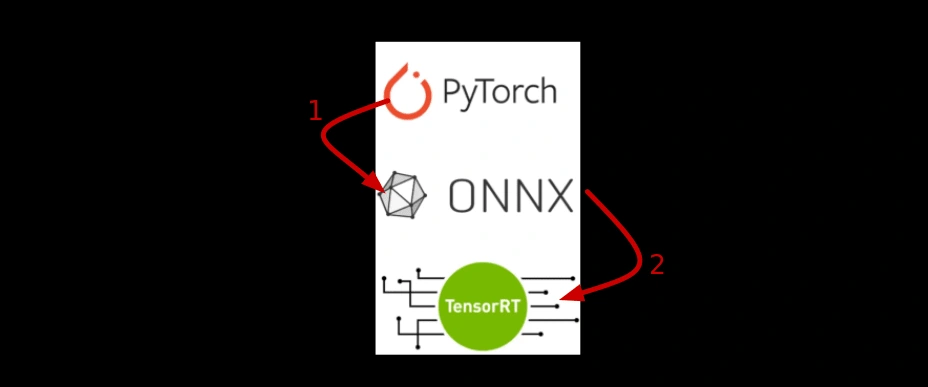

Automated the model deployment pipeline, converting models from PyTorch to ONNX and generating TensorRT engines for all the networks.

Significantly reduced neural network inference time, enhancing overall system performance

Quantization of models was done with both FP16 and INT8 precisions while maintaining accuracy.

For INT8 quantization, I used both, PTQ and QAT techniques (calibration data and quantize-aware training).

Work Flow

1) The trained PyTorch models were converted to ONNX graphs.

2) ONNX graphs then are processed with the Polygraphy to to have a dynamic batch dimension.

3) Finally, converting ONNX graphs to TensorRT engines.

When starting the AI system, the engines are loaded via the C++ API and are ready to perform inference on new input.

Like this project

Posted Sep 9, 2024

Optimizing AI systems by implementing TensorRT-based neural network graph optimization, resulting in significant performance improvements.

Likes

0

Views

17