Automated ELT Pipeline Development

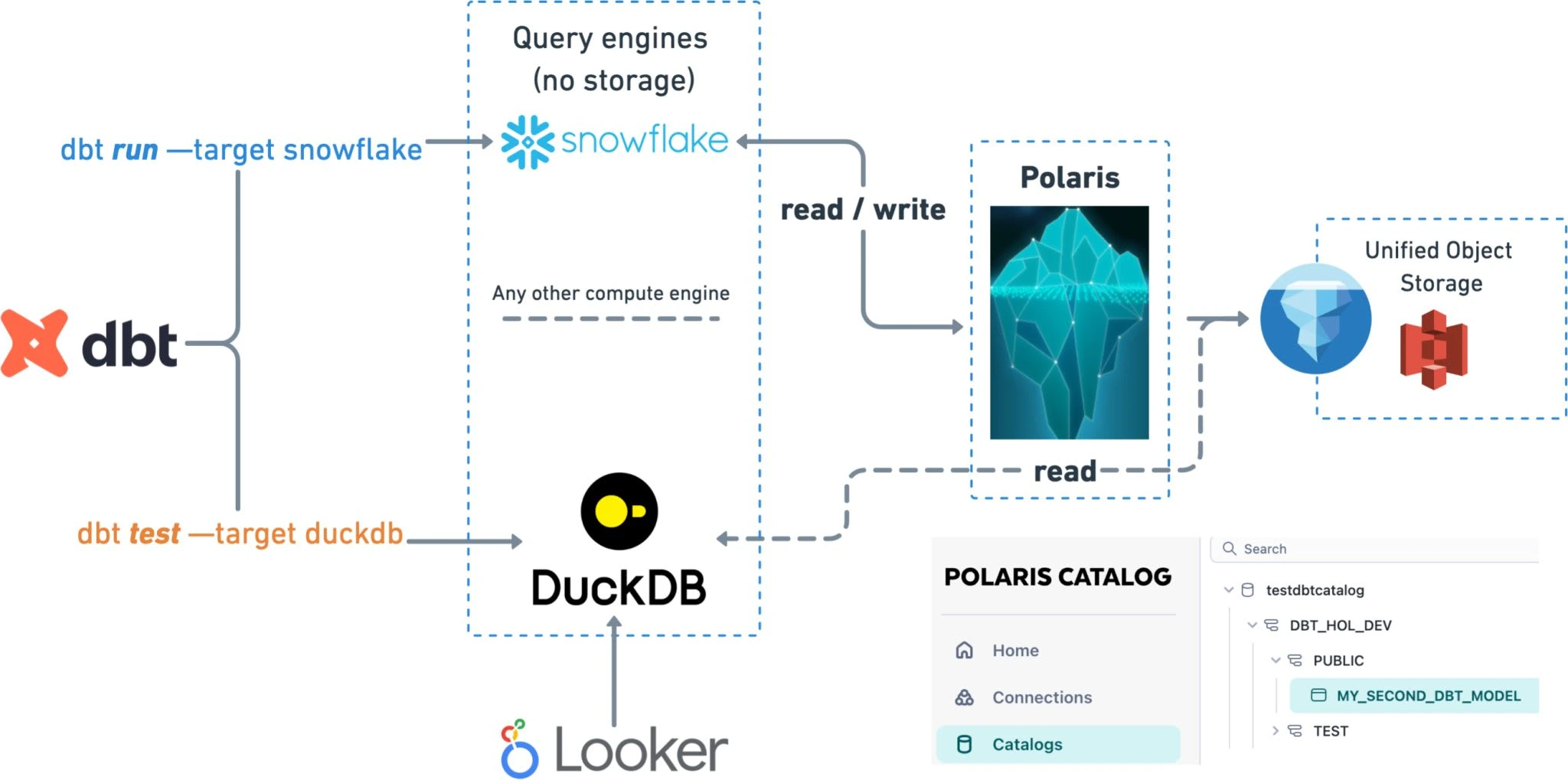

I built a fully automated ELT pipeline on Snowflake, DBT, Airflow, and Apache Iceberg. It ingests raw data from multiple sources into Amazon S3, loads it into Snowflake, and transforms it there for analytics, with Airflow scheduling and monitoring every step. The design targets large datasets and is meant to scale as volume grows.

Technologies Used:

Data Warehouse: Snowflake

Data Transformation: DBT

Orchestration: Airflow

Data Storage: Amazon S3 (object storage), Apache Iceberg (table format)

Process:

Data Ingestion: Data is collected from various sources and stored in Amazon S3.

Data Loading: Airflow automates the movement of data from Amazon S3 into Snowflake.

Data Transformation: DBT transforms the loaded data inside Snowflake for analytics.

Orchestration: Airflow schedules and monitors the pipeline.
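
The ordering of the steps above can be sketched in plain Python. This is only an illustration under stated assumptions, not the project's actual Airflow DAG: the step names, the stage `@raw_stage`, the table `raw.events`, and the Parquet file format are all hypothetical, and the standard-library `graphlib` stands in for Airflow's dependency resolution.

```python
from graphlib import TopologicalSorter

# Hypothetical step names; each step maps to the set of steps it depends on.
PIPELINE = {
    "ingest_to_s3": set(),
    "load_into_snowflake": {"ingest_to_s3"},
    "run_dbt_models": {"load_into_snowflake"},
}


def execution_order(graph: dict[str, set[str]]) -> list[str]:
    """Return the steps in an order that respects every dependency."""
    return list(TopologicalSorter(graph).static_order())


def build_copy_statement(stage: str, table: str, file_format: str = "PARQUET") -> str:
    """Build a Snowflake COPY INTO statement that loads staged files into a table.

    The stage and table names are supplied by the caller; the values used
    below are assumptions made for this example.
    """
    return (
        f"COPY INTO {table} "
        f"FROM {stage} "
        f"FILE_FORMAT = (TYPE = {file_format}) "
        f"MATCH_BY_COLUMN_NAME = CASE_INSENSITIVE"
    )


if __name__ == "__main__":
    for step in execution_order(PIPELINE):
        print(step)
    print(build_copy_statement("@raw_stage", "raw.events"))
```

In a real deployment each key in `PIPELINE` would correspond to an Airflow task, and the load step would execute a statement like the one `build_copy_statement` returns against Snowflake.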

Key Features:

Scalable Data Processing using Snowflake

Modular Transformations with DBT

Reliable Orchestration with Airflow


Posted Apr 17, 2025

Built an automated ELT pipeline using Snowflake, DBT, Airflow, and Iceberg for scalable data processing and lakehouse integration
