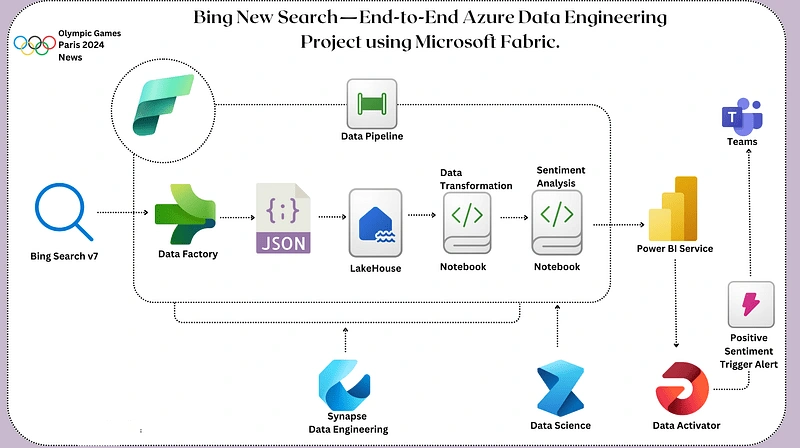

End-to-End ELT Pipeline with Snowflake, Amazon S3, Apache Airflow, and DBT.

I designed and implemented an end-to-end ELT pipeline using Snowflake, Amazon S3, Apache Airflow, and DBT to automate data ingestion, processing, and analytics for a data-driven organization.

Technologies Used:

Data Warehouse: Snowflake

Data Storage: Amazon S3

Workflow Orchestration: Apache Airflow

Data Transformation: DBT (Data Build Tool)

Visualization: Tableau, Looker

Languages: Python (Airflow DAGs), SQL (DBT models and Snowflake)

Architecture and Workflow:

Data Ingestion (Extract):

Extracted data from multiple sources, including REST APIs, third-party systems, and flat files.

Stored data in Amazon S3 buckets, organized by date, source, and format.
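
A minimal sketch of how ingested files could be keyed in S3 so that each object is organized by source, date, and format. The `raw/` prefix, the Hive-style `dt=` partition, and the helper name `build_s3_key` are illustrative assumptions, not the project's actual layout. The upload itself (for example with boto3's `put_object`) is left out so the sketch runs without AWS credentials.

```python
from datetime import date


def build_s3_key(source: str, run_date: date, fmt: str, filename: str) -> str:
    """Return a hypothetical S3 object key grouping files by source, date, and format."""
    # Normalize the source name and file format so keys stay consistent across extract jobs.
    source = source.strip().lower().replace(" ", "_")
    fmt = fmt.strip().lower().lstrip(".")
    # Hive-style date partition (dt=YYYY-MM-DD) gives each daily run its own prefix.
    return f"raw/{source}/dt={run_date.isoformat()}/{fmt}/{filename}.{fmt}"


if __name__ == "__main__":
    print(build_s3_key("Orders API", date(2025, 1, 15), "json", "orders_0001"))
    # prints raw/orders_api/dt=2025-01-15/json/orders_0001.json
```

Putting the date partition in the prefix lets a downstream load task target exactly one run's files by listing a single prefix.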

Data Loading (Load):

Utilized Airflow DAGs to automate loading raw data from S3 into Snowflake staging tables.
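
Snowflake's `COPY INTO <table>` command bulk-loads files from a stage. Below is a hedged sketch of how an Airflow task might assemble that statement for one S3 prefix. The stage name `raw_s3_stage`, the table `staging.orders_raw`, and the helper `build_copy_into` are hypothetical, and the external stage is assumed to already point at the bucket. In the DAG, the resulting SQL could be run with, for example, the common SQL provider's `SQLExecuteQueryOperator` against a Snowflake connection.

```python
# Hypothetical file-format options per source format; adjust to the real files.
# JSON is assumed to load into a staging table with a single VARIANT column.
FILE_FORMATS = {
    "csv": "TYPE = 'CSV' SKIP_HEADER = 1",
    "json": "TYPE = 'JSON'",
}


def build_copy_into(table: str, stage: str, prefix: str, fmt: str) -> str:
    """Build a Snowflake COPY INTO statement loading one stage prefix into a staging table."""
    if fmt not in FILE_FORMATS:
        raise ValueError(f"unsupported format: {fmt}")
    return (
        f"COPY INTO {table}\n"
        f"FROM @{stage}/{prefix.strip('/')}/\n"
        f"FILE_FORMAT = ({FILE_FORMATS[fmt]})\n"
        # Fail the whole statement (and therefore the Airflow task) on bad rows.
        "ON_ERROR = 'ABORT_STATEMENT';"
    )


if __name__ == "__main__":
    print(build_copy_into("staging.orders_raw", "raw_s3_stage",
                          "raw/orders_api/dt=2025-01-15/json", "json"))
```

Aborting on error keeps a partially loaded batch from silently reaching the DBT models; a more lenient `ON_ERROR` setting is a trade-off to weigh per source.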

Data Transformation (Transform):

Employed DBT to define and execute SQL-based transformations in Snowflake.

Created modular and reusable DBT models for:

Cleaning and standardizing data.

Joining data from multiple sources.
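
DBT models are SELECT statements that DBT materializes as views or tables and chains together with `ref()`. The sketch below imitates two such models using Python's built-in `sqlite3` so it runs anywhere: a staging view that cleans and standardizes raw customer rows, and a downstream view that joins it with orders. SQLite stands in for Snowflake here, and all table and column names are hypothetical. In a real DBT project each SELECT would live in its own `.sql` file and reference its upstream model as `{{ ref('stg_customers') }}`.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
cur = conn.cursor()

# Hypothetical raw tables, standing in for Snowflake staging tables loaded from S3.
cur.executescript("""
CREATE TABLE raw_customers (id INTEGER, email TEXT, country TEXT);
CREATE TABLE raw_orders (order_id INTEGER, customer_id INTEGER, amount REAL);
INSERT INTO raw_customers VALUES
    (1, '  Alice@Example.COM ', 'us'),
    (2, 'bob@example.com', ' GB '),
    (2, 'bob@example.com', ' GB ');
INSERT INTO raw_orders VALUES (10, 1, 25.0), (11, 1, 5.5), (12, 2, 40.0);
""")

# Cleaning model: trim whitespace, standardize casing, drop exact duplicates.
cur.execute("""
CREATE VIEW stg_customers AS
SELECT DISTINCT id AS customer_id,
       LOWER(TRIM(email)) AS email,
       UPPER(TRIM(country)) AS country_code
FROM raw_customers
""")

# Join model: reuses the cleaned view and aggregates orders per customer.
cur.execute("""
CREATE VIEW customer_revenue AS
SELECT c.customer_id, c.email, c.country_code,
       COUNT(o.order_id) AS order_count,
       SUM(o.amount) AS total_amount
FROM stg_customers AS c
JOIN raw_orders AS o ON o.customer_id = c.customer_id
GROUP BY c.customer_id, c.email, c.country_code
""")

rows = cur.execute("SELECT * FROM customer_revenue ORDER BY customer_id").fetchall()
print(rows)
# prints [(1, 'alice@example.com', 'US', 2, 30.5), (2, 'bob@example.com', 'GB', 1, 40.0)]
```

Deduplicating in the staging layer matters for the join: without `DISTINCT`, the duplicated customer row would double that customer's order count and revenue.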

Orchestration and Scheduling:

Designed Airflow workflows to manage the pipeline end-to-end, including:

Scheduling data extraction jobs.

Loading data into Snowflake.

Triggering DBT transformations.
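
In Airflow, task order is declared as dependencies, roughly `[extract_rest_api, extract_third_party, extract_flat_files] >> load_to_snowflake >> run_dbt_models`. The stdlib sketch below uses `graphlib.TopologicalSorter` to show how such a dependency graph resolves into execution waves. It illustrates the ordering only, not Airflow's scheduler, and the task names are hypothetical.

```python
from graphlib import TopologicalSorter

# Hypothetical task graph: each key maps a task to the tasks it depends on.
PIPELINE = {
    "extract_rest_api": set(),
    "extract_third_party": set(),
    "extract_flat_files": set(),
    "load_to_snowflake": {"extract_rest_api", "extract_third_party", "extract_flat_files"},
    "run_dbt_models": {"load_to_snowflake"},
}


def execution_waves(graph: dict[str, set[str]]) -> list[list[str]]:
    """Group tasks into waves; tasks in one wave have all dependencies met and could run in parallel."""
    sorter = TopologicalSorter(graph)
    sorter.prepare()  # raises graphlib.CycleError if the graph is not a DAG
    waves = []
    while sorter.is_active():
        ready = sorted(sorter.get_ready())
        waves.append(ready)
        sorter.done(*ready)  # mark the wave finished so dependents become ready
    return waves


if __name__ == "__main__":
    for number, wave in enumerate(execution_waves(PIPELINE), start=1):
        print(number, wave)
```

The three extract tasks land in the same first wave, so they can run concurrently, while the load and DBT steps each wait for everything upstream.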

Analytics and Insights:

Enabled near-real-time reporting and analytics by transforming raw data into actionable insights.

The transformed data was consumed by BI tools such as Tableau and Looker for visualization.


Posted Apr 17, 2025

Architected and implemented a robust ELT pipeline leveraging Snowflake, Amazon S3, Airflow, and DBT to enable scalable, automated data processing.
