Event-Driven ETL Data Pipeline for Loading Shipments to Redshift


Posted Dec 2, 2024

Built an ETL data pipeline using AWS Lambda, S3, SQS, EventBridge, and Redshift. Provisioned with AWS CloudFormation and deployed through an Azure DevOps CI/CD pipeline.

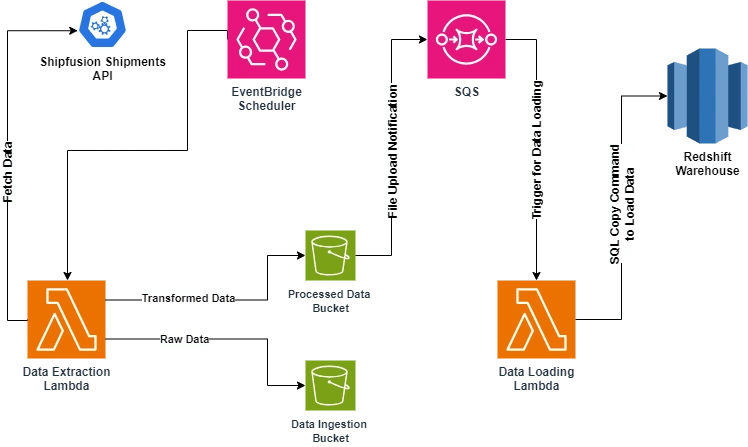

Event-Driven Data Pipeline for Shipments Data Extraction, Processing, and Loading into Redshift

I built an event-driven, serverless data pipeline on AWS that automates the extraction, transformation, and loading (ETL) of shipments data from an API into Amazon Redshift. I deployed the solution with an Azure DevOps CI/CD pipeline and used AWS CloudFormation for infrastructure provisioning.

About Client:

I served as Cloud Solution Architect and Lead Data Engineer for Wellbeam Health Org, a Healthcare and Wellness brand based in San Francisco. In this full-time role, I delivered the project end to end and resolved the technical challenges that came up during development.

Technologies Used:

AWS Services: EventBridge, Lambda, S3, SQS, Redshift, CloudFormation, IAM.

Azure DevOps: Pipelines for CI/CD, versioning, and deployments.

Programming: Python (with libraries for API interaction, data transformation, and Redshift integration).

Database: Amazon Redshift for analytics and reporting.

Infrastructure as Code (IaC): AWS CloudFormation templates.

Local Testing and Debugging: AWS CLI, SAM CLI, Terraform, LocalStack, Make.

Architecture and Workflow:

EventBridge Scheduler:

Configured Amazon EventBridge to trigger the workflow daily.

Ensures the timely extraction of shipments data from the API.

First Lambda Function (Data Extraction):

Extracts data from the Shipments Data API.

Saves:

Raw version of the data into the Data Ingestion S3 Bucket.

Transformed version of the data into the Processed Data S3 Bucket.
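As an illustration only (the project's actual code is not shown here), the two saves could be organized as below. The date-partitioned key layout, the file names, and the choice of newline-delimited JSON for the processed copy are all assumptions made for this sketch.

```python
import json
from datetime import date


def build_object_keys(run_date: date, prefix: str = "shipments") -> dict:
    """Return date-partitioned S3 keys for the raw and processed copies.

    The "dt=YYYY-MM-DD" layout and file names are hypothetical.
    """
    partition = f"{prefix}/dt={run_date.isoformat()}"
    return {
        "raw": f"{partition}/shipments_raw.json",
        "processed": f"{partition}/shipments.jsonl",
    }


def to_json_lines(records: list[dict]) -> str:
    """Serialize records as newline-delimited JSON, one object per line."""
    return "".join(json.dumps(record, sort_keys=True) + "\n" for record in records)
```

Each string would then be written to its bucket under the matching key, so the raw copy stays available for replay if the transformation changes.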

S3 to SQS Notification:

Configured the Processed Data S3 Bucket to send notifications to an SQS Queue whenever new files are added.

The queue decouples extraction from loading: new files wait in the queue until the loading function is ready to process them.
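When S3 notifications arrive through SQS, each SQS message body carries the S3 event as a JSON string. A minimal sketch of unpacking it in the loading function follows; the function name `extract_s3_objects` is hypothetical. Object keys in S3 notifications are URL-encoded, and S3 may send a test message without a `Records` list when the notification is first configured, so both cases are handled.

```python
import json
from urllib.parse import unquote_plus


def extract_s3_objects(sqs_event: dict) -> list[tuple[str, str]]:
    """Return (bucket, key) pairs for every S3 object referenced in an SQS batch."""
    objects = []
    for message in sqs_event.get("Records", []):
        body = json.loads(message["body"])
        # S3 test events have no "Records" key, so default to an empty list.
        for record in body.get("Records", []):
            bucket = record["s3"]["bucket"]["name"]
            # Keys arrive URL-encoded (for example, spaces become "+").
            key = unquote_plus(record["s3"]["object"]["key"])
            objects.append((bucket, key))
    return objects
```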

Second Lambda Function (Data Loading):

Triggered by the SQS Queue.

Loads the transformed data from the Processed Data S3 Bucket into Amazon Redshift using the COPY command.
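The COPY statement itself might be assembled as in the sketch below. The table name, role ARN, and the `FORMAT AS JSON 'auto'` option are assumptions for illustration; the source does not state the file format or how the statement is submitted to Redshift.

```python
import re

# Accept only plain "table" or "schema.table" identifiers to avoid SQL injection.
_IDENTIFIER = re.compile(r"^[A-Za-z_][A-Za-z0-9_]*(\.[A-Za-z_][A-Za-z0-9_]*)?$")


def build_copy_sql(table: str, s3_uri: str, iam_role_arn: str) -> str:
    """Build a Redshift COPY statement that loads JSON data from S3."""
    if not _IDENTIFIER.match(table):
        raise ValueError(f"unexpected table name: {table!r}")
    if "'" in s3_uri or "'" in iam_role_arn:
        raise ValueError("quotes are not allowed in the S3 URI or role ARN")
    return (
        f"COPY {table} "
        f"FROM '{s3_uri}' "
        f"IAM_ROLE '{iam_role_arn}' "
        "FORMAT AS JSON 'auto';"
    )
```

For example, `build_copy_sql("analytics.shipments", "s3://processed-bucket/shipments/file.jsonl", role_arn)` yields one statement per file, which the loading function would then run against the cluster.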

AWS CloudFormation:

Automated provisioning of all AWS resources (EventBridge, Lambda functions, S3 buckets, SQS queues, Redshift cluster, IAM roles, etc.).

Azure DevOps CI/CD Pipeline:

Used to package, build, and deploy Lambda functions and CloudFormation templates.

Integrated with Git for version control, automated tests, and artifact storage.

Key Challenges and Solutions:

Handling API Rate Limits:

Implemented retry logic with exponential backoff in the Lambda function.
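A minimal sketch of retry with exponential backoff is shown below. The `RateLimitError` exception, the default limits, and the use of full jitter are assumptions for illustration, not details from the project.

```python
import random
import time


class RateLimitError(Exception):
    """Hypothetical error raised when the API answers with HTTP 429."""


def call_with_backoff(func, max_attempts=5, base_delay=1.0, max_delay=30.0,
                      sleep=time.sleep):
    """Call func(), retrying on RateLimitError with exponential backoff."""
    for attempt in range(max_attempts):
        try:
            return func()
        except RateLimitError:
            if attempt == max_attempts - 1:
                raise  # out of attempts: surface the error to the caller
            # The delay ceiling doubles each attempt: 1s, 2s, 4s, ... up to max_delay.
            ceiling = min(max_delay, base_delay * 2 ** attempt)
            # Full jitter: sleep a random time up to the ceiling.
            sleep(random.uniform(0, ceiling))
```

The total wait has to fit inside the Lambda function's configured timeout, so `max_attempts` and `max_delay` would be chosen with that limit in mind.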

Scalable Data Processing:

Used SQS to buffer load tasks so the loading Lambda function can work through high data volumes without dropping files.
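One common way to keep a single bad message from forcing a whole batch to be retried is Lambda's partial batch response for SQS. The sketch below assumes `ReportBatchItemFailures` is enabled on the event source mapping, which the source does not confirm; `load_one` is a hypothetical callable that loads one message.

```python
def process_batch(sqs_event: dict, load_one) -> dict:
    """Process each SQS message and report only the failed ones back to Lambda."""
    failures = []
    for message in sqs_event.get("Records", []):
        try:
            load_one(message["body"])
        except Exception:
            # Only this message returns to the queue; the rest are deleted.
            failures.append({"itemIdentifier": message["messageId"]})
    return {"batchItemFailures": failures}
```

Messages that keep failing would typically end up in a dead-letter queue, if one is configured for the source queue.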

Optimizing Redshift COPY Command:

Improved load performance by using manifest files and compressing the data files.
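A Redshift manifest is a JSON document listing the exact files a COPY should load. A minimal sketch of generating one is below; the bucket and key names in the usage note are hypothetical.

```python
import json


def build_manifest(bucket: str, keys: list[str]) -> str:
    """Return a Redshift COPY manifest listing the given S3 objects."""
    entries = [
        # "mandatory": true makes COPY fail if a listed file is missing.
        {"url": f"s3://{bucket}/{key}", "mandatory": True}
        for key in keys
    ]
    return json.dumps({"entries": entries}, indent=2)
```

Calling `build_manifest("processed-bucket", ["shipments/part-0.gz", "shipments/part-1.gz"])` produces the manifest text to upload to S3. The COPY statement would then point at the manifest object and include the `MANIFEST` keyword, plus `GZIP` if the files are gzip-compressed (the source does not name the compression codec).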

Deployment and Testing:

Used Azure DevOps to run unit tests, package the code, and deploy the artifacts.