Efficiency in Data Processing

Understanding and Planning

I started the project by studying the business rules and data sources I would work with, and by planning each step and its expected duration.

In this step I used Miro to map out the data workflow.

Another important factor in the project's success was constant communication with the requester, with whom I regularly looked for opportunities for improvement.

Data Pipeline

Extract

With the plan in place, I started developing the data pipeline.

The data sources were mainly databases and APIs, so I used AWS services to extract the required data: Lambda and API Gateway for the APIs, and S3 for the datasets.
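To make the extract step more concrete, here is a minimal sketch of what the core of such a Lambda function might look like. It is an illustration under assumptions, not the project's actual code: the key layout, the function names `build_raw_key` and `extract_to_s3`, and the bucket and source names are all hypothetical. The API call and the S3 write are passed in as callables so the logic can be run without AWS credentials.

```python
import json
from datetime import date
from typing import Callable


def build_raw_key(source: str, run_date: date) -> str:
    """Build a date-partitioned S3 key for one raw extract (hypothetical layout)."""
    return f"raw/{source}/dt={run_date.isoformat()}/data.json"


def extract_to_s3(
    fetch: Callable[[], list],
    put_object: Callable[..., object],
    bucket: str,
    source: str,
    run_date: date,
) -> str:
    """Fetch records from a source and store them as one JSON object in S3.

    `fetch` stands in for the API call; `put_object` stands in for the
    S3 client's put_object method (it receives Bucket, Key and Body).
    """
    records = fetch()
    key = build_raw_key(source, run_date)
    body = json.dumps(records).encode("utf-8")
    put_object(Bucket=bucket, Key=key, Body=body)
    return key
```

Inside a real Lambda handler, `put_object` could be `boto3.client("s3").put_object`, which accepts the same `Bucket`, `Key` and `Body` keyword arguments. Keeping the run date in the key means each run writes to its own path instead of overwriting the previous extract.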

Transform

For the data transformation I used Python and its packages, mostly Pandas.

In this step I had to apply many business rules to each category, so I decided to write one script per category. Each script would become a task in the Airflow DAG.
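The one-script-per-category idea can be sketched with pandas as follows. The categories (`electronics`, `books`), the column names and the rules themselves are made up for illustration; the real business rules are not described here. The point is only the structure: one function per category, looked up by name.

```python
import pandas as pd


def transform_electronics(df: pd.DataFrame) -> pd.DataFrame:
    """Hypothetical rule: drop rows without a price and add a 10% tax column."""
    out = df.dropna(subset=["price"]).copy()
    out["price_with_tax"] = (out["price"] * 1.10).round(2)
    return out


def transform_books(df: pd.DataFrame) -> pd.DataFrame:
    """Hypothetical rule: normalise titles, then remove duplicate titles."""
    out = df.copy()
    out["title"] = out["title"].str.strip().str.title()
    return out.drop_duplicates(subset=["title"]).reset_index(drop=True)


# One entry per category; each function could live in its own script.
TRANSFORMS = {
    "electronics": transform_electronics,
    "books": transform_books,
}


def run_category(category: str, df: pd.DataFrame) -> pd.DataFrame:
    """Apply the rules registered for a single category."""
    return TRANSFORMS[category](df)
```

For example, `run_category("books", raw_books)` applies only the book rules. Keeping the rules isolated like this means a change to one category cannot silently affect another, and each function can be tested and scheduled on its own.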

Load

Once the code was ready, validated and well documented, I used Airflow and AWS (Glue and S3) to keep the dataset up to date.
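As a rough sketch of the orchestration, the DAG below creates one task per category. It assumes Airflow 2.4 or later (for the `schedule` argument); the DAG id, the daily schedule, the category names and the placeholder `transform_category` function are all hypothetical. The Glue and S3 load step is left out because its details are not described here.

```python
from datetime import datetime

CATEGORIES = ["electronics", "books"]  # hypothetical category names


def task_id_for(category: str) -> str:
    """Derive a stable Airflow task id from a category name."""
    return f"transform_{category}"


def transform_category(category: str) -> None:
    """Placeholder for the per-category transformation script."""
    print(f"transforming {category}")


def build_dag():
    """Build a DAG with one transformation task per category."""
    # Imported here so the helpers above can be reused without Airflow installed.
    from airflow import DAG
    from airflow.operators.python import PythonOperator

    with DAG(
        dag_id="dataset_refresh",  # hypothetical name
        start_date=datetime(2024, 1, 1),
        schedule="@daily",  # assumed refresh frequency
        catchup=False,
    ) as dag:
        for category in CATEGORIES:
            PythonOperator(
                task_id=task_id_for(category),
                python_callable=transform_category,
                op_kwargs={"category": category},
            )
    return dag
```

In a DAG file, a module-level `dag = build_dag()` lets the scheduler discover it. Because the tasks have no dependencies between them, Airflow is free to run the categories in parallel, and a failure in one category can be retried without rerunning the others.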


Posted May 29, 2024

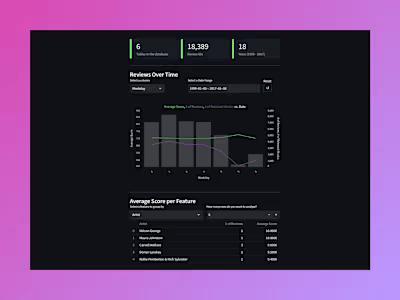

I developed and optimized data workflows using Python, Airflow and AWS. In this kind of project, my main concerns are data accuracy and workflow efficiency.
