Predicting Lung Cancer Survival

Importing the Packages

Importing matplolib for visualization and pandas for reading the csv and cleaning.

Understanding and Visualizing the training data.

Patient Id: Numbered Patient.

Age: The age of the Patient.

Histology: type of cancer cell presented within the patient.

Tstage: determines how much and where the cancer is in the body. The numbers(1-4) describes the tumor size and/or the amount of spread into nearby structures. 0 means there is no evidance of primary tumor.

Nstage: tells weather the cancer has spread to the nearby lymph nodes. The numbers(1-3) describe the size, location, and/or the number of nearby lymph nodes affected by cancer. 0 means nearby lymph nodes do not contain cancer.

Mstage: tells weather the cancer has spread to distant parts of the body. 0 means no distant cancer has been found. 1 means cancer has been found to have spread to distant organs or tissue.

SourceDataset: location where data that is being used originates from

To start the data analysis we read in the clinical data csv and display part of the data.

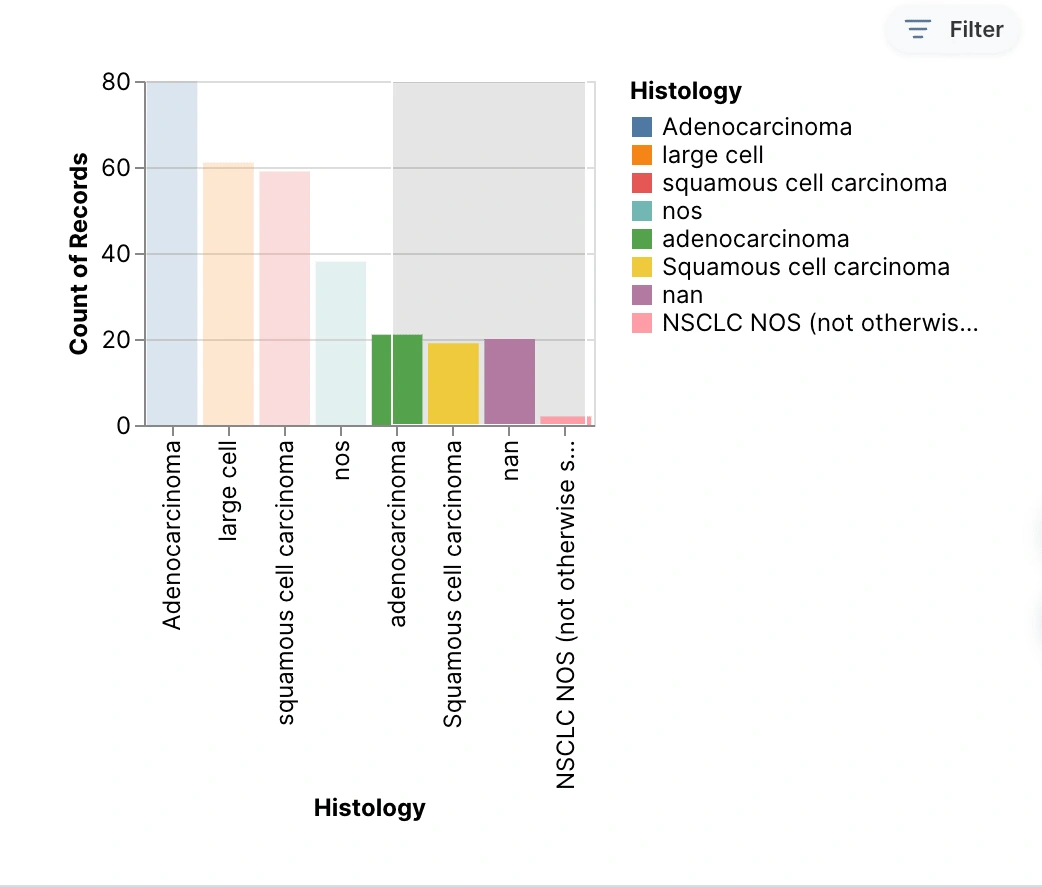

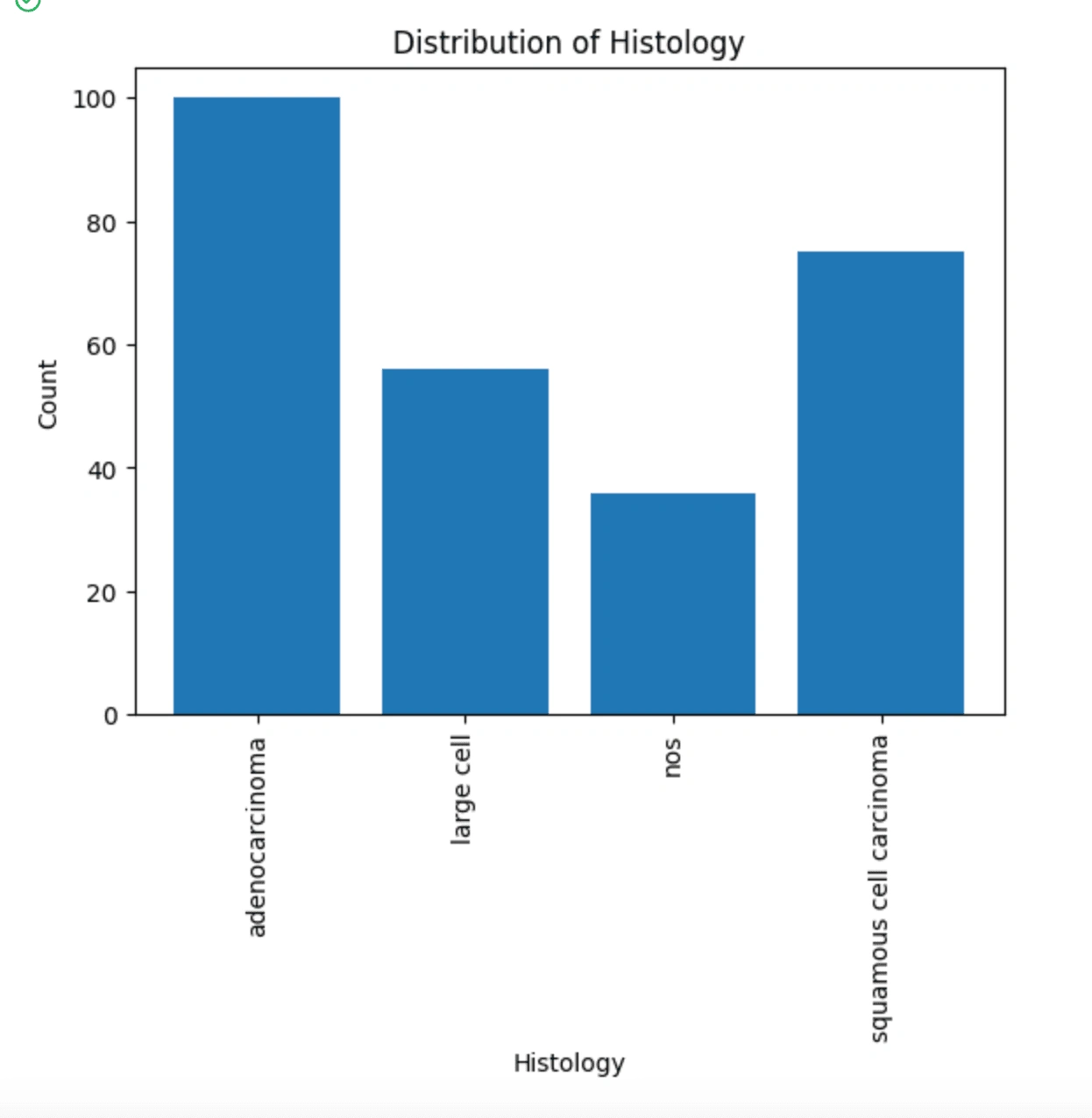

The bar graph shows all the different cancer types included in this study against the number of cases that each type had. This graph shows that Adenocarcinoma was the type of cancer with the most recorded cases. This graph also includes the nan and the two types of NOS data that were collected.

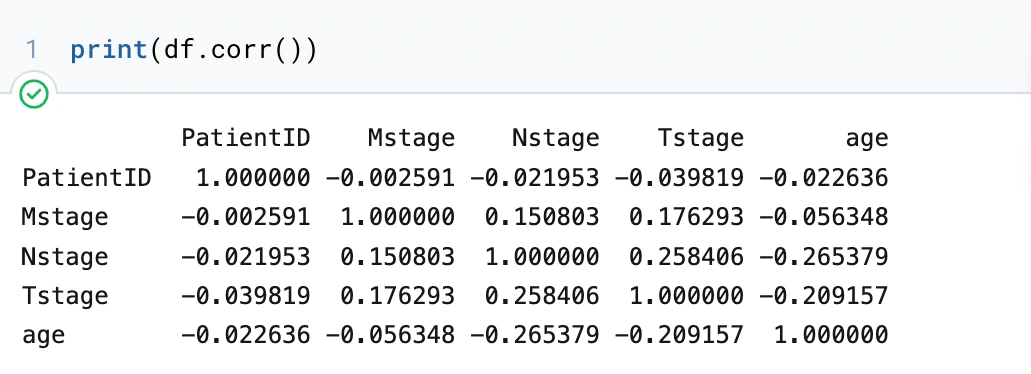

First thing with this data frame, we want to see any upfront correlations between the variables, so we will make a matrix.

Variables in the radiomics Data

Radiomics is a method for analyzing high-throughput imaging data and extracting a large number of sophisticated quantitative features. By using radiomics data we can obtain extremely valuable information about cancer that may have been overlooked or that cannot be identified with the naked eye.

Some information about the 3 main categories that are under radiomics data.

shape

first order

textural

Some acronyms that are used multiples times in the dataset:

Below is the description of the variables in radiomics data

shape: original_shape_Compactness1 measure of how compact the shape of the tumor is relative to a sphere (most compact)

shape: original_shape_Compactness2 measure of how compact the shape of the tumor is relative to a sphere (most compact)

shape: original_shape_Maximum3DDiameter largest pairwise Euclidean distance between tumor surface mesh vertices.

shape: original_shape_SphericalDisproportion ratio of the surface area of the tumor region to the surface area of a sphere with the same volume as the tumor region, and by definition, the inverse of Sphericity

shape: original_shape_Sphericity measure of the roundness of the shape of the tumor region relative to a sphere

shape: original_shape_SurfaceAera an approximation of the surface of the ROI in mm2, calculated using a marching cubes algorithm.

shape: original_shape_SurfaceVolumeRatio a lower value indicates a more compact (sphere-like) shape. This feature is not dimensionless, and is therefore (partly) dependent on the volume of the ROI.

shape: original_shape_VoxelVolume multiplying the number of voxels in the ROI by the volume of a single voxel

firstorder: original_firstorder_Energy measure of the magnitude of voxel values in an image. A larger values implies a greater sum of the squares of these values.

firstorder: original_firstorder_Entropy specifies the uncertainty/randomness in the image values. It measures the average amount of information required to encode the image values.

firstorder: original_firstorder_Kurtosis measure of the ‘peakedness’ of the distribution of values in the image ROI. A higher kurtosis implies that the mass of the distribution is concentrated towards the tail(s) rather than towards the mean. A lower kurtosis implies the reverse: that the mass of the distribution is concentrated towards a spike near the Mean value.

firstorder: original_firstorder_Maximum maximum gray level intensity within the ROI.

firstorder: original_firstorder_Mean average gray level intensity within the ROI.

firstorder: original_firstorder_MeanAbsoluteDeviation mean distance of all intensity values from the Mean Value of the image array.

firstorder: original_firstorder_Median median gray level intensity within the ROI.

firstorder: original_firstorder_Minimum minimum gray level intensity within the ROI.

firstorder: original_firstorder_Range range of gray values in the ROI.

firstorder: original_firstorder_RootMeanSquared square-root of the mean of all the squared intensity values. It is another measure of the magnitude of the image values

firstorder: original_firstorder_Skewness measures the asymmetry of the distribution of values about the Mean value. Depending on where the tail is elongated and the mass of the distribution is concentrated, this value can be positive or negative.

firstorder: original_firstorder_StandardDeviation measures the amount of variation or dispersion from the Mean Value.

firstorder: original_firstorder_Uniformity a measure of the sum of the squares of each intensity value.

firstorder: original_firstorder_Variance the the mean of the squared distances of each intensity value from the Mean value. This is a measure of the spread of the distribution about the mean.

textural: original_glcm_Autocorrelation a measure of the magnitude of the fineness and coarseness of texture.

textural: original_glcm_ClusterProminence a measure of the skewness and asymmetry of the GLCM. A higher values implies more asymmetry about the mean while a lower value indicates a peak near the mean value and less variation about the mean.

textural: original_glcm_ClusterShade measure of the skewness and uniformity of the GLCM. A higher cluster shade implies greater asymmetry about the mean.

textural: original_glcm_ClusterTendency measure of groupings of voxels with similar gray-level values.

textural: original_glcm_Contrast a measure of the local intensity variation, favoring values away from the diagonal (𝑖=𝑗). A larger value correlates with a greater disparity in intensity values among neighboring voxels.

textural: original_glcm_Correlation value between 0 (uncorrelated) and 1 (perfectly correlated) showing the linear dependency of gray level values to their respective voxels in the GLCM.

textural: original_glcm_DiffernceEntropy measure of the randomness/variability in neighborhood intensity value differences.

textural: original_glcm_DifferenceAverage measures the relationship between occurrences of pairs with similar intensity values and occurrences of pairs with differing intensity values.

textural: original_glcm_JointEnergy Energy is a measure of homogeneous patterns in the image. A greater Energy implies that there are more instances of intensity value pairs in the image that neighbor each other at higher frequencies.

textural: original_glcm_JointEntropy measure of the randomness/variability in neighborhood intensity values.

textural: original_glcm_Id Inverse Difference. Homogeneity 1 is another measure of the local homogeneity of an image.

textural: original_glcm_Idm Inverse Difference Moment. Homogeneity 2 is a measure of the local homogeneity of an image

textural: original_glcm_Imc1 Informational Measure of Correlation1. assesses the correlation between the probability distributions of 𝑖 and 𝑗 (quantifying the complexity of the texture)

textural: original_glcm_Imc2 Informational Measure of Correlation2. also assesses the correlation between the probability distributions of 𝑖 and 𝑗 (quantifying the complexity of the texture).

textural: original_glcm_Idmn Inverse Difference Moment Normalized. inverse difference moment normalized is a measure of the local homogeneity of an image.

textural: original_glcm_Idn Inverse Difference Normalized. inverse difference normalized is another measure of the local homogeneity of an image. normalizes the difference between the neighboring intensity values by dividing over the total number of discrete intensity values.

textural: original_glcm_InverseVarience method of aggregating two or more random varaibles to minimize the variance of the weighted average.

textural: original_glcm_MaximumProbability occurrences of the most predominant pair of neighboring intensity values.

textural: original_glcm_SumAverage measures the relationship between occurrences of pairs with lower intensity values and occurrences of pairs with higher intensity values.

textural: original_glcm_SumEntropy a sum of neighborhood intensity value differences.

textural: original_glrlm_ShortRunEmphasis measure of the distribution of short run lengths, with a greater value indicative of shorter run lengths and more fine textural textures.

textural: original_glrlm_LongRunEmphasis measure of the distribution of long run lengths, with a greater value indicative of longer run lengths and more coarse structural textures.

textural: original_glrlm_GrayLevelNonUniformity measures the similarity of gray-level intensity values in the image, where a lower GLN(Gray Level Non-Uniformity) value correlates with a greater similarity in intensity values.

textural: original_glrlm_RunLengthNonUniformity (RLN) measures the similarity of run lengths throughout the image, with a lower value indicating more homogeneity among run lengths in the image.

textural: original_glrlm_RunPercentage (RP) measures the coarseness of the texture by taking the ratio of number of runs and number of voxels in the ROI.

textural: original_glrlm_LowGrayLevelRunEmphasis (LGLRE) measures the distribution of low gray-level values, with a higher value indicating a greater concentration of low gray-level values in the image.

textural: original_glrlm_HighGrayLevelRunEmphasis (HGLRE) measures the distribution of the higher gray-level values, with a higher value indicating a greater concentration of high gray-level values in the image.

textural: original_glrlm_ShortRunLowGrayLevelEmphasis (SRLGLE) measures the joint distribution of shorter run lengths with lower gray-level values.

textural: original_glrlm_ShortRunHighGrayLevelEmphasis (SRHGLE) measures the joint distribution of shorter run lengths with higher gray-level values

textural: original_glrlm_LongRunLowGrayLevelEmphasis (LRLGLRE) measures the joint distribution of long run lengths with lower gray-level values.

textural: original_glrlm_LongRunHighGrayLevelEmphasis (LRHGLRE) measures the joint distribution of long run lengths with higher gray-level values.

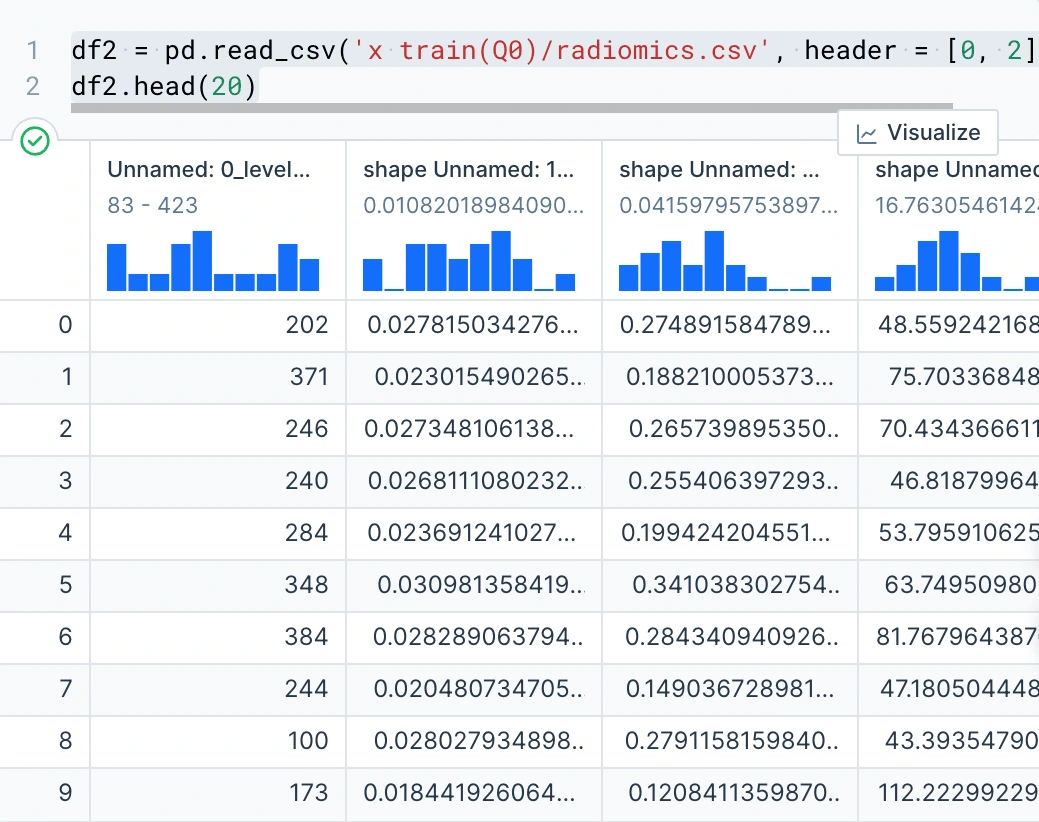

Here we are reading in the radiomics csv and displaying part of the data.

Data Wrangling train data

Next, we have to process the radiomics.csv into a one-dimensional header. Previously our header was in a three-dimensional shape. To fix this we concatenate the data headers into a one-dimensional header to be used

In this next code block we merge our datasets on the "PatientID" column in order to get one unified data frame.

Cleaning the train data

After having this, we need to remove rows that have missing values. On top of this, we need to remove rows with Mstage values of 3 and Tstage values of 5. Mstage 3 should be removed due to this being a typo as it only can go from 1-2. Likewise, Tstage 5 should be treated the same as it only moves from 1-4.

At this current moment, PatientID was not needed as it was just taking up space. We remove it from the data frame so that we are solely working with the data needed.

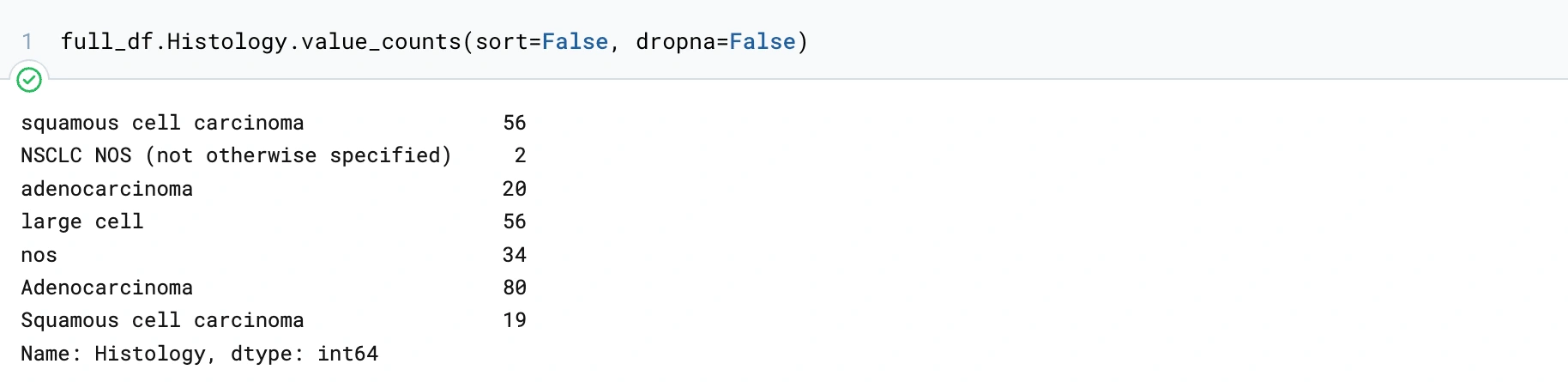

Here is the count of each value in the 'Histology' column. We can notice that there are 20 NaNs, a bunch of 'nos', and 'NSCLC NOS', which we will assume is the same as 'nos'. Knowing that we have these values, we know that we have to consider what rows we need to remove.

This code block will change the values in the Histology column containing 'NOS' to 'nos'. We had to do this for 2 other Histologies. Now we will only have 4 Histologies. This is something we didn't notice up front but knowing this makes this column easier to digest as the data is able to be confined to rows of the same types of data.

Here we are remaking the histogram after cleaning out missing or invalid values.

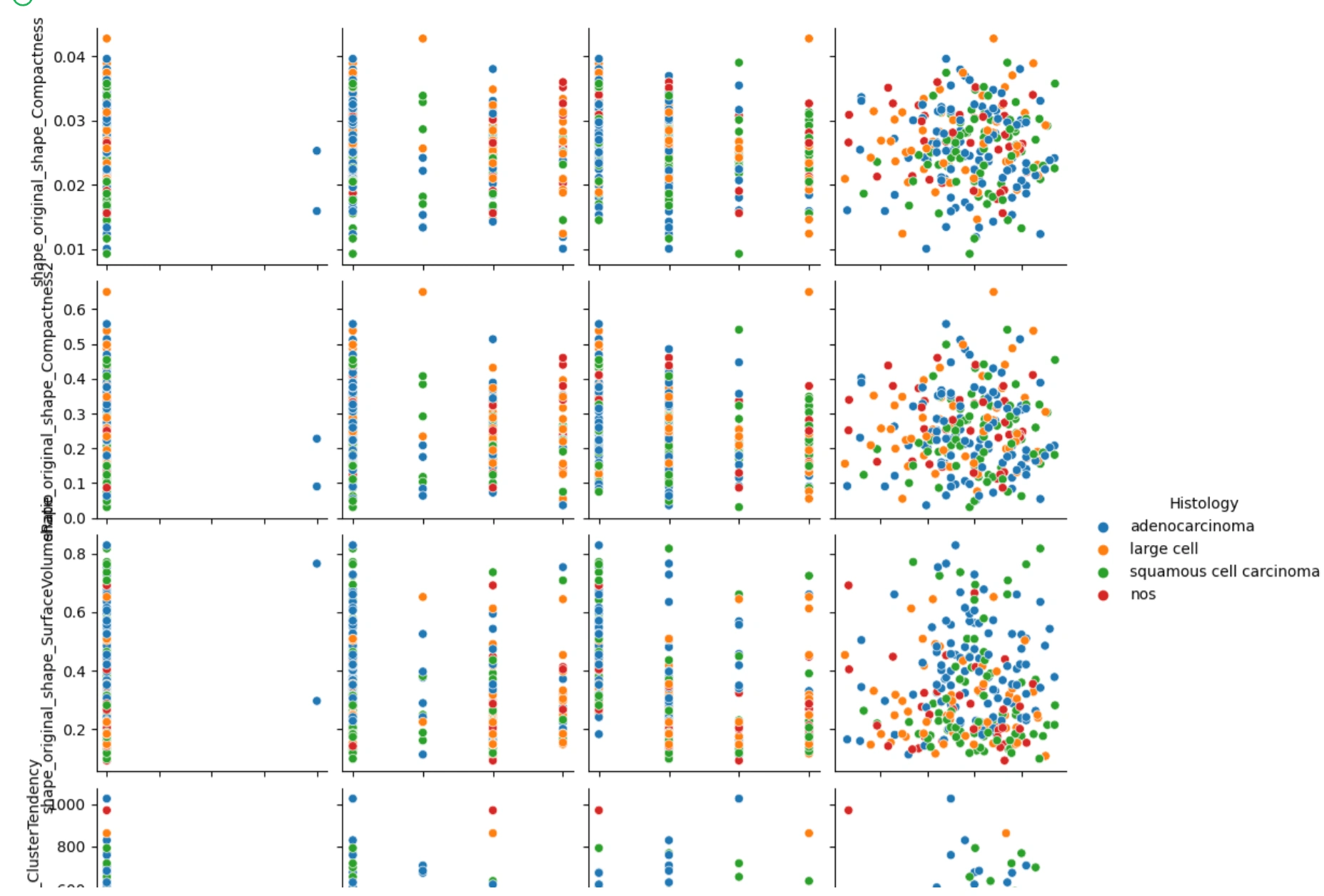

We can see here that adenocarcinoma and squamous cell carcinoma are the most represented histologies in our data. This could potentially yield a bias in the data, so we can try to separate the data into these four types. I made a pair plot using seaborn to condense some correlations down but also visualize them.

Here are the variables in this plot:

On the x-axis we have Mstage, Nstage, Tstage, and age.

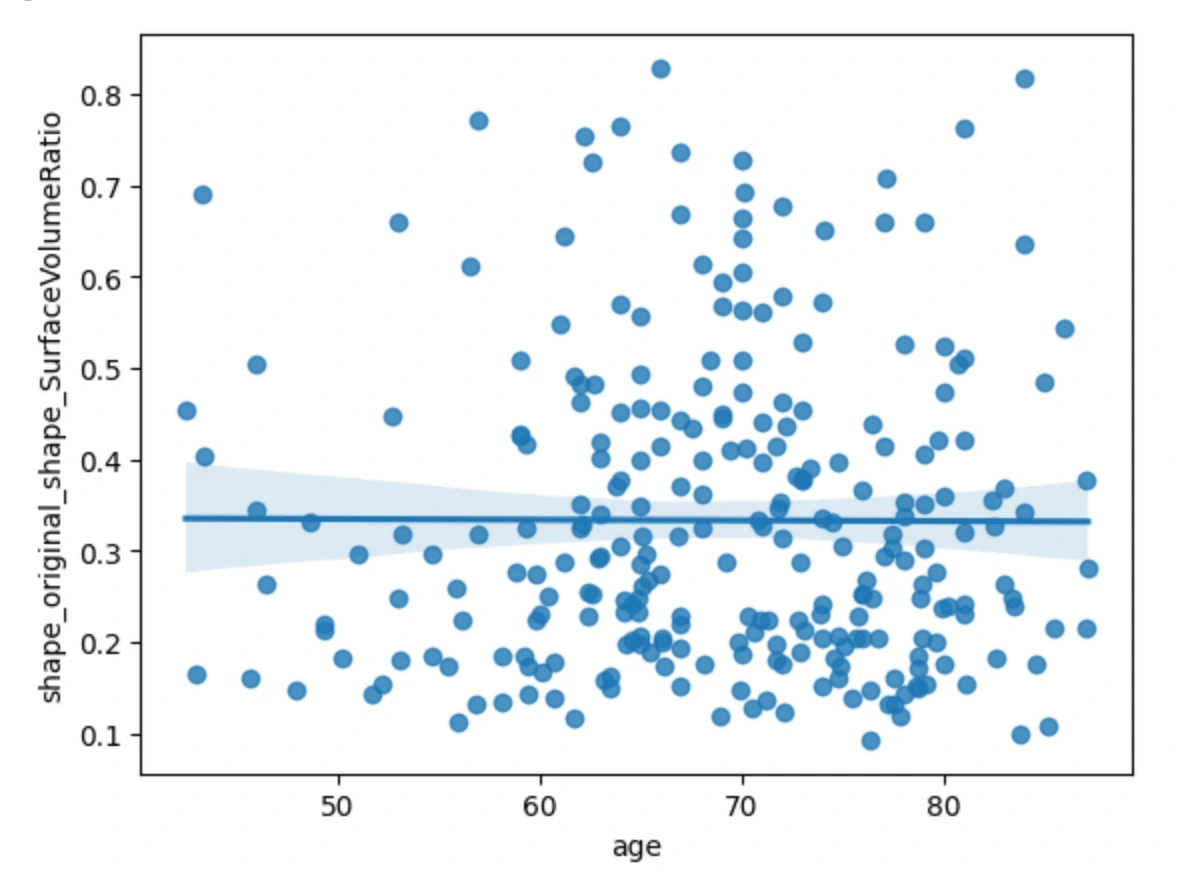

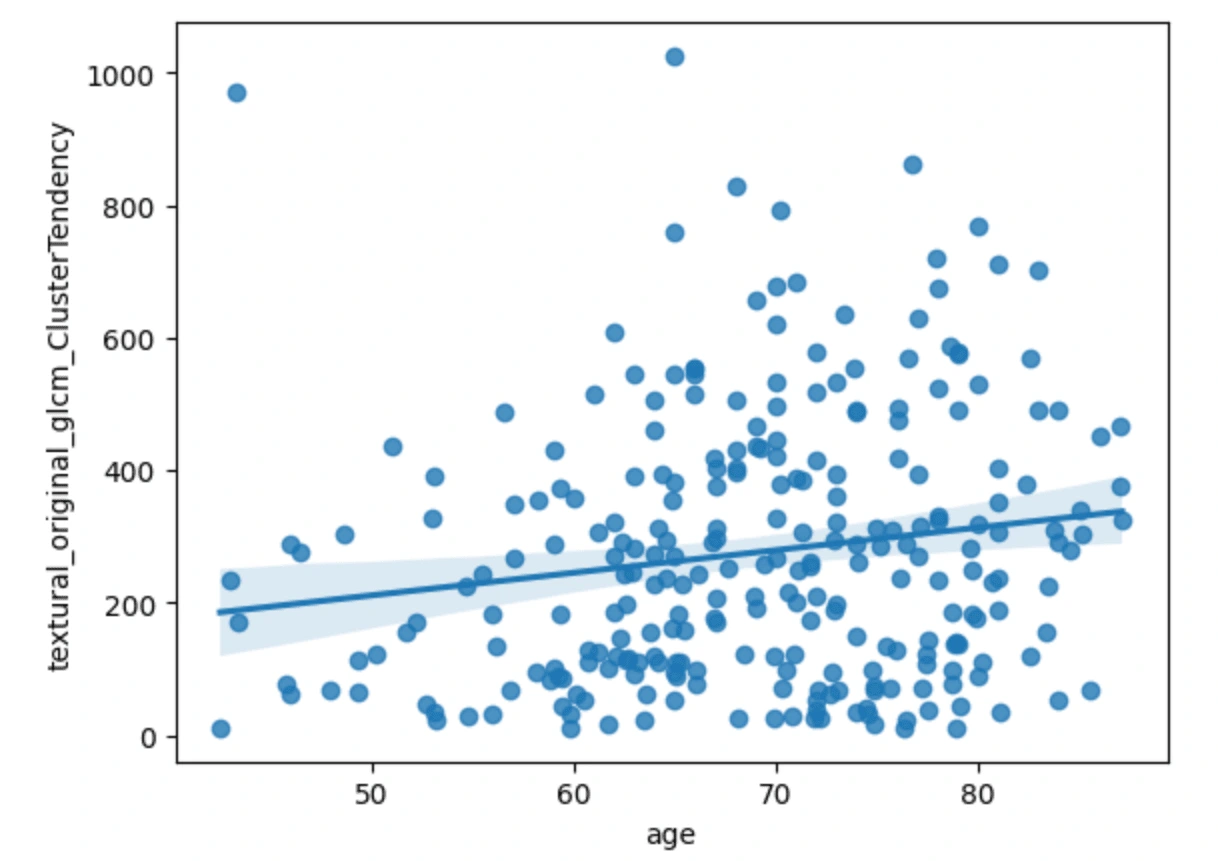

On the y-axis, we have shape_original_shape_Compactness1, shape_original_shape_Compactnesss2, shape_original_shape_SurfaceVolumeRatio, and textural_original_glcm_ClusterTendency.

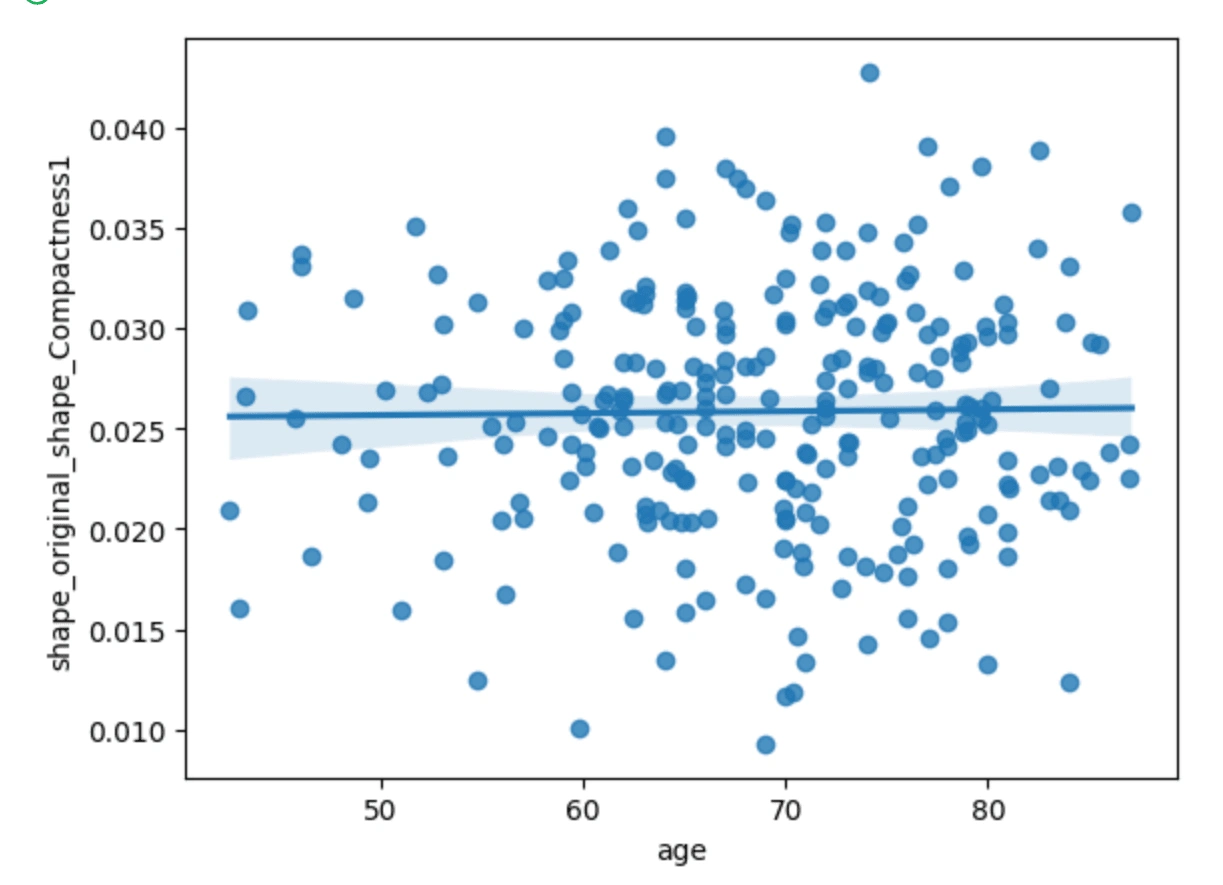

What we notice from these plots is that the values compared with the different stage categorical variables are all over the place, being that the stage variables don't have many variables to compare to. We can say the same about age but looking at where all the points in the cluster areas lie, we can potentially see a positive correlation if we were to implement a line of best fit. I will do this in the next step.

The first three scatterplots have a flat line of best fit, meanwhile, the plot of age vs cluster tendency shows a slightly positive correlation. From this we can just gather that as the age of the cancer patient goes up, the cluster tendency may become more prevalent.

Here we just dropped columns that we do not need for predicting numeric values.

Below here, we have attempted 3 different regression models: Random Forest, Linear, and Cox. We decided to go for regression models because we are predicting values and not classifying.

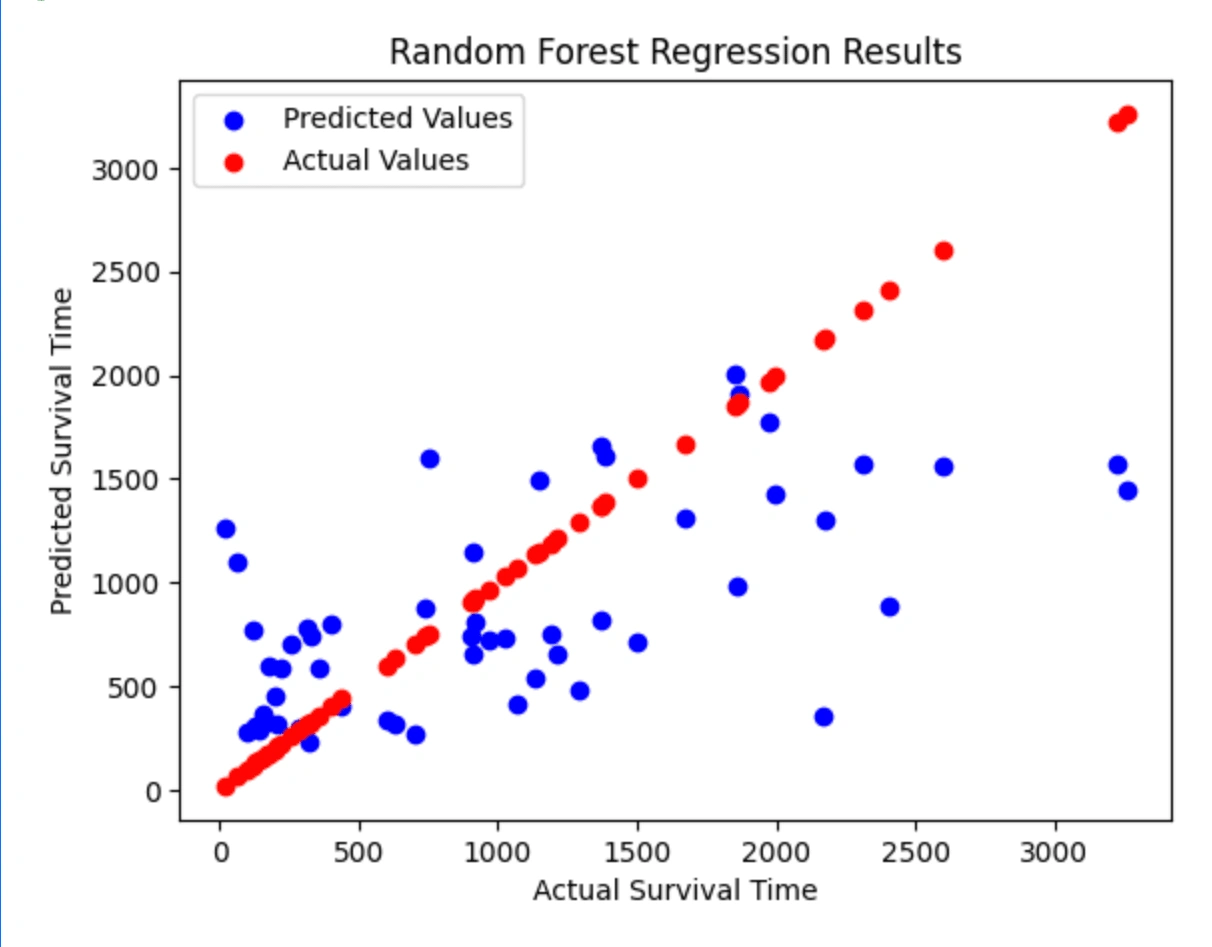

Random Forest Regression

Feature ranking:1. feature 58 (0.284684)2. feature 40 (0.049301)3. feature 39 (0.048777)4. feature 41 (0.043883)5. feature 10 (0.034985)6. feature 42 (0.032079)7. feature 12 (0.025764)8. feature 7 (0.024910)9. feature 32 (0.024882)10. feature 57 (0.022816)11. feature 54 (0.022681)12. feature 21 (0.020396)13. feature 52 (0.019036)14. feature 4 (0.017845)15. feature 45 (0.016168)16. feature 13 (0.014565)17. feature 20 (0.014112)18. feature 56 (0.014055)19. feature 11 (0.013967)20. feature 55 (0.013530)21. feature 2 (0.012676)22. feature 29 (0.012627)23. feature 44 (0.010732)24. feature 28 (0.010197)25. feature 53 (0.009865)26. feature 31 (0.009787)27. feature 16 (0.009679)28. feature 0 (0.009467)29. feature 43 (0.009063)30. feature 27 (0.009059)31. feature 6 (0.009010)32. feature 9 (0.007215)33. feature 50 (0.006699)34. feature 22 (0.006661)35. feature 8 (0.006223)36. feature 37 (0.006216)37. feature 51 (0.006039)38. feature 35 (0.006021)39. feature 49 (0.005928)40. feature 36 (0.005910)41. feature 23 (0.005650)42. feature 19 (0.005557)43. feature 46 (0.005400)44. feature 15 (0.005182)45. feature 25 (0.005094)46. feature 34 (0.004843)47. feature 5 (0.004607)48. feature 17 (0.004342)49. feature 30 (0.004238)50. feature 38 (0.004017)51. feature 18 (0.003789)52. feature 33 (0.003654)53. feature 24 (0.003369)54. feature 14 (0.003178)55. feature 26 (0.003159)56. feature 47 (0.002655)57. feature 48 (0.002325)58. feature 3 (0.001244)59. feature 1 (0.000185)RandomForestRegressor(max_depth=60, min_samples_leaf=5, min_samples_split=15, n_estimators=500)Predicted Values: [ 238. 1224. 968. 440. 1468. 765. 1665. 263. 1661. 674. 478. 325. 688. 803. 279. 1956. 831. 662. 401. 722. 371. 286. 1145. 822. 1411. 1849. 1536. 1686. 1631. 601. 352. 1619. 884. 805. 787. 344. 1510. 1328. 454. 1377. 359. 782. 866. 305. 1416. 317. 755. 1251. 583. 523. 755. 586. 355. 728.]Mean squared error: 442161.8731146045Brief explanation of the above code:

The code begins by reading in two CSV files named 'radiomics_concatenated.csv' and 'ytrain.csv' using pandas.read_csv. The first CSV file contains radiological features, while the second CSV file contains the survival times of patients. The two datasets are then merged into a single DataFrame named 'merged_df' using the pandas.merge method based on a common column 'PatientID'.

Next, the features (all columns except 'SurvivalTime') and target variable ('SurvivalTime') are separated into two separate DataFrames named 'X' and 'y', respectively, using the pandas.DataFrame.drop method.

The data is then split into training and testing sets using the train_test_split function from the sklearn.model_selection module. The training set contains 80% of the data, while the testing set contains the remaining 20%. The random_state parameter is set to 42 for reproducibility.

A random forest regression model is then created using the RandomForestRegressor class from the sklearn.ensemble module. The fit method is called on the training data to train the model.

The trained model is used to make predictions on the testing set, and the predicted values are stored in 'y_pred'. The R-squared value between the actual target values and the predicted values is calculated using the r2_score function from the sklearn.metrics module, which measures the proportion of variance in the target variable that is explained by the predictor variables.

Finally, the predicted values are printed to the console, followed by the R-squared value. This code performs the task of training a random forest regression model to predict survival times of patients with glioblastoma based on radiological and clinical features.

Random Forest Scatterplot

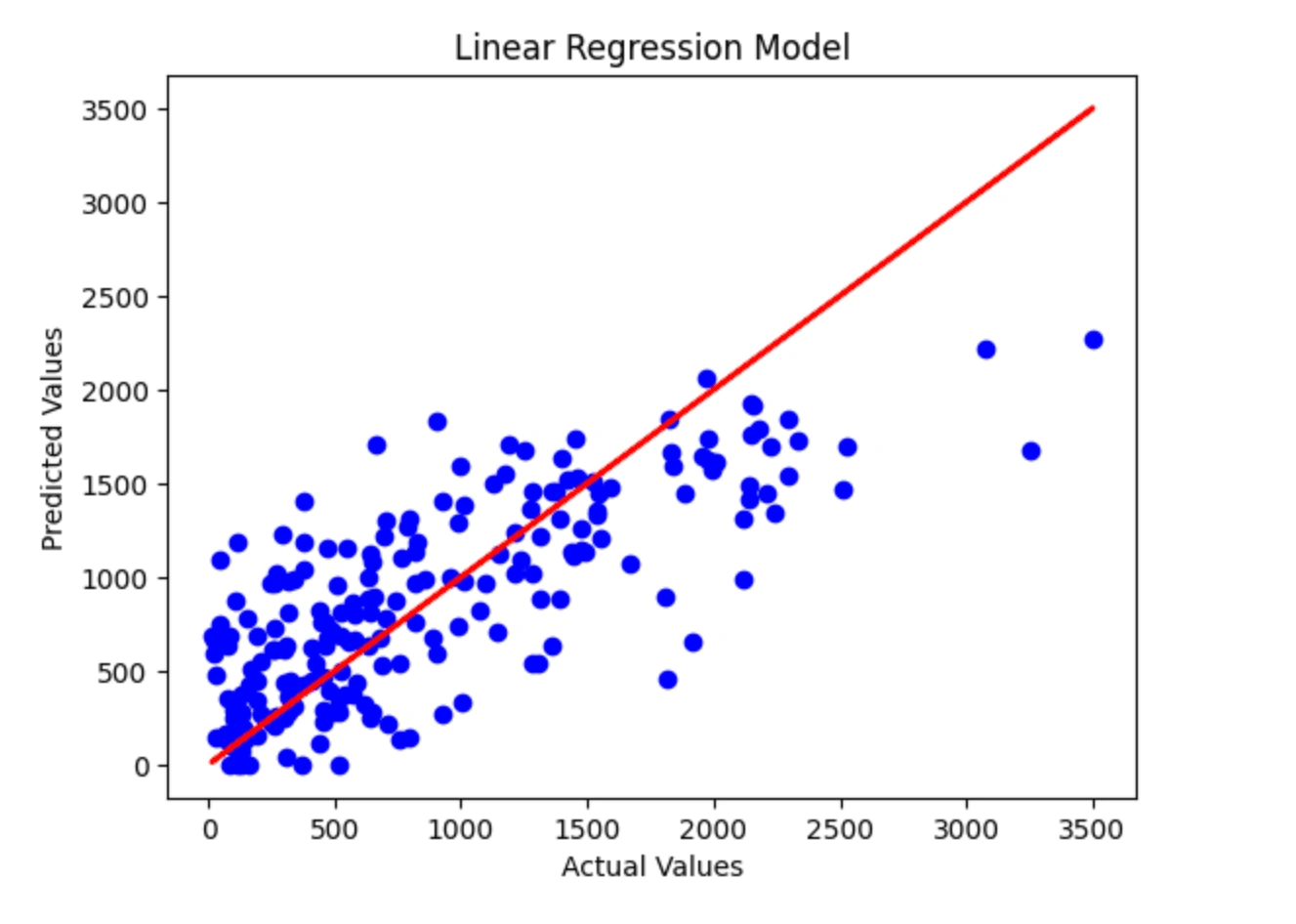

Linear Regression Model

Predicted Values: [ 412. 972. 807. 1380. 1351. 613. 820. 1338. 694. 0. 1273. 311. 328. 1159. 527. 2214. 716. 1024. 1505. 1095. 1137. 1023. 1208. 305. 821. 0. 448. 1020. 1461. 1451. 228. 1402. 149. 541. 759. 433. 1301. 537. 990. 687. 1757. 631. 1485. 1365. 1448. 960. 611. 639. 270. 37. 679. 631. 543. 998. 136. 736. 1917. 878. 275. 448. 758. 897. 1841. 626. 400. 671. 275. 1454. 148. 1402. 137. 373. 972. 208. 1930. 541. 1497. 863. 1238. 871. 686. 2269. 595. 546. 975. 280. 1590. 1186. 397. 1709. 1222. 702. 1255. 169. 984. 1003. 1707. 1182. 634. 993. 1072. 1138. 776. 1453. 132. 1836. 358. 617. 0. 1537. 651. 434. 194. 368. 429. 1092. 446. 372. 260. 1649. 162. 245. 0. 1530. 1678. 1136. 1185. 1420. 1309. 972. 295. 377. 729. 295. 74. 1122. 1845. 689. 278. 222. 423. 675. 0. 638. 1474. 1316. 759. 1309. 664. 660. 882. 1522. 283. 669. 1724. 1575. 799. 495. 784. 462. 810. 1633. 1230. 809. 1665. 887. 318. 1698. 251. 1121. 1551. 1795. 1288. 153. 277. 224. 506. 1154. 2064. 1332. 91. 356. 159. 1118. 1735. 751. 1443. 480. 1609. 890. 597. 195. 1741. 1625. 268. 691. 1105. 1696. 1680. 114. 1588. 1468. 973. 470. 984. 890. 344. 247. 1042. 1219. 1079. 1140. 0.]Here I implemented a linear regression model with feature elimination and scaling.

I noticed that if you lower the number of selected features, the r-squared score becomes significantly lower, so I am just keeping that set to 55.

I tried to implement mix/max scaling but it seemed to make the model's r-squared score lower than with the StandardScaler function.

The naive linear regression model here acquires an r-squared score around the 50-59% range, which means the model would need more work in able to better predict the survival times.

Below is an actual plot of the linear regression.

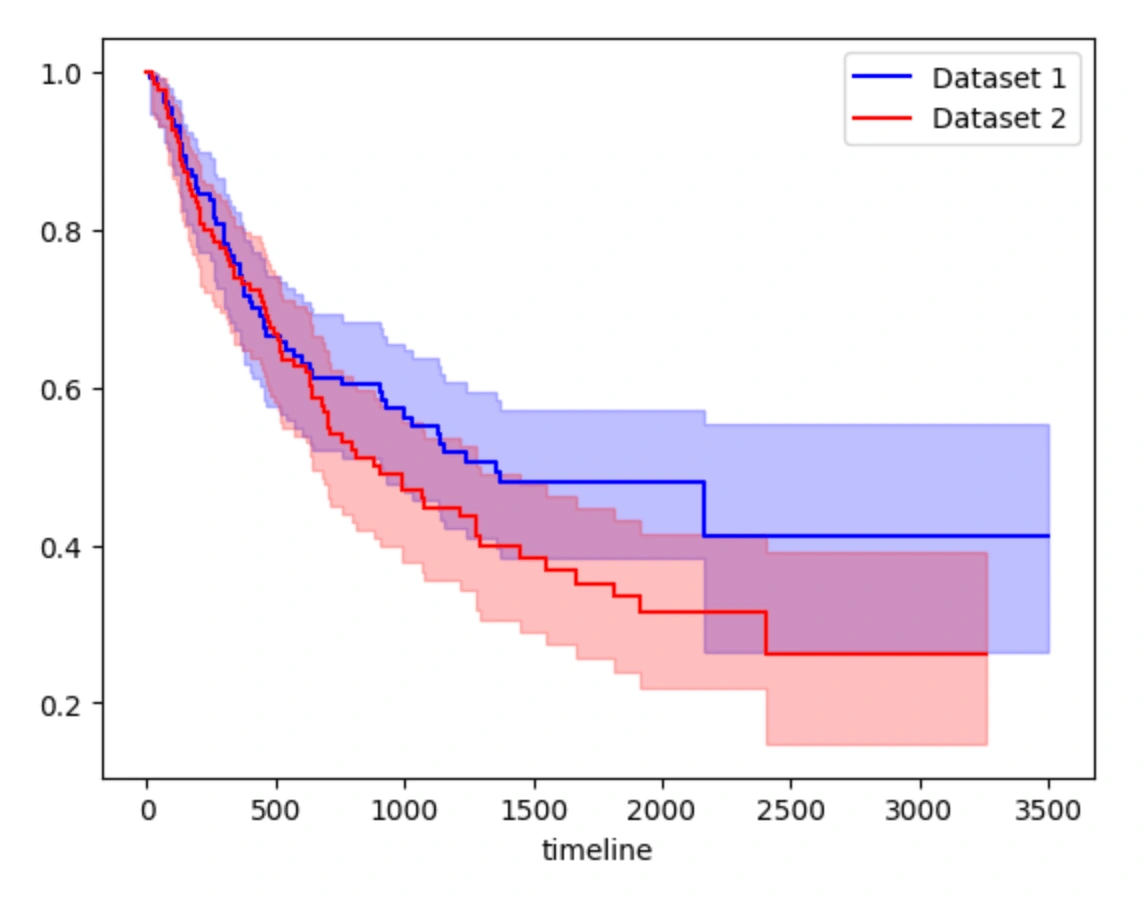

Kaplan Meier

Kaplan-Meier analysis focuses on estimating the survival function, which represents the probability of survival as a function of time. The accuracy of the Kaplan-Meier estimator is determined by how well it fits the observed data and is typically evaluated using statistical tests such as the log-rank test or the Wilcoxon test. These tests compare the observed survival curve to the expected survival curve under the null hypothesis of no difference between groups and can be used to assess whether there are significant differences in survival between different groups or subpopulations.

If the p-value is above the significance level=, you can reject the null hypothesis and conclude that there is a significant difference in survival between the groups. If the p-value is below the significance level, you cannot reject the null hypothesis and conclude that there is no significant difference in survival between the groups.

Confidence Interval for dataset 1: KM_estimate_lower_0.95 KM_estimate_upper_0.950.0 1.000000 1.00000014.0 0.947832 0.99893720.0 0.947832 0.99893721.0 0.947832 0.99893733.0 0.940768 0.996189... ... ...2309.0 0.263507 0.5542562330.0 0.263507 0.5542562600.0 0.263507 0.5542563078.0 0.263507 0.5542563500.0 0.263507 0.554256[129 rows x 2 columns]Confidence Interval for dataset 2: KM_estimate_lower_0.95 KM_estimate_upper_0.950.0 1.000000 1.00000025.0 0.948212 0.99894531.0 0.941643 0.99624643.0 0.932202 0.99272477.0 0.922425 0.988691... ... ...2515.0 0.148192 0.3915742528.0 0.148192 0.3915743222.0 0.148192 0.3915743251.0 0.148192 0.3915743259.0 0.148192 0.391574[133 rows x 2 columns]Survival function for dataset 1: KM_estimatetimeline 0.0 1.00000014.0 0.99248120.0 0.99248121.0 0.99248133.0 0.984847... ...2309.0 0.4118272330.0 0.4118272600.0 0.4118273078.0 0.4118273500.0 0.411827[129 rows x 1 columns]Survival function for dataset 2: KM_estimatetimeline 0.0 1.00000025.0 0.99253731.0 0.98507543.0 0.97761277.0 0.970149... ...2515.0 0.2623872528.0 0.2623873222.0 0.2623873251.0 0.2623873259.0 0.262387[133 rows x 1 columns]Event table for dataset 1 : removed observed censored entrance at_riskevent_at 0.0 0 0 0 133 13314.0 1 1 0 0 13320.0 1 0 1 0 13221.0 1 0 1 0 13133.0 1 1 0 0 130... ... ... ... ... ...2309.0 1 0 1 0 52330.0 1 0 1 0 42600.0 1 0 1 0 33078.0 1 0 1 0 23500.0 1 0 1 0 1[129 rows x 5 columns]Event table for dataset 2 : removed observed censored entrance at_riskevent_at 0.0 0 0 0 134 13425.0 1 1 0 0 13431.0 1 1 0 0 13343.0 1 1 0 0 13277.0 1 1 0 0 131... ... ... ... ... ...2515.0 1 0 1 0 52528.0 1 0 1 0 43222.0 1 0 1 0 33251.0 1 0 1 0 23259.0 1 0 1 0 1[133 rows x 5 columns]Log-rank test results: test_statistic p -log2(p)0 2.077145 0.14952 2.741593p-value: 0.150test statistic: 2.077

This code uses the Kaplan-Meier estimator from the lifelines package to analyze survival data. It starts by loading the survival data and feature data into separate data frames and merging them based on a common identifier column. Then, it splits the merged data into two random subsets for training purposes. The relevant columns for analysis are extracted, including the time-to-event data, event indicator data, and covariate data.

Two Kaplan-Meier estimator objects are created and trained on each of the training subsets. The survival curves for each dataset are plotted, and the confidence intervals, survival functions, and event tables are printed for Dataset 1. The same information is also calculated for dataset 2, but only the confidence interval is printed. Finally, a log-rank test is performed to compare the survival distributions of the two datasets.

The results of the log-rank test are printed, including the summary, p-value, and test statistic. The p-value provides evidence for or against the null hypothesis that the survival curves for the two datasets are the same.

Overall, this code provides a basic example of how to use the Kaplan-Meier estimator to analyze survival data and compare survival curves using a log-rank test.

Results

We tried three models for this project, Random Forest Regression, Linear Regression, and Kaplan Meier.

We decided to use Random Forest Regression because it is useful when you need to predict a target variable that has complex or non-linear relationships with the input features. It can handle missing or noisy data and is robust to outliers. It is a good choice for predicting survival times because survival analysis often involves complex relationships between features and the time-to-event variable.

As you can see above, the Random Forest Regression prints out the feature ranking based on the importance of the feature. This information is useful for feature selection or engineering, as it suggests which features might be most useful for improving the accuracy of the model. The model also prints the hyperparameters to maximize the performance of the model. Then the model predicts the output on the test data and prints the predicted values. Finally, it prints out the calculated mean squared error and the R-squared score of the model.

We decided to use Linear regression because it is a statistical method that can be used to predict the relationship between independent variables and a dependent variable. In the context of predicting lung cancer survival, this approach allows researchers to identify which variables, such as age, gender, tumor size, cancer stage, and treatment type, are most strongly associated with survival time. By quantifying the strength of the relationship between each variable and survival time, researchers can create a predictive model that accurately estimates a patient's prognosis based on their individual characteristics. Furthermore, linear regression can identify interactions between variables that may be relevant to predicting survival time. This information can be used to improve treatment decisions and ultimately, patient outcomes.

The results of the model show the predicted survival time for each patient in the training set using a linear regression model. The predicted values range from 0 to 2269, with a mean of 412. The coefficient of determination (R-squared) for the model is 0.15, indicating that the model explains 15% of the variability in the training data.

We decided to use Kaplan-Meier because it is a non-parametric statistical method used to estimate the survival or time-to-event analysis in medical research, social sciences, engineering, and other fields. It is a useful tool for analyzing time-to-event data in a variety of fields, and it is commonly used to study the progression of diseases, patient outcomes, and other factors that impact the probability of an event occurring over time.

The Kaplan-Meier Model provides confidence intervals for two datasets, a survival function, an event table and the results of a log-rank test. The confidence intervals show the upper and lower limits of the Kaplan-Meier estimates for both datasets. The survival function shows the probability of survival for each timeline. The event table provides information on the number of individuals who were removed from the study, the number of observed events, the number of censored events, the number of individuals who entered the study, and the number of individuals at risk at each timeline. The log-rank test determines whether there is a significant difference in the survival rates of the two datasets. The test produces a p-value, which represents the probability of observing the differences in survival between the two groups by chance. According to the numbers under the model, we can see that the p-value is less than 0.005 which means the survival distributions for the two datasets are significantly different. The test statistic measures the magnitude of the difference between the two groups, with larger values indicating larger differences. The test statistic for our model is quite large, which just provides evidence for the conclusion that the survival distributions for the two datasets are different.

Like this project

Posted Dec 20, 2023

Developed learning models to predict lung cancer survival times.