Walmart-SalesBot-and-SearchGPT


Posted Jan 19, 2024

Contribute to Mpasha17/Walmart-SalesBot-and-SearchGPT development by creating an account on GitHub.


WALMART-SALESBOT-AND-SEARCHGPT

◦ SalesBot-SearchGPT: Innovating Sales Agent of Walmart, and Custom Search GPT bot!


📍 Overview

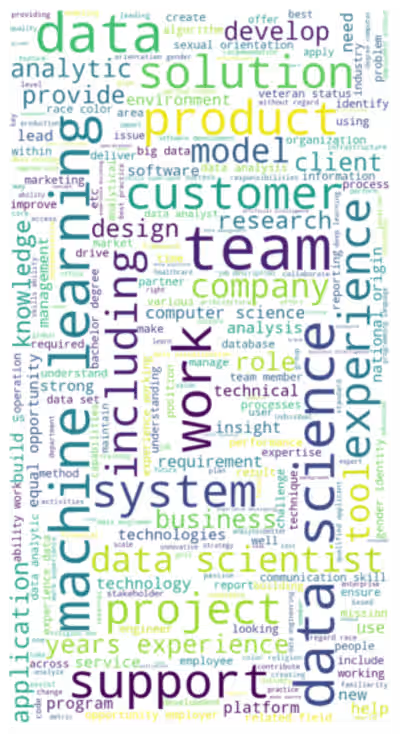

The Walmart SalesBot and SearchGPT repository provides an AI solution to improve customer service and search capabilities. The tool includes an AI chatbot that lets users interact with either the 'WalmartBot' or the 'SearchGPT' bot in real time. It uses a conversational AI agent that can fetch webpage content, gather weather details, conduct searches, and retrieve news. It also provides a sales assistance system for Walmart that implements the stages of a sales conversation and manages customer interactions. Additionally, the repository includes an API server for product search and information retrieval.

This project draws inspiration from SalesGPT and various other open-source initiatives. No code or ideas were copied directly, and sincere gratitude is extended to all open-source projects.

Walmart Bot

The Streamlit interface offers users two distinct options: Walmart Bot and SearchGPT.

Walmart Bot Functionality

The Walmart Bot lets users search for products on Walmart. It also answers specific product-related queries such as pricing inquiries and feature comparisons (e.g., "What is the price of iPhone 12?" or "Compare features of products"). Each response is accompanied by a source link or product link.

Technical Implementation

Language Models: OpenAI models are employed in the project, with the flexibility to utilize any open-source model from Hugging Face.

LangChain: The system uses LangChain for prompt engineering, RetrievalQA chains, and vector database access.

Vector Database: Pinecone serves as the vector database for storing and retrieving product data. Other vector databases, or traditional databases via LlamaIndex, can be substituted.

Embeddings: OpenAI embeddings are used in the current implementation; open-source embeddings can be substituted.
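The retrieval step behind this setup can be illustrated without any external service. The following is a minimal sketch, not the project's code: a bag-of-words vector stands in for OpenAI embeddings, an in-memory list stands in for the Pinecone index, and the product records and the names `embed`, `cosine`, and `search` are hypothetical.

```python
import math
from collections import Counter

# Hypothetical product records; the project itself keeps product data in Pinecone.
PRODUCTS = [
    {"name": "Phone A", "text": "smartphone with 128 GB storage and dual camera",
     "url": "https://example.com/phone-a"},
    {"name": "Laptop B", "text": "lightweight laptop with 16 GB memory",
     "url": "https://example.com/laptop-b"},
    {"name": "Blender C", "text": "kitchen blender with glass jar",
     "url": "https://example.com/blender-c"},
]


def embed(text):
    """Toy embedding: lowercase word counts (assumption, replaces a learned model)."""
    return Counter(text.lower().split())


def cosine(a, b):
    """Cosine similarity between two sparse word-count vectors."""
    dot = sum(count * b[word] for word, count in a.items())
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


def search(query, top_k=1):
    """Return the top_k products whose text is most similar to the query."""
    query_vec = embed(query)
    ranked = sorted(
        PRODUCTS,
        key=lambda p: cosine(query_vec, embed(p["text"])),
        reverse=True,
    )
    return ranked[:top_k]


best = search("laptop with 16 GB memory")[0]
print(best["name"], best["url"])
```

In a RetrievalQA-style flow, the retrieved text and its link would then be passed to the language model as context, which is how a response can carry a source or product link.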

API and Framework Choices

API Framework: FastAPI is chosen to develop the API, providing a high-performance and user-friendly interface. However, the system is designed to be flexible, allowing the use of alternative frameworks like Flask or others.

User Interface Framework: Streamlit is employed to construct the interface, offering a visually appealing and interactive platform. Users have the freedom to choose alternative frameworks based on their preferences.

SearchGPT Functionality

In addition to Walmart Bot, the interface also facilitates the use of SearchGPT, enabling users to search for any question and obtain answers from the internet.

Technical Implementation

Language Models: Large language models are utilized in combination with LangChain and various tools to power the SearchGPT system.

📦 Features

| Feature | Description |
| --- | --- |
| ⚙️ Architecture | The system is a conversational agent combining REST APIs with search and recommendation systems built in Python. It uses Python scripts, a Jupyter Notebook, and Docker for deployment. |
| 📄 Documentation | The repository lacks a comprehensive README.md file with a detailed explanation of the system. The absence of inline comments makes it hard to understand the modules' purpose. |
| 🔗 Dependencies | The system relies extensively on external libraries such as Pydantic, FAISS, OpenAI, Pinecone, DuckDuckGo, and many more. |
| 🧩 Modularity | The system is loosely coupled and separated into several scripts, each responsible for one aspect, which makes it adaptable, understandable, and maintainable. |
| 🧪 Testing | The system lists pytest as its testing framework but has no dedicated test cases to validate its functionality. |
| ⚡️ Performance | Performance depends heavily on the efficiency of the GPT model and the Pinecone search engine, so the system can be expected to perform well, but no performance testing or benchmarking is included. |
| 🔐 Security | No explicit security measures were found. Because the system is API-based, it needs to ensure critical information is sanitized before processing. |
| 🔀 Version Control | The repository lacks a specific version control strategy: there are no branches, tags, or comprehensive commit messages. |
| 🔌 Integrations | The system integrates with various Python libraries, APIs, the GPT model, and Walmart's product data to support the bot functionalities. |
| 📶 Scalability | The loosely coupled design supports future enhancements, provided that error handling and the architecture are improved. |

📂 Repository Structure

└── Walmart-SalesBot-and-SearchGPT/

├── Data/

├── Dockerfile

├── Images/

├── csv_to_vectore_database(pinecone).ipynb

├── requirements.txt

├── run_api.py

├── search_capabilities.py

├── streamlit_interface.py

└── walmart_functions.py

⚙️ Modules


Root

File Summary

streamlit_interface.py: The code powers an AI chatbot web application built with Streamlit. It lets users choose between the "WalmartBot" and "SearchGPT" bot types and interact with them in real time. It maintains a chat interface that shows recent user-bot conversations in a dark or light theme based on user preference. Responses from both bot types are obtained via POST requests to defined endpoints.
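The POST-based exchange between the interface and the API can be sketched as follows. This is an illustration under assumptions rather than the repository's code: the route names, the payload fields, and the helper `build_request` are hypothetical, and the port is taken from the Dockerfile summary. The request is only constructed here, not sent.

```python
import json
import urllib.request

# Assumed base URL (port 5000 per the Dockerfile summary) and hypothetical routes.
API_BASE = "http://localhost:5000"
ENDPOINTS = {"WalmartBot": "/walmart_bot", "SearchGPT": "/search_gpt"}


def build_request(bot_type, message, history):
    """Build a JSON POST request for the selected bot type."""
    if bot_type not in ENDPOINTS:
        raise ValueError(f"unknown bot type: {bot_type}")
    # Hypothetical payload shape: the latest message plus the prior turns.
    payload = {"message": message, "history": history}
    return urllib.request.Request(
        API_BASE + ENDPOINTS[bot_type],
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


req = build_request("SearchGPT", "What is the weather today?", [])
print(req.get_method(), req.full_url)
```

With the API server running, the request could be sent with `urllib.request.urlopen(req)` and the JSON body of the reply rendered in the chat view.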

search_capabilities.py: The code implements a conversational AI agent in Python. It integrates third-party tools to fetch webpage content, gather weather details, conduct searches (via DuckDuckGo), and retrieve news, and it specifies a set of behavior rules for the AI. The 'get_response' function runs an input message through the agent, which calls tools according to its guidelines, gathers the necessary data, and returns a response. The system hides detailed error responses from users, replacing them with a generic misunderstanding message.
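The tool dispatch and error masking described here can be sketched in a few lines. This is a simplified illustration, not the project's agent: a keyword rule stands in for the language model's tool selection, the tool functions are hypothetical stubs, and only the name `get_response` comes from the summary above.

```python
# Hypothetical stand-ins for the real tools (weather, news, web search).
def weather_tool(query):
    return f"(weather lookup for: {query})"


def news_tool(query):
    return f"(news lookup for: {query})"


def search_tool(query):
    return f"(web search for: {query})"


TOOLS = {"weather": weather_tool, "news": news_tool}
FALLBACK_MESSAGE = "Sorry, I did not understand that. Could you rephrase?"


def choose_tool(message, tools):
    """Keyword routing (assumption); in the project an LLM agent picks the tool."""
    lowered = message.lower()
    for keyword, tool in tools.items():
        if keyword in lowered:
            return tool
    return search_tool  # default to a general web search


def get_response(message, tools=TOOLS):
    """Run the message through a tool and mask any failure from the user."""
    try:
        tool = choose_tool(message, tools)
        return tool(message)
    except Exception:
        # Error details are hidden; the user only sees a generic message.
        return FALLBACK_MESSAGE


print(get_response("weather in Paris"))
print(get_response("who wrote Hamlet"))
```

Catching every exception keeps stack traces away from end users; logging the exception before returning the fallback would preserve the detail for debugging.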

csv_to_vectore_database(pinecone).ipynb: The code is part of a Jupyter notebook in the Walmart-SalesBot-and-SearchGPT directory. It installs the necessary dependencies (protobuf, fastapi, uvicorn, langchain, openai, tiktoken, pinecone-client, and pinecone_datasets) using pip. However, it encounters an error while installing a specific version of pinecone_datasets.
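The notebook's file name suggests that it turns CSV rows into records for the vector database. A minimal sketch of that preparation step, under stated assumptions, might look like this: the column names, the sample rows, and the helper `rows_to_records` are hypothetical, and the embedding and upload steps are left out.

```python
import csv
import io

# Hypothetical CSV layout; the real dataset's columns are not described here.
SAMPLE_CSV = """name,price,description
Phone A,499.00,Smartphone with 128 GB storage
Laptop B,899.00,Lightweight laptop with 16 GB memory
"""


def rows_to_records(csv_text):
    """Convert CSV rows into records ready to be embedded and uploaded."""
    reader = csv.DictReader(io.StringIO(csv_text))
    records = []
    for index, row in enumerate(reader):
        records.append({
            "id": f"product-{index}",
            # Text that an embedding model would later turn into a vector.
            "text": f"{row['name']}: {row['description']}",
            # Metadata kept alongside the vector for display in answers.
            "metadata": {"name": row["name"], "price": float(row["price"])},
        })
    return records


records = rows_to_records(SAMPLE_CSV)
print(records[0])
```

Each record would then be embedded and written to the index together with its id and metadata.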

run_api.py: The code comprises an API for a Walmart Sales Bot, which searches for products and returns relevant information, and a Search AI, which fetches answers from sources like DuckDuckGo, Wikipedia, a weather API, and arXiv. The API uses FastAPI and pydantic models for request/response handling. Both services start a new conversation if no user input is present and otherwise continue the existing conversation. The bots respond with messages and the potential sources of their responses. Errors raise an HTTP exception.
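The request handling described above can be sketched without the web framework. The following is an assumption-laden illustration, not the repository's code: dataclasses stand in for the pydantic models, `ApiError` stands in for FastAPI's `HTTPException`, and the field names, `generate_reply`, and `handle_chat` are hypothetical.

```python
from dataclasses import dataclass, field


@dataclass
class ChatRequest:
    conversation_history: list = field(default_factory=list)
    user_input: str = ""


@dataclass
class ChatResponse:
    message: str
    sources: list = field(default_factory=list)
    conversation_history: list = field(default_factory=list)


class ApiError(Exception):
    """Stands in for an HTTP exception in this framework-free sketch."""

    def __init__(self, status_code, detail):
        super().__init__(detail)
        self.status_code = status_code
        self.detail = detail


def generate_reply(history):
    """Placeholder for the bot call; returns (text, sources)."""
    return f"Echo of {history[-1]!r}", ["https://example.com/source"]


def handle_chat(request, reply_fn=generate_reply):
    # No user input: start a new conversation with a greeting.
    if not request.user_input.strip():
        greeting = "Hello! How can I help you today?"
        return ChatResponse(greeting, [], [f"Bot: {greeting}"])
    # Otherwise continue the existing conversation.
    history = list(request.conversation_history)
    history.append(f"User: {request.user_input}")
    try:
        text, sources = reply_fn(history)
    except Exception as exc:
        # Any failure surfaces as an API-level error.
        raise ApiError(500, str(exc)) from exc
    history.append(f"Bot: {text}")
    return ChatResponse(text, sources, history)


first = handle_chat(ChatRequest())
second = handle_chat(ChatRequest(first.conversation_history, "price of a phone"))
print(second.message, second.sources)
```

Returning the updated history with every response lets the client hold the conversation state, so the server side can stay stateless.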

walmart_functions.py: This Python script implements a sales assistance system for Walmart. It uses OpenAI's GPT-4 model and natural language understanding chains to guide sales conversations through different stages (Introduction, Product presentation, and Other queries) based on the conversation history. It integrates with Pinecone to access a vector-based product database, offering product search and information retrieval for shaping responses. A custom class manages sales conversations: it starts conversations, determines the next stage, handles customer input, and generates responses, optionally using a knowledge base for product queries.
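The stage-driven conversation manager can be sketched as a small state machine. This is a simplified stand-in, not the project's class: a keyword rule replaces the GPT-based stage analysis, the reply text is a placeholder for the generated response, and `SalesConversation`, its methods, and `PRODUCT_KEYWORDS` are hypothetical names. The three stage names come from the summary above.

```python
STAGES = ("Introduction", "Product presentation", "Other queries")

# Hypothetical keywords that signal a product-related question.
PRODUCT_KEYWORDS = ("price", "compare", "buy", "product", "feature")


class SalesConversation:
    """Tracks the history and the current stage of one sales conversation."""

    def __init__(self):
        self.history = []  # list of (role, text) tuples
        self.stage = STAGES[0]

    def start(self):
        """Open the conversation in the Introduction stage."""
        greeting = "Hi, welcome to Walmart! What are you looking for today?"
        self.history.append(("bot", greeting))
        return greeting

    def determine_stage(self):
        """Keyword rule (assumption) in place of the LLM-based stage analysis."""
        last_user = next(
            (text for role, text in reversed(self.history) if role == "user"),
            None,
        )
        if last_user is None:
            return STAGES[0]
        if any(word in last_user.lower() for word in PRODUCT_KEYWORDS):
            return STAGES[1]
        return STAGES[2]

    def handle_input(self, text):
        """Record customer input, update the stage, and produce a reply."""
        self.history.append(("user", text))
        self.stage = self.determine_stage()
        reply = f"[{self.stage}] (generated response would go here)"
        self.history.append(("bot", reply))
        return reply


conversation = SalesConversation()
conversation.start()
print(conversation.handle_input("What is the price of iPhone 12?"))
```

In the Product presentation stage, the reply step is where a product lookup against the knowledge base would be added before generating the answer.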

The Dockerfile deploys the Python-based app for the Walmart sales and search bot in a Docker environment. It installs the dependencies from requirements.txt, copies the application files into the container, and runs the application via 'run_api.py' on port 5000. Other scripts in the repository implement related functions such as data conversion to vector format, search capabilities, and a Streamlit user interface.

requirements.txt: The file lists the dependencies of the Walmart SalesBot and SearchGPT program, which uses machine learning and natural language processing for data analysis and search. Dependencies include openai, pytest for testing, faiss-cpu and pinecone-client for vector search indexing, black and flake8 for code formatting and linting, Pydantic for data validation and settings management, and chromadb and xmltodict for handling data. Search results can be enhanced by duckduckgo-search, wikipedia, and arxiv. The program includes an API server that uses uvicorn.

🚀 Getting Started

Dependencies

Please ensure the dependencies listed in requirements.txt are installed on your system.

🔧 Installation

Clone the Walmart-SalesBot-and-SearchGPT repository:

git clone https://github.com/Shaon2221/Walmart-SalesBot-and-SearchGPT

Change to the project directory:

cd Walmart-SalesBot-and-SearchGPT

Install the dependencies:

pip install -r requirements.txt

🤖 Running Walmart-SalesBot-and-SearchGPT

python run_api.py

🕹️ Running using Docker

✨ Interface

streamlit run streamlit_interface.py

🛣 Project Screenshots

🤝 Contributing

Contributions are welcome! Here are several ways you can contribute:

Submit Pull Requests: Review open PRs, and submit your own PRs.

Join the Discussions: Share your insights, provide feedback, or ask questions.

Report Issues: Submit bugs found or log feature requests for Shaon2221.

Contributing Guidelines


Fork the Repository: Start by forking the project repository to your GitHub account.

Clone Locally: Clone the forked repository to your local machine using a Git client. git clone <your-forked-repo-url>

Create a New Branch: Always work on a new branch, giving it a descriptive name. git checkout -b new-feature-x

Make Your Changes: Develop and test your changes locally.

Commit Your Changes: Commit with a clear and concise message describing your updates. git commit -m 'Implemented new feature x.'

Push to GitHub: Push the changes to your forked repository. git push origin new-feature-x

Submit a Pull Request: Create a PR against the original project repository. Clearly describe the changes and their motivations.

Once your PR is reviewed and approved, it will be merged into the main branch.

👏 Acknowledgments

This project is inspired by SalesGPT and other open-source projects. Nothing was copied and pasted, and no ideas were taken without credit. Thanks to all the contributors.

🛠️ How to deploy the project

You can deploy the project using Docker or Streamlit. You can also deploy the project using Heroku, AWS, or any other cloud platform.

🧑‍🚀 How to contact the Author

You can contact me at: LinkedIn