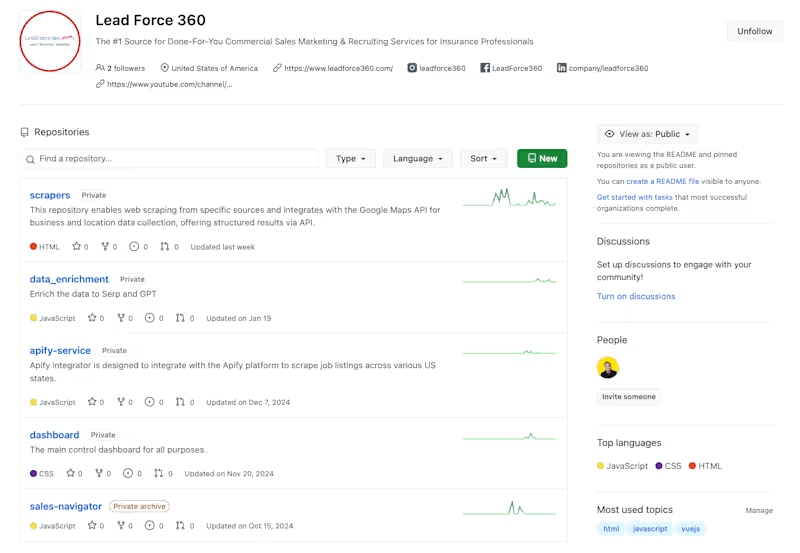

What Are Data Scrapers and Their Core Functions

Data Extraction and Automation Responsibilities

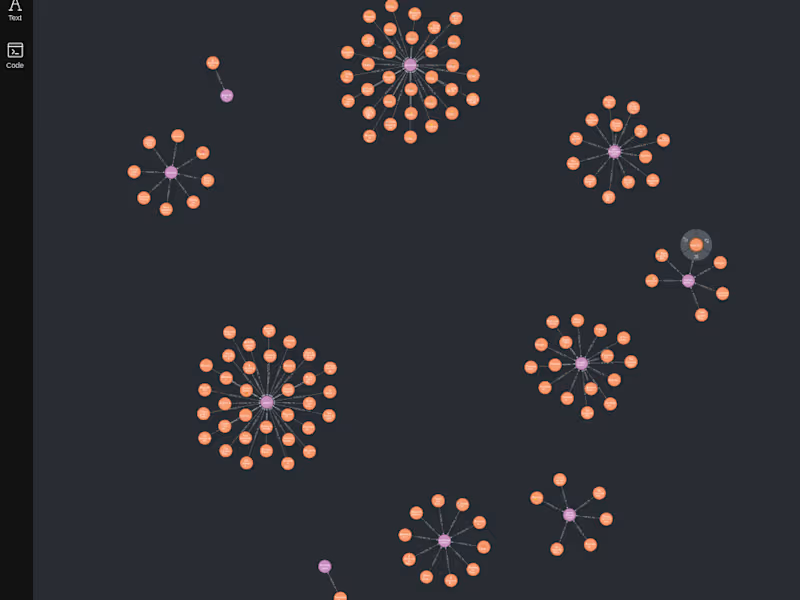

Website Architecture Navigation

Dynamic Content and JavaScript Handling
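
Many JavaScript-heavy pages ship their initial data as JSON inside a `<script>` tag and only render it in the browser. When that is the case, a scraper can often read the embedded payload directly instead of executing the page. The sketch below uses only Python's standard `html.parser`; the `type="application/json"` convention and the sample page are assumptions, and sites that build content purely through later API calls will still need a headless browser or direct calls to those APIs.

```python
import json
from html.parser import HTMLParser


class JsonScriptExtractor(HTMLParser):
    """Collects and decodes every <script type="application/json"> payload."""

    def __init__(self) -> None:
        super().__init__()
        self._inside_json = False
        self._chunks: list[str] = []
        self.payloads: list[object] = []

    def handle_starttag(self, tag, attrs):
        # attrs is a list of (name, value) pairs.
        if tag == "script" and dict(attrs).get("type") == "application/json":
            self._inside_json = True
            self._chunks = []

    def handle_data(self, data):
        # Script bodies may arrive in several chunks, so buffer them.
        if self._inside_json:
            self._chunks.append(data)

    def handle_endtag(self, tag):
        if tag == "script" and self._inside_json:
            self.payloads.append(json.loads("".join(self._chunks)))
            self._inside_json = False


if __name__ == "__main__":
    # Hypothetical page: the visible list is empty until JavaScript runs,
    # but the initial state is already embedded as JSON.
    page = (
        '<html><body><ul id="products"></ul>'
        '<script type="application/json" id="initial-state">'
        '{"products": [{"name": "Widget", "price": 9.99}]}'
        "</script></body></html>"
    )
    extractor = JsonScriptExtractor()
    extractor.feed(page)
    extractor.close()
    print(extractor.payloads[0]["products"])
```

Ordinary scripts without the JSON type are ignored, so inline JavaScript is never passed to `json.loads`.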

Essential Skills to Look for When You Hire Data Scrapers

Programming Language Proficiency

XPath and CSS Selector Expertise
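
As a small illustration of selector-based extraction, the sketch below uses the limited XPath subset supported by Python's standard `xml.etree.ElementTree`, including attribute predicates such as `[@class='product']`. The markup and class names are hypothetical. Real-world HTML is frequently not well-formed XML, so production scrapers usually rely on a lenient parser (for example lxml or BeautifulSoup), which also adds full XPath or CSS selector support.

```python
import xml.etree.ElementTree as ET

# Hypothetical, well-formed product listing.
SAMPLE = """
<html><body>
  <div class="product"><span class="name">Widget</span><span class="price">9.99</span></div>
  <div class="product"><span class="name">Gadget</span><span class="price">24.50</span></div>
  <div class="ad"><span class="name">Sponsored</span></div>
</body></html>
"""


def extract_products(markup: str) -> list[dict[str, str]]:
    root = ET.fromstring(markup.strip())
    products = []
    # ".//" searches all descendants; the predicate filters on the class attribute,
    # so the sponsored <div class="ad"> block is skipped.
    for node in root.iterfind(".//div[@class='product']"):
        name = (node.findtext("span[@class='name']") or "").strip()
        price = (node.findtext("span[@class='price']") or "").strip()
        products.append({"name": name, "price": price})
    return products


if __name__ == "__main__":
    for item in extract_products(SAMPLE):
        print(item)
```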

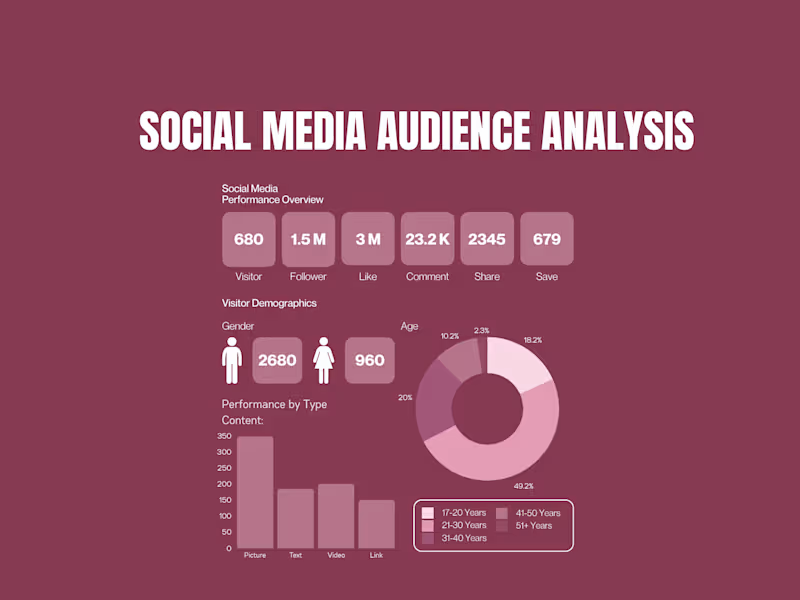

Pattern Recognition and Machine Learning Capabilities

API Integration Skills
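
When a site offers an API, a scraper mostly has to handle pagination correctly. The sketch below walks a cursor-paginated JSON endpoint with the standard library. The response shape (`items`, `next_cursor`) and the `limit`/`cursor` query parameters are assumptions for illustration, since every API defines its own scheme. The transport is injectable so the loop can be tested without network access.

```python
import json
from typing import Callable, Iterator, Optional
from urllib.parse import urlencode
from urllib.request import urlopen


def fetch_json(url: str) -> dict:
    """Default transport: GET the URL and decode a JSON body."""
    with urlopen(url, timeout=10) as response:
        return json.load(response)


def iter_items(
    base_url: str,
    fetch: Callable[[str], dict] = fetch_json,
    page_size: int = 100,
) -> Iterator[dict]:
    """Yield every item from a cursor-paginated endpoint.

    Assumes each page looks like {"items": [...], "next_cursor": "..."}
    and that a missing or empty next_cursor marks the last page.
    """
    cursor: Optional[str] = None
    while True:
        params: dict[str, object] = {"limit": page_size}
        if cursor:
            params["cursor"] = cursor
        page = fetch(f"{base_url}?{urlencode(params)}")
        yield from page.get("items", [])
        cursor = page.get("next_cursor")
        if not cursor:
            break
```

Because items are yielded lazily, a caller can stop early or stream results to storage without holding every page in memory.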

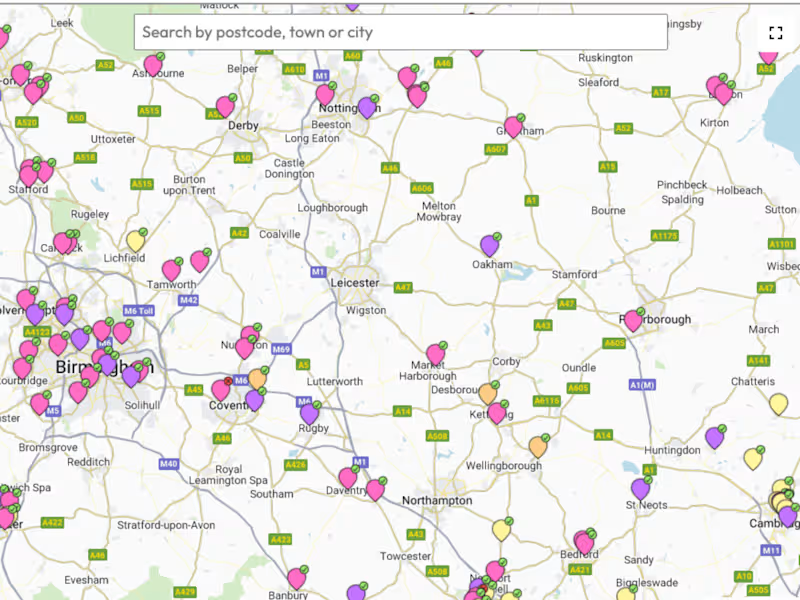

Where to Find Qualified Data Scraping Services

Specialized Technical Communities

Professional Networks and Forums

Remote Work Platforms

Cost Structures for Data Extraction Experts

Entry-Level Project Pricing

Mid-Scale Operation Costs

Enterprise Solution Investments

Hidden Cost Factors

Legal Compliance Requirements for Web Scraping Professionals

Computer Fraud and Abuse Act (CFAA) Considerations

GDPR and Data Protection Regulations

Terms of Service Agreement Compliance

Robots.txt and Rate-Limiting Protocols
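
A minimal sketch of honoring robots.txt and spacing out requests, using Python's standard `urllib.robotparser`. The robots.txt body, the `ExampleBot` user-agent, and the one-second fallback delay are hypothetical. In practice the file is fetched from the target host (for example with `RobotFileParser.set_url()` and `read()`), and a real crawler would keep one throttle per host.

```python
import time
from typing import Optional
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt body.
ROBOTS_TXT = """\
User-agent: *
Disallow: /private/
Crawl-delay: 2
"""


def build_parser(robots_txt: str) -> RobotFileParser:
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser


class Throttle:
    """Enforces a minimum number of seconds between consecutive requests."""

    def __init__(self, min_interval: float) -> None:
        self.min_interval = min_interval
        self._last: Optional[float] = None

    def wait(self) -> float:
        """Sleep only as long as needed; return the seconds slept."""
        delay = 0.0
        if self._last is not None:
            elapsed = time.monotonic() - self._last
            delay = max(0.0, self.min_interval - elapsed)
            if delay:
                time.sleep(delay)
        self._last = time.monotonic()
        return delay


if __name__ == "__main__":
    robots = build_parser(ROBOTS_TXT)
    agent = "ExampleBot"  # hypothetical user-agent string
    delay = robots.crawl_delay(agent) or 1.0  # fall back to 1 s if unspecified
    throttle = Throttle(delay)
    for url in ("https://example.com/products", "https://example.com/private/a"):
        if not robots.can_fetch(agent, url):
            print("skipping (disallowed):", url)
            continue
        throttle.wait()
        print("would fetch:", url)
```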

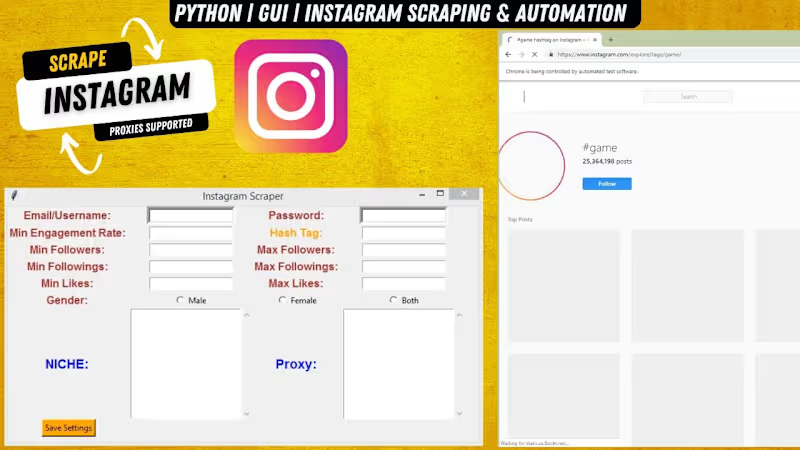

Technical Infrastructure and Tools

Cloud Platform Requirements

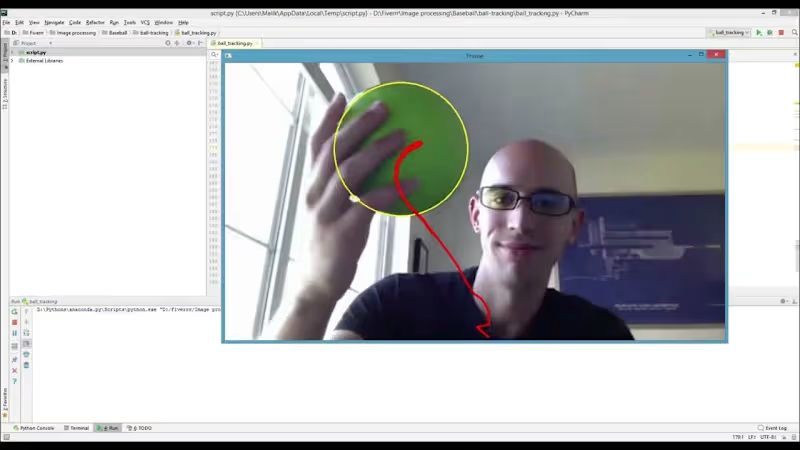

Headless Browser Technologies

IP Rotation Systems
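
One common rotation strategy is plain round-robin over a proxy pool, skipping proxies that keep failing. The sketch below assumes a hypothetical list of proxy URLs and a simple consecutive-failure counter; commercial rotation services often handle this on their side instead. Routing traffic uses the standard `urllib.request.ProxyHandler`.

```python
import urllib.request
from collections import deque


class ProxyRotator:
    """Round-robin over a proxy pool, skipping proxies with repeated failures."""

    def __init__(self, proxies: list[str], max_failures: int = 3) -> None:
        if not proxies:
            raise ValueError("proxy pool must not be empty")
        self._pool = deque(proxies)
        self._failures = {proxy: 0 for proxy in proxies}
        self.max_failures = max_failures

    def next_proxy(self) -> str:
        # Check each proxy at most once per call, rotating the pool as we go.
        for _ in range(len(self._pool)):
            proxy = self._pool[0]
            self._pool.rotate(-1)
            if self._failures[proxy] < self.max_failures:
                return proxy
        raise RuntimeError("every proxy has reached the failure limit")

    def report_failure(self, proxy: str) -> None:
        self._failures[proxy] += 1

    def report_success(self, proxy: str) -> None:
        self._failures[proxy] = 0


def opener_for(proxy: str) -> urllib.request.OpenerDirector:
    """Build a urllib opener that routes HTTP and HTTPS through one proxy."""
    handler = urllib.request.ProxyHandler({"http": proxy, "https": proxy})
    return urllib.request.build_opener(handler)
```

A caller would take `next_proxy()` for each request, open the URL with `opener_for(proxy).open(url, timeout=10)`, and then call `report_success` or `report_failure` depending on the outcome.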

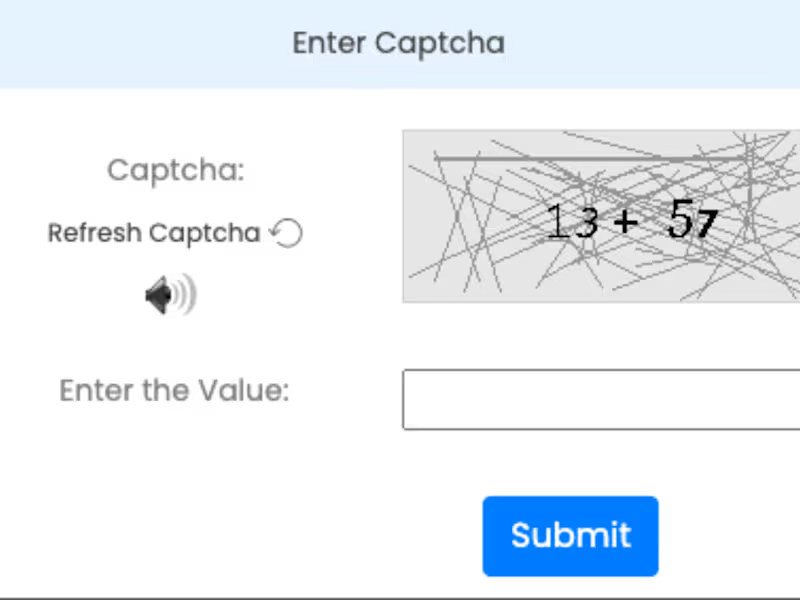

Anti-Bot Circumvention Tools

How to Evaluate Freelance Data Scrapers

Portfolio Assessment Criteria

Technical Testing Methods

Reference Verification Process

Project Management Best Practices

Scope Definition and Requirements

Communication Protocols

Quality Assurance Standards

Maintenance and Update Schedules

In-House Team vs. Outsourced Data Scraping

Control and Customization Benefits

Scalability Considerations

Cost-Benefit Analysis

Hybrid Model Implementation

Future Trends in Data Scraping

AI-Powered Extraction Systems

Blockchain-Based Scraping Networks

Real-Time Analytics Integration

Common Challenges and Solutions

Website Redesign Adaptation
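
One way to survive layout changes is to keep an ordered list of selectors (newest layout first, older layouts as fallbacks) and to raise an alert when none of them match. The selectors and markup below are hypothetical, and the sketch again relies on the XPath subset in Python's standard `xml.etree.ElementTree`, which requires well-formed input.

```python
import xml.etree.ElementTree as ET
from typing import Optional, Sequence

# Hypothetical selectors, newest layout first, older layouts as fallbacks.
PRICE_PATHS: Sequence[str] = (
    ".//span[@data-testid='price']",
    ".//div[@class='price-box']/span",
    ".//span[@class='price']",
)


def first_match(markup: str, paths: Sequence[str]) -> Optional[str]:
    """Return the text of the first path that matches, or None if all fail."""
    root = ET.fromstring(markup.strip())
    for path in paths:
        node = root.find(path)
        if node is not None and node.text and node.text.strip():
            return node.text.strip()
    return None  # every known layout failed: treat as a redesign alert


if __name__ == "__main__":
    old_layout = "<html><body><span class='price'>9.99</span></body></html>"
    new_layout = "<html><body><span data-testid='price'>10.49</span></body></html>"
    for markup in (old_layout, new_layout):
        print(first_match(markup, PRICE_PATHS))
```

Returning `None` rather than raising lets a monitoring job count failed pages and flag a likely redesign once the rate crosses a threshold.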

Anti-Scraping Mechanism Updates

Data Quality Assurance
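
A basic quality gate checks each scraped record for required fields and parseable values, and drops duplicates before anything reaches storage. The field names (`url`, `name`, `price`) and the rule that a URL identifies a record are assumptions for this sketch; real pipelines usually add schema-specific checks on top.

```python
from decimal import Decimal, InvalidOperation

REQUIRED_FIELDS = ("url", "name", "price")  # hypothetical schema


def validate_record(record: dict) -> list[str]:
    """Return a list of problems; an empty list means the record passed."""
    errors = []
    for field in REQUIRED_FIELDS:
        value = record.get(field)
        if value is None or not str(value).strip():
            errors.append(f"missing {field}")
    price = record.get("price")
    if price is not None and str(price).strip():
        try:
            if Decimal(str(price)) < 0:
                errors.append("negative price")
        except InvalidOperation:  # e.g. "N/A" or "$9.99"
            errors.append(f"unparseable price: {price!r}")
    return errors


def clean_batch(records: list[dict]) -> tuple[list[dict], list[tuple[dict, list[str]]]]:
    """Split a batch into valid records and (record, reasons) rejections."""
    seen_urls: set[str] = set()
    valid, rejected = [], []
    for record in records:
        errors = validate_record(record)
        if errors:
            rejected.append((record, errors))
        elif record["url"] in seen_urls:
            rejected.append((record, ["duplicate url"]))
        else:
            seen_urls.add(record["url"])
            valid.append(record)
    return valid, rejected
```

Keeping the rejection reasons, rather than silently discarding bad rows, makes it easier to spot a systematic extraction bug.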

Performance Optimization
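
Because scraping is dominated by network waits, a bounded thread pool is a simple way to overlap requests without overwhelming the target. The sketch below uses Python's standard `concurrent.futures`; the `fetch` callable is an assumption (any function that takes a URL and returns a result), and errors are collected per URL so one failure does not abort the batch. A small `max_workers` value should be combined with per-host rate limiting rather than replace it.

```python
from concurrent.futures import ThreadPoolExecutor, as_completed
from typing import Callable, Iterable, TypeVar

T = TypeVar("T")


def fetch_all(
    urls: Iterable[str],
    fetch: Callable[[str], T],
    max_workers: int = 8,
) -> tuple[dict[str, T], dict[str, Exception]]:
    """Run fetch() over urls concurrently; return (results, errors) keyed by URL."""
    results: dict[str, T] = {}
    errors: dict[str, Exception] = {}
    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        futures = {pool.submit(fetch, url): url for url in urls}
        for future in as_completed(futures):
            url = futures[future]
            try:
                results[url] = future.result()
            except Exception as exc:  # record the failure, keep the batch going
                errors[url] = exc
    return results, errors
```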

How to Structure Data Scraping Contracts

Scope of Work Documentation

Deliverable Specifications

Intellectual Property Rights

Confidentiality Agreements