Leveraging Internal Load Balancing on AWS ECS

This quick tutorial demonstrates how to overcome traffic distribution challenges in AWS ECS by integrating an external load balancer and optimizing autoscaling strategies.

As our user base grew, so did the demand on our Amazon Web Services (AWS) Elastic Container Service (ECS) infrastructure. At first, we relied on ECS service autoscaling with CPU-based policies to manage application load, scaling out tasks whenever average CPU usage crossed predefined thresholds. As traffic increased, however, this approach started falling short, and our services struggled to distribute traffic efficiently. This led us to rethink our architecture and explore new scaling strategies, ultimately adding an external load balancer to manage traffic and load across tasks.

The Problem: Uneven Traffic Distribution

Our ECS services, built with Node.js, handle growing volumes of user traffic. As traffic surged, so did the compute load, and our busiest tasks consistently ran above 90% CPU utilization. This was problematic for a few reasons:

Default Autoscaling Limits: ECS service autoscaling typically uses average CPU utilization across all tasks as its key metric. This meant tasks scaled reactively rather than proactively, and because a few saturated tasks barely move a service-wide average, the policy was blind to the uneven load distribution we observed.

Node.js Single-Threaded Limitation: Node.js runs application JavaScript on a single event-loop thread, so each task effectively used just one vCPU. Allocating more CPU per task (scaling vertically) was therefore ineffective for our setup; we needed to scale horizontally instead.

Task Scaling Complexity: As we added more tasks, we noticed that traffic was not equally distributed among them. Requests would often “stick” to certain tasks, creating an imbalance where some tasks ran under-utilized, while others were overloaded.
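We never pinned down exactly why requests “stuck” to certain tasks, so the following is only an assumption about one common mechanism: if the routing decision is made once per connection rather than once per request, a client holding a long-lived keep-alive connection sends all of its requests to whichever task it landed on. The sketch below compares that with per-request round robin, using made-up request counts for two heavy clients and eight light ones across four tasks.

```python
from itertools import cycle


def per_connection(client_requests: list[int], num_tasks: int) -> list[int]:
    """Each client's connection is assigned to a task round robin once;
    every request from that client then goes to the same task (pinning)."""
    load = [0] * num_tasks
    for i, requests in enumerate(client_requests):
        load[i % num_tasks] += requests
    return load


def per_request(client_requests: list[int], num_tasks: int) -> list[int]:
    """Every individual request is assigned to the next task round robin."""
    load = [0] * num_tasks
    task = cycle(range(num_tasks))
    for requests in client_requests:
        for _ in range(requests):
            load[next(task)] += 1
    return load


# Hypothetical traffic: two heavy clients and eight light ones, four tasks.
clients = [500, 400, 20, 20, 20, 20, 20, 20, 20, 20]
print(per_connection(clients, 4))  # [540, 440, 40, 40]
print(per_request(clients, 4))     # [265, 265, 265, 265]
```

The total work is identical in both cases; only the granularity of the routing decision changes, and with pinning two tasks end up doing more than ten times the work of the other two.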

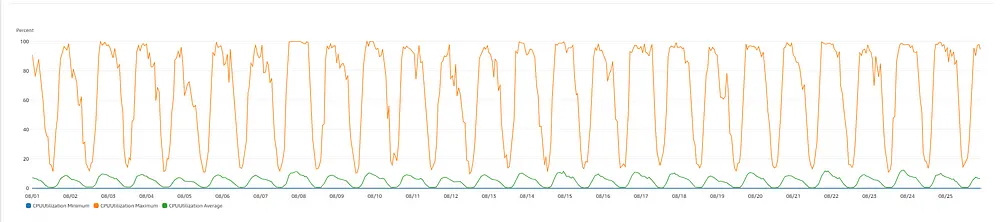

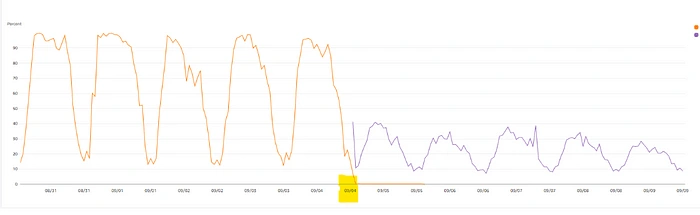

Figure 1.1 CPU Utilization of the service without the ALB

As the metrics above show, with 18 tasks running during peak hours, average CPU utilization stays below 20% while maximum utilization stays above 90%. A scaling policy keyed to the average therefore had little reason to scale out, even though some tasks were saturated.
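The arithmetic behind that gap is easy to reproduce. The per-task readings below are made up, chosen only to mirror the shape of Figure 1.1 (18 tasks, a couple of them saturated); they are not our actual data, and the 70% scale-out threshold is likewise hypothetical.

```python
from statistics import mean

# Hypothetical per-task CPU readings (%) for 18 tasks at peak:
# two saturated tasks and sixteen mostly idle ones.
task_cpu = [95.0, 95.0] + [10.0] * 16

SCALE_OUT_THRESHOLD = 70.0  # hypothetical average-CPU scale-out threshold

avg_cpu = mean(task_cpu)  # the service-wide figure an average-CPU policy sees
max_cpu = max(task_cpu)   # what the busiest task is actually experiencing

print(f"average={avg_cpu:.1f}%  max={max_cpu:.1f}%")  # average=19.4%  max=95.0%
print("scale out?", avg_cpu > SCALE_OUT_THRESHOLD)    # scale out? False
```

The two saturated tasks barely register: the average would only cross the threshold once most tasks were busy, which is exactly what uneven distribution prevents.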

Initial Attempt: Scaling Out Tasks with Autoscaling Policies

Our initial approach was to set up more granular autoscaling policies. We increased the number of tasks, configured autoscaling on average CPU utilization thresholds, and hoped the load would spread evenly across tasks. Unfortunately, because of the limits of the internal traffic distribution we relied on within ECS, combined with scaling on average CPU, the new tasks didn’t take enough load off the overloaded ones.

The traffic distribution remained uneven, causing request failures and high latency. Here’s why this didn’t solve our problem:

CPU-Based Scaling Delays: Autoscaling policies that rely solely on average CPU utilization don’t account for the real-time traffic spikes to individual tasks.

Inconsistent Traffic Spread: The internal traffic distribution we relied on within ECS didn’t spread requests evenly, leaving some tasks heavily loaded while others sat idle.
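To make the scaling side concrete, here is a simplified, proportional model of target tracking: resize the service so the metric would land on its target. This is an assumption for illustration, not AWS’s exact algorithm (which also applies cooldowns and other safeguards); the helper name, the 60% target and the readings are all hypothetical.

```python
import math


def proportional_desired_count(current_tasks: int, metric: float, target: float,
                               min_tasks: int = 1, max_tasks: int = 50) -> int:
    """Hypothetical helper, not an AWS API: resize the service so the metric
    would land on its target, clamped to [min_tasks, max_tasks]."""
    desired = math.ceil(current_tasks * metric / target)
    return max(min_tasks, min(max_tasks, desired))


TARGET_CPU = 60.0  # hypothetical target value for average CPU (%)

# Made-up readings: 2 tasks at 95% and 16 tasks at 10% (18 tasks in total).
avg_cpu = (2 * 95.0 + 16 * 10.0) / 18
max_cpu = 95.0

print(proportional_desired_count(18, avg_cpu, TARGET_CPU))  # 6: scale in
print(proportional_desired_count(18, max_cpu, TARGET_CPU))  # 29: scale out
```

Neither number is right: the average-driven estimate would remove tasks while two are saturated, and a max-driven one would nearly double the fleet to relieve two hot spots. The underlying problem is the uneven distribution, which no choice of CPU statistic fixes on its own.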

The Solution: External Load Balancer with Target Group Mapping

After analyzing the limitations of ECS’s built-in load balancing, we opted for an external load balancer to control and distribute traffic across tasks more effectively. Here’s how we implemented the solution:

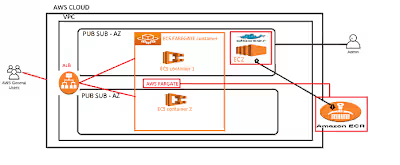

Setting Up an External Load Balancer: We deployed an AWS Application Load Balancer (ALB) external to our ECS service, which gave us finer-grained control over how traffic is routed to our tasks.

Mapping Tasks to Target Groups: With the ALB, we created target groups for our ECS tasks. Because tasks are registered with these target groups dynamically as they start and deregistered as they stop, the ALB always spreads traffic across the current set of running tasks, which kept the load more even.

Custom Traffic Distribution Policies: Using the ALB’s built-in health checks and weighted distribution capabilities, we tuned traffic flow based on task health and available capacity. This kept individual tasks from becoming overloaded and helped maintain steady application performance.
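ALB target groups can use a `least_outstanding_requests` routing algorithm (set through the `load_balancing.algorithm.type` target group attribute) instead of the default round robin. We haven’t described which algorithm we chose, so treat the following purely as an illustration of the idea behind least outstanding requests: an in-memory toy model, not how the ALB is implemented. The `Target` and `LeastOutstandingBalancer` names are hypothetical.

```python
from dataclasses import dataclass, field


@dataclass
class Target:
    name: str
    healthy: bool = True
    outstanding: int = 0  # requests sent to this target but not yet answered


@dataclass
class LeastOutstandingBalancer:
    """Toy model: route each request to the healthy target with the fewest
    in-flight requests."""
    targets: list[Target] = field(default_factory=list)

    def acquire(self) -> Target:
        candidates = [t for t in self.targets if t.healthy]
        if not candidates:
            raise RuntimeError("no healthy targets")
        # min() returns the first minimum, so list order breaks ties.
        chosen = min(candidates, key=lambda t: t.outstanding)
        chosen.outstanding += 1
        return chosen

    def release(self, target: Target) -> None:
        # Call when the target finishes a request.
        target.outstanding -= 1


# Task "a" is stuck on slow requests, so new work flows to "b" and "c".
lb = LeastOutstandingBalancer([Target("a", outstanding=5), Target("b"), Target("c")])
picks = [lb.acquire().name for _ in range(4)]
print(picks)  # ['b', 'c', 'b', 'c']
```

Round robin would keep handing task "a" its share regardless of how backed up it is; counting in-flight requests lets slow or busy tasks shed new work automatically.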

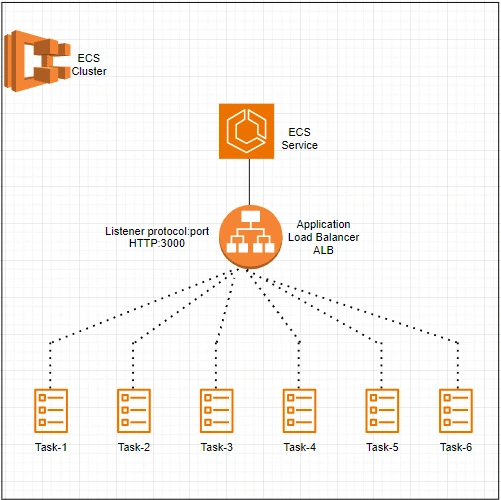

Updated Architecture Overview

Figure 1.2 Updated Architecture Diagram with ALB

The revised architecture allowed for more flexibility in managing traffic and optimizing task health:

Traffic flows through the ALB, which dynamically routes requests to ECS tasks registered in specific target groups.

Health checks regularly verify the status of each task, and the ALB stops routing traffic to a task that fails them, for example because it is too overloaded to respond in time or has connectivity issues.

Autoscaling is still in place for adding tasks; each new task is registered with the target group automatically and starts receiving traffic as soon as it passes its health checks.
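ALB health checks mark a target unhealthy after a configurable number of consecutive failed checks (`UnhealthyThresholdCount`) and healthy again after a number of consecutive successes (`HealthyThresholdCount`), and the load balancer normally routes requests only to healthy targets. The toy model below sketches that threshold logic under simplifying assumptions: the `HealthTracker` class and the threshold values are hypothetical, and timeouts, intervals and the initial registration state are not modeled.

```python
from dataclasses import dataclass


@dataclass
class HealthTracker:
    """Toy model of threshold-based health checking, in the spirit of the
    ALB's HealthyThresholdCount / UnhealthyThresholdCount settings."""
    healthy_threshold: int
    unhealthy_threshold: int
    healthy: bool = True
    _streak: int = 0  # consecutive results contradicting the current state

    def record(self, check_passed: bool) -> bool:
        if check_passed == self.healthy:
            self._streak = 0  # result agrees with the current state
        else:
            self._streak += 1
            limit = self.unhealthy_threshold if self.healthy else self.healthy_threshold
            if self._streak >= limit:
                self.healthy = not self.healthy  # flip state, start counting again
                self._streak = 0
        return self.healthy


# Hypothetical thresholds: 2 failures to mark unhealthy, 3 passes to recover.
tracker = HealthTracker(healthy_threshold=3, unhealthy_threshold=2)
results = [True, False, True, False, False, True, True, True]
print([tracker.record(r) for r in results])
# [True, True, True, True, False, False, False, True]
```

A single slow response does not pull a task out of rotation; only a run of consecutive failures does, and the task has to prove itself with several consecutive passes before it gets traffic again.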

This updated setup resulted in:

Efficient Task Utilization: Tasks received a much more even share of requests, minimizing idle capacity while avoiding overloads.

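Enhanced Scalability: With load spread evenly across tasks, service-wide utilization metrics now reflect what each task is actually doing, so autoscaling based on real-time resource utilization responds to genuine demand more dynamically and proactively.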

Improved Reliability and User Experience: With optimized load distribution, we saw lower error rates and response times, contributing to a smoother user experience.

Figure 1.3 ECS service metrics after the Load balancer implementation

As the metrics show, with the same volume of traffic the service now handles requests comfortably, even with fewer running tasks.

Key Takeaways

Managing and scaling Node.js applications in a containerized environment like ECS comes with its unique challenges, particularly when handling traffic spikes and distribution. Here’s what we learned:

Move Beyond CPU-Based Autoscaling: Average CPU utilization metrics alone are often insufficient for managing real-world traffic demands, especially when traffic patterns are unpredictable.

Leverage External Load Balancers: Offloading traffic distribution to an external load balancer, such as ALB, can significantly improve traffic flow, task health, and overall service reliability.

Tailor Autoscaling to Application Needs: Autoscaling policies should be tailored to the specific demands and constraints of your application, especially for Node.js applications, whose single event-loop thread cannot use multiple cores within one process.
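As one concrete way to move beyond CPU alone: once an ALB sits in front of the service, ECS Service Auto Scaling offers `ALBRequestCountPerTarget` as a predefined target-tracking metric alongside average CPU and memory utilization. When a service has several target-tracking policies, Application Auto Scaling scales out if any policy calls for it and scales in only if all of them do. The sketch below models that rule with a simplified proportional estimate (an approximation, not AWS’s exact algorithm); the helper names and all numbers are hypothetical, and we haven’t stated here which metrics we ultimately used.

```python
import math


def policy_estimate(current_tasks: int, metric: float, target: float) -> int:
    """Hypothetical helper: simplified proportional estimate for one
    target-tracking policy (real target tracking also uses cooldowns etc.)."""
    return math.ceil(current_tasks * metric / target)


def combined_desired(estimates: list[int]) -> int:
    """Any policy asking for more tasks wins (scale out); the count only
    drops when every policy asks for fewer (scale in). max() gives both."""
    return max(estimates)


current = 6
cpu_estimate = policy_estimate(current, metric=45.0, target=60.0)      # hypothetical CPU %
req_estimate = policy_estimate(current, metric=1200.0, target=1000.0)  # hypothetical requests per target
print(cpu_estimate, req_estimate, combined_desired([cpu_estimate, req_estimate]))  # 5 8 8
```

Here CPU alone would shrink the service to 5 tasks, but the request-count policy sees each task handling more than its target and wins, growing the service to 8.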

Implementing an external load balancer with ECS not only solved our immediate traffic distribution challenges but also future-proofed our architecture for continued growth. We’re now equipped to handle larger user loads while providing a smoother, more reliable experience, even during peak traffic times.

If your ECS setup is struggling with similar load distribution issues, consider integrating an external load balancer and target groups in your scaling strategy — it just might be the missing piece in your scaling puzzle!

Posted Jan 9, 2025
