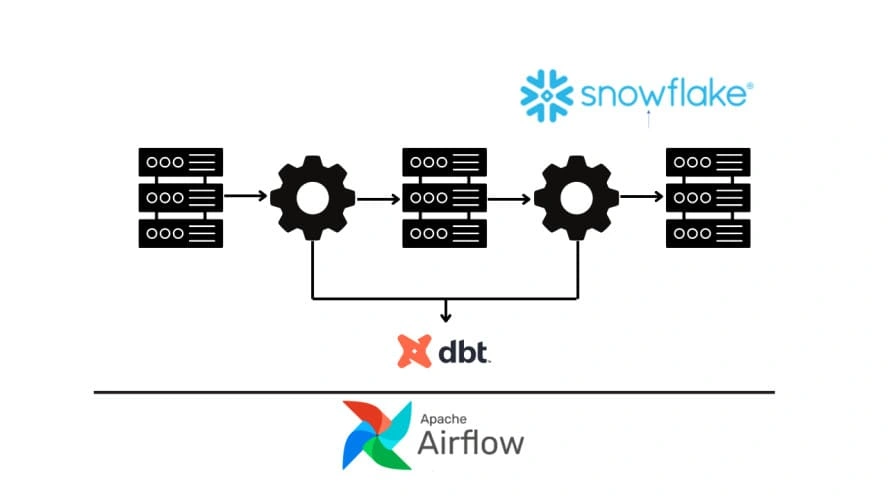

End-to-End ELT Pipeline with Snowflake, Amazon S3, Airflow & DBT

Designed and implemented an end-to-end ELT (Extract, Load, Transform) pipeline to streamline data ingestion, processing, and analytics for a data-driven organization. The solution utilized Snowflake as the data warehouse, Amazon S3 for data storage, Airflow for workflow orchestration, and DBT for transformations.

Technologies Used:

Data Warehouse: Snowflake.

Data Storage: Amazon S3.

Workflow Orchestration: Apache Airflow.

Data Transformation: DBT (Data Build Tool).

Visualization: Tableau, Looker.

Programming/Markup: Python (Airflow), SQL (DBT and Snowflake).

Architecture and Workflow:

Data Ingestion (Extract)

Extracted data from multiple sources, including REST APIs, third-party systems, and flat files.

Data was stored in Amazon S3 buckets in a structured hierarchy organized by date, source, and format.

Data Loading (Load)

Leveraged Airflow DAGs to automate the loading of raw data from S3 into Snowflake staging tables.

Implemented retry logic and alerting to ensure robust and reliable data loading.

Data Transformation (Transform)

Used DBT to define and execute SQL-based transformations in Snowflake.

Created modular and reusable DBT models for:

Cleaning and standardizing data.

Joining data from multiple sources.

Creating fact and dimension tables for analytics.

Orchestration and Scheduling

Designed Airflow workflows to manage the pipeline end-to-end, including:

Scheduling data extraction jobs.

Loading data into Snowflake.

Triggering DBT transformations.

Monitoring and alerting on pipeline status.

Analytics and Insights

Enabled near-real-time reporting and analytics by transforming raw data into actionable insights.

Data was consumed by BI tools like Tableau and Looker for visualization.

Key Features:

Modular Design: Separation of extraction, loading, and transformation processes for scalability.

Scalable Data Storage: Used Amazon S3 to handle large volumes of raw and intermediate data.

Version-Controlled Transformations: DBT ensured reproducibility and traceability of data models.

Robust Orchestration: Airflow ensured reliable pipeline execution with failure handling and alerting.

Optimized Performance: Snowflake's compute power enabled fast transformations and analytics.

Like this project

Posted Dec 3, 2024

This project highlights my skills in data engineering, workflow automation, and modern ELT practices using technologies Airflow, DBT, and Snowflake.

Likes

0

Views

96