Conur Webpage

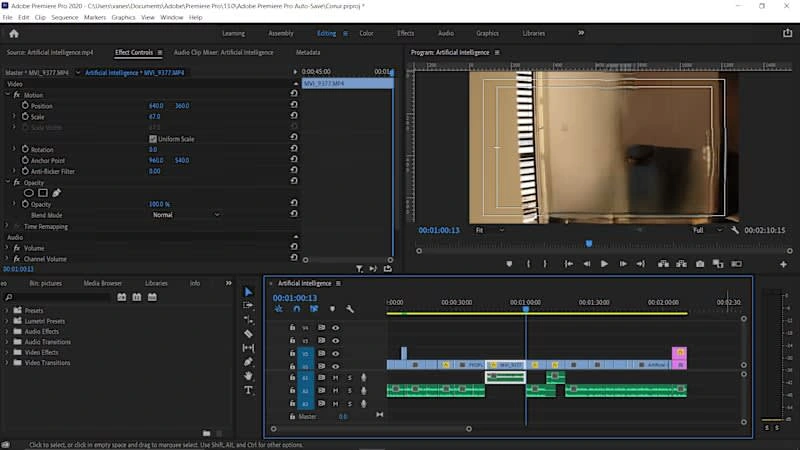

Conur Video Introduction

Elon Musk, Bill Gates and Stephen Hawking have all warned about the dangers of Artificial Superintelligence. Should the rest of us be worried too?

Artificial Superintelligence (ASI) is any AI that exceeds human-level intelligence, even slightly. However, a self-improving superintelligence is almost certain to improve itself very quickly, and an AI that reaches this level would soon be leagues ahead of us. This raises the question: how can we ensure that an ASI's goals still align with ours, even after we have lost control of it?

Posted Mar 22, 2022

AI webpage design