AI Business Screener Web Application

Overview

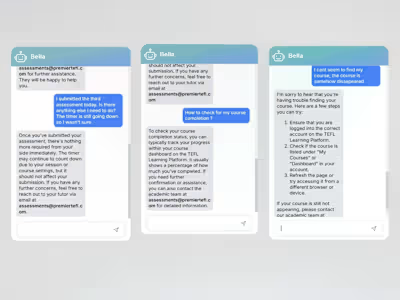

The AI Screener is designed to give startups and incubators a competitive edge by automating surface-level research and business auditing. Whether you're evaluating a batch of companies for investment, partnership, or market analysis, this tool delivers fast, actionable data by crawling company URLs, extracting relevant business data, and presenting it in an interactive, easy-to-read worksheet.

But that's just the beginning: this AI-powered screener serves as a gateway to a suite of scalable solutions. The extracted data can be exported and integrated into your own custom workflows for deeper analysis, strategic decision-making, and growth.

Challenge

Manually gathering business-related data is often time-consuming and tedious.

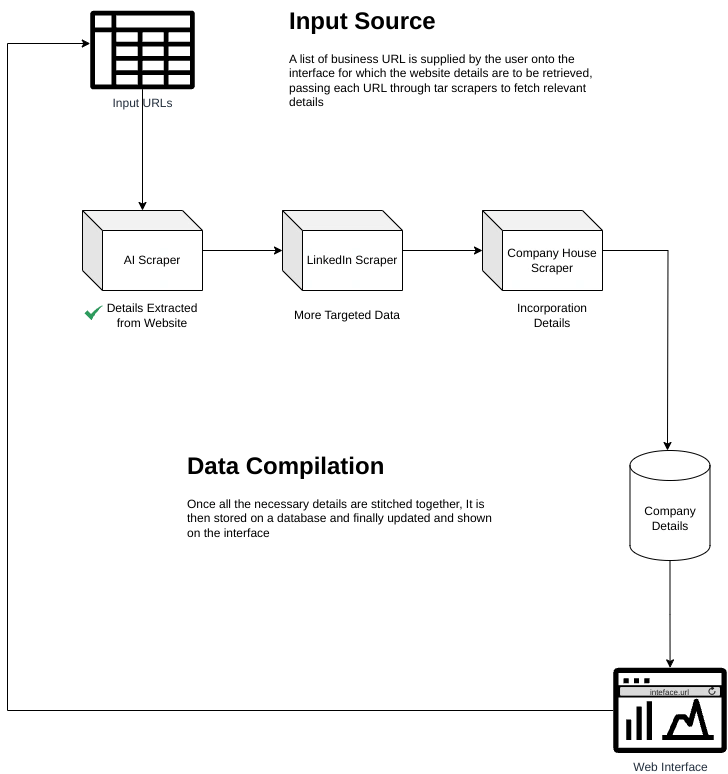

Workflow Design

Objectives

Automate Data Extraction: Utilize web scraping techniques combined with AI-driven analysis to gather comprehensive business details.

User-Friendly Interface: Provide a seamless experience for users to input data, view results, and manage their datasets.

Data Export: Enable users to export the extracted data in formats like CSV for easy integration with other tools.

Scalability and Deployment: Ensure the application is deployable on the cloud, facilitating accessibility and scalability.

Key Features

Automated Web Crawling

Customizable Workflows

Exportable Data

Interactive Dashboards

Batch Processing

Dynamic Data Handling: Supports adding, editing, and deleting rows of business data within the interface.

Status Indicators: Visual cues to indicate the status of data fetching (e.g., Fetching, Fetched, Error).

Local Storage Integration: Automatically saves data to localStorage to preserve user input across browser sessions.

Customizable Prompts: Future enhancements include allowing users to input custom prompts for tailored data extraction.

Robust Error Handling: Gracefully manages scenarios like protected emails and incomplete address information.
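The batch processing, status indicator, and error handling features listed above could fit together roughly as follows. This is a minimal sketch, not the application's actual code: `screen_one`, `screen_batch`, and the injected `fetch` callable are hypothetical names, and only the status labels (Fetching, Fetched, Error) come from the feature list.

```python
from concurrent.futures import ThreadPoolExecutor
from typing import Callable

# Status labels taken from the feature list above.
FETCHING, FETCHED, ERROR = "Fetching", "Fetched", "Error"


def screen_one(url: str, fetch: Callable[[str], dict]) -> dict:
    """Fetch details for one URL and record the outcome as a table row."""
    row = {"url": url, "status": FETCHING}
    try:
        row.update(fetch(url))  # hypothetical fetcher: returns extracted fields
        row["status"] = FETCHED
    except Exception as exc:  # one failing site must not abort the whole batch
        row["status"] = ERROR
        row["error"] = str(exc)
    return row


def screen_batch(
    urls: list[str], fetch: Callable[[str], dict], workers: int = 4
) -> list[dict]:
    """Process URLs concurrently; results come back in input order."""
    with ThreadPoolExecutor(max_workers=workers) as pool:
        return list(pool.map(lambda u: screen_one(u, fetch), urls))
```

Each row carries its own status, so the interface can show a per-row indicator while the rest of the batch continues.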

Use Cases

Investment Diligence

Market Landscape Analysis

Growth & Advisory Programs

Partnership Evaluation

Phases of Development

Phase I: Building Scraper + AI Retriever

Develop Web Scraper: Implement scripts to fetch and parse website content, extracting relevant information such as business name, contact details, and descriptions.

Integrate OpenAI API: Utilize OpenAI's GPT-4 model to analyze the scraped data and extract structured business details.

Standardize Data Fields: Ensure consistency in data categorization, aligning with industry standards like LinkedIn's categorization.

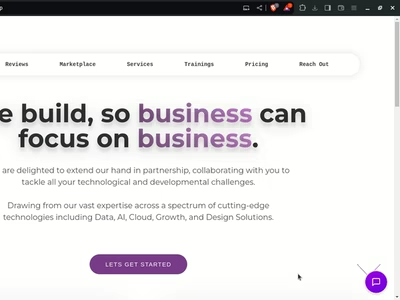
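The Phase I pipeline (scrape a page, hand its text to the model, get structured fields back) can be sketched as below. The field names in `FIELDS`, the prompt wording, and the helper names are assumptions for illustration. The model call is passed in as a plain `complete` callable so the sketch does not depend on any particular OpenAI client version.

```python
import json
from html.parser import HTMLParser
from typing import Callable

# Assumed field names; the real schema is not given in this write-up.
FIELDS = ["name", "description", "industry", "email", "address"]


class TextExtractor(HTMLParser):
    """Collect visible text, skipping <script> and <style> contents."""

    def __init__(self) -> None:
        super().__init__()
        self._skip_depth = 0
        self.parts: list[str] = []

    def handle_starttag(self, tag, attrs):
        if tag in ("script", "style"):
            self._skip_depth += 1

    def handle_endtag(self, tag):
        if tag in ("script", "style") and self._skip_depth:
            self._skip_depth -= 1

    def handle_data(self, data):
        if not self._skip_depth and data.strip():
            self.parts.append(data.strip())


def html_to_text(html: str) -> str:
    """Reduce raw HTML to a single line of visible text."""
    parser = TextExtractor()
    parser.feed(html)
    parser.close()
    return " ".join(parser.parts)


def build_prompt(page_text: str, max_chars: int = 6000) -> str:
    """Ask for JSON only, and cap the text sent to the model."""
    return (
        "Extract these fields from the website text and reply with JSON only, "
        f"using null for anything missing: {', '.join(FIELDS)}.\n\n"
        f"Website text:\n{page_text[:max_chars]}"
    )


def extract_details(html: str, complete: Callable[[str], str]) -> dict:
    """Run the prompt through `complete` and keep only the known fields."""
    data = json.loads(complete(build_prompt(html_to_text(html))))
    return {field: data.get(field) for field in FIELDS}
```

Missing fields come back as `None`, which leaves room for the interface to flag incomplete rows instead of failing on them.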

Phase II: Web Interface Development

1. Design Frontend: Create a React-based interface using Handsontable for dynamic data display and interaction.

2. Implement Features:

Input Mechanism: Allow users to input a list of business URLs.

Data Display: Present extracted data in an editable table format.

Export Functionality: Enable exporting data as CSV files.

3. Enhance User Experience: Incorporate responsive design principles and intuitive navigation.
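The CSV export step can be illustrated with a short sketch. The write-up does not say whether export runs in the browser or on the Flask backend; this version assumes a server-side helper, and `rows_to_csv` and the column names in the usage example are hypothetical.

```python
import csv
import io


def rows_to_csv(rows: list[dict], columns: list[str]) -> str:
    """Serialize table rows to CSV text with a fixed column order."""
    buffer = io.StringIO()
    writer = csv.DictWriter(
        buffer,
        fieldnames=columns,
        extrasaction="ignore",  # drop keys that are not exported columns
        lineterminator="\n",
    )
    writer.writeheader()
    for row in rows:
        # Missing values become empty cells rather than raising an error.
        writer.writerow({col: row.get(col, "") for col in columns})
    return buffer.getvalue()
```

For example, `rows_to_csv([{"name": "Acme, Inc.", "status": "Fetched"}], ["name", "country"])` quotes the name because it contains a comma, leaves the country cell empty, and omits the status column.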

Phase III: API Integration and Cloud Deployment

1. Backend Optimization: Refine Flask API endpoints for efficient data handling and processing.

2. Secure Deployment: Use ngrok for secure tunneling during development and deploy the application on a cloud platform for production.

3. Monitor and Scale: Implement monitoring tools to track application performance and scale resources as needed.

Project Timeline

Phase I: Building Scraper + AI Retriever

19.10.2024 Completed the standardization of industry categories and set constraints on description lengths.

20.10.2024 Enabled multi-location support, and refined AI prompts.

23.10.2024 Enhanced industry tagging using the LinkedIn standard and implemented changes from feedback and discussions.

25.10.2024 [Delivered Phase I] Ensured consistency in country naming conventions.
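Two of the Phase I milestones above, consistent country naming and constrained description lengths, amount to small post-processing steps on the extracted fields. The sketch below shows one way to do this; the alias table, the 300-character limit, and the function names are all assumptions, not values from the project.

```python
# Assumed alias table; a real one would cover many more spellings.
COUNTRY_ALIASES = {
    "uk": "United Kingdom",
    "u.k.": "United Kingdom",
    "great britain": "United Kingdom",
    "usa": "United States",
    "u.s.": "United States",
    "united states of america": "United States",
}

MAX_DESCRIPTION_CHARS = 300  # assumed limit


def normalize_country(name: str) -> str:
    """Map known spellings to one canonical name; pass others through."""
    cleaned = name.strip()
    return COUNTRY_ALIASES.get(cleaned.lower(), cleaned)


def clamp_description(text: str, limit: int = MAX_DESCRIPTION_CHARS) -> str:
    """Collapse whitespace and cut at a word boundary within the limit."""
    text = " ".join(text.split())
    if len(text) <= limit:
        return text
    cut = text[: limit - 3].rsplit(" ", 1)[0]
    return cut.rstrip(" ,.;:") + "..."
```

Applying these after the AI step, rather than relying on the prompt alone, keeps the output consistent even when the model's wording varies.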

Phase II: Web Interface Development

28.10.2024 Discussed frontend expectations and initiated development with basic feature implementations.

03.11.2024 Delivered a sample design for feature review and potential enhancements.

08.11.2024 [Delivered Phase II] Presented a working prototype demonstrating MVP operations.

Phase III: API + Interface Binding and Hosting

09.11.2024 Deployed the API on AWS, configured the domain, and set up continuous monitoring.

10.11.2024 [Delivered Phase III] Delivered the web app and discussed future enhancements.

Ideas for Future Enhancements

API Key Integration

Dynamic API Input: Implement an API input feature that allows users to add their own GPT API keys directly through the interface. This flexibility will help users manage and potentially reduce GPT-related costs, especially when sharing the app with others.

User Authentication

Login Functionality: Introduce a secure login system to authenticate users. This will enhance security, restrict unauthorized access, and prevent misuse of the application.

Scalability Improvements

Server Scaling: Increase server capacity and optimize resource allocation to handle higher loads. Currently, the application utilizes multiple distributed services, and heavy usage may lead to server overloads.

Component Scaling: Scale individual components and processes to ensure smooth operation under increased demand.

Enhanced Data Scraping and Insights

Additional Data Sources: Integrate scraping capabilities from platforms like Companies House and Crunchbase to enrich the business data pool.

Advanced Insights: Provide deeper insights based on website data, including fetching relevant news headlines about the companies from across the internet.

Advanced Scraping Techniques

Handling Scraping Challenges: Address issues like IP blocking, CAPTCHAs, and Cloudflare bot protections by implementing more sophisticated scraping methodologies. This may include using proxies, rotating IP addresses, and integrating CAPTCHA-solving services to ensure uninterrupted data extraction.
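As a rough illustration of the proxy rotation idea, the sketch below retries a request through the next proxy in a list whenever the previous attempt fails. `fetch_with_rotation` and the injected `fetch(url, proxy)` callable are hypothetical, and CAPTCHA solving is out of scope here.

```python
import itertools
from typing import Callable


def fetch_with_rotation(
    url: str,
    proxies: list[str],
    fetch: Callable[[str, str], str],
    max_attempts: int = 3,
) -> str:
    """Try the request through successive proxies until one succeeds."""
    if not proxies:
        raise ValueError("at least one proxy is required")
    pool = itertools.cycle(proxies)  # wrap around when the list is exhausted
    last_error = None
    for _ in range(max_attempts):
        proxy = next(pool)
        try:
            return fetch(url, proxy)  # hypothetical fetcher taking a proxy
        except OSError as exc:  # covers connection errors and urllib's URLError
            last_error = exc
    raise RuntimeError(f"all {max_attempts} attempts failed for {url}") from last_error
```

A production version would likely add a delay between attempts and retire proxies that fail repeatedly.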

Cost Optimization Strategies

GPT Cost Minimization: Experiment with more efficient approaches for utilizing GPT, such as optimizing prompt designs, reducing unnecessary API calls, and exploring alternative models to minimize operational costs without compromising functionality.
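One way to reduce unnecessary API calls is to cache model responses by page content, so the same text is never sent twice. The sketch below assumes this approach; `CachedExtractor`, the injected `complete` callable, and the 6000-character cap are illustrative choices, not part of the current application.

```python
import hashlib
from typing import Callable


class CachedExtractor:
    """Wrap a model call with an in-memory cache keyed by page content."""

    def __init__(self, complete: Callable[[str], str], max_chars: int = 6000) -> None:
        self._complete = complete
        self._max_chars = max_chars  # cap input size to limit token usage
        self._cache: dict[str, str] = {}
        self.api_calls = 0

    def extract(self, page_text: str) -> str:
        trimmed = page_text[: self._max_chars]
        key = hashlib.sha256(trimmed.encode("utf-8")).hexdigest()
        if key not in self._cache:
            self.api_calls += 1  # only cache misses reach the model
            self._cache[key] = self._complete(trimmed)
        return self._cache[key]
```

Because the key is computed on the trimmed text, two pages that differ only beyond the cap share one cached response, which saves calls at the cost of ignoring the tail of long pages.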

Conclusion

The Business Screener web application bridges the gap between data extraction and user-friendly data management. By automating web crawling and AI-driven extraction, the project gives businesses a practical way to gather and analyze essential information. Ongoing enhancements and future developments aim to further refine the application's capabilities, ensuring it remains a valuable asset for its users.

Posted Feb 8, 2025
