WAVE / FORM: Theorizing a Discrete Sonic Materialism

*This article was featured in Adjacent, a journal of emerging technology affiliated with NYU's Interactive Telecommunications Program.

I very vividly remember the momentous occasion when I received my first iPhone. I was in 7th grade, it was a gift from my parents for my 12th birthday. Prior to my integration of the smartphone into a cybernetic-level relationship of dependency, I used a flip phone, and eventually one of those highly coveted cellphones with the slide out keyboard (these held a degree of cultural currency when I was in middle school). The mobile phones I had before my iPhone gave me sonic feedback with the push of every key; the beeps and vibrations became so tacit I barely noticed them, I took them as a scalable thing-at-hand, unnoticed, uninterrogated. Until I got my iPhone.

It was noisy, too, even more noisy than the other phones before it. It made those clicking sounds when I typed, emitted that “bubbly” noise when I sent a message (think about it, you know what I mean), and returned the familiar melodic tones of an analog phone when I pressed the flat “keys” to make a call. Then, suddenly, it stopped doing this. My phone fell silent, and it freaked me out—I assumed it was broken until I consulted Google. I was informed by Apple’s help page that there was actually a button on the side of my phone that easily turned the entire OS’ sound on and off, and I must’ve accidentally pressed it. I switched my phone back, and the sound I was so reliant on for direction through uncharted digital territory resumed as it had before. Relief flooded through my body; though I never before gave much consideration to audio cues, in this instance I realized they actually played quite a significant role in directing my participation and performance within an enclosed digital architecture.

Of course, I didn’t quite have the vernacular to describe interface metaphors at age 12. Examining the event retrospectively, though, the implementation of audio icons had a profound influence on my empirical assessments of digital materials. They directed my cognitive associations like a non-verbal language of sorts, communicating without enunciating , or guiding without directly telling me this or that. One might argue that my concern over the absence of a sonic interface was simply the result of a more complete integration of aural information into my tacit understanding of How Things Work, that I just responded in such a way because I was accustomed to hearing and seeing at the same time. To say this, though, would be grossly technologically deterministic and, if further extended, might assume the dangerous behaviorist approach—this opinion ignores the complex imbrication of human intention and material agency within sociotechnical systems, and questions the existence of free will, for example, B.F. Skinner’s “superstition experiment” with the trained pigeon: the bird pushes a button not because it makes relational semiotic judgments about what will happen, but because it is hungry, and accustomed to receiving food if it presses the button. Pure stimulus response.

In current academic conversations surrounding sound and sonic materialism, or the physical and spatial qualities of sound, it has been widely posited that sound evades representation, it doesn’t abide by the signified/signifier relationship that prosaic language does, but follows more of a poetic ontological mode—its expression is tightly coupled to the content itself. What I mean by this is that sound does not dichotomize between what is experienced subjectively as a felt duration and what that the experience means in a linguistic manner. My experience with my iPhone, though, has led me to believe otherwise. The “digital sounds” which have become deeply embedded in our quotidian lives are signifying: they assume a specific referent, and depend on that referent for coherence. Referents, for those who aren’t familiar with semiotics, are the object or phenomenon that a sign or symbol stands for, usually arbitrary. As I will explain later though, the signal/referent relation between a digital sound and its signified are often experienced simultaneously, as a shared impression amongst disparate bodies—semiotics permit us to comprehend a panoply of heterogenous phenomena by the same token. What should be an experience of the singular, varying from individual to individual, coagulates into qualia , which Lily Chumley and Nicolas Harkness define as an experience of “things in the world”, a fundamental unit of aesthetic perception. Sound is part of that qualic system of valuation. The beauty (or horror, depending on your perspective) of GUI’s is that they are semiotic. They exist for us and because of us and will never confront users with an “in-itself” ontology . In fact, the very material composition of digital sounds in their discreteness presupposes a simulative nature, these noises are deliberate and adhere to strict models based on an existing analog reality in efforts to reproduce the same psychical response we have to feeling sound as a interior temporality, or the way we hear sound resonate inside of us as subjects, despite its exterior constitution.

Let us briefly recourse to a history of digital sounds, beginning at Bell Labs in the late 1960’s. Computers had been making noise as an epiphenomenon since the genesis of the ENIAC , but it was computer scientist Max Matthews who wrote the first actual music program, called MUSIC. It initially only played simple tones, but as Matthews continued to develop the software, he released different iterations that built off of the previous version’s capacities; MUSIC II introduced four-part polyphony and ran on largest mainframe computer available. MUSIC III made use of a new “unit generator” subroutine that required minimal input from the composer, making the whole process more accessible. MUSIC IV and MUSIC V further tempered the program, and the technology for computer composition, both hardware and software, began to proliferate across various research centers and universities including MIT, UC San Diego, and Stanford.

Despite these advances, the process of computer composition remained tedious and often times grueling. From one account:

“A typical music research center of the day was built around a large mainframe computer. These were shared systems, with up to a dozen composers and researchers logged on at a time. (Small operations would share a computer with other departments, and the composers probably had to work in the middle of the night to get adequate machine time.) Creation of a piece was a lot of work. After days of text entry, the composer would ask the machine to compile the sounds onto computer tape or a hard drive. This might take hours. Then the file would be read off the computer tape through a digital to analog converter to recording tape. Only then would the composer hear the fruits of his labor.”

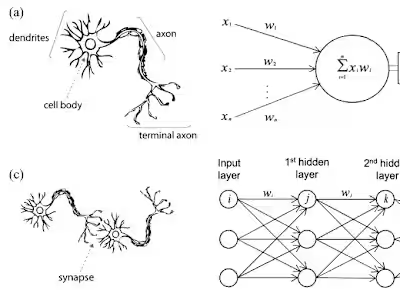

It wasn’t until Matthews devised the hybrid system that allowed a computer to manipulate an analog synthesizer that digital music really became fathomable for a more mainstream public, as the analog synth was the most commonly sought after mechanism for music production at the time. Coincidentally, Matthews discovered that the elemental circuitry of a sequencer is actually digital. A sequencer is the apparatus that allows one to record audio using a keyboard and play it back, but the essential difference between a sequencer and a basic audio recording is that a sequencer records sound as data. “Think of it as a word processor for music,” Phil Huston explains. What this means is that the way sequencers operate is necessarily fragmented and discrete, it represents a sound in various informational forms, breaking down a continuous sound wave into, well, a sequence of digits. Here, what has been posited as the a-signifying or a-subjective (autonomous) quality of sonic materialism becomes questionable, because digital sound is built off of different types of models of the acoustical and physical sort. Acoustical models are based on the sensation of hearing itself, as a person receiving information and integrating it into their subjective experience of the sound’s texture; Chris Chafe aptly calls this method “psychoacoustical.” Physical models focus on the “objective” materiality of sound, timbre, vibrations, frequency, etc., the very literal composition of a sound source. Chafe tells us that this modeling technique is based on analyzing the “principles”, a set of patterned dynamics, and building from the granular up, adding new variations that seems significant. Though these models and diagrams seem to make no distinction between content and expression, we have to remember that they exist to signify or replicate an analog referent, in this case, the numbers themselves (which follow sets of parameters) are bound to something else, they are made from the particularities of a certain input, and they refer to this input through their very existence.

So, where does this leave us now? We have made great advancements since the advent of digital music, but, if we deconstruct the fundamental continuities behind the whole process of digital sound creation, the semiotics remain the same. The digital music of Matthews’ time existed for the purpose of replicating the experience of sound through informational representation. We think of OS sounds differently today—though analog synth composition is still very fashionable it is not what most people would think of if I say the words “digital sound” together. One of the most ubiquitous desktop tunes of the past three decades is ambient musician Brian Eno’s composition for the Windows startup interface. This melody can be thought of as a “music logo” of sorts; it has come to signify something about the essence of Windows as a brand, to communicate a feeling to us and direct us to participate with technologies in a particular way.

Since I began studying skeuomorphism for a thesis project, I became fascinated with auditory icons and “earcons”, those noisy cues which communicate information about an OS object, event, or function. Signifying sounds are mostly limited to practical applications in the fields of UX design and human-computer interaction, as well as integration into vehicular atmospheres and medical equipment, if we consider interfaces in the broader sociotechnical sense. The purpose of audio cues is to direct bodies and command a subject to behave in a certain way. We encounter these sounds every day in moments of interface, both digital and otherwise (i.e. when driving a car or riding the subway). They are commonly applied to enhance user reaction time and accuracy, learnability, and overall performance. While these aural messages are valuable tools for engagement with an operating system, they also illuminate much about analog materials themselves, adding a new dimension to “sonic materialism” once characteristics like timbre, frequency, and resonance are not only coupled to a referent, but derive all purpose and meaning from these parent forms.

It is important to distinguish between auditory icons and earcons, as their origins, both psychoacoustical and practical, are not the same. The term “earcon” was developed by computer scientist Meera Blattner, and is used to describe manufactured sounds, new additions to the sonic lexicon such as simple tones and beeping. Earcons are more of an indirect audio cue used to communicate information about a certain interface object or event, aesthetically they play a significant role in reticulating the interface that I would argue is equivalent to visual designs themselves. Auditory icons, developed in 1986 by Bill Gaver, serve the same purpose but instead emulate a familiar sound we already have established associations with.

Most published work on auditory icons is found in the forms of patents or review articles, so the field of aural interface interaction is largely undertheorized. Auditory icons were originally used in the field of informatics to supplement the desktop metaphor with all of its skeuomorphic buttons, the idea was to ensure digital technologies were as intuitive as possible by using discrete features to represent an analog reality, much like the application of a sequencer in analog synth composition. In the instance of UI, and digital sound in general, representation is indispensable. Interfaces cannot persist without signification, and they rely on interplay between aural and visual cues to foment universal accessibility . Usability/learnability is the most coveted and evaluated attribute of audio icon; as a metric, user testing indicates how, when, and where certain icons may be properly implemented, and how successful they may be in providing direction through reference. The necessity of user testing itself proves that this genre of sound mandates reception by a listening subject, their own individual synthesis, and making their own sense out of a material reality.

Gaver proposed two metrics for evaluating auditory icons. One surveys the icon’s “proximal stimulus qualities”: these are the material attributes of the sound, like frequency, duration, and intensity. The other judges “distal stimulus qualities” which are related to how accurately the icon’s materials represent the intended referent. Mapping is also essential for the production of auditory icons, and as a practice it is quite literally exactly what it sounds like. Think of that Borjes quote from “On Exactitude in Science”: “…In that Empire, the Art of Cartography attained such Perfection that the map of a single Province occupied the entirety of a City, and the map of the Empire, the entirety of a Province. In time, those Unconscionable Maps no longer satisfied, and the Cartographers Guilds struck a Map of the Empire whose size was that of the Empire, and which coincided point for point with it.”

The idea is for mapping to appear natural, the sound an icon makes when you click on it should seem intuitive and reflect the physical qualities of the digital materials at hand. The more believable the mapping, the more closely coupled the sound to the analog referent. For example, a “heavier” object will emit a lower tone, a lighter object corresponds to higher frequency. Mapping accounts for a more total relationship between two qualitatively unrelated substances (and treats sound more as a substance than an event), it “maps” our existing concept structures onto a digital interface through simulated likeness. Gibson’s theory of affordances, which brought the ecological attitude to perception, espouses the idea that we cannot experience the world directly and thus grasp phenomena through signifying chains of representation. Mapping, which was taken from visual studies, ensures that UI sounds are scaled to the size of the empire; they are composed according to the qualities of a referent and function solely as a synthetic surrogate.

Gaver offers three different categories of auditory icons: symbolic icons are the most abstract of the triad; they have a completely arbitrary relationship with their intended referent and hold only by repeated and routinized association with such referent. Metaphorical icons are not entirely arbitrary, but they simply gesture, they provide direction to the referent without offering a description of it, and they usually coexist with the referent in our actual sonic environment. Iconic/nomic icons physically and materially resemble the source of the referent sound, and they are cited as the strongest mappings, meaning they derive positive results from user testing. The choice of which audio icon to use in a specific instance is entirely dependent on the way the digital environment is arranged, and how closely that environment can be configured to activate analog associations.

The goal in applying auditory cues to computing is to collapse the boundary between sight and sound, to synthesize a more total UI experience. Auditory icons function as an aural syntagma, their purpose is to direct; their materials do not operate independently of reception and cannot be excised from the signified/signifier algorithm. UI sonic environments are necessarily signifying, and material intensive quantities, or the attributes of the sound that can be represented numerically, serve a semiotic purpose. From Cabral and Remijn’s article, “Physical features of the sound(s) used in auditory icons, such as pitch, reverberation, volume, and ramping, can be manipulated to convey, for example, the perceived location, distance, and size of the referent.” The sounds of our digital lives, and particularly the aforementioned processes of aural discretization, complicate sonic materialism as a content-blind approach. The mythology of sound as pure expression without any underlying meaning cannot hold for digital noises. Within the confines of a UI, and even in the case of ostensibly pure information (the sequencer’s role within a synthesizer), content and intention are everything. Conversion from the continuous to the discrete does not discard of the continuous just because the formal qualities of the information have changed, there is still a coherence of sense across different means of expression, a residual meaning structure. Numbers, in this instance, are still qualic, because the prior phenomenal is communicated through sequences of numbers. Like my reliance on the aural reassurance of my iPhone’s functionality, I needed sound to make sense of my digital surroundings, to align my experience with some recognizable mode of participation, ultimately enabled by the ways in which sound is configured materially.

Like this project

Posted Aug 1, 2024

How can we better understand the audio interfaces that surround us? A brief history of digital sounds and their contemporary sociotechnical implications.