Big Data Aggregation and Processing System

Posted Dec 30, 2024

A robust and performant data ETL / ELT system was developed as a solo project, working with TBs of data on the order of billions of rows.

Summary 💎

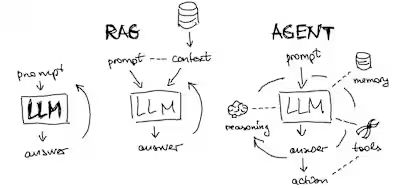

A robust and performant data ingestion and processing system was developed as a solo project. The workflow involved aggregating large quantities of data, on the order of billions of data points, from multiple sources, then cleaning, standardizing, and extracting useful metrics from the data for eventual use in a web app. The system was deployed on AWS EC2 and ECS using Apache Airflow and, later, Dagster for observability and scheduling. Databricks was used for distributed analysis with PySpark.

Core Features ✅

Observability, scalability, and scheduling achieved via carefully configured orchestration tools like Airflow and Dagster.

Full ETL on data from over 100 million distinct URLs as well as other sources in under 24 hrs.

Handling TBs of data effectively.

Well-managed concurrency of job steps to minimize runtime and, therefore, costs.

Well-defined, relational DB architecture that respects normal forms.

Error handling, recovery, and failure notification.
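The concurrency and error-handling features above can be illustrated with a minimal sketch. It is not the project's actual orchestration code (that ran on Airflow and Dagster); the names `run_with_retry`, `run_steps`, and `notify` are hypothetical, and a thread pool stands in for the orchestrator's own concurrency controls. Independent steps run in a bounded pool, transient errors are retried with exponential backoff, and steps that still fail are reported through a notification callback.

```python
import time
from concurrent.futures import ThreadPoolExecutor, as_completed


def run_with_retry(step, retries=3, base_delay=1.0):
    """Call step() and retry on any exception, doubling the delay each time."""
    for attempt in range(1, retries + 1):
        try:
            return step()
        except Exception:
            if attempt == retries:
                raise  # out of attempts: let the caller record the failure
            time.sleep(base_delay * 2 ** (attempt - 1))


def run_steps(steps, max_workers=4, retries=3, base_delay=1.0, notify=print):
    """Run independent steps concurrently and return (results, failures).

    steps maps a step name to a zero-argument callable. notify is a
    hypothetical hook; in practice it might post to email or chat.
    """
    results, failures = {}, {}
    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        futures = {
            pool.submit(run_with_retry, fn, retries, base_delay): name
            for name, fn in steps.items()
        }
        for future in as_completed(futures):
            name = futures[future]
            try:
                results[name] = future.result()
            except Exception as exc:
                failures[name] = exc
                notify(f"step {name!r} failed: {exc}")
    return results, failures
```

Capping `max_workers` keeps the job from overwhelming upstream sources or the database, while still shortening total runtime compared with running steps one after another.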

Approach 🏗️

ETL was the default approach: data was processed in memory on the deployed Airflow / Dagster instances. On some occasions an ELT approach was taken instead, when the data was better suited to being transformed directly in the DB, for example when applying a simple transformation, like truncating a string, to a larger-than-memory dataset. Databricks was used when the required analysis was more complex than what could be achieved in SQL alone.
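The ELT case can be sketched as a single `UPDATE` that the database executes itself, so no rows are pulled into application memory. This is an illustration only: it uses Python's built-in `sqlite3` as a stand-in for PostgreSQL so that it runs anywhere, and the table `pages`, the column `title`, and the limit `MAX_LEN` are hypothetical. `substr` and `length` also exist in PostgreSQL, though a PostgreSQL driver would typically use a different placeholder style than `?`.

```python
import sqlite3

MAX_LEN = 10  # hypothetical maximum length for the column

# In-memory SQLite database standing in for the real PostgreSQL instance.
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE pages (id INTEGER PRIMARY KEY, title TEXT)")
conn.executemany(
    "INSERT INTO pages (title) VALUES (?)",
    [("short",), ("a title that is far too long",)],
)

# The transformation runs inside the database engine. Only rows that
# exceed the limit are rewritten, and nothing is fetched to the client.
conn.execute(
    "UPDATE pages SET title = substr(title, 1, ?) WHERE length(title) > ?",
    (MAX_LEN, MAX_LEN),
)
conn.commit()

titles = [row[0] for row in conn.execute("SELECT title FROM pages ORDER BY id")]
print(titles)
```

On a very large table, the same statement could be applied in batches over ranges of the primary key to keep each transaction short.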

Basic Steps:

Extract data from a number of public online sources via a combination of scraping, public APIs, and API reverse engineering.

Clean and standardize the data. Use statistical methods to isolate and handle anomalous data points. Standardize using well-defined, reproducible approaches.

Extract meaning and value from data chunks through analysis.

Write data to PostgreSQL DB.

(Optional step) Send large datasets on to Databricks for distributed processing.
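The cleaning step can be illustrated with one common statistical approach to anomalies. The specific method used in the project is not stated, so the modified z-score below (based on the median and the median absolute deviation) is an assumption, as are the function name `split_outliers` and the threshold of 3.5. The sketch uses only the standard library; the same logic maps directly onto Pandas.

```python
from statistics import median


def split_outliers(values, threshold=3.5):
    """Split values into (kept, outliers) using the modified z-score.

    The score is 0.6745 * |x - median| / MAD, where MAD is the median
    absolute deviation. Median-based statistics are used because a few
    extreme points barely shift them, unlike the mean and standard deviation.
    """
    values = list(values)
    if not values:
        return [], []
    med = median(values)
    mad = median(abs(v - med) for v in values)
    if mad == 0:
        # More than half the values are identical, so the score is
        # undefined. This sketch keeps everything in that case.
        return values, []
    kept, outliers = [], []
    for v in values:
        score = 0.6745 * abs(v - med) / mad
        (outliers if score > threshold else kept).append(v)
    return kept, outliers


kept, outliers = split_outliers([10.0, 11.0, 9.5, 10.5, 10.2, 250.0])
print(kept, outliers)
```

Returning the rejected points separately, rather than silently dropping them, keeps the step reproducible and lets anomalous records be archived or inspected later.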

Tools 🛠️

Apache Airflow and Dagster - Workflow orchestration, monitoring, and scheduling.

Pandas - Handled the majority of data processing and analysis in cases where data was received in smaller, manageable chunks.

Databricks + PySpark - Big-data distributed processing and analysis.

AWS EC2 - Deployment of self-hosted Airflow instance.

AWS ECS - Deployment of self-hosted Dagster instance (we later switched to the cloud-hosted version for easier console access management).

AWS CloudFormation - IaC solution for managing deployment of necessary AWS resources.

AWS S3 - Object storage and data archival.

PostgreSQL - Used as central DB to manage all the data. Sometimes also used for processing. Additionally, interfaced with the backend API to serve the app.