Credit Card Fraud Transaction Model

Like this project

Posted Sep 26, 2024

Machine Learning model to detect whether transactions are fraudulent or non-fraudulent

Likes

0

Views

10

Credit card fraud is a significant problem in the financial industry, costing 30 billion in losses from 2014-2022 (Best, 2024). In this project, I explored how XGB can be used to detect fraudulent credit card transactions. I used a real-world dataset from Kaggle, which contains a mix of fraudulent and non-fraudulent transactions.

I developed a sophisticated credit card fraud detection model using the XGBoost algorithm, demonstrating my proficiency in handling complex, real-world data challenges. The project involved extensive data preprocessing and feature engineering on a diverse customer information dataset.

I addressed several key challenges, including dealing with irrelevant columns and missing values through strategic imputation techniques. I employed Variance Inflation Factor analysis to combat multicollinearity, ensuring stable model estimates. The project also required tackling imbalanced data, a common issue in fraud detection, which I resolved using undersampling and oversampling methods. My feature engineering efforts included creating industry-standard financial ratios like Loan-to-Value (LTV) and Loan-to-Income (LTI), enhancing the model's predictive power. I applied Principal Component Analysis for dimensionality reduction to optimize performance and reduce noise.

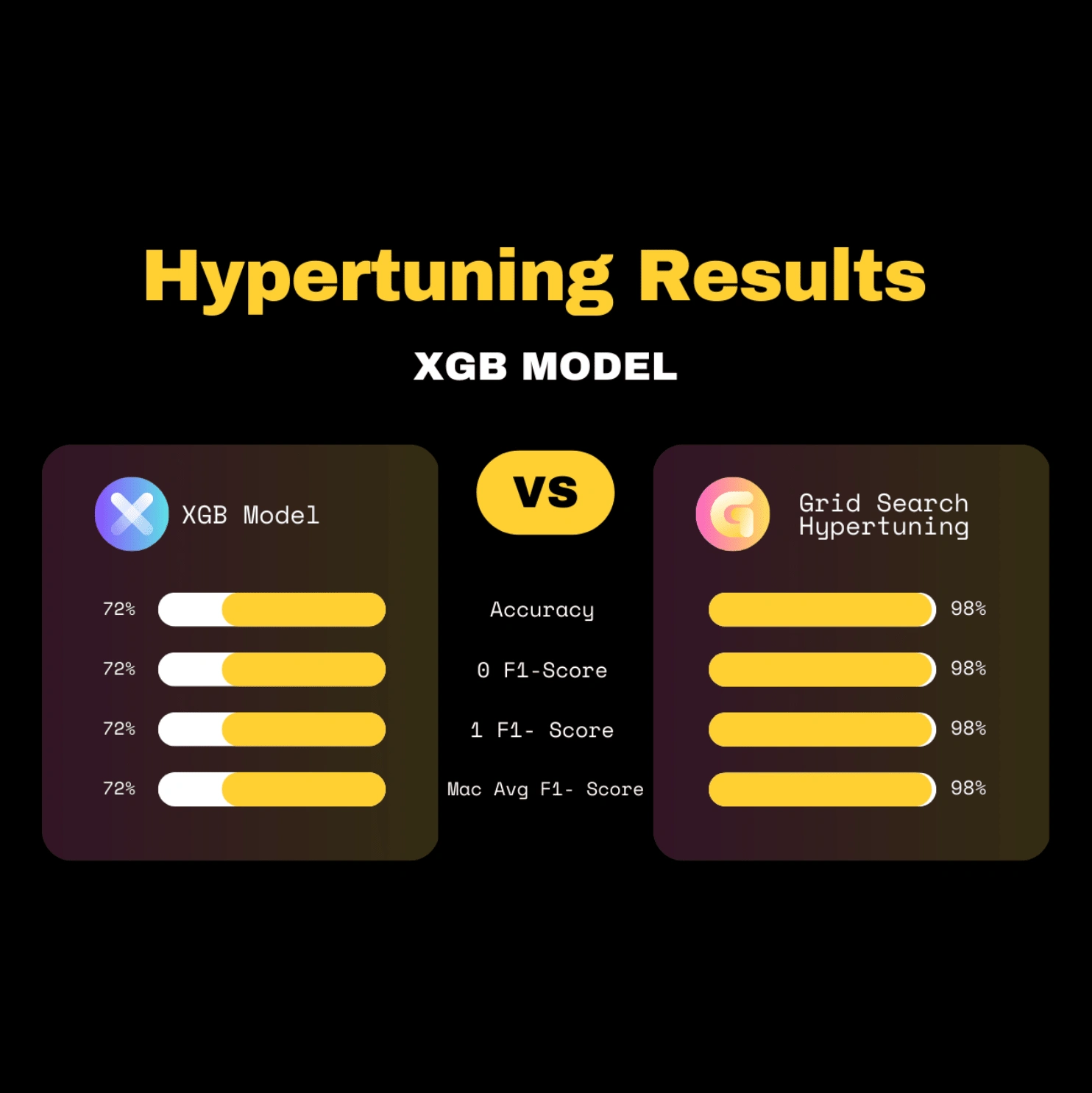

The final model was fine-tuned using grid search

The model with hyperparameter tuning using grid search has a significantly better performance compared to the original XGBoost model.

The accuracy and macro average F1-score have improved from 0.71 to 0.98, indicating a substantial increase in the model's ability to correctly classify both classes.

The F1 scores for individual classes have also improved, showing that the tuned model performs better for each class. This demonstrates the importance of hyperparameter tuning for improving model performance.

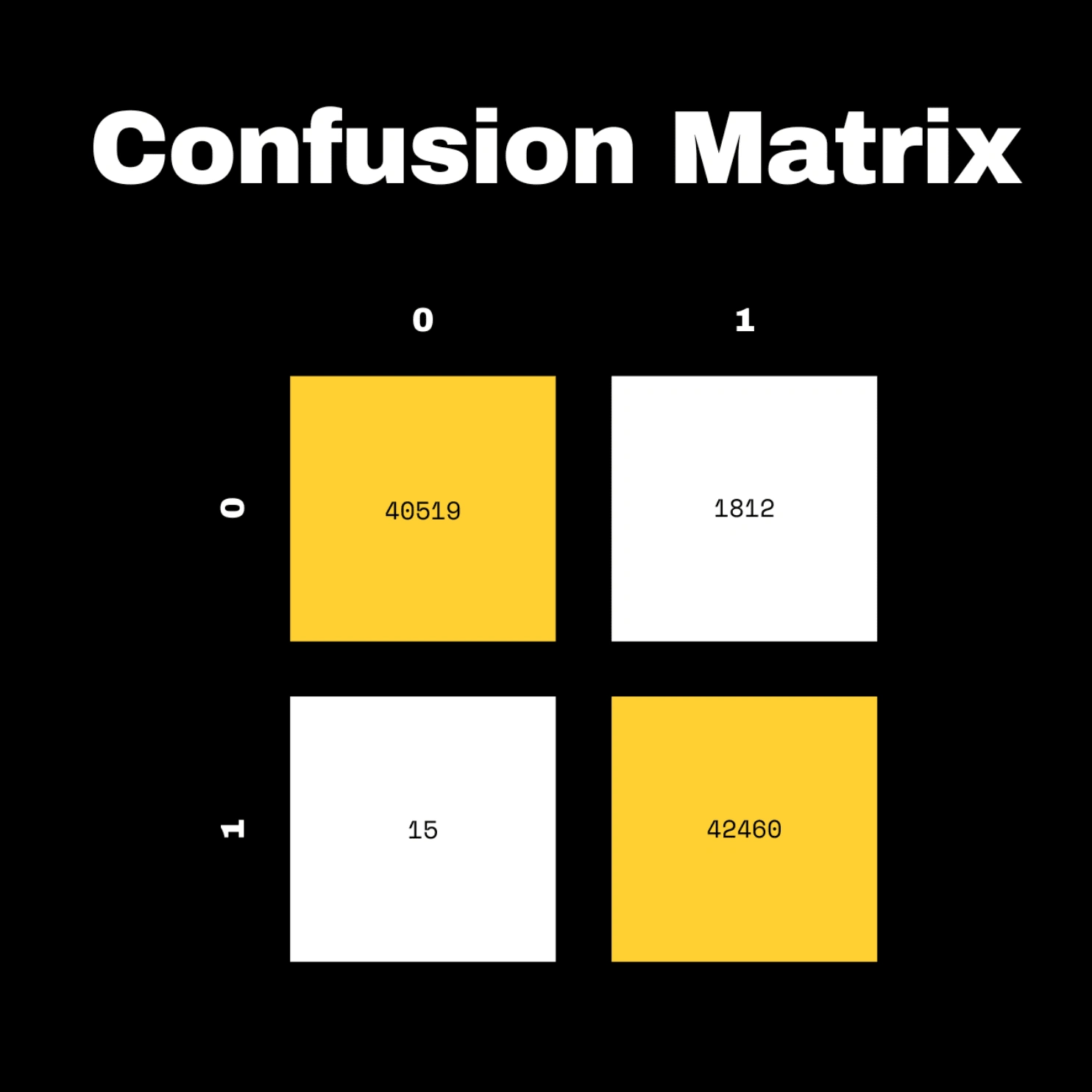

A confusion matrix is a table that is used to describe the performance of a classification model on a set of data for which the true values are known.

Here's the interpretation:

40,519 instances were correctly predicted as class 0 (True Negatives).

1,812 instances were incorrectly predicted as class 1 when they were actually class 0 (False Positives).

15 instances were incorrectly predicted as class 0 when they were actually class 1 (False Negatives).

42,460 instances were correctly predicted as class 1 (True Positives).