End-to-End Data Collection and Processing Automation

Client Overview

Client: A data broker with a team of 3–4 employees

Industry: Data Brokerage

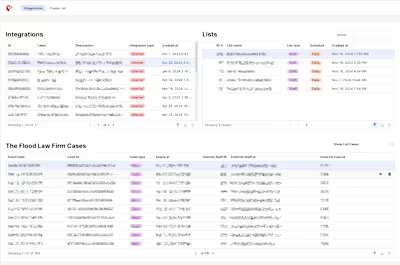

Challenge: The client manually gathered, processed, and uploaded data from 3 websites into their database daily. This process was time-consuming, error-prone, and limited the client’s ability to scale.

Problem Statement

The client’s existing workflow involved:

Manually gathering data from three different websites.

Compiling the data into an Excel sheet.

Importing the processed Excel sheet into their website’s database.

This process consumed significant time and resources, constrained scalability, and prevented the client from focusing on expanding their business.

Solution Delivered

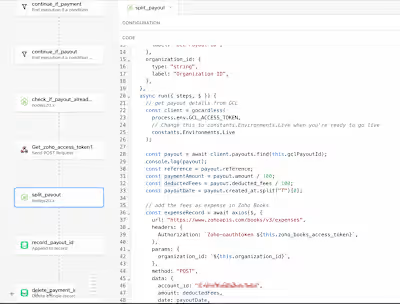

I designed and implemented a fully automated solution to streamline the entire workflow:

Data Collection Automation:

Deployed web scraping scripts to extract data from the target websites.

Built in robust handling of changing website structures and large data volumes.
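The case study does not say how the scrapers cope with failures. One common way to make scraping jobs tolerant of transient problems (timeouts, rate limits, pages that fail to load) is to wrap each fetch in a retry with exponential backoff. The sketch below is dependency-free JavaScript; `retryWithBackoff`, its parameters, and the delay values are hypothetical and not taken from the client's code. In a Puppeteer scraper the wrapped operation would typically be something like `() => page.goto(url)`.

```javascript
// Hypothetical helper, not from the original project: retries an async
// operation, doubling the wait after each failure (exponential backoff).
const sleep = (ms) => new Promise((resolve) => setTimeout(resolve, ms));

async function retryWithBackoff(operation, { attempts = 3, baseDelayMs = 500 } = {}) {
  let lastError;
  for (let attempt = 0; attempt < attempts; attempt++) {
    try {
      return await operation(attempt);
    } catch (err) {
      lastError = err;
      if (attempt < attempts - 1) {
        // Waits baseDelayMs, then 2x, then 4x, ... between attempts.
        await sleep(baseDelayMs * 2 ** attempt);
      }
    }
  }
  // Every attempt failed: surface the last error to the caller.
  throw lastError;
}

// Usage: an operation that fails twice, then succeeds on the third attempt.
let calls = 0;
retryWithBackoff(async () => {
  calls += 1;
  if (calls < 3) throw new Error("temporary failure");
  return "page loaded";
}, { attempts: 3, baseDelayMs: 10 }).then((result) => console.log(result, calls));
// prints "page loaded 3"
```

Keeping the retry policy in one helper means every scraper shares the same behavior, and a page that keeps failing still raises an error instead of silently producing an empty result.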

Data Processing:

Integrated scripts to clean, validate, and format the data automatically.

Ensured data consistency to meet the client’s database requirements.
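The cleaning and validation rules are not spelled out above. As a minimal sketch, assuming scraped records arrive as plain objects with hypothetical `name`, `email`, `phone`, and `source` fields, a processing step could normalize whitespace and casing, validate each record, set aside the ones that fail, and drop duplicates collected from more than one site.

```javascript
// Hypothetical record shape and rules; the real fields and checks depend on
// the client's database schema, which the case study does not describe.
function cleanRecord(raw) {
  return {
    name: String(raw.name ?? "").trim().replace(/\s+/g, " "), // collapse whitespace
    email: String(raw.email ?? "").trim().toLowerCase(),
    phone: String(raw.phone ?? "").replace(/\D/g, ""), // keep digits only
    source: raw.source,
  };
}

function validateRecord(rec) {
  const errors = [];
  if (!rec.name) errors.push("missing name");
  // Email and phone are treated as optional: checked only when present.
  if (rec.email && !/^[^\s@]+@[^\s@]+\.[^\s@]+$/.test(rec.email)) errors.push("invalid email");
  if (rec.phone && rec.phone.length < 7) errors.push("phone too short");
  return errors;
}

function processBatch(rawRecords) {
  const valid = [];
  const rejected = [];
  const seen = new Set();
  for (const raw of rawRecords) {
    const rec = cleanRecord(raw);
    const errors = validateRecord(rec);
    if (errors.length > 0) {
      rejected.push({ record: raw, errors });
      continue;
    }
    // De-duplicate on email (or name when email is empty) so the same entity
    // scraped from several sites is only kept once.
    const key = rec.email || rec.name.toLowerCase();
    if (seen.has(key)) continue;
    seen.add(key);
    valid.push(rec);
  }
  return { valid, rejected };
}

const result = processBatch([
  { name: "  Jane   Doe ", email: "JANE@Example.com ", phone: "(555) 123-4567", source: "site-a" },
  { name: "Jane Doe", email: "jane@example.com", phone: "555 123 4567", source: "site-b" },
  { name: "", email: "not-an-email", source: "site-c" },
]);
console.log(result.valid.length, result.rejected.length); // prints "1 1"
```

Returning rejected records with their error list, rather than discarding them, leaves a trail for reviewing why a site's data stopped passing validation.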

Data Upload to Database:

Automated the import of cleaned data directly into the client’s website database.

Scheduled tasks for daily updates without human intervention.
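The case study names PostgreSQL as the target but does not describe the import mechanism. One common approach for daily re-imports is an upsert, so that re-scraped records update existing rows instead of creating duplicates. The sketch below only builds the SQL text and parameter array; the table `records`, its columns, and the unique constraint on `email` are hypothetical.

```javascript
// Hypothetical helper: builds one parameterized multi-row upsert for PostgreSQL.
// Table and column names are interpolated directly, so they must be trusted
// constants from your own code; only row values go through $n parameters.
function buildUpsert(table, rows, columns, conflictColumn) {
  if (rows.length === 0) return null;

  const values = [];
  const tuples = rows.map((row, i) => {
    const placeholders = columns.map((col, j) => {
      values.push(row[col] ?? null); // missing fields become SQL NULL
      return `$${i * columns.length + j + 1}`;
    });
    return `(${placeholders.join(", ")})`;
  });

  // On a duplicate key, overwrite every non-key column with the incoming value.
  const updates = columns
    .filter((col) => col !== conflictColumn)
    .map((col) => `${col} = EXCLUDED.${col}`);
  const onConflict =
    updates.length > 0 ? `DO UPDATE SET ${updates.join(", ")}` : "DO NOTHING";

  const text =
    `INSERT INTO ${table} (${columns.join(", ")}) ` +
    `VALUES ${tuples.join(", ")} ` +
    `ON CONFLICT (${conflictColumn}) ${onConflict}`;
  return { text, values };
}

const query = buildUpsert(
  "records",
  [
    { email: "jane@example.com", name: "Jane Doe" },
    { email: "john@example.com", name: "John Roe" },
  ],
  ["email", "name"],
  "email"
);
console.log(query.text);
// INSERT INTO records (email, name) VALUES ($1, $2), ($3, $4) ON CONFLICT (email) DO UPDATE SET name = EXCLUDED.name
```

With the node-postgres (`pg`) package, the result can be passed to `client.query(query.text, query.values)`. Rows should be de-duplicated on the conflict column first, because PostgreSQL rejects an `ON CONFLICT DO UPDATE` statement that would update the same row twice, and very large uploads should be split into batches since a single statement can carry only a limited number of bind parameters.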

Scalable Infrastructure:

Implemented the solution on 3 servers using Google Cloud Platform (GCP) for reliability and scalability.

Configured each server for specific tasks (data collection, processing, and uploading).

Results & Impact

Time Savings:

Eliminated manual effort, saving the client and their team several hours daily.

Scalability:

Enabled the client to expand from 3 to 8 source websites within 18 months.

Business Growth:

Achieved 100% growth in the client’s operations within 1.5 years, driven by the efficient and scalable automation system.

Reliability:

The automated solution runs 24/7 with minimal supervision, reducing errors and ensuring consistent updates.

Technologies Used

Web Scraping: Node.js (Puppeteer)

Data Processing: Custom Node.js scripts

Database Integration: PostgreSQL with automated data imports

Infrastructure: Google Cloud Platform (Compute Engine, Cloud Run)

Task Scheduling: Cron jobs for daily automation

Key Highlights

End-to-End Automation: Data collection, processing, and uploading run without manual steps.

Infrastructure Optimization: Leveraged Google Cloud servers for cost-effective scalability.

Seamless Expansion: The solution adapted easily to 8 websites and has capacity for further growth.

Client Testimonial

"The automation solution revolutionized my business. We no longer worry about manual errors or delays, and the scalability allowed us to expand our operations significantly. It’s one of the best decisions we made!"

Conclusion

This project demonstrates how a well-designed automation solution can transform a manual, resource-heavy process into an efficient, scalable workflow. It highlights my expertise in understanding client challenges, designing tailored solutions, and delivering measurable results.

Posted Nov 20, 2024

Automated data collection, processing, and upload for a data broker, enabling 100% growth and scaling from 3 to 8 websites via GCP servers.
