Deploying Dockerized App on AWS EKS Cluster using ArgoCD and Gi…

🙋‍♂️ Introduction

Hi everybody, my name is Ankit Jodhani, and I'm a DevOps engineer. I recently graduated from university and am building my career in DevOps and cloud. I have written many blogs and projects on cloud and DevOps, which you can find on my Hashnode profile, Ankit Jodhani. I want to thank Piyush Sachdeva for providing valuable guidance throughout the journey.

📚 Synopsis

In this blog, I'm going to show you how we can deploy a Dockerized app on an AWS EKS cluster using continuous integration and continuous deployment (CI/CD) with the GitOps methodology. Specifically, I'll break down how we can use CircleCI for continuous integration and ArgoCD for continuous deployment and the GitOps workflow.

🔸 Story

Firstly, we will have three Git repositories:

🔹Application code repository (committed by the developer)

🔹Terraform code repository (to build the infrastructure ➡️ EKS Cluster)

🔹Kubernetes manifest repository (YAML files)

The developer creates the feature and commits it to the Application code repository. When CircleCI notices any change or new commit in the application code repository, it starts running the pipeline that we have set up. The pipeline setup can vary depending on the implementation, but here I've set up 4 jobs or steps:

🔸 Test (application code)

🔸 Build (Docker image)

🔸 Push (Push to Docker registry)

🔸 Update manifest (update the manifest repo with the new image tag)
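The "Update manifest" job boils down to rewriting the image tag in the Deployment YAML and committing the change to the manifest repo. Below is a minimal Python sketch of just the rewrite step, assuming the manifest references the image as a plain, unquoted `image: <repo>:<tag>` line. The image name `example/todo-app` and the tags are hypothetical placeholders, and a regex is used instead of a YAML parser to keep the sketch dependency-free.

```python
import re


def update_image_tag(manifest: str, image: str, new_tag: str) -> str:
    """Point every `image: <image>:<tag>` line in `manifest` at `new_tag`.

    Sketch only: assumes plain, unquoted image references and uses a regex
    rather than a YAML parser.
    """
    # Group 1 keeps the indentation, an optional list dash, and the "image:" key.
    # [ \t] (not \s) keeps each match on a single line so no newlines are swallowed.
    pattern = re.compile(
        rf"^([ \t]*(?:-[ \t]*)?image:[ \t]*){re.escape(image)}:\S+[ \t]*$",
        re.MULTILINE,
    )
    updated, count = pattern.subn(lambda m: f"{m.group(1)}{image}:{new_tag}", manifest)
    if count == 0:
        raise ValueError(f"no reference to image {image!r} found in manifest")
    return updated


if __name__ == "__main__":
    # Hypothetical Deployment fragment; "example/todo-app" is a placeholder image name.
    deployment = """\
spec:
  template:
    spec:
      containers:
        - name: todo
          image: example/todo-app:build-41
"""
    print(update_image_tag(deployment, "example/todo-app", "build-42"))
```

In a CircleCI job, `new_tag` would typically be something unique per build, such as the commit SHA that CircleCI exposes in the `CIRCLE_SHA1` environment variable; the updated file is then committed and pushed to the manifest repository that ArgoCD watches.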

After the pipeline completes successfully, we have a new Docker image containing the new features or changes, plus an updated manifest file. To implement the GitOps workflow, we've set up ArgoCD to sync with our GitHub manifest repository. When ArgoCD notices a change in the manifest files, it pulls the new manifests and applies them to the cluster. If someone manually changes an object on the Kubernetes cluster, ArgoCD reverts it to the state defined in the repository (when automated sync with self-heal is enabled). In other words, ArgoCD treats the manifest repository as the single source of truth.
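To make the "Git wins" behaviour concrete, here is a toy Python model of one reconciliation pass. It is not how ArgoCD is implemented: the desired state (from Git) and the live state (from the cluster) are assumed to be plain dictionaries keyed by hypothetical object names such as `Deployment/todo`, and the `prune` flag loosely mirrors ArgoCD's option of the same name for deleting objects that no longer exist in Git.

```python
from typing import Dict, List, Tuple

# Desired and live state modelled as {object key: spec}; a real controller
# compares full Kubernetes objects, this sketch only compares plain dicts.
State = Dict[str, dict]


def plan_sync(desired: State, live: State, prune: bool = False) -> List[Tuple[str, str]]:
    """Return the actions needed to make `live` match `desired` (Git is the source of truth)."""
    actions: List[Tuple[str, str]] = []
    for key, spec in desired.items():
        if key not in live:
            actions.append(("create", key))
        elif live[key] != spec:
            # Either a new commit or a manual change on the cluster: Git wins.
            actions.append(("update", key))
    if prune:
        for key in live:
            if key not in desired:
                actions.append(("delete", key))
    return actions


def reconcile(desired: State, live: State, prune: bool = False) -> State:
    """Apply the planned actions to a copy of the live state and return the result."""
    result = dict(live)
    for action, key in plan_sync(desired, live, prune):
        if action == "delete":
            del result[key]
        else:
            result[key] = dict(desired[key])
    return result
```

In ArgoCD itself, manual drift is reverted automatically only when `selfHeal` is enabled under `spec.syncPolicy.automated` in the Application; otherwise the app is simply reported as OutOfSync.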

✅ Prerequisites

📌 AWS Account

📌 CircleCI Account

📌 GitHub Account

📌 Docker Account

📌 Basic knowledge of Linux

📦 List of AWS services

👑 Amazon EKS

🌐 Amazon VPC

🔒 AWS IAM

💻 Amazon EC2

⚖️ Amazon EC2 Auto Scaling

🪣 Amazon S3

🚀 Amazon DynamoDB

💡 Plan of Execution

🎯 Architecture

👑 EKS and How it works

😺 GitOps methodology

🚀A Step-by-step guide

🧪 Test

🙌 Conclusion

🎒 Resources

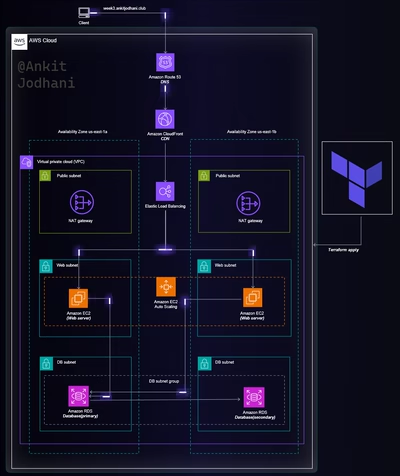

🎯 Architecture

Let's understand the architecture that we're going to build in this project. We'll follow a goal-driven approach, which helps us get a lot of results from minimal effort. It's very important to understand what we're building, so please go through the architecture below at least once; it will help you a lot while building this project.

Architecture


👑 EKS and How it works

AWS EKS (Elastic Kubernetes Service) is a managed service provided by AWS that simplifies the deployment and management of Kubernetes clusters. With EKS, you don't have to worry about the underlying control plane and its related objects; AWS takes care of that for you. The service ensures high availability and fault tolerance, allowing you to focus on deploying and managing your applications. You can see this in the above architecture.

In EKS, you can solely concentrate on managing the worker nodes, which can be EC2 instances or Fargate profiles. This flexibility allows you to choose the most suitable computing resources for your applications. Whether you opt for EC2 instances or Fargate, AWS handles the operational aspects, including scaling, monitoring, and patching of the control plane, so you can focus on your application deployment and development.

EKS integrates with other AWS services, such as Amazon VPC for networking, AWS IAM for secure access control, and Amazon EC2 for flexible computing resources. It also supports features like automatic scaling and load balancing, and integrates natively with AWS services such as CloudWatch and CloudFormation.

When you create an EKS cluster, the master node (control plane) is hidden from direct view. As mentioned before, Amazon manages the control plane: it runs in a dedicated VPC owned by AWS, which you won't see in your account. What you do get is an endpoint for communicating with the Kubernetes API server.
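The endpoint is what kubectl talks to. For illustration, here is a Python sketch that builds a kubeconfig resembling the one `aws eks update-kubeconfig` writes: the API server URL and base64-encoded CA bundle come from the cluster, and authentication tokens are generated on demand by `aws eks get-token`. The cluster name, endpoint, CA data, and region below are hypothetical placeholders; in practice you would simply run `aws eks update-kubeconfig --region <region> --name <cluster-name>`.

```python
import json
from typing import Any, Dict


def build_kubeconfig(cluster_name: str, endpoint: str, ca_data: str, region: str) -> Dict[str, Any]:
    """Return a kubeconfig (as a dict) that points kubectl at an EKS API server endpoint."""
    return {
        "apiVersion": "v1",
        "kind": "Config",
        "clusters": [{
            "name": cluster_name,
            # API server endpoint and base64-encoded CA certificate of the cluster.
            "cluster": {"server": endpoint, "certificate-authority-data": ca_data},
        }],
        "users": [{
            "name": cluster_name,
            # kubectl runs this command to obtain a short-lived authentication token.
            "user": {"exec": {
                "apiVersion": "client.authentication.k8s.io/v1beta1",
                "command": "aws",
                "args": ["--region", region, "eks", "get-token", "--cluster-name", cluster_name],
            }},
        }],
        "contexts": [{
            "name": cluster_name,
            "context": {"cluster": cluster_name, "user": cluster_name},
        }],
        "current-context": cluster_name,
    }


if __name__ == "__main__":
    # Hypothetical values; real ones come from the cluster's details in the EKS console or API.
    config = build_kubeconfig(
        "demo-eks",
        "https://EXAMPLE1234.gr7.us-east-1.eks.amazonaws.com",
        "<base64-ca-data>",
        "us-east-1",
    )
    # JSON is valid YAML, so this output can be saved as a kubeconfig file.
    print(json.dumps(config, indent=2))
```

With boto3, the two cluster-specific values are available from `describe_cluster(name=...)["cluster"]`, under `endpoint` and `certificateAuthority["data"]`.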

There are three networking modes for communicating with the API server. They determine how the EKS control plane, the user's machine (kubectl), and the worker nodes reach each other:

📢 Public

🔒 Private

📢 Public and 🔒 Private

📢 Public

In public mode, the API server endpoint is reachable from the internet, so anyone who has the endpoint can try to communicate with the control plane (requests still need valid IAM credentials and Kubernetes RBAC permissions). This is not recommended for security reasons, although it can be useful for learning. Traffic between the worker nodes and the API server may leave your VPC, but it doesn't leave the AWS network.

🔒 Private

Private mode is a more secure way to communicate with the EKS cluster. In this case, a Route 53 private hosted zone is created and associated with the VPC you've created in your account, so the API server endpoint resolves to private IP addresses inside that VPC, and the worker nodes reach the control plane without going over the internet. The hosted zone is owned by Amazon, so you won't see it in your account. To run commands, you need to use a bastion host within the VPC that you've created.

📢 Public and 🔒 Private

This is a common and secure way to communicate with an EKS cluster. In this mode, you don't need a bastion host: you can run commands (e.g., kubectl) directly from your local machine over the public endpoint. Traffic from the worker nodes to the API server still stays inside the VPC, using the AWS-owned Route 53 private hosted zone described in the previous section.

Note: You might wonder whether the EKS control plane uses an Internet Gateway (IGW) or NAT Gateway to communicate with the worker nodes. It doesn't: when you create the cluster, EKS places AWS-managed Elastic Network Interfaces (ENIs) in the subnets you specify, and the control plane reaches the worker nodes through these ENIs.
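The three modes map onto two switches on the cluster's API endpoint: public access and private access. The Python sketch below returns the corresponding `resourcesVpcConfig` fragment used by the EKS API; the same switches appear as `endpoint_public_access`, `endpoint_private_access`, and `public_access_cidrs` in the `vpc_config` block of Terraform's `aws_eks_cluster` resource. The mode names and the helper function are illustrative, not part of any AWS SDK.

```python
from typing import Dict, List, Optional, Tuple

# (endpointPublicAccess, endpointPrivateAccess) for each mode described above.
MODES: Dict[str, Tuple[bool, bool]] = {
    "public": (True, False),
    "private": (False, True),
    "public_and_private": (True, True),
}


def endpoint_access_config(mode: str, public_cidrs: Optional[List[str]] = None) -> Dict[str, object]:
    """Build the resourcesVpcConfig fragment for one of the three endpoint access modes."""
    if mode not in MODES:
        raise ValueError(f"unknown mode {mode!r}; expected one of {sorted(MODES)}")
    public, private = MODES[mode]
    if public_cidrs and not public:
        raise ValueError("public_cidrs only applies when the public endpoint is enabled")
    config: Dict[str, object] = {
        "endpointPublicAccess": public,
        "endpointPrivateAccess": private,
    }
    if public_cidrs:
        # Limit which source IP ranges can reach the public endpoint.
        config["publicAccessCidrs"] = list(public_cidrs)
    return config
```

For example, `boto3.client("eks").update_cluster_config(name="demo-eks", resourcesVpcConfig=endpoint_access_config("public_and_private", ["203.0.113.0/24"]))` would switch a hypothetical cluster named `demo-eks` to public-and-private mode and accept public endpoint traffic only from that CIDR.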

😺 GitOps methodology

GitOps is a methodology for deploying applications and infrastructure on a Kubernetes cluster. In GitOps, we consider the Git repository containing Kubernetes manifests as the single source of truth. This Git repository can be hosted on any code hosting service, not limited to just GitHub.

Before adopting GitOps, it was challenging to track changes made in the Kubernetes cluster and determine who made those changes. Additionally, reverting back to a previous state became difficult if configurations were missed or if there were issues with application objects running within the cluster.

However, with GitOps, we can employ a GitOps controller like ArgoCD or FluxCD. These controllers continuously monitor our Kubernetes manifest repository. When changes are detected, they pull the updated manifests and apply them to the cluster. This approach enables easy review of changes, facilitates collaboration among team members, and simplifies change tracking.
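The pull model can be sketched in a few lines of Python. This is a simplified illustration, not ArgoCD's or FluxCD's actual code: `get_head_revision` and `apply_revision` are hypothetical hooks standing in for "fetch the latest commit of the manifest repo" and "apply its manifests to the cluster", and the 180-second default interval is chosen to match ArgoCD's default polling period of about three minutes.

```python
import time
from typing import Callable, List, Optional


class GitOpsPoller:
    """Toy pull-based GitOps loop: apply manifests only when the repo's head revision changes."""

    def __init__(self, get_head_revision: Callable[[], str],
                 apply_revision: Callable[[str], None]) -> None:
        # Both hooks are hypothetical stand-ins for "fetch the repo" and "apply its manifests".
        self.get_head_revision = get_head_revision
        self.apply_revision = apply_revision
        self.synced_revision: Optional[str] = None
        self.applied: List[str] = []  # every revision applied, oldest first

    def poll_once(self) -> bool:
        """Check the repository once; return True if a new revision was applied."""
        head = self.get_head_revision()
        if head == self.synced_revision:
            return False  # Git has not changed, so there is nothing to do
        self.apply_revision(head)
        self.synced_revision = head
        self.applied.append(head)
        return True

    def run(self, polls: int, interval_s: float = 180.0,
            sleep: Callable[[float], None] = time.sleep) -> None:
        """Poll `polls` times, waiting `interval_s` seconds after each check."""
        for _ in range(polls):
            self.poll_once()
            sleep(interval_s)
```

Because every change is a commit, rolling back means reverting the bad commit: the revert becomes the new head revision, the loop applies it like any other change, and Git history records who changed what.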

🚀A Step-by-step guide

Now let's look at a step-by-step guide on how to implement the architecture shown above.

Note: I've divided this part into four blogs, and you'll find the links to the other parts below. This blog is the first one.

💎 ⛏️ Provisioning Amazon EKS Cluster using Terraform

💎 📐 Configure pipeline (CircleCI) and GitHub for Kube manifest

💎 👀 Installation and Syncing of ArgoCD on the EKS cluster

🧪 Test

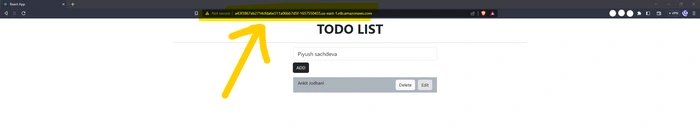

I hope you've completed all three sections of the guide. Now let's test the application.

Paste the load balancer's DNS name into your browser; it points to the application running inside the cluster (in a pod).

testing application
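If you'd rather not copy the DNS name from the AWS console, you can read it from the Kubernetes Service. Below is a small Python sketch, assuming the application is exposed through a Service of type `LoadBalancer` and that kubectl is already configured for the cluster; the Service name you pass in (for example a hypothetical `todo-app-service`) must match the one in your manifest repo.

```python
import json
import subprocess
from typing import Optional


def load_balancer_hostname(service_json: str) -> Optional[str]:
    """Return the external address of a LoadBalancer Service, or None if none is assigned yet."""
    svc = json.loads(service_json)
    ingress = svc.get("status", {}).get("loadBalancer", {}).get("ingress") or []
    if not ingress:
        return None  # the cloud load balancer has not been provisioned yet
    # On AWS the load balancer is reported as a DNS hostname; some providers report an IP.
    return ingress[0].get("hostname") or ingress[0].get("ip")


def fetch_service_json(name: str, namespace: str = "default") -> str:
    """Fetch a Service object as JSON via kubectl (needs a working kubeconfig)."""
    result = subprocess.run(
        ["kubectl", "get", "svc", name, "-n", namespace, "-o", "json"],
        check=True,
        capture_output=True,
        text=True,
    )
    return result.stdout
```

Usage: `print(load_balancer_hostname(fetch_service_json("todo-app-service")))`. The equivalent kubectl one-liner is `kubectl get svc <service-name> -o jsonpath='{.status.loadBalancer.ingress[0].hostname}'`; an empty result usually means AWS is still provisioning the load balancer.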

Since we've implemented the GitOps workflow, let's change the application code and commit it to the application code repository.

Let's see: is the pipeline working properly? Is ArgoCD able to pick up the new image tag and apply it to the cluster?

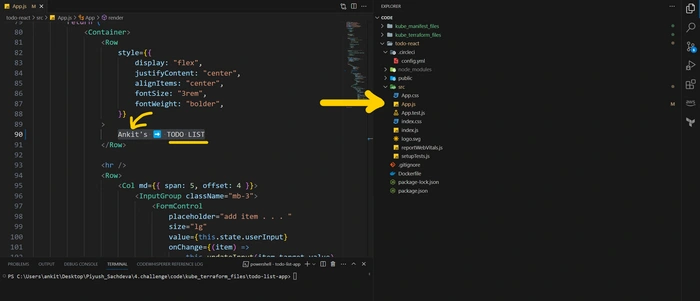

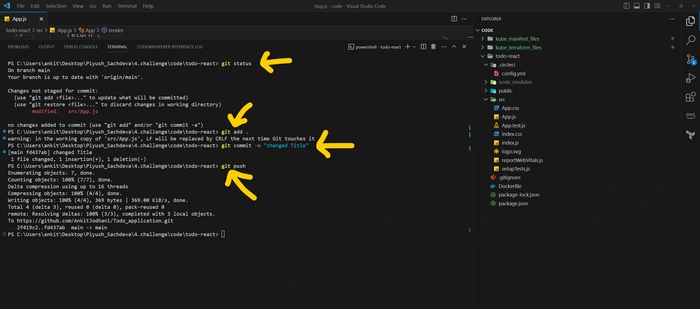

I'm going to make a small change in the application code.

change

Commit on GitHub.

commit on Github

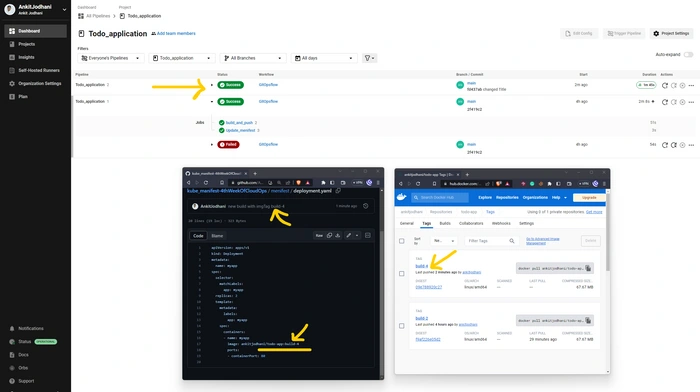

As the image below shows, the pipeline ran successfully: it built a new image, pushed it to Docker Hub, and updated the manifest file in the Kubernetes manifest repo.

Pipeline execution

ArgoCD takes a little time to pick up and apply the new Kubernetes manifest (by default, it polls the repository about every three minutes), so please wait 3-5 minutes.

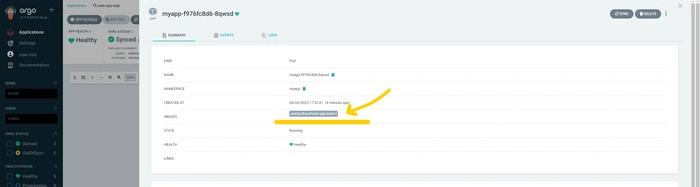

Yup, as the image below shows, the sync happened successfully and the new build was applied.

sync

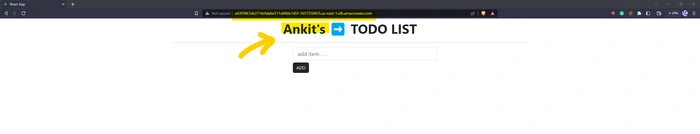

Let's visit the application, and here we go!

updated build

🙌 Conclusion

In conclusion, this blog explored the process of deploying a Dockerized application on an AWS EKS cluster using ArgoCD and the GitOps methodology with CircleCI. We started by provisioning the Amazon EKS cluster using Terraform, ensuring a solid foundation for our deployment. Next, we configured the pipeline with CircleCI and GitHub, establishing a seamless integration between our Kubernetes manifests and the CI/CD workflow. Finally, we installed ArgoCD on the EKS cluster and synced it with the manifest repository, so every new commit is rolled out to the cluster automatically.

And here it ends... 🙌🥂

If you like my work, please message me on LinkedIn with "Hi" and your country name.

🙋‍♂️ Ankit Jodhani.

📨 reach me at ankitjodhani1903@gmail.com

🔗 LinkedIn https://www.linkedin.com/in/ankit-jodhani/

📂 GitHub application code repo: https://github.com/AnkitJodhani/Todo_application.git

📂 GitHub Terraform code repo: https://github.com/AnkitJodhani/kube_terraform-4thWeekOfCloudOps.git

📂 GitHub Kubernetes manifest repo: https://github.com/AnkitJodhani/kube_manifest-4thWeekOfCloudOps.git

😺 GitHub https://github.com/AnkitJodhani

🐦 Twitter https://twitter.com/Ankit__Jodhani

🎒 Resources


Posted Aug 12, 2023

Deploying Dockerized App on AWS EKS Cluster using ArgoCD and GitOps methodology with CircleCI, provisioning EKS cluster using terraform