Ferret-UI: Grounded Mobile UI Understanding with Multimodal LLMs

I. Introduction

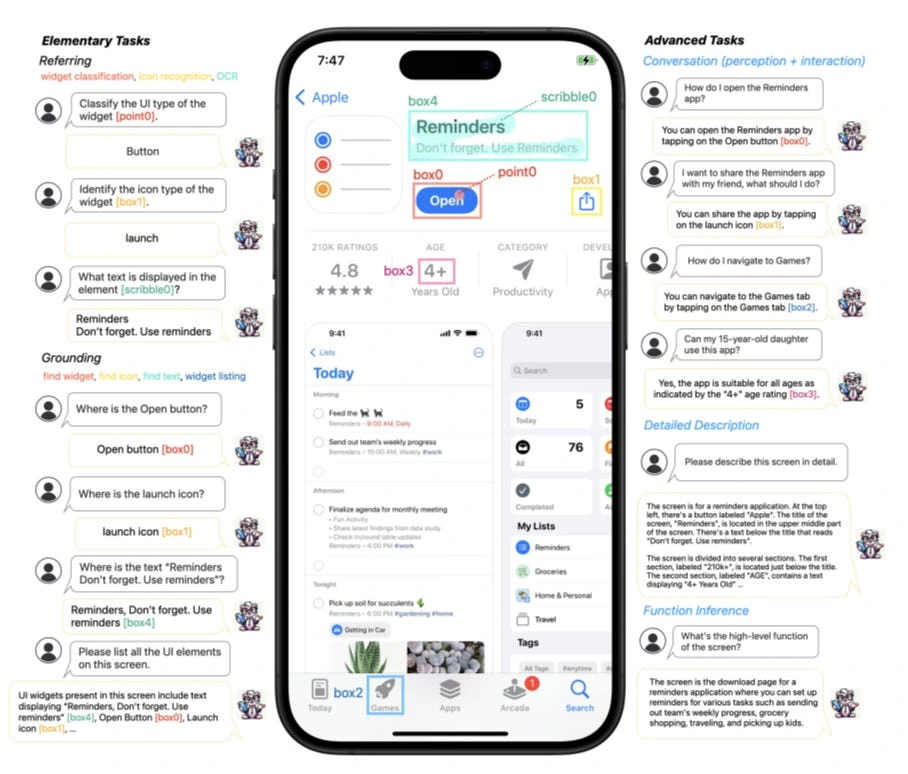

The digital age is marked by the proliferation of mobile applications, which have become indispensable tools for everyday activities. From mundane tasks such as looking up information and making reservations to entertainment, mobile apps are woven into the fabric of modern life. This reliance creates a need for systems that can automate both the perception of user interface (UI) screens and the interactions performed on them. General-domain multimodal large language models (MLLMs) often fall short at comprehending and interacting with UI screens. Ferret-UI is a new MLLM tailored specifically to understanding and interacting with mobile UI screens. It aims to close that gap and to offer a more intuitive and accessible way of navigating the digital world.

II. Ferret-UI: Overview and Architectural Innovations

Mobile UI screens call for a departure from conventional approaches. Unlike natural images, UI screens typically have elongated aspect ratios and contain small, densely packed elements of interest such as icons and text. When a general-purpose MLLM is applied to them directly, these fine visual details are easily lost. One example is a tall screenshot being downscaled to the vision encoder's fixed input size. Ferret-UI, which builds on the Ferret MLLM, addresses this through architectural changes, most notably the integration of an "any resolution" feature. By splitting each screen into sub-images along its longer side, this feature magnifies details and supplies enhanced visual features, so that small UI elements remain legible to the model. This section looks at these architectural choices and how they improve MLLM interaction with mobile UI screens.
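The Ferret-UI paper describes the "any resolution" split concretely. Each screen is divided into two sub-images based on its original aspect ratio, horizontally for portrait screens and vertically for landscape screens, and the two sub-images are encoded separately before reaching the LLM. The minimal sketch below computes only the crop boxes for that split. The helper name `anyres_crop_boxes`, the even split at the midpoint, the treatment of square screens as portrait, and the (left, top, right, bottom) box convention are all assumptions for illustration. The vision encoder itself is not shown.

```python
from typing import List, Tuple

# A crop box as (left, top, right, bottom) in pixels; this convention is an assumption.
Box = Tuple[int, int, int, int]


def anyres_crop_boxes(width: int, height: int) -> List[Box]:
    """Hypothetical helper: split a screen into two sub-images by aspect ratio.

    Portrait screens (taller than wide) are divided horizontally into top and
    bottom halves; landscape screens are divided vertically into left and right
    halves. An even split at the midpoint is assumed, and square screens are
    treated as portrait.
    """
    if width <= 0 or height <= 0:
        raise ValueError("screen dimensions must be positive")
    if height >= width:
        # Portrait (or square): horizontal cut -> top half and bottom half.
        mid = height // 2
        return [(0, 0, width, mid), (0, mid, width, height)]
    # Landscape: vertical cut -> left half and right half.
    mid = width // 2
    return [(0, 0, mid, height), (mid, 0, width, height)]


if __name__ == "__main__":
    # Illustrative portrait and landscape screenshot sizes.
    print(anyres_crop_boxes(1170, 2532))  # [(0, 0, 1170, 1266), (0, 1266, 1170, 2532)]
    print(anyres_crop_boxes(2532, 1170))  # [(0, 0, 1266, 1170), (1266, 0, 2532, 1170)]
```

Because the split always runs across the longer side, each sub-image is closer to square than the full screen. A fixed-size encoder therefore has to shrink it less. With Pillow, each box can be passed directly to `Image.crop`, which uses the same (left, upper, right, lower) ordering.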

Thanks for reading YallaAI! Subscribe for free to receive new posts and support my work.

III. Data Curation and Task Formulation

Ferret-UI's proficiency rests on a carefully curated training dataset. Training samples were gathered across a broad range of elementary UI tasks, such as icon recognition, text finding, and widget listing. These samples are formatted for instruction following with region annotations, so that the model learns precise referring and grounding. To further strengthen the model's reasoning, a second dataset of advanced tasks was compiled, covering detailed descriptions, interaction dialogues, and function inference. These tasks were then assembled into a comprehensive benchmark for evaluating how well Ferret-UI understands and interacts with UI screens. This section outlines this approach to data curation and task formulation and explains why it is central to Ferret-UI's development.
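To make the elementary tasks concrete, here is a hypothetical sketch that turns annotated UI elements into question-answer pairs for two of the tasks named above: text finding and widget listing. Each annotated element has a type, an optional text label, and a bounding box. The `UIElement` class, the prompt wording, and the box format are assumptions for illustration. They are not Ferret-UI's actual data pipeline or templates.

```python
from dataclasses import dataclass
from typing import List, Optional, Tuple


@dataclass
class UIElement:
    # Hypothetical annotation record for one on-screen element.
    kind: str                       # e.g. "Button", "Icon", "Text"
    box: Tuple[int, int, int, int]  # (left, top, right, bottom) in pixels
    text: Optional[str] = None      # visible label, if any


def text_finding_samples(elements: List[UIElement]) -> List[dict]:
    """Grounding-style samples: ask where a given text appears, answer with its box."""
    samples = []
    for el in elements:
        if el.text:  # only elements with visible text can be "found" by text
            samples.append({
                "task": "text_finding",
                "question": f'Where is the text "{el.text}" on the screen?',
                "answer": list(el.box),
            })
    return samples


def widget_listing_sample(elements: List[UIElement]) -> dict:
    """One sample asking the model to enumerate every widget with its type and box."""
    listing = [f"{el.kind} {list(el.box)}" for el in elements]
    return {
        "task": "widget_listing",
        "question": "List all the widgets on this screen.",
        "answer": "; ".join(listing),
    }


if __name__ == "__main__":
    screen = [
        UIElement("Button", (40, 900, 360, 980), "Sign In"),
        UIElement("Icon", (20, 30, 80, 90)),
    ]
    for sample in text_finding_samples(screen) + [widget_listing_sample(screen)]:
        print(sample)
```

Pairing every answer with explicit coordinates is what lets the same annotations serve both referring-style questions (about a given region) and grounding-style questions (asking for a region).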

IV. The Technical Fortitude of Ferret-UI

Ferret-UI's empirical evaluation shows strong comprehension of UI screens and the ability to carry out open-ended instructions. The model outperforms most open-source UI MLLMs. The results also point to the importance of its architectural choices, its data curation strategy, and the insights gained from benchmark evaluation. This section explores the factors behind that performance and what they reveal about how the model works in practice.

V. Empirical Evaluation

The empirical evaluation of Ferret-UI tests whether its architectural choices and training data actually pay off. In comparisons with existing MLLMs, Ferret-UI performs better, an advantage attributed to its "any resolution" integration and to the broad coverage of elementary and advanced tasks in its training data. This section gives a detailed account of the evaluation and of how architecture and task formulation shaped the results.
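Grounding tasks such as text finding produce bounding boxes, so scoring them needs a geometric criterion rather than exact string matching. A common convention, assumed here rather than taken from the Ferret-UI benchmark, counts a predicted box as correct when its intersection-over-union (IoU) with the ground-truth box is at least 0.5. The sketch below implements that convention. The function names and the 0.5 default are illustrative.

```python
from typing import Sequence, Tuple

Box = Tuple[float, float, float, float]  # (left, top, right, bottom)


def iou(a: Box, b: Box) -> float:
    """Intersection-over-union of two axis-aligned boxes."""
    # Overlap width and height, clamped at zero when the boxes do not intersect.
    ix = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    iy = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = ix * iy
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def grounding_accuracy(preds: Sequence[Box], gts: Sequence[Box], thresh: float = 0.5) -> float:
    """Fraction of predictions whose IoU with the paired ground truth meets thresh."""
    if len(preds) != len(gts):
        raise ValueError("preds and gts must be the same length")
    if not preds:
        return 0.0
    hits = sum(iou(p, g) >= thresh for p, g in zip(preds, gts))
    return hits / len(preds)


if __name__ == "__main__":
    preds = [(0, 0, 10, 10), (0, 0, 10, 10)]
    gts = [(0, 0, 10, 10), (5, 0, 15, 10)]  # second pair overlaps with IoU = 1/3
    print(grounding_accuracy(preds, gts))  # 0.5
```

A threshold-based metric tolerates small coordinate errors while still rejecting boxes that land on the wrong element. This matters on dense UI screens where neighboring widgets are only a few pixels apart.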

VI. Discussion on Findings and Insights

Looking back on Ferret-UI's development, this section examines the key architectural decisions, the data curation strategies, and the insights drawn from benchmark evaluation. It shows how each of these shaped the model and what they imply more broadly for MLLM interaction with UI screens. Together, they explain Ferret-UI's success and its potential to redefine how MLLMs interact with digital interfaces.

VII. Conclusion and Future Directions

Ferret-UI represents a significant advance in MLLM interaction with mobile UI screens, bringing stronger comprehension and interaction capabilities. This post has summarized Ferret-UI's contributions to the field, its approach, and potential pathways for future research. Looking ahead, further improvements and broader applications of the model promise to make our interaction with the digital world more intuitive and accessible than ever before.



Posted Apr 21, 2024

Revolutionizing Mobile Interface Interaction: The Advent of Ferret-UI
