Machine Learning Deployment Project

Problem

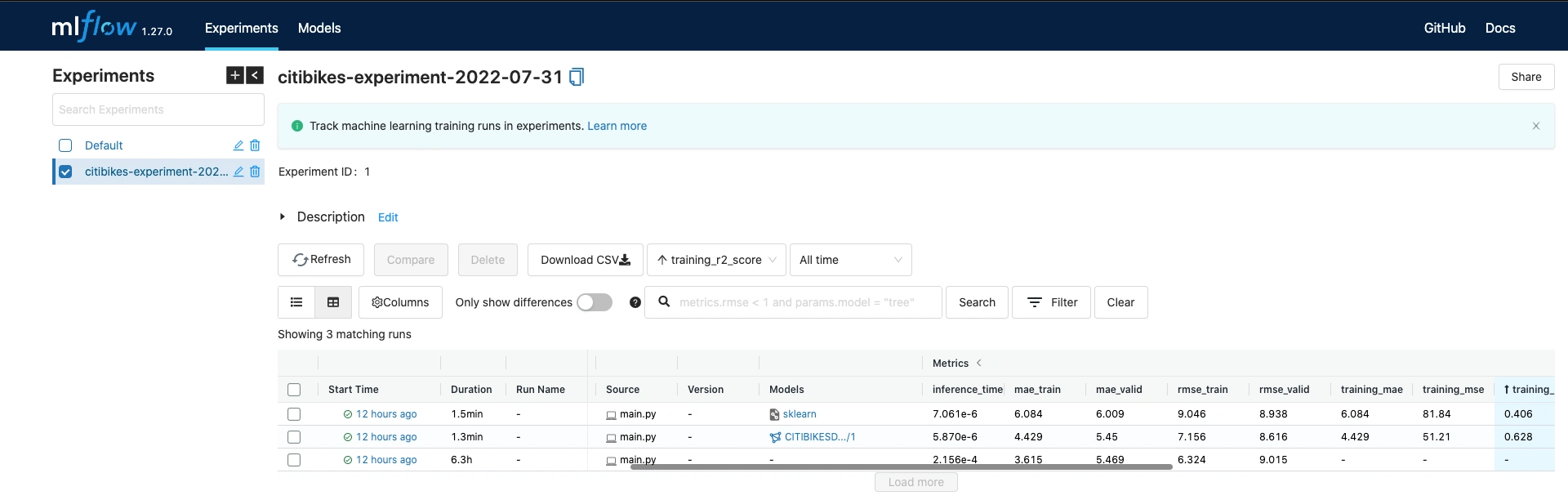

This is a simple end-to-end MLOps project that takes data from Capital Bikeshare and carries it through a machine learning pipeline: model training, experiment tracking with MLflow, workflow orchestration with Prefect, and deployment of the model as a web service.

The project runs locally and uses AWS S3 to store model artifacts during experiment tracking with MLflow.

Dataset

The dataset chosen for this project is the Capital Bikeshare data.

Improvements

In the future I hope to improve the project by moving the entire infrastructure to AWS (managed with an IaC tool such as Terraform), deploying the model in batch or streaming mode with AWS Lambda and Kinesis streams, and adding comprehensive model monitoring.

Project Setup

Clone the project from the repository

Change to the mlops-project directory

Set up and install project dependencies

Add your current directory to the Python path
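Adding the project root to the Python path lets modules inside the repository import each other without installing the package. In a shell this is commonly done with `export PYTHONPATH="${PYTHONPATH}:${PWD}"`. The same effect inside a Python session can be sketched as below. The helper name `add_to_python_path` is hypothetical and not part of the project.

```python
import os
import sys
from pathlib import Path


def add_to_python_path(directory: str = ".") -> str:
    """Put `directory` on sys.path (only once) and return its absolute path."""
    resolved = str(Path(directory).resolve())
    if resolved not in sys.path:
        # Insert at the front so project modules win over same-named installed ones.
        sys.path.insert(0, resolved)
    return resolved


if __name__ == "__main__":
    root = add_to_python_path(os.getcwd())
    print(f"{root} is importable: {root in sys.path}")
```

Unlike the exported environment variable, this only affects the running interpreter, so terminals opened later still need the path set.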

Start Local Prefect Server

In a new terminal window or tab, start the Prefect Orion server.

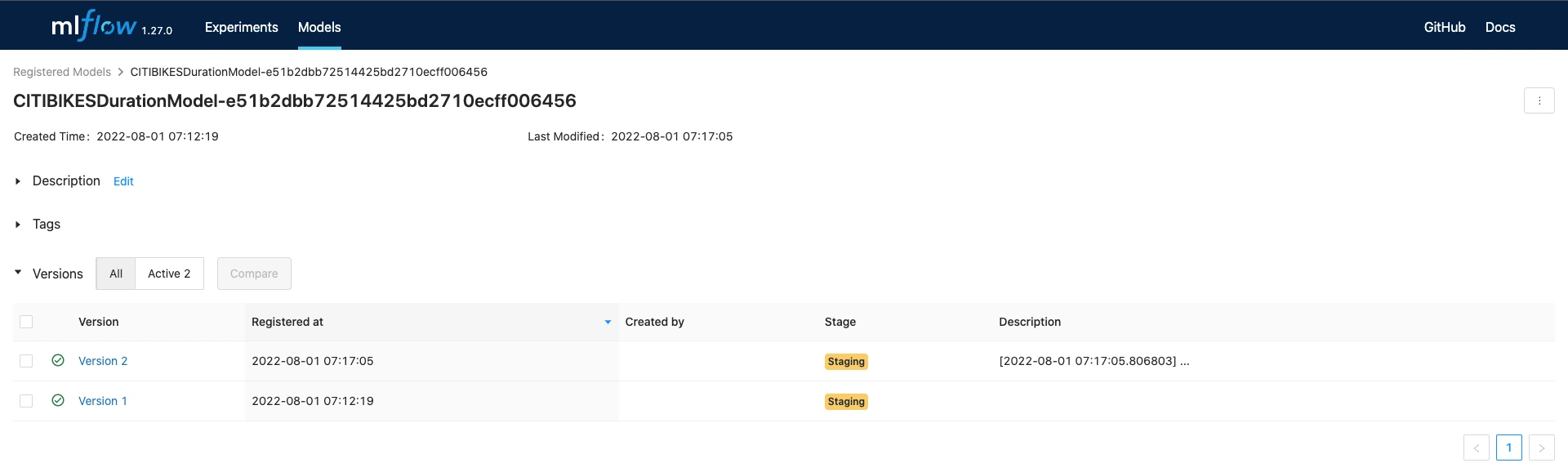
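Before running any flows it can help to confirm that the server is reachable. The sketch below is not part of the project; it assumes a Prefect 2.x server on its usual local address `http://127.0.0.1:4200/api` with a `/health` endpoint, and the function name `orion_is_up` is hypothetical. Adjust the URL if your server listens elsewhere.

```python
import urllib.request


def orion_is_up(api_url: str = "http://127.0.0.1:4200/api", timeout: float = 2.0) -> bool:
    """Return True if GET <api_url>/health answers with HTTP 200."""
    health_url = f"{api_url.rstrip('/')}/health"
    try:
        with urllib.request.urlopen(health_url, timeout=timeout) as resp:
            return resp.status == 200
    except OSError:
        # Covers refused connections, timeouts and HTTP error statuses
        # (urllib's URLError and HTTPError are OSError subclasses).
        return False


if __name__ == "__main__":
    print("Prefect API reachable:", orion_is_up())
```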

Start Local MLflow Server

MLflow points to an S3 bucket for storing model artifacts and uses a SQLite database as the backend store.

Create an S3 bucket and export the bucket name as an environment variable.

In a new terminal window or tab, run the following steps.

Start the MLflow server
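The setup described above (SQLite backend store, S3 artifact root) maps onto two options of the `mlflow server` command, `--backend-store-uri` and `--default-artifact-root`. The sketch below only assembles and prints that command so it can be inspected before running. The environment variable name `S3_BUCKET_NAME`, the database file `mlflow.db`, and the fallback bucket name are assumptions, not values taken from the project.

```python
import os
import shlex


def mlflow_server_command(bucket: str, db_file: str = "mlflow.db") -> list[str]:
    """Build an `mlflow server` argv with a SQLite backend store and an S3 artifact root."""
    if not bucket:
        raise ValueError("bucket name must not be empty")
    return [
        "mlflow", "server",
        "--backend-store-uri", f"sqlite:///{db_file}",
        "--default-artifact-root", f"s3://{bucket}/",
    ]


if __name__ == "__main__":
    # S3_BUCKET_NAME is an assumed variable name; use whichever name you exported.
    bucket = os.environ.get("S3_BUCKET_NAME", "my-mlflow-artifacts")
    print(shlex.join(mlflow_server_command(bucket)))
```

The server also needs AWS credentials available in its environment to write artifacts to the bucket.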

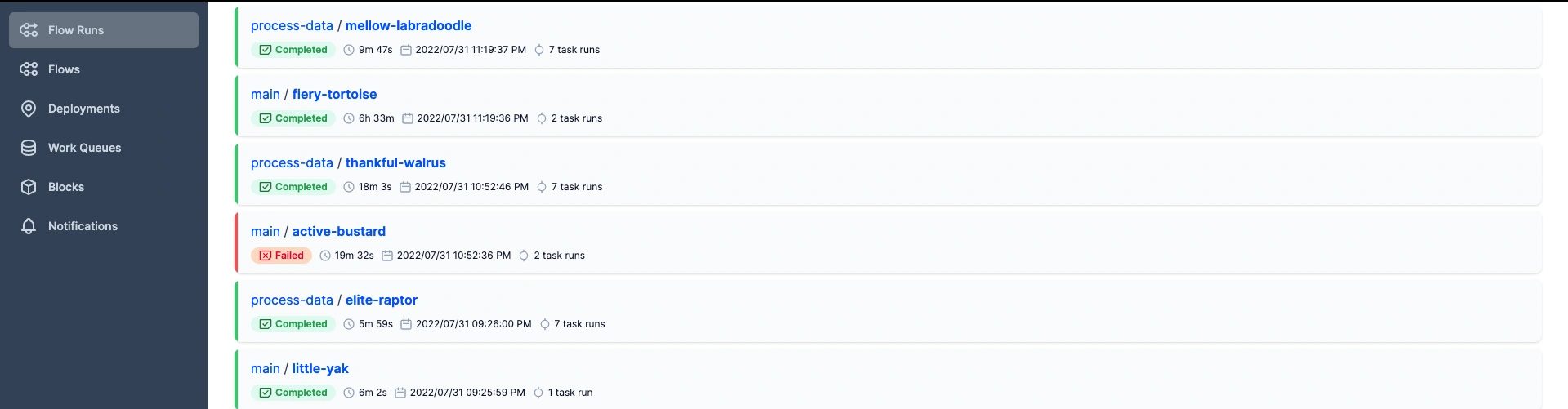

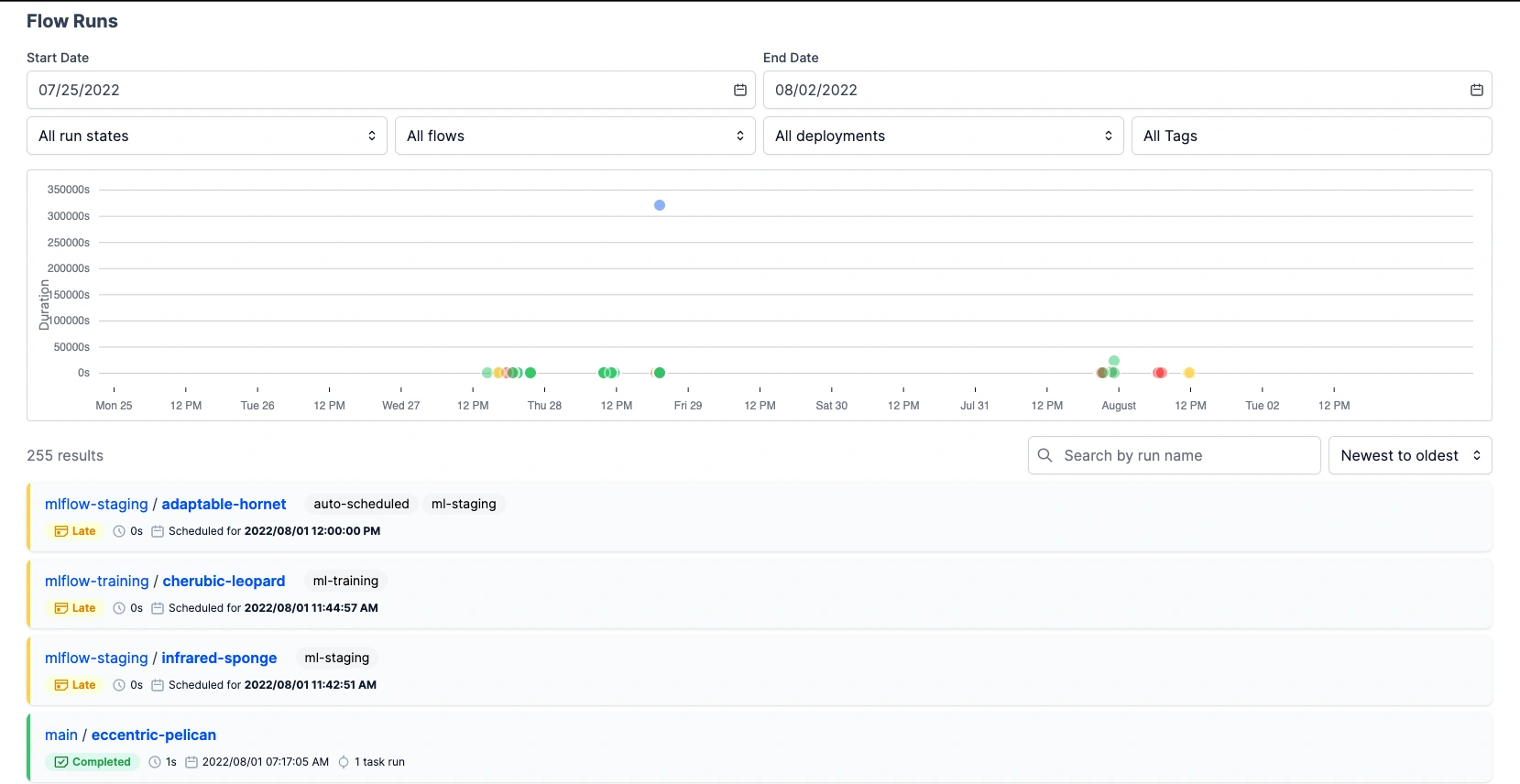

Running model training and model registry staging pipelines locally

Model training

Register and Stage model

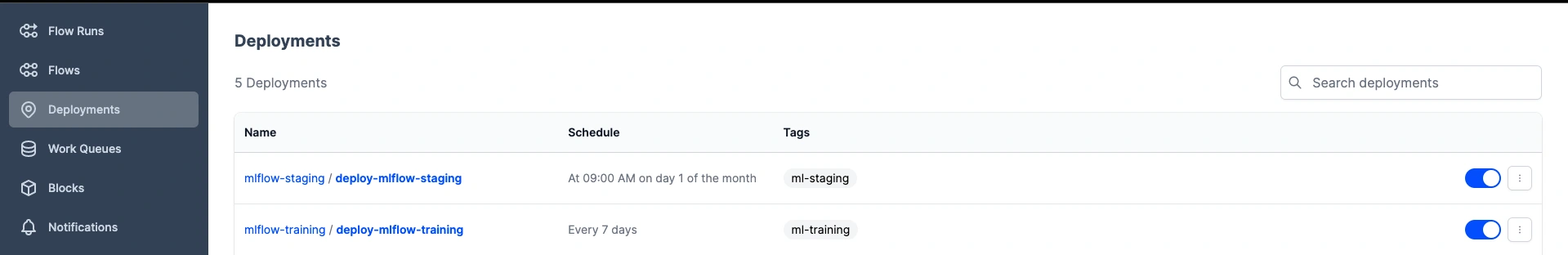

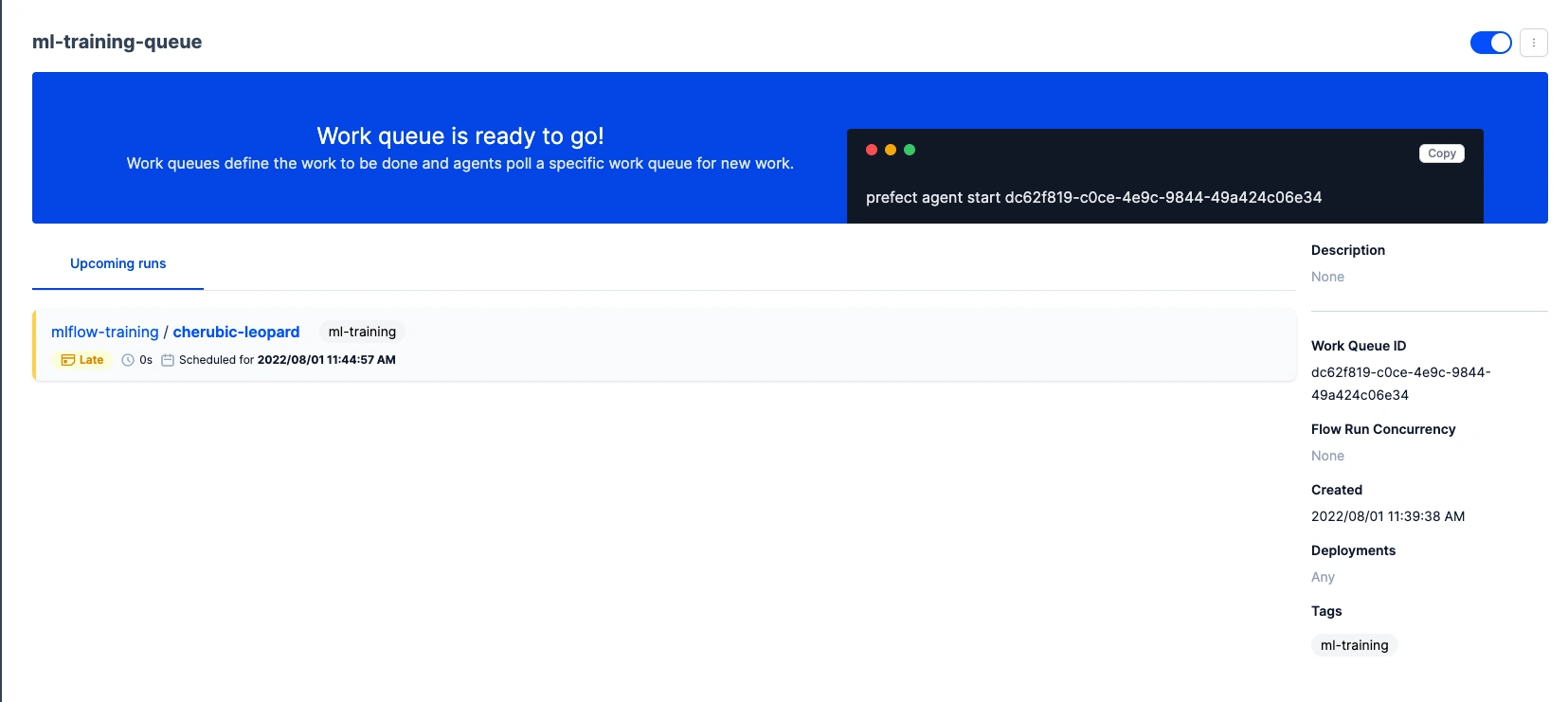

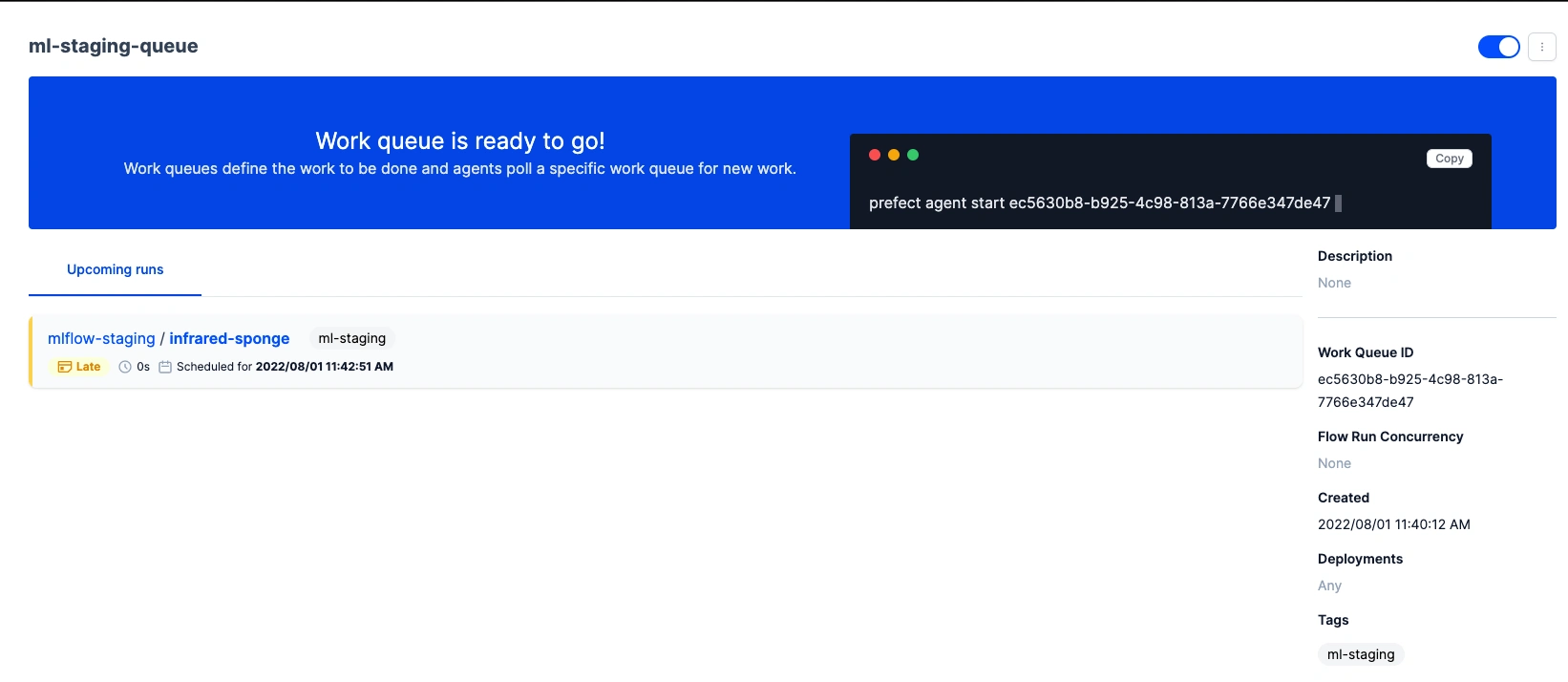

Create scheduled deployments and agent workers to start the deployments

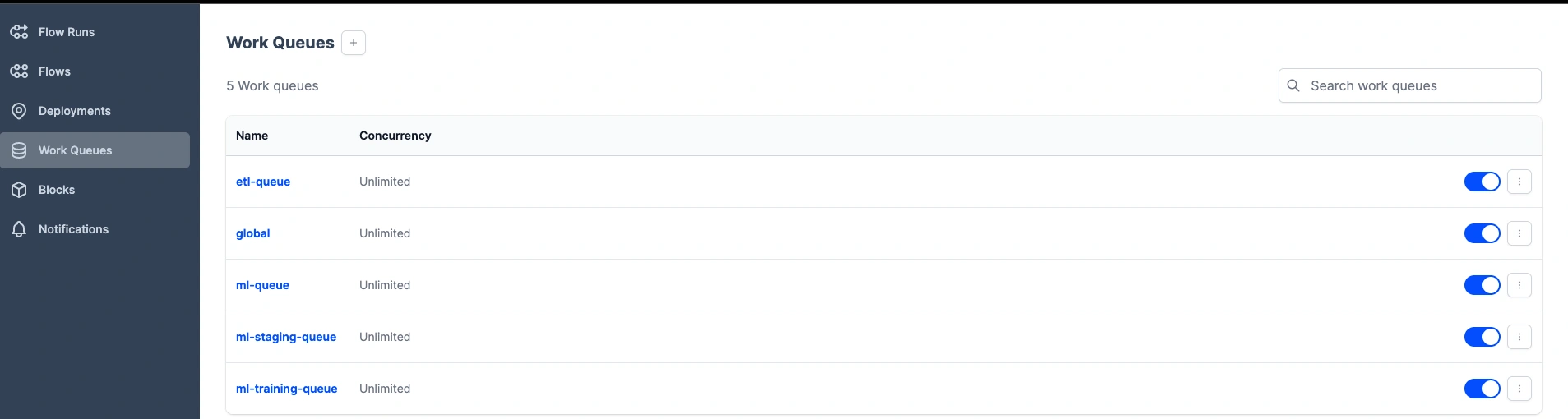

Create work queues

Run deployments locally to schedule pipeline flows

Deploy model as a web service locally

Change to the webservice directory and follow the instructions there.

Posted Jan 12, 2024

An end-to-end machine learning (mlops) project. Contribute to DanielOX/mlops-project development by creating an account on GitHub.
