Additional resources

What Is Content Moderation?

User-Generated Content Moderation

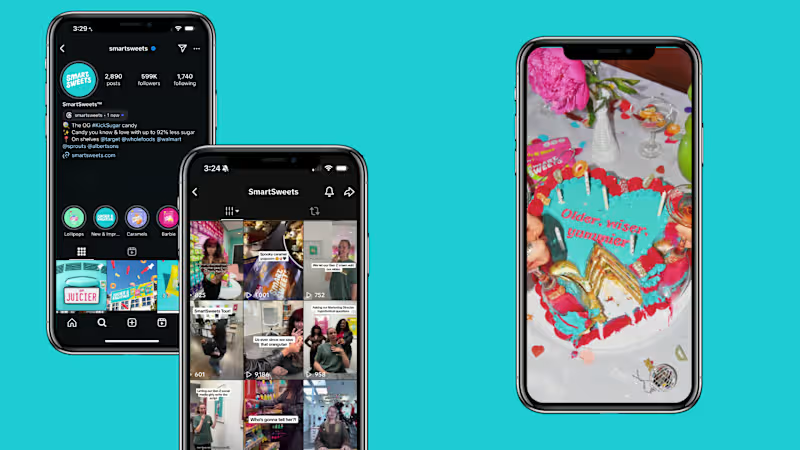

Social Media Content Moderation

Online Content Moderation

Essential Skills for Content Moderators

Technical Skills and Digital Literacy

Language and Cultural Competencies

Emotional Intelligence and Psychological Resilience

Critical Thinking and Decision-Making

Where to Find Content Moderators

Remote Talent Pools

Regional Recruitment Partners

Professional Networks and Communities

Educational Institutions and Training Programs

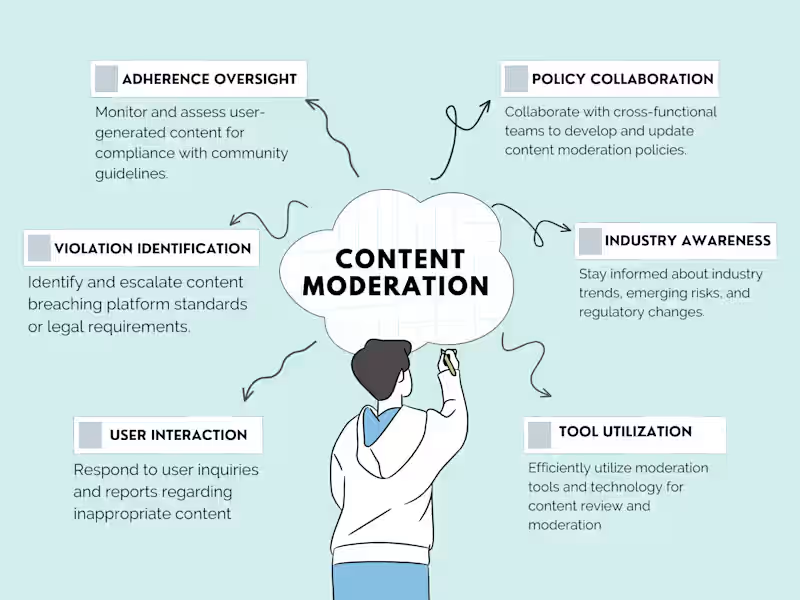

Content Moderator Roles and Responsibilities

Primary Content Review

Quality Assurance Positions

Team Lead and Supervisor Roles

Specialized Moderation Areas

Creating Effective Job Descriptions for Content Moderation Jobs

Transparency About Role Challenges

Highlighting Support Systems

Required Qualifications and Experience

Career Development Opportunities

Screening and Interview Process

Psychological Assessments

Technical Skill Evaluations

Cultural Awareness Testing

Bias Detection Methods

Training Content Moderators

Policy and Guidelines Education

Platform-Specific Tool Training

Practice Scenarios and Mock Reviews

Ongoing Professional Development

Building Content Moderation Teams

Team Structure Models

Shift Planning and Coverage

Cross-Functional Collaboration

Performance Monitoring Systems

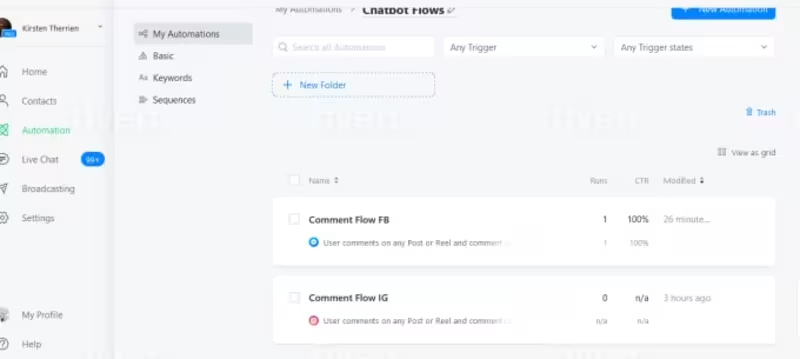

Technology and Tools for Content Moderation Services

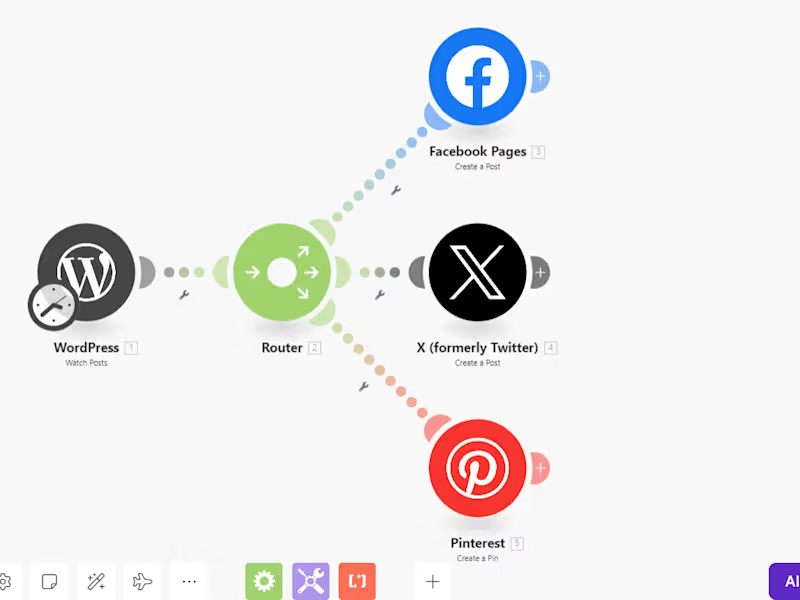

AI and Automated Detection Systems

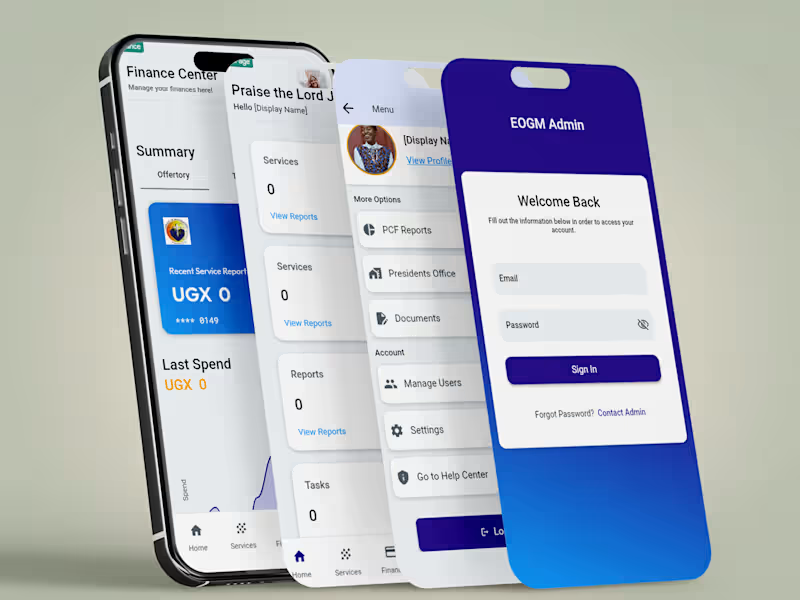

Content Management Platforms

Review Queue Systems

Analytics and Reporting Tools

Compensation Strategies

Regional Salary Benchmarks

Performance-Based Incentives

Benefits and Wellness Programs

Career Advancement Pathways

Mental Health and Wellbeing Support

On-Site Counseling Services

Stress Management Programs

Exposure Rotation Policies

Peer Support Networks

Legal and Compliance Considerations

Regional Regulatory Requirements

Platform Liability Guidelines

Data Privacy and Security

Documentation and Audit Trails