Sincere enthusiast Data Engineer that really loves what it does.Leonardo Rickli

My enthusiasm into this wonderful data world is what sets me apart is my passion for data organization and cleanliness, reflected in meticulous attention to detail throughout the entire project lifecycle. From architecting streamlined cloud data architectures to implementing robust ETL pipelines and establishing rigorous data quality assurance frameworks, I am committed to delivering solutions that not only meet your business needs but also resonate with my enthusiasm for tidy and organized data structures.

What's included

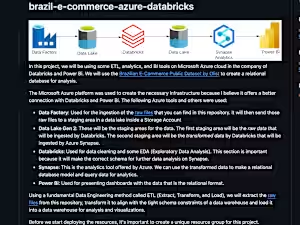

Cloud Data Architecture Blueprint

- Description: A comprehensive blueprint outlining the architecture design for cloud-based data processing and storage, tailored to the client's specific needs and requirements.

- Format: Digital document (PDF or Word) detailing the architectural components, data flow diagrams, and technology stack recommendations.

- Quantity: 1 blueprint document.

- Revisions: Up to 2 rounds of revisions based on client feedback.

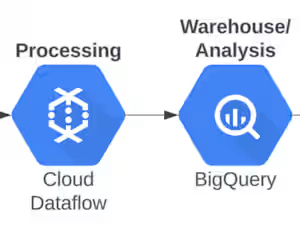

ETL Pipeline Implementation

- Description: Fully functional Extract, Transform, Load (ETL) pipeline deployed on the client's chosen cloud platform, integrating with their data sources and target systems for seamless data processing.

- Format: Cloud-based solution deployed on the client's cloud environment (e.g., GCP Dataflow, Azure Data Factory).

- Quantity: 1 implemented ETL pipeline.

- Revisions: Testing and debugging support provided for up to 1 week post-deployment.

Data Quality Assurance Framework

- Description: A robust framework for ensuring data quality throughout the data lifecycle, including data validation, cleansing, and error handling mechanisms, tailored to the client's data governance policies.

- Format: Documentation outlining the data quality framework, including code snippets, validation rules, and best practices.

- Quantity: 1 comprehensive data quality assurance framework document.

- Revisions: Up to 2 rounds of revisions to fine-tune the framework based on client feedback and evolving requirements.

FAQs

Cloud-based data engineering offers scalability, flexibility, and cost-efficiency, allowing organizations to scale their data processing capabilities on-demand, access a wide range of powerful data tools and services, and reduce infrastructure costs by paying only for what they use.

The duration of a data engineering project varies depending on factors such as project scope, complexity, and available resources. However, a typical project may take anywhere from a few weeks to several months to complete, with timelines determined during the initial scoping and planning phase.

Absolutely. I specialize in integrating with existing data infrastructure and tools to ensure seamless compatibility and minimal disruption to your operations. Whether you're using on-premises databases, legacy systems, or cloud-based platforms, I can adapt my solutions to integrate with your existing ecosystem effectively.

Leonardo's other services

Starting at$15

Duration1 week

Tags

AWS

Azure

Google Cloud Platform

Python

SQL

Cloud Infrastructure Architect

DevOps Engineer

Security Engineer

Service provided by

Leonardo Rickli Lisbon, Portugal

- 1

- Followers

Sincere enthusiast Data Engineer that really loves what it does.Leonardo Rickli

Starting at$15

Duration1 week

Tags

AWS

Azure

Google Cloud Platform

Python

SQL

Cloud Infrastructure Architect

DevOps Engineer

Security Engineer

My enthusiasm into this wonderful data world is what sets me apart is my passion for data organization and cleanliness, reflected in meticulous attention to detail throughout the entire project lifecycle. From architecting streamlined cloud data architectures to implementing robust ETL pipelines and establishing rigorous data quality assurance frameworks, I am committed to delivering solutions that not only meet your business needs but also resonate with my enthusiasm for tidy and organized data structures.

What's included

Cloud Data Architecture Blueprint

- Description: A comprehensive blueprint outlining the architecture design for cloud-based data processing and storage, tailored to the client's specific needs and requirements.

- Format: Digital document (PDF or Word) detailing the architectural components, data flow diagrams, and technology stack recommendations.

- Quantity: 1 blueprint document.

- Revisions: Up to 2 rounds of revisions based on client feedback.

ETL Pipeline Implementation

- Description: Fully functional Extract, Transform, Load (ETL) pipeline deployed on the client's chosen cloud platform, integrating with their data sources and target systems for seamless data processing.

- Format: Cloud-based solution deployed on the client's cloud environment (e.g., GCP Dataflow, Azure Data Factory).

- Quantity: 1 implemented ETL pipeline.

- Revisions: Testing and debugging support provided for up to 1 week post-deployment.

Data Quality Assurance Framework

- Description: A robust framework for ensuring data quality throughout the data lifecycle, including data validation, cleansing, and error handling mechanisms, tailored to the client's data governance policies.

- Format: Documentation outlining the data quality framework, including code snippets, validation rules, and best practices.

- Quantity: 1 comprehensive data quality assurance framework document.

- Revisions: Up to 2 rounds of revisions to fine-tune the framework based on client feedback and evolving requirements.

FAQs

Cloud-based data engineering offers scalability, flexibility, and cost-efficiency, allowing organizations to scale their data processing capabilities on-demand, access a wide range of powerful data tools and services, and reduce infrastructure costs by paying only for what they use.

The duration of a data engineering project varies depending on factors such as project scope, complexity, and available resources. However, a typical project may take anywhere from a few weeks to several months to complete, with timelines determined during the initial scoping and planning phase.

Absolutely. I specialize in integrating with existing data infrastructure and tools to ensure seamless compatibility and minimal disruption to your operations. Whether you're using on-premises databases, legacy systems, or cloud-based platforms, I can adapt my solutions to integrate with your existing ecosystem effectively.

Leonardo's other services

$15