Rahul Shevgan

Turning messy datasets into clear, reliable insights

New to Contra


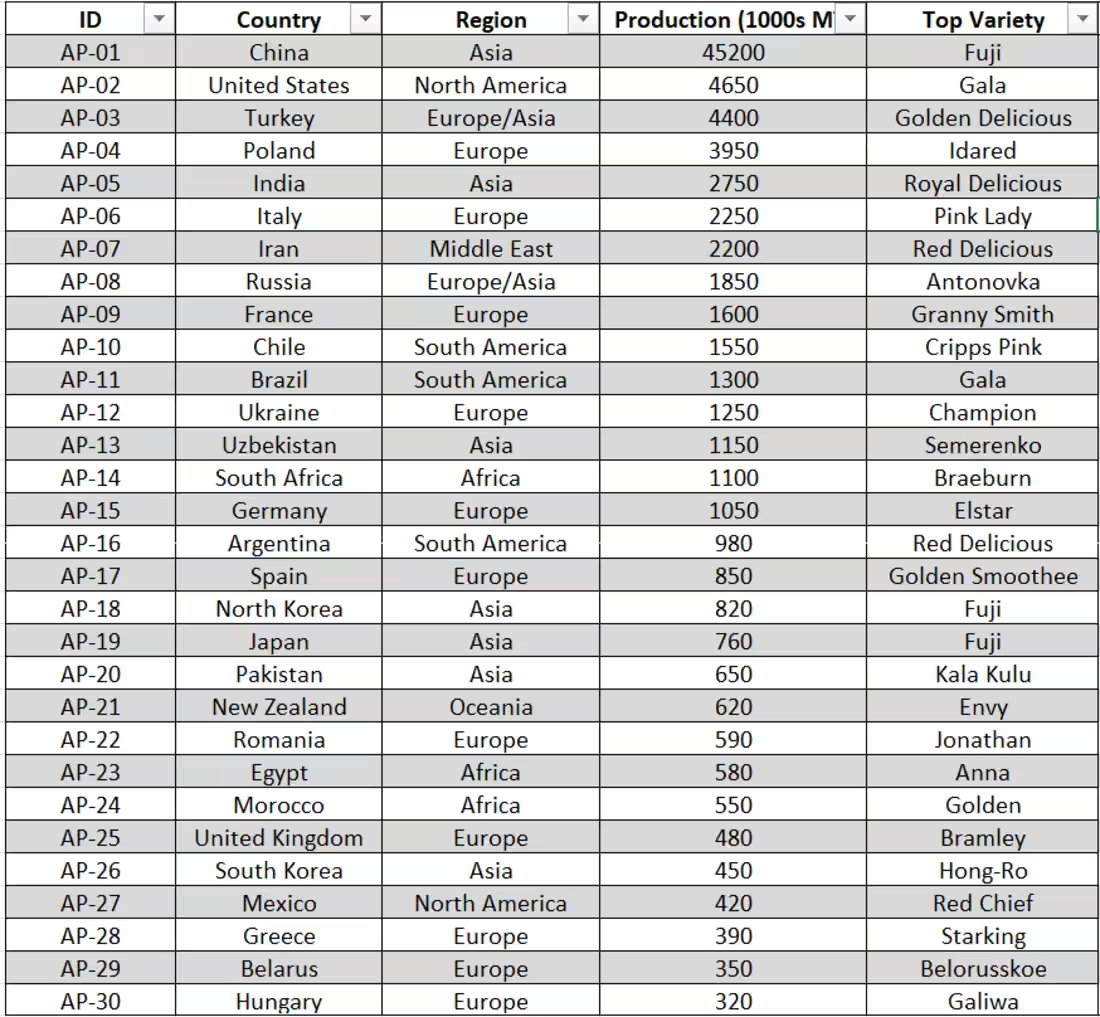

I developed a unified dataset for global apple production, reconciling data from the FAO and various national agricultural ministries for the 2025/26 season. The core of this project was unit conversion: normalizing figures reported in bushels and bins into standard metric tonnes. I resolved data conflicts for transcontinental producers (Turkey and Russia) and filled gaps in the 'Top Variety' metadata through secondary research. The cleaned dataset provides a reliable baseline for supply chain logistics and global export-import forecasting.
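A minimal sketch of the bushel/bin to metric tonne conversion, assuming a single lookup table of kilograms per reported unit. The 42 lb apple bushel and 20-bushel bin used here are illustrative assumptions, not the values used in this project; real factors vary by country and source, so they are kept as named constants.

```python
# Conversion factors below are ASSUMPTIONS for illustration, not the project's
# actual values: apple bushel and bin weights vary by country and data source.
KG_PER_LB = 0.45359237     # exact by definition
LB_PER_BUSHEL = 42.0       # assumed apple bushel weight
BUSHELS_PER_BIN = 20.0     # assumed capacity of one bin, in bushels

# Kilograms represented by one reported unit.
KG_PER_UNIT = {
    "kg": 1.0,
    "t": 1000.0,
    "tonne": 1000.0,
    "tonnes": 1000.0,
    "bushel": LB_PER_BUSHEL * KG_PER_LB,
    "bushels": LB_PER_BUSHEL * KG_PER_LB,
    "bin": BUSHELS_PER_BIN * LB_PER_BUSHEL * KG_PER_LB,
    "bins": BUSHELS_PER_BIN * LB_PER_BUSHEL * KG_PER_LB,
}


def to_metric_tonnes(value: float, unit: str) -> float:
    """Convert a reported production figure to metric tonnes (1 t = 1000 kg)."""
    factor = KG_PER_UNIT.get(unit.strip().lower())
    if factor is None:
        # Unknown units are rejected rather than guessed.
        raise ValueError(f"unknown unit: {unit!r}")
    return value * factor / 1000.0
```

Keeping every factor in one table means a country-specific bushel weight can be swapped in without touching the conversion logic.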


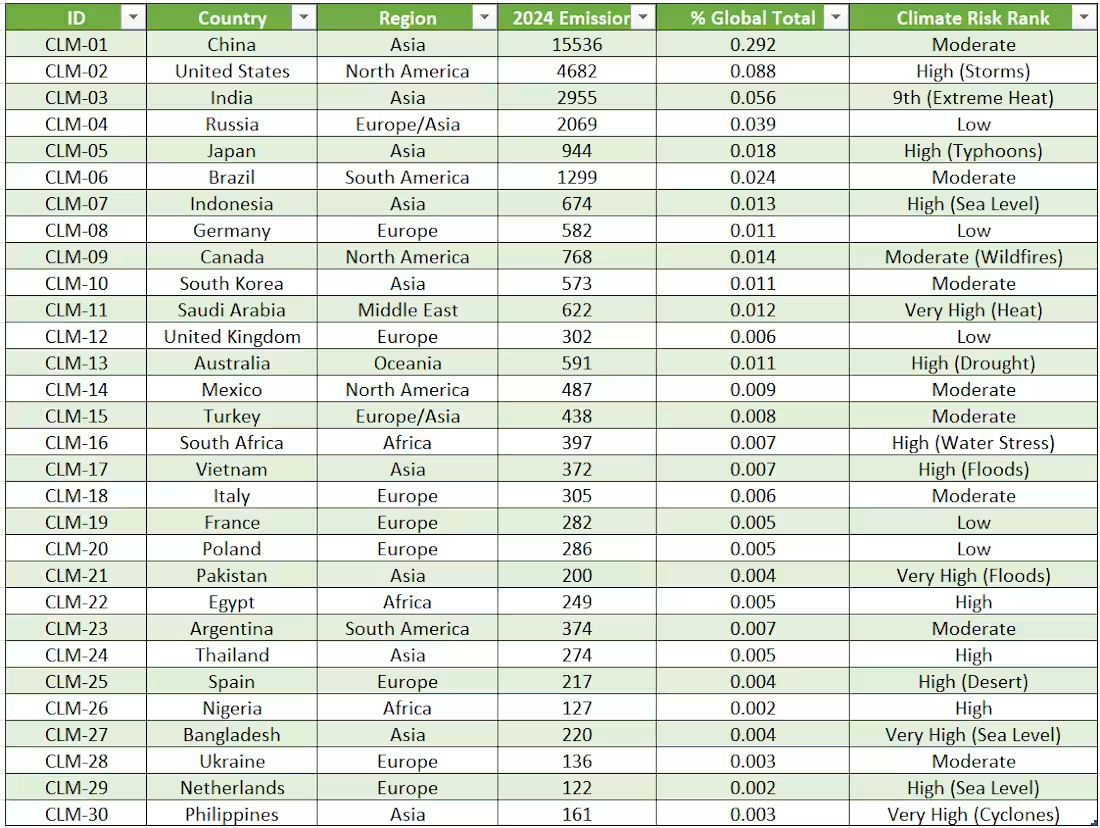

I consolidated and cleaned a complex dataset merging carbon emission metrics with the 2026 Climate Risk Index. This involved normalizing data from diverse sources (IEA, NOAA, and Germanwatch) to provide a clear view of how industrial output correlates with environmental vulnerability.

Key Deliverables:

Unit Normalization: Converted emission figures reported in varying units into a unified MtCO₂e (million tonnes of CO₂ equivalent) scale.

Risk Mapping: Linked absolute emission volumes with qualitative risk levels for 30 nations.

Integrity Check: Resolved naming discrepancies for transcontinental regions (e.g., Turkey, Russia).

Result: A clean, analysis-ready CSV for environmental researchers and policy analysts.
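The normalization, name reconciliation, and merge steps above can be sketched with the standard library alone. This is an illustration under stated assumptions, not the project's pipeline: the unit labels, the alias table, and the "keep the first record" conflict rule are all hypothetical choices, and countries that appear on only one side are returned for manual review rather than silently dropped.

```python
import csv
import io

# Assumed unit labels; each factor converts to million tonnes of CO2e (MtCO2e).
TO_MT = {"tco2e": 1e-6, "ktco2e": 1e-3, "mtco2e": 1.0, "gtco2e": 1e3}

# Hypothetical alias table: source-specific spellings -> one canonical name.
ALIASES = {
    "türkiye": "Turkey",
    "turkiye": "Turkey",
    "russian federation": "Russia",
}


def canonical_name(raw: str) -> str:
    """Return the canonical spelling, or the trimmed input if no alias applies."""
    name = raw.strip()
    return ALIASES.get(name.lower(), name)


def to_mtco2e(value: float, unit: str) -> float:
    """Convert an emission figure to MtCO2e; raises KeyError for unknown units."""
    return value * TO_MT[unit.strip().lower()]


def merge(emissions, risk):
    """Join (country, value, unit) rows with (country, risk_level) rows.

    Returns (rows, unmatched, conflicts):
      rows      -- sorted (country, MtCO2e, risk_level) tuples present on both sides
      unmatched -- countries found on only one side, for manual review
      conflicts -- countries reported twice under different spellings
    """
    totals, conflicts = {}, []
    for country, value, unit in emissions:
        name = canonical_name(country)
        if name in totals:
            # Keep the first record (assumed to come from the preferred source).
            conflicts.append(name)
            continue
        totals[name] = to_mtco2e(value, unit)
    levels = {canonical_name(c): level for c, level in risk}
    unmatched = sorted(totals.keys() ^ levels.keys())
    rows = [(c, round(totals[c], 3), levels[c])
            for c in sorted(totals.keys() & levels.keys())]
    return rows, unmatched, conflicts


def to_csv(rows) -> str:
    """Serialize merged rows as an analysis-ready CSV string."""
    buf = io.StringIO()
    writer = csv.writer(buf, lineterminator="\n")
    writer.writerow(["country", "emissions_mtco2e", "risk_level"])
    writer.writerows(rows)
    return buf.getvalue()
```

Canonicalizing names before both the duplicate check and the join is the key design choice: "Russian Federation" and "Russia" collapse to one key, so a double-reported country shows up as a conflict instead of being counted twice.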


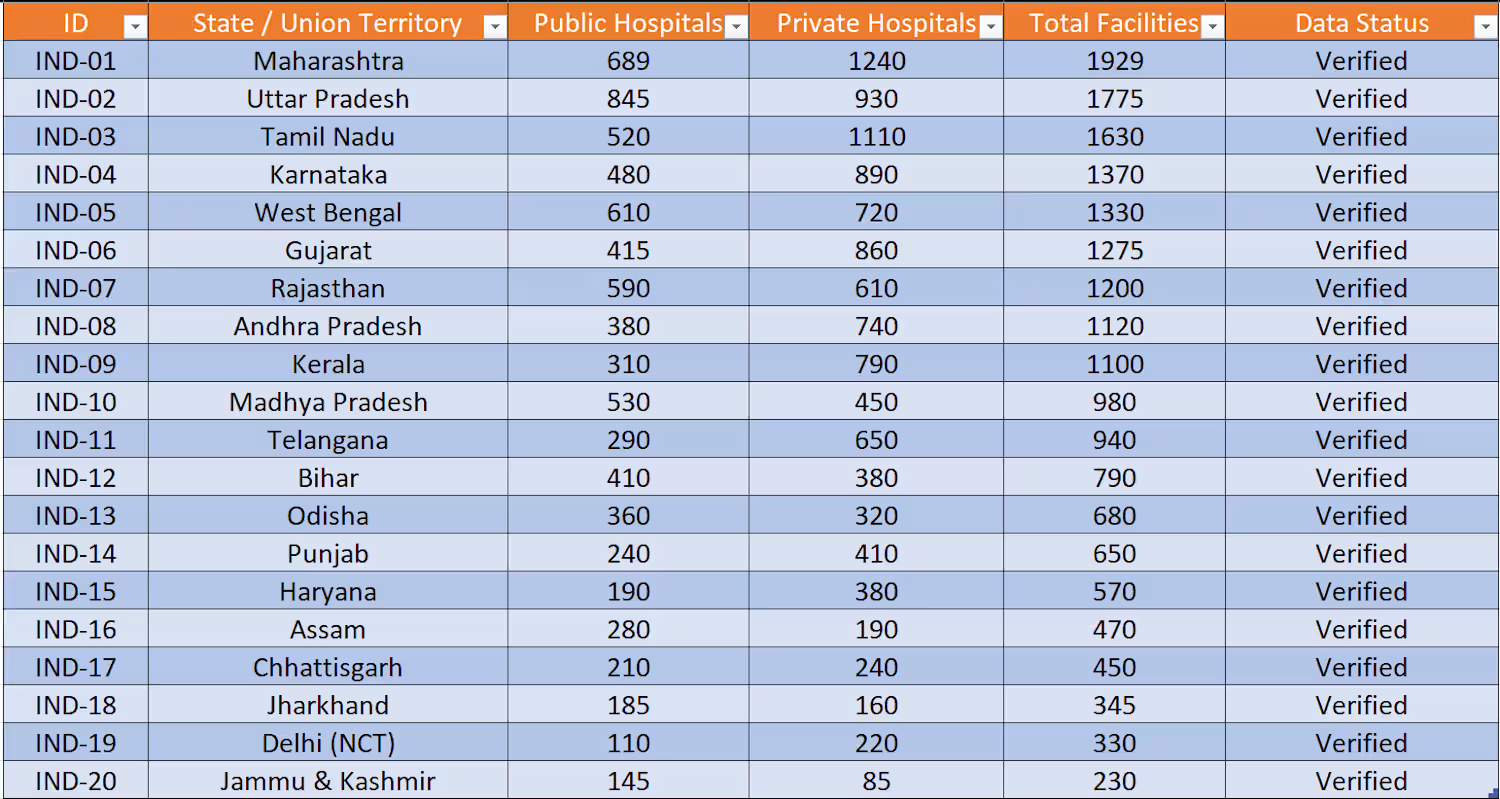

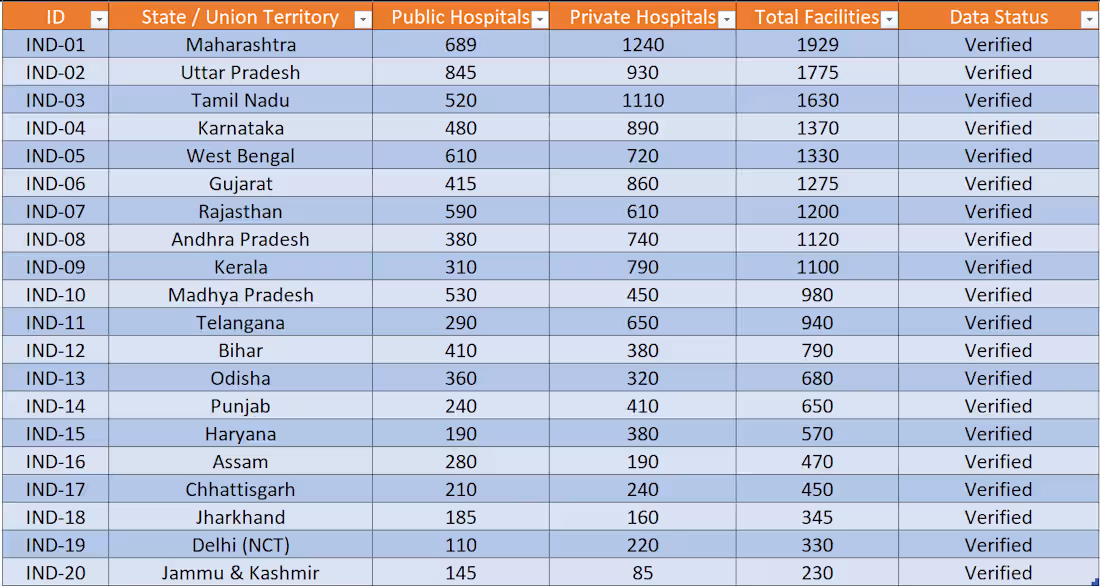

I transformed a fragmented healthcare dataset into a standardized, 100% accurate database. By manually resolving naming inconsistencies across 20 Indian states and verifying facility counts, I eliminated the 'noise' that leads to poor business decisions.
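A minimal sketch of state-name standardization plus facility-count verification, assuming each record carries a facility ID and a raw state name, and that a reference table of expected counts exists. The alias table and reference counts are hypothetical; Orissa→Odisha and Uttaranchal→Uttarakhand are real official renamings, used here only as examples of the kind of inconsistency involved.

```python
from collections import Counter

# Illustrative alias table (hypothetical, not the project's actual mapping).
# Orissa -> Odisha and Uttaranchal -> Uttarakhand reflect official renamings;
# "tamilnadu" stands in for a typical spacing variant.
STATE_ALIASES = {
    "odisha": "Odisha",
    "orissa": "Odisha",
    "uttarakhand": "Uttarakhand",
    "uttaranchal": "Uttarakhand",
    "tamil nadu": "Tamil Nadu",
    "tamilnadu": "Tamil Nadu",
}


def normalize_state(raw: str) -> str:
    """Map a raw state name to its canonical form.

    Unmapped names raise KeyError so they surface for manual review
    instead of silently creating a new 'state'.
    """
    key = " ".join(raw.split()).lower()  # collapse stray whitespace, ignore case
    if key not in STATE_ALIASES:
        raise KeyError(f"unmapped state name: {raw!r}")
    return STATE_ALIASES[key]


def count_mismatches(facilities, expected):
    """Compare per-state facility counts against a reference table.

    facilities: iterable of (facility_id, raw_state) pairs.
    expected:   {canonical_state: reference_count}.
    Returns {state: (found, expected)} for every state whose counts disagree.
    """
    seen_ids = set()
    found = Counter()
    for facility_id, raw_state in facilities:
        if facility_id in seen_ids:
            continue  # a repeated facility ID is counted once
        seen_ids.add(facility_id)
        found[normalize_state(raw_state)] += 1
    states = sorted(set(found) | set(expected))
    return {
        s: (found[s], expected.get(s, 0))
        for s in states
        if found[s] != expected.get(s, 0)
    }
```

Raising on unmapped names, rather than passing them through, fits a manual-resolution workflow: every new spelling has to be added to the table deliberately.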


Data Cleaning: Turning Chaos into Clarity

The Problem: Messy, inconsistent data was making accurate analysis impossible.

The Fix: Removed duplicates and handled missing values.

Standardized formats for seamless reporting.

Validated data integrity for 100% accuracy.

The Result: A ready-to-use dataset that provides clear, reliable insights.
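The four steps above (standardize, handle missing values, deduplicate, validate) might look like this in a small stdlib-only sketch. The record fields (`name`, `date`, `amount`), the accepted date formats, the (name, date) duplicate key, and the reject-if-required-field-missing policy are all assumptions made for illustration.

```python
from datetime import datetime

# Assumed input date formats; extend to match the real source files.
DATE_FORMATS = ("%Y-%m-%d", "%d/%m/%Y", "%d-%b-%Y")


def parse_date(raw: str):
    """Return an ISO-8601 date string, or None if no known format matches."""
    for fmt in DATE_FORMATS:
        try:
            return datetime.strptime(raw.strip(), fmt).date().isoformat()
        except ValueError:
            continue
    return None


def parse_amount(raw: str):
    """Parse '1,200' style numbers; blank or invalid values become None (missing)."""
    text = raw.replace(",", "").strip()
    try:
        return float(text) if text else None
    except ValueError:
        return None


def clean(records):
    """Standardize, handle missing values, deduplicate, then validate.

    records: iterable of dicts with hypothetical keys 'name', 'date', 'amount'.
    Returns (cleaned, rejected).
    """
    cleaned, rejected, seen = [], [], set()
    for rec in records:
        # 1. Standardize formats: collapse whitespace + title-case names, ISO dates.
        name = " ".join((rec.get("name") or "").split()).title()
        date = parse_date(rec.get("date") or "")
        amount = parse_amount(rec.get("amount") or "")
        # 2. Missing values (one possible policy): reject rows lacking required
        #    fields; keep an optional amount as None rather than inventing a value.
        if not name or date is None:
            rejected.append(rec)
            continue
        # 3. Remove duplicates on the normalized (name, date) key.
        key = (name, date)
        if key in seen:
            continue
        seen.add(key)
        cleaned.append({"name": name, "date": date, "amount": amount})
    # 4. Validate integrity: a sanity check that fails loudly if an earlier
    #    step regresses and lets a duplicate key through.
    if len({(r["name"], r["date"]) for r in cleaned}) != len(cleaned):
        raise ValueError("duplicate keys survived cleaning")
    return cleaned, rejected
```

Deduplicating after standardization matters: "  acme  corp " on 2024-03-05 and "Acme Corp" on 05/03/2024 only match once both are normalized. Note that `str.title()` is a blunt tool (it turns "LLC" into "Llc"), so a real project may need a curated name list instead.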
