Metropolis (1927) and the Labor of Generative AI

Fritz Lang’s Metropolis (1927) imagines a future cleaved in two, where the affluent rule from lofty skyscrapers over a subterranean caste of laborers. The class tension is so palpable that the invention of a ‘Maschinenmensch’ (a robot capable of work) upends the social order. The film’s notions of invisible labor are all too familiar to today’s audience, who will recognize in it metaphors for the outsourcing of labor to poverty-stricken nations in the Global South.

Metropolis begins by showing us the labor required to keep the lights on in this Eden-in-Art Deco: a subjugated populace of forlorn and helpless workers. ‘Shift change’ reads the first title card of the silent film, after which we see elevators full of workers plunge deep underground to the machines that run the city and relieve the previous 10-hour shift. Working conditions are so nightmarish that one deadly workstation, in a hallucinatory sequence, transforms into a pagan temple where captives (a metaphor for the laborers) are sacrificed to a cruel and ancient deity.

To further stress the importance of labor in the creation of modern-day marvels, the film harkens back to the past. One sequence reimagines the story of the Tower of Babel and applies it to 20th-century labor disputes. Told by Maria (a popular figure among the masses) during a clandestine meeting of workers, the parable explains that “those who conceived of the Tower of Babel could not build the Tower of Babel,” while, on the other hand, “the hands that built the Tower of Babel knew nothing of the dream of which the head that had conceived it had been fantasizing.” A title card reading ‘Babel’ starts dripping with blood as workers are shown dragging stones and slowly building the mythical structure. A fundamental disconnect existed between the alienated workers and their overlords or, as the film succinctly puts it: “the hymns of praise of one man became the curses of another.” Eventually, the workers take their revenge by destroying a genuine feat of human ingenuity. The basic political thesis of the film is then summed up by Maria in one aphorism: “the mediator between the head and the hands must be the heart!”

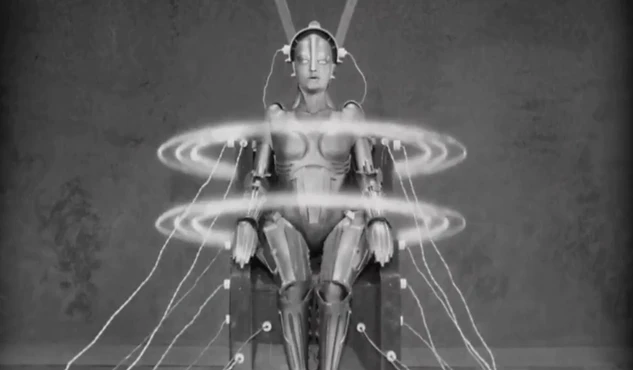

The city’s reliance on workers is shaken by the invention of the Maschinenmensch, a robot that can work tirelessly 24 hours a day, and the invention sows havoc in the city, albeit indirectly. The automaton is created by Rotwang (an archetypal evil scientist) to destroy Metropolis. After taking on Maria’s likeness, the Maschinenmensch incites workers to rise up and destroy the machines that keep the city functioning. The film thus associates this new invention with an unraveling of the social order, which is sure to happen when disharmony prevails between worker and capitalist. The creation of the robot, heralding the automation of all labor, brings ruin to a great city.

Metropolis shows that (post)modern-day anxiety about automation is not as recent as one may think. The inescapable conversations surrounding technologies loosely comparable to the Maschinenmensch, like ChatGPT and DALL-E, exemplify this anxiety.

Such programs, owned by Silicon Valley’s OpenAI, have been hailed, much like the Maschinenmensch, as replacements for writers, photographers and artists, but do they really annul the need for labor completely? It turns out that the pursuit of such software has recreated the same revolting conditions depicted in the film: subjugated masses toiling away for pennies. According to an investigation by TIME magazine, OpenAI contracted Sama, a US-based firm operating in Kenya, Uganda and India, to label and classify “toxic content,” so that ChatGPT can distinguish and filter out things like hate speech and violent or sexual content (Perrigo, 2023).

The nature of the work is traumatic. The investigation found that workers must label data describing child sexual abuse, bestiality, murder, suicide, torture, self-harm, and incest. For programs like ChatGPT to learn how not to indulge in such topics, they must first be taught to identify them. Scores of marginalized workers in Kenya, for example, are charged with this thankless task while being paid wages that range from $1.32 to $2 per hour (Perrigo, 2023). It should also be noted that Meta (previously Facebook) has hired content moderators via the same firm and tasked them with labeling disturbing videos under heavy surveillance. As a result, they reportedly suffer from a slew of mental health issues, including PTSD, anxiety, and depression (Williams, Miceli & Gebru, 2022).

It would seem that instead of replacing labor altogether, generative technology has perpetuated a pre-existing mode of labor abuse. This goes to show that Metropolis’s depiction of brutalized workers is still frighteningly accurate. Lang’s future, which is our present, still has the unacceptable conditions that plagued the early 20th century, only the Maschinenmensch is not nearly as impressive as he imagined. To put it simply, the Maschinenmensch of today is constantly being assembled, tweaked and improved by thousands of workers. As Lang predicted, labor remains central. Rather than being relevant only to typical blue-collar workers who languish in factories, rail yards and coal mines, the film now speaks directly to a whole slew of new jobs: data labelers and content moderators whose working conditions are just as cramped and just as poorly paid.[1]

But what of Metropolis’s main political thesis? Lang seems to hold out hope that labor and capitalists (‘the hands’ and ‘the head’) may eventually reconcile, a long shot at preserving what he considers genuine marvels of human ingenuity. That said, ChatGPT is nowhere near achieving anything resembling the Tower of Babel. Does the film even apply to such technologies if they don’t genuinely pioneer or reshape how industry functions? One may even contend that generative technology is merely cannibalizing pre-existing, copyrighted content and passing it off as original work.[2] If that is true, then, beyond its labor abuses, generative AI is not worth our intellectual attention, only our ridicule.

Notes

[1] See, for example: https://www.nytimes.com/2019/08/16/technology/ai-humans.html

[2] Concerns about such predatory practices are discussed in more depth here: https://www.nytimes.com/2023/02/13/technology/ai-art-generator-lensa-stable-diffusion.html

References

Perrigo, B. (2023). ‘OpenAI Used Kenyan Workers on Less Than $2 Per Hour to Make ChatGPT Less Toxic’. TIME. https://time.com/6247678/openai-chatgpt-kenya-workers/

Williams, A., Miceli, M., & Gebru, T. (2022). ‘The Exploited Labor Behind Artificial Intelligence’. NOEMA. https://www.noemamag.com/the-exploited-labor-behind-artificial-intelligence/

Posted Sep 4, 2024

Did a German film from the 1920s predict the philosophical questions behind AI?
