CaseLaw AI: Advanced Legal Research Platform Development


🚨 MASSIVE DATASET WARNING 🚨

This is NOT a typical web application. The data requirements are ENORMOUS:

| Component | Size | Description |
|---|---|---|
| Raw Dataset | 81GB | 1,000 parquet files from Hugging Face |
| Vector Storage | ~50GB | Qdrant database with 30M+ embeddings |
| SQLite Index | 6.3GB | Metadata and case classification database |
| Total Disk Space | ~150GB | Minimum required for full dataset |
| RAM Required | 64-128GB | 64GB minimum, 128GB+ recommended |
| Processing Time | 48-72 hours | On modern multi-core hardware |

DO NOT attempt to run this project without proper infrastructure!

Overview

CaseLaw AI is a sophisticated legal research platform that combines semantic search, vector databases, and AI-powered analysis to help legal professionals navigate through millions of court cases efficiently. Built with a modern tech stack including React, TypeScript, and Python, it provides an intuitive interface for searching, analyzing, and managing legal documents.

Features

Semantic Search: AI-powered search using OpenAI embeddings for contextual understanding

Advanced Filtering: Filter by jurisdiction, court level, date ranges, and case types

Real-time Results: Fast search results with relevance scoring

Case Management: Save cases, add notes, and track research history

Dark Mode: Full theme support for comfortable reading

Responsive Design: Works seamlessly on desktop and mobile devices

PDF Export: Generate formatted PDFs of case documents

Research Notes: Create and manage notes linked to specific cases

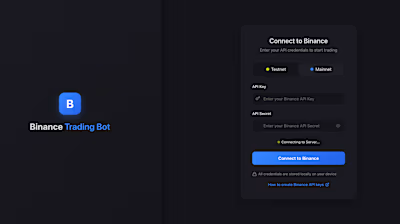

Screenshots: Home Page, Search Interface, Case Details, Filtering Options, User Features, Help System

Dataset

CaseLaw AI utilizes the comprehensive Caselaw Access Project dataset, containing:

6.8 million legal cases spanning 1662 to 2020

30+ million vector embeddings for semantic search

58 jurisdictions across the United States

3,064 unique courts

6.3GB SQLite index for efficient metadata retrieval

45GB+ Qdrant vector database for similarity search

Each case includes:

Full text of the court decision

Metadata (court, date, jurisdiction, citations)

Case type classification (Criminal, Civil, Administrative, Constitutional, Disciplinary)

Tech Stack

Frontend

React 18 with TypeScript

Vite for fast development and building

TanStack Query for data fetching and caching

Tailwind CSS with shadcn/ui components

React Router for navigation

Lucide Icons for consistent iconography

Backend

FastAPI for high-performance Python API

Qdrant vector database for semantic search

SQLite for metadata and full-text search

OpenAI for embeddings generation

PyPDF2 for PDF processing

Pydantic for data validation

Data Pipeline Architecture

System Architecture

Case Types Supported

Criminal: Cases involving violations of criminal law and prosecution by the state

Civil: Disputes between private parties including torts, contracts, and property disputes

Administrative: Cases involving government agencies and regulatory matters

Constitutional: Cases interpreting constitutional provisions and rights

Disciplinary: Cases involving professional misconduct and disciplinary proceedings

Prerequisites

Node.js 18.x or higher

Python 3.9 or higher

Docker and Docker Compose

Minimum 64GB RAM (recommended 128GB for production)

500GB+ SSD storage for databases

NVIDIA GPU (optional, for faster embeddings)

Installation

1. Clone the Repository

2. Set up the Qdrant Vector Database

Run with:

3. Backend Setup

4. Frontend Setup

5. Environment Configuration

Create .env files in both the frontend and backend directories:

Backend .env:

Frontend .env:

Data Ingestion

Processing Pipeline

The data ingestion pipeline handles millions of legal documents. Warning: This process takes 48-72 hours and requires ~150GB of disk space!

Key Processing Scripts

parallel_processor.py downloads and processes the entire dataset from Hugging Face:

Downloads 1,000 parquet files in parallel

Generates embeddings using OpenAI's text-embedding-3-small

Handles token limits and memory optimization

Saves embeddings as pickle files for later upload

Runtime: 48-72 hours on high-end hardware
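The batching and token-limit handling described for parallel_processor.py can be sketched roughly as below. This is not the project's code: the 4-characters-per-token heuristic, the 8,000-token budget, the batch size, and the helper names (truncate_for_model, embed_cases, save_embeddings, openai_embed_fn) are all assumptions for illustration. The embedding call is injected so the batching logic runs without network access; openai_embed_fn shows how it could be wired to the OpenAI Python SDK (v1+).

```python
import pickle
from pathlib import Path
from typing import Callable, Iterator, Sequence

# Signature of any function that turns a batch of texts into vectors.
EmbedFn = Callable[[list[str]], list[list[float]]]

# Assumptions (hypothetical values): budget kept under text-embedding-3-small's
# 8,191-token input limit, and ~4 characters per token as a rough estimate.
MAX_INPUT_TOKENS = 8000
CHARS_PER_TOKEN = 4


def truncate_for_model(text: str) -> str:
    """Cut text to an approximate token budget using a character-count heuristic."""
    return text[: MAX_INPUT_TOKENS * CHARS_PER_TOKEN]


def batched(items: Sequence[str], size: int) -> Iterator[list[str]]:
    """Yield consecutive slices of at most `size` items."""
    for start in range(0, len(items), size):
        yield list(items[start : start + size])


def embed_cases(texts: Sequence[str], embed_fn: EmbedFn, batch_size: int = 100) -> list[list[float]]:
    """Embed texts batch by batch so each request stays small and bounded."""
    vectors: list[list[float]] = []
    for batch in batched(texts, batch_size):
        vectors.extend(embed_fn([truncate_for_model(t) for t in batch]))
    return vectors


def save_embeddings(vectors: list[list[float]], path: Path) -> None:
    """Persist vectors as a pickle file for a later upload step."""
    with path.open("wb") as fh:
        pickle.dump(vectors, fh)


def openai_embed_fn(model: str = "text-embedding-3-small") -> EmbedFn:
    """Build an EmbedFn backed by the OpenAI Python SDK (imported lazily)."""
    from openai import OpenAI

    client = OpenAI()  # reads OPENAI_API_KEY from the environment

    def embed(batch: list[str]) -> list[list[float]]:
        response = client.embeddings.create(model=model, input=batch)
        return [item.embedding for item in response.data]

    return embed
```

Character-based truncation is only an approximation; a tokenizer such as tiktoken would give exact counts at the cost of another dependency.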

create_sqlite_index.py creates the SQLite metadata database:

Processes all parquet files to extract metadata

Classifies cases into types (Criminal, Civil, etc.)

Creates optimized indexes for fast filtering

Output: 6.3GB SQLite database
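A minimal sketch of what such a metadata index could look like, using only the standard library. The schema, column names, and especially the keyword rules in classify_case are hypothetical; the project's actual classification method is not described here. The indexes mirror the filter dimensions the UI exposes (jurisdiction, date range, case type).

```python
import sqlite3

# Hypothetical keyword rules, checked in order; the first match wins.
CASE_TYPE_KEYWORDS = [
    ("Disciplinary", ("disciplinary", "disbarment", "attorney grievance")),
    ("Constitutional", ("constitution", "first amendment", "due process clause")),
    ("Criminal", ("state v.", "people v.", "united states v.", "indictment")),
    ("Administrative", ("commission", "board of", "agency")),
]


def classify_case(name: str, text: str = "") -> str:
    """Return a case type from keyword rules; default to Civil when none match."""
    haystack = f"{name} {text}".lower()
    for case_type, keywords in CASE_TYPE_KEYWORDS:
        if any(keyword in haystack for keyword in keywords):
            return case_type
    return "Civil"


def build_index(conn: sqlite3.Connection, rows: list[tuple[str, str, str, str, str]]) -> None:
    """Create the metadata table and filter indexes, then load classified rows.

    Each row is (case_id, name, court, jurisdiction, decision_date).
    """
    conn.executescript(
        """
        CREATE TABLE IF NOT EXISTS cases (
            case_id TEXT PRIMARY KEY,
            name TEXT NOT NULL,
            court TEXT,
            jurisdiction TEXT,
            decision_date TEXT,  -- ISO-8601, so string order equals date order
            case_type TEXT
        );
        CREATE INDEX IF NOT EXISTS idx_jurisdiction_date ON cases(jurisdiction, decision_date);
        CREATE INDEX IF NOT EXISTS idx_case_type ON cases(case_type);
        """
    )
    conn.executemany(
        "INSERT OR REPLACE INTO cases VALUES (?, ?, ?, ?, ?, ?)",
        [(cid, name, court, jur, date, classify_case(name)) for cid, name, court, jur, date in rows],
    )
    conn.commit()
```

A composite (jurisdiction, decision_date) index lets SQLite answer "jurisdiction X between dates A and B" with a single range scan.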

upload_vectors.py uploads processed embeddings to Qdrant:

Reads the pickle files produced by parallel_processor.py

Uploads in batches to avoid overwhelming Qdrant

Handles retry logic and error recovery

Runtime: 2-4 hours depending on hardware
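The batching and retry behavior can be sketched as follows. The batch size, retry count, and backoff delays are assumptions, not values from upload_vectors.py. The upsert function is injected so the retry logic is testable offline; qdrant_upsert_fn shows one way to wrap qdrant-client's upsert call with PointStruct points.

```python
import time
from typing import Callable, Sequence

# A point is (id, vector, payload).
Point = tuple[int, list[float], dict]
UpsertFn = Callable[[list[Point]], None]


def upload_in_batches(
    points: Sequence[Point],
    upsert_fn: UpsertFn,
    batch_size: int = 256,      # assumed value
    max_retries: int = 5,       # assumed value
    base_delay: float = 1.0,    # seconds before the first retry
    sleep: Callable[[float], None] = time.sleep,
) -> int:
    """Upload points batch by batch, retrying a failed batch with exponential backoff.

    Returns the number of points uploaded; re-raises the last error once a
    batch has failed max_retries times.
    """
    uploaded = 0
    for start in range(0, len(points), batch_size):
        batch = list(points[start : start + batch_size])
        for attempt in range(max_retries):
            try:
                upsert_fn(batch)
                break
            except Exception:
                if attempt == max_retries - 1:
                    raise
                sleep(base_delay * 2**attempt)  # 1s, 2s, 4s, ... with the defaults
        uploaded += len(batch)
    return uploaded


def qdrant_upsert_fn(url: str, collection: str) -> UpsertFn:
    """Adapter for the qdrant-client package (imported lazily)."""
    from qdrant_client import QdrantClient
    from qdrant_client.models import PointStruct

    client = QdrantClient(url=url)

    def upsert(batch: list[Point]) -> None:
        client.upsert(
            collection_name=collection,
            points=[PointStruct(id=pid, vector=vec, payload=payload) for pid, vec, payload in batch],
        )

    return upsert
```

Because an upsert with the same point IDs overwrites rather than duplicates, retrying a batch that partially succeeded is safe.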

Data Volume Considerations

Raw Data: ~500GB of court documents (81GB compressed)

Processed Data: ~100GB of parquet files

Vector Database: ~45GB in Qdrant

SQLite Database: ~10GB of metadata

Processing Time: 48-72 hours on modern hardware

Usage

Starting the Application

Start the backend:

Start the frontend:

Access the application at http://localhost:5173

API Endpoints

POST /api/v1/search - Semantic search with filters

GET /api/v1/cases/{case_id} - Retrieve a specific case

GET /api/v1/filters - Get available filter options

POST /api/v1/export/pdf - Generate a PDF export

Search Query Examples
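A hedged sketch of calling the search endpoint from Python with only the standard library. The backend address (http://localhost:8000, uvicorn's default port) and the JSON field names (query, filters, limit, jurisdiction, case_type, date_from, date_to) are assumptions; the backend's Pydantic request model defines the real schema.

```python
import json
import urllib.request
from typing import Optional

API_BASE = "http://localhost:8000"  # assumed backend address


def build_search_request(
    query: str,
    jurisdiction: Optional[str] = None,
    case_type: Optional[str] = None,
    date_from: Optional[str] = None,
    date_to: Optional[str] = None,
    limit: int = 10,
) -> urllib.request.Request:
    """Build (but do not send) a POST /api/v1/search request; field names are hypothetical."""
    candidate_filters = {
        "jurisdiction": jurisdiction,
        "case_type": case_type,
        "date_from": date_from,
        "date_to": date_to,
    }
    # Omit unset filters rather than sending nulls.
    filters = {key: value for key, value in candidate_filters.items() if value is not None}
    body = json.dumps({"query": query, "filters": filters, "limit": limit}).encode("utf-8")
    return urllib.request.Request(
        f"{API_BASE}/api/v1/search",
        data=body,
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def search(query: str, **kwargs) -> dict:
    """Send the search request and decode the JSON response."""
    with urllib.request.urlopen(build_search_request(query, **kwargs), timeout=30) as resp:
        return json.load(resp)
```

For example, search("warrantless vehicle search", case_type="Criminal", date_from="1970-01-01") would send a natural-language query restricted to criminal cases decided since 1970, assuming the backend accepts those filter names.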

Performance Optimization

Vector Search Optimization

HNSW Index: Configured with m=16, ef_construction=100

Quantization: Scalar quantization (float32 to int8) reduces vector memory by 75%

Batch Processing: Process queries in batches of 100

Caching: Redis cache for frequent queries
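The HNSW and quantization settings above could be expressed with qdrant-client roughly as follows, alongside the arithmetic behind the 75% figure. Assumptions: 1536-dimensional vectors (the default output size of text-embedding-3-small), cosine distance, a collection named "cases", and quantile=0.99; none of these are confirmed by the project.

```python
EMBEDDING_DIM = 1536  # default output size of text-embedding-3-small


def raw_vector_bytes(num_vectors: int, dim: int = EMBEDDING_DIM, bytes_per_value: int = 4) -> int:
    """Bytes for the vector values alone (excludes the HNSW graph, payloads, and overhead)."""
    return num_vectors * dim * bytes_per_value


def create_cases_collection(url: str = "http://localhost:6333") -> None:
    """Create a collection with HNSW m=16, ef_construct=100, and int8 scalar quantization."""
    from qdrant_client import QdrantClient, models  # lazy import; requires qdrant-client

    client = QdrantClient(url=url)
    client.create_collection(
        collection_name="cases",  # assumed name
        vectors_config=models.VectorParams(size=EMBEDDING_DIM, distance=models.Distance.COSINE),
        # Qdrant calls the ef_construction parameter "ef_construct".
        hnsw_config=models.HnswConfigDiff(m=16, ef_construct=100),
        quantization_config=models.ScalarQuantization(
            scalar=models.ScalarQuantizationConfig(
                type=models.ScalarType.INT8,
                quantile=0.99,    # clip extreme values before mapping to int8
                always_ram=True,  # keep the quantized copy in RAM
            )
        ),
    )
```

For 30M vectors, raw_vector_bytes gives 184.32 GB as float32 versus 46.08 GB as int8 (bytes_per_value=1), which is where the 75% reduction comes from; the HNSW graph and payloads add to both figures.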

Database Optimization

SQLite: Full-text search indexes on case_name, summary

Partitioning: Date-based partitioning for faster queries

Connection Pooling: Max 50 concurrent connections
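Full-text search on case_name and summary could be sketched with SQLite's FTS5 extension. This assumes the Python sqlite3 build includes FTS5 (most modern builds do); the table name cases_fts and the helper functions are hypothetical.

```python
import sqlite3


def create_fts(conn: sqlite3.Connection) -> None:
    """Create an FTS5 table; case_id is stored but not tokenized."""
    conn.execute(
        "CREATE VIRTUAL TABLE IF NOT EXISTS cases_fts "
        "USING fts5(case_id UNINDEXED, case_name, summary)"
    )


def add_cases(conn: sqlite3.Connection, rows: list[tuple[str, str, str]]) -> None:
    """Insert (case_id, case_name, summary) rows into the full-text index."""
    conn.executemany("INSERT INTO cases_fts(case_id, case_name, summary) VALUES (?, ?, ?)", rows)
    conn.commit()


def search_fts(conn: sqlite3.Connection, query: str, limit: int = 10) -> list[str]:
    """Return case IDs matching an FTS5 query, best BM25 match first."""
    rows = conn.execute(
        "SELECT case_id FROM cases_fts WHERE cases_fts MATCH ? ORDER BY rank LIMIT ?",
        (query, limit),
    ).fetchall()
    return [row[0] for row in rows]
```

FTS5's query syntax also supports column filters, so a query like "case_name:miranda" searches only case names.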

Development

Running Tests

Code Style

Backend: Black formatter, flake8 linting

Frontend: ESLint with Prettier

Contributing

Fork the repository

Create a feature branch

Commit your changes

Push to the branch

Create a Pull Request

Deployment

Production Considerations

Use dedicated Qdrant cluster or Qdrant Cloud

PostgreSQL instead of SQLite for better concurrency

Redis for caching layer

CDN for static assets

Load balancer for API servers

Docker Deployment

Troubleshooting

Common Issues

Out of Memory: Increase Docker memory limits

Slow Searches: Check Qdrant index configuration

Missing Cases: Verify data pipeline completion

API Timeouts: Adjust FastAPI timeout settings

Debug Mode

Enable debug logging:

Dataset Limitations

Time Range: Cases from 1662 to 2020

Jurisdictions: 58 jurisdictions (federal and state)

Completeness: Some jurisdictions have more comprehensive coverage than others

Processing: Some cases may have OCR artifacts or formatting inconsistencies

Quick Start Options

Option A: Use Pre-built Data (Recommended)

If you want to avoid the 48-72 hour processing time, contact the repository owner for:

Pre-populated Qdrant backup (~50GB)

SQLite database file (case_lookup.db, 6.3GB)

Instructions for data restoration

Option B: Build From Scratch

Follow the Data Ingestion section above. Ensure you have:

150GB+ free disk space

64GB+ RAM

Stable internet connection

48-72 hours for processing

License

This project is licensed under the MIT License - see the LICENSE file for details.

Acknowledgments

OpenAI for embeddings API

Qdrant team for vector database

shadcn/ui for component library

Caselaw Access Project for the dataset

All contributors and legal data providers

⚠️ CRITICAL WARNING: This application requires MASSIVE computational resources. The full dataset includes over 6.8 million legal cases, generating 30+ million vector embeddings. Ensure your infrastructure can handle:

Minimum 64GB RAM (128GB recommended)

500GB+ fast SSD storage

Modern multi-core CPU (16+ cores recommended)

Stable internet for API calls

Sufficient OpenAI API credits for embeddings

This is NOT a typical web application; it's a data-intensive platform designed for serious legal research. DO NOT attempt to run this without proper infrastructure!

Disclaimer

This project is a technical demonstration of semantic search capabilities using publicly available legal data from the Caselaw Access Project. It was developed as part of a consulting project with the client's encouragement to make legal research tools more accessible. No proprietary client data or confidential information is included in this repository.


Posted May 25, 2025

Developed CaseLaw AI, a legal research platform with AI and semantic search.
