Credit project

Responsibilities:

• Analyzed data using the Hadoop components Hive, Pig, and HBase, writing queries in each.

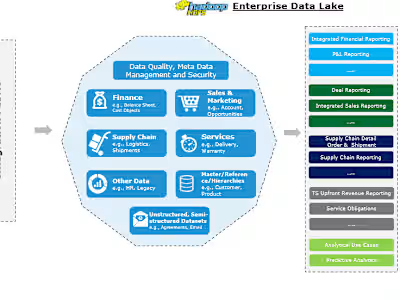

• Loaded and transformed large sets of structured, semi-structured, and unstructured data using Hadoop/Big Data concepts.

• Involved in loading data from the UNIX file system to HDFS.

• Responsible for creating Hive tables, loading data, and writing Hive queries.

• Imported data from various data sources, performed transformations using Hive and MapReduce/Apache Spark, and loaded the data into HDFS.

• Extracted data from an Oracle database into HDFS using Sqoop.

• Loaded data from web servers and Teradata using Sqoop and the Spark Streaming API.

• Utilized the Spark Streaming API to stream data from various sources. Optimized existing Scala code and improved cluster performance.

• Experience tuning Spark application settings such as batch interval time, level of parallelism, and memory to improve processing time and efficiency.

• Tech Stack:

Hadoop 2.x · HDFS · Spark SQL · Eclipse · Apache Kafka · Apache Sqoop · Apache Spark · Hive · Linux

Posted Feb 24, 2023

Analyzed and loaded the data using Sqoop and Hive. Created tables in the Hive data warehouse to draw meaningful insights from the data, then processed the data.
