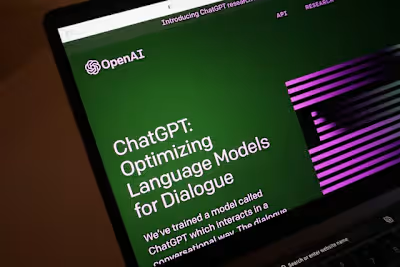

Navigating the OpenAI Playground to understand its capabilities

The Playground is OpenAI's web-based interface for experimenting with its language models: you type a prompt, adjust a handful of settings, and see the model's output right away. OpenAI also provides documentation and example prompts alongside it to help you get started with AI development and research.

To get the most out of the Playground, it helps to understand how it works and what each of its settings does.

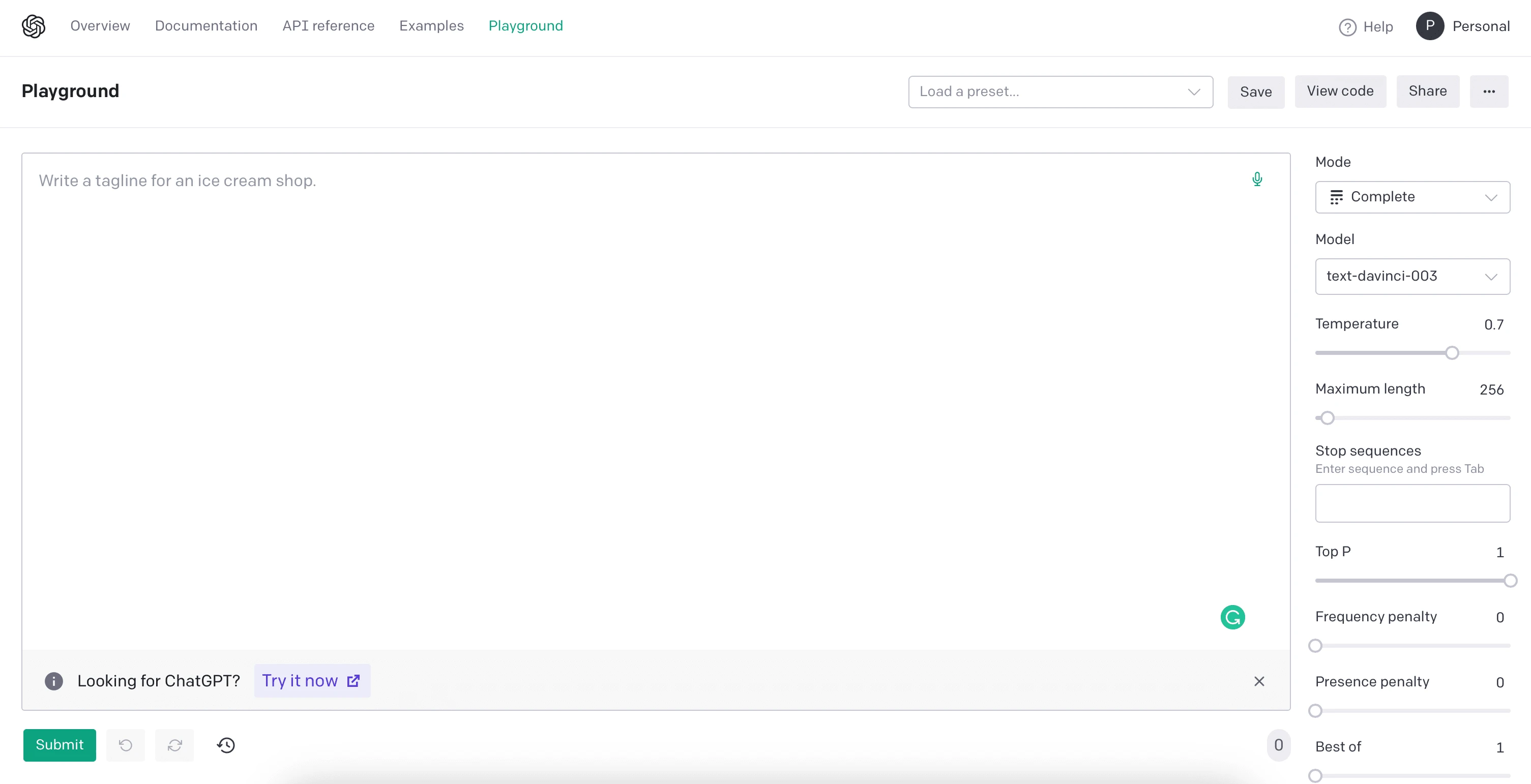

This is what the interface looks like when you land on the Playground page.

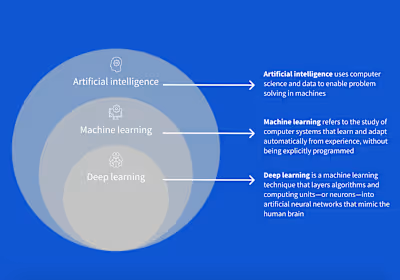

Normally, building a predictive writing tool like ChatGPT or GPT-4 requires training a machine learning model on a large corpus of text. Once trained, the model generates text from a seed phrase you provide, using the patterns it learned from the training data to complete it.

Thankfully, OpenAI has already done this training for you. The Playground gives you access to a range of pretrained models, including the GPT-3, ChatGPT, and GPT-4 families, through one streamlined interface where you can explore the models and tune their settings for your specific use case.

How to use the Playground

Specificity is key

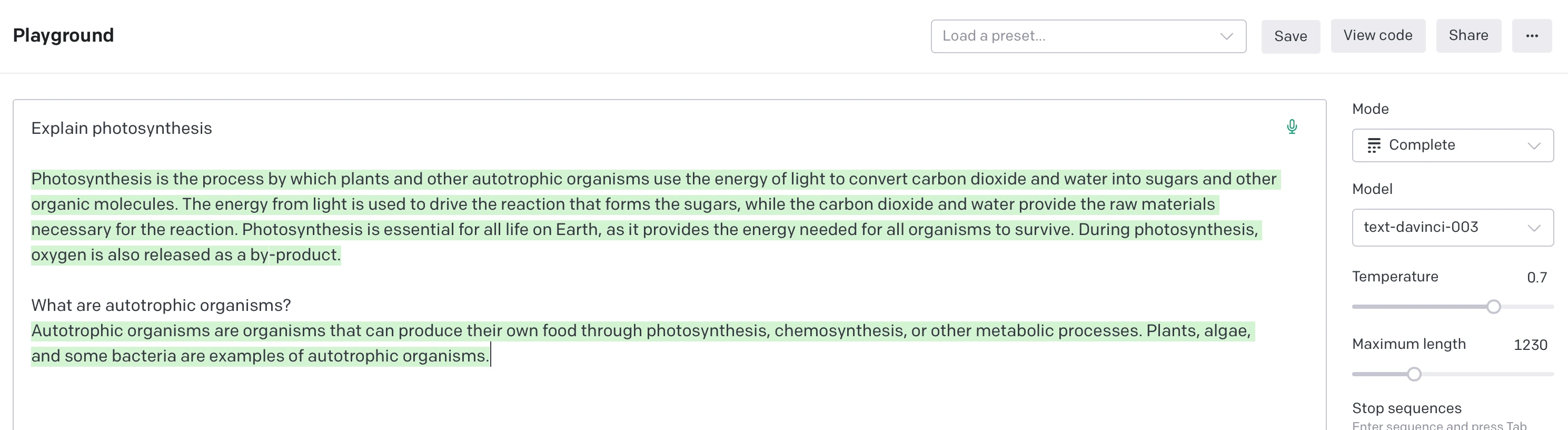

Because the Playground can handle so many different tasks, it pays to be clear and specific about the one you want. Precise instructions produce better results, and showing the model what you want, for example with one or two sample inputs and outputs, usually works better than only describing it.

Starting with an instruction rather than a question also tends to produce fuller responses. For instance, "explain X" typically yields a more detailed answer than asking a question about X. From there, you can refine the prompt or ask follow-up questions to get more specific responses.

To learn more about prompt engineering and how to optimize your prompts, refer to the prompt design section in the OpenAI documentation.
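The "show, don't just tell" advice can be sketched as a small prompt builder. This is a minimal illustration, not an official OpenAI utility: the function name `build_few_shot_prompt`, the `Input:`/`Output:` labels, and the sample slogans are all assumptions made for the example.

```python
def build_few_shot_prompt(instruction, examples, new_input):
    """Assemble a prompt that states the task and then demonstrates it."""
    lines = [instruction, ""]
    for source, target in examples:
        lines.append(f"Input: {source}")
        lines.append(f"Output: {target}")
        lines.append("")
    # End on an open "Output:" line so the model continues the pattern.
    lines.append(f"Input: {new_input}")
    lines.append("Output:")
    return "\n".join(lines)


prompt = build_few_shot_prompt(
    "Rewrite each product name as a short, friendly slogan.",
    [
        ("Solar lantern", "Light that follows the sun."),
        ("Trail shoes", "Grip for every path."),
    ],
    "Insulated bottle",
)
print(prompt)
```

Pasting the printed text into the Playground gives the model both the instruction and two worked demonstrations to imitate.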

What are presets?

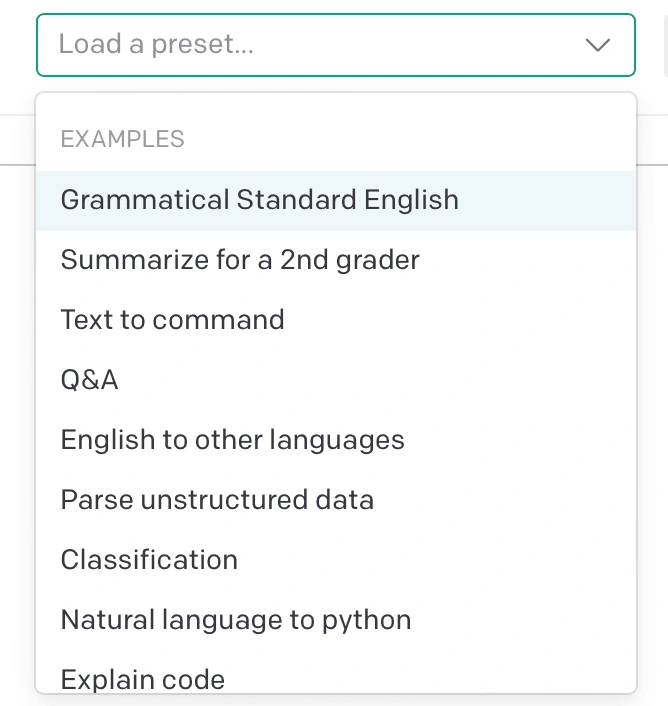

If you're struggling to generate ideas, you can try exploring the "Load a preset" drop-down menu located in the top-right corner. OpenAI has already included numerous pre-made prompts that you can utilize by simply clicking on them to insert them into your project. To access additional presets, visit https://beta.openai.com/examples.

Saving presets

Have you crafted a custom prompt with unique settings that you're particularly fond of? If so, you can effortlessly save your settings as presets by selecting the Save button and assigning it a name and description. This will enable you to use your preset settings again in the future, as well as share them with fellow OpenAI users.

To share a preset, all you need to do is click on the Share button, which will generate a shareable link that you can copy and paste to share with others.

Playing with the Settings

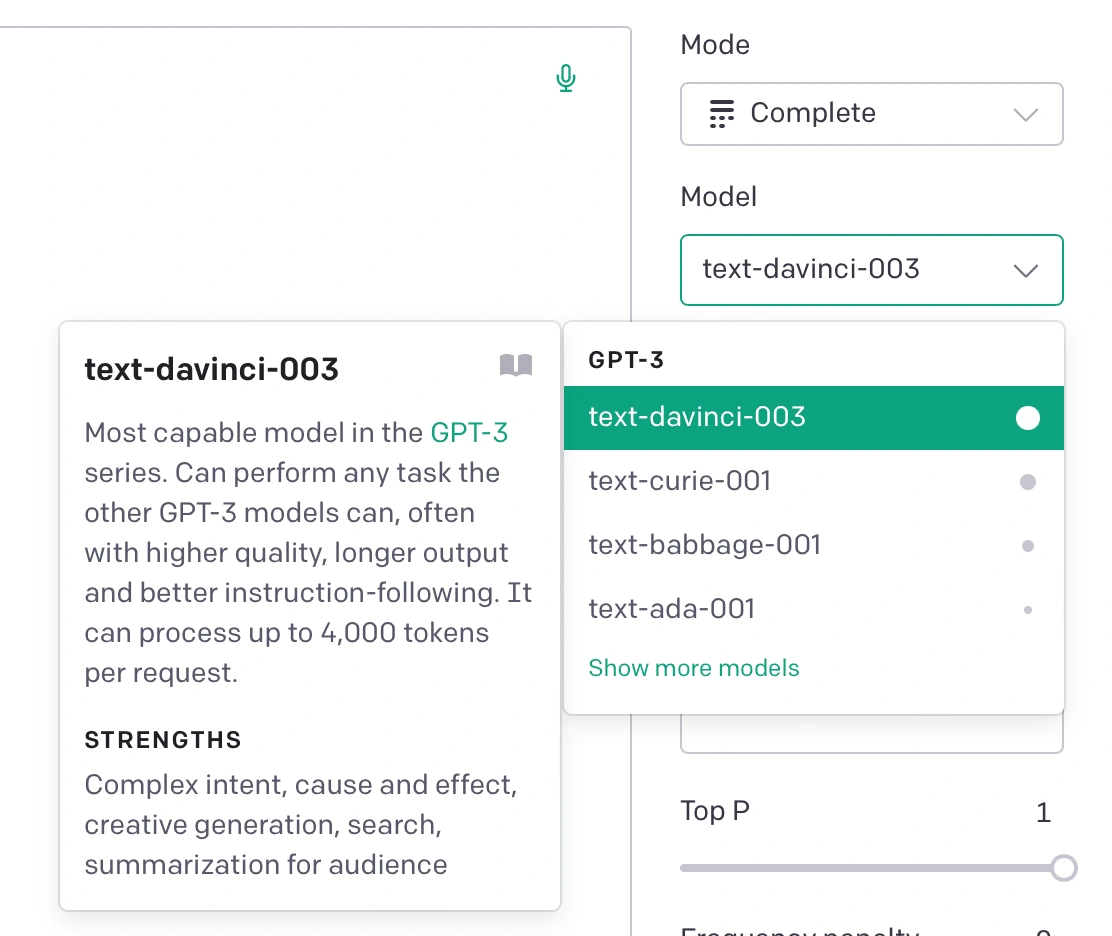

Mode

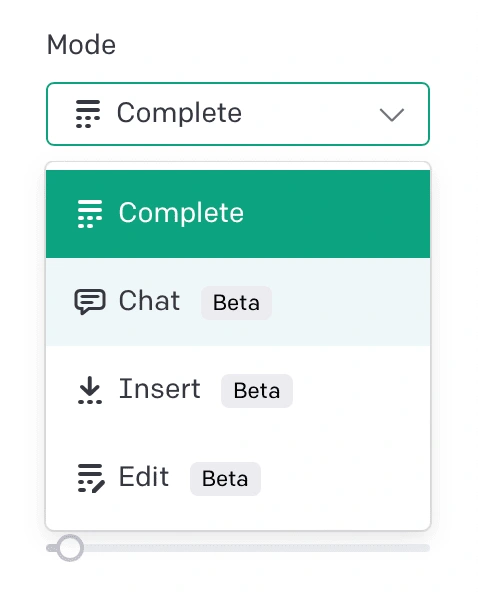

The Mode setting provides you with the option to choose how you want the system to respond. You can opt to have it respond to your prompts, insert new text into existing content, or edit the text that you've pasted into the text box.

Model

You can select which model you wish to communicate with using the Model options. The default model for the Completion mode is text-davinci-003, the most capable model available in that mode. The other models are less capable, but they are generally faster and cost less per token. You can hover over each model to read a summary of its capabilities.

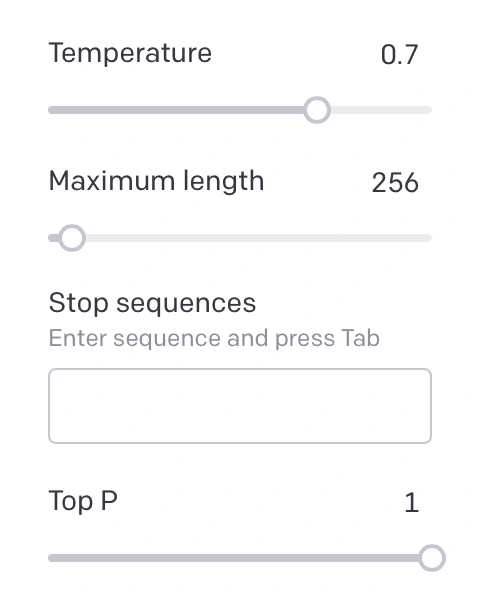

Temperature

You can use the Temperature slider to control the level of "randomness" in the output generated by the system. Lowering the temperature makes the model favor the most probable next tokens, so its output becomes more predictable and repeatable. This can be beneficial if your prompt has only one correct answer.

However, if you're looking for something more unique, such as when generating business ideas, you can increase the temperature to introduce more variety in the responses.
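The effect of temperature can be illustrated with a temperature-scaled softmax, which is the standard way sampling temperature is described. This is a conceptual sketch only: the scores are made up, and it is not a view into how OpenAI implements sampling internally.

```python
import math


def softmax_with_temperature(logits, temperature):
    """Turn raw token scores into probabilities, scaled by temperature."""
    if temperature <= 0:
        raise ValueError("temperature must be positive in this sketch")
    scaled = [score / temperature for score in logits]
    peak = max(scaled)  # subtract the max for numerical stability
    weights = [math.exp(s - peak) for s in scaled]
    total = sum(weights)
    return [w / total for w in weights]


# Hypothetical scores for three candidate next tokens.
logits = [2.0, 1.0, 0.5]
low = softmax_with_temperature(logits, 0.2)
high = softmax_with_temperature(logits, 1.0)
print([round(p, 3) for p in low])
print([round(p, 3) for p in high])
```

At the lower temperature almost all of the probability lands on the top-scoring token, while at the higher temperature the alternatives keep a meaningful share.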

Maximum length

The Maximum length slider sets an upper limit on how much text the system may generate. The limit is measured in tokens rather than words, and it is a cap, not a target: the model can stop earlier, and a response that reaches the limit is simply cut off. If you want the system to write a 500-word essay, for example, set the limit comfortably above 500 tokens, since a typical English word takes more than one token.
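Maximum length is counted in tokens, so a word target has to be converted before you set the slider. The sketch below uses the rough rule of thumb that one token is about three quarters of an English word. That ratio is an approximation and an assumption here; real token counts depend on the text and the model's tokenizer.

```python
import math

WORDS_PER_TOKEN = 0.75  # assumed rule of thumb for English text, not an exact figure


def estimate_tokens_for_words(word_count, words_per_token=WORDS_PER_TOKEN):
    """Roughly estimate how many tokens a response of word_count words needs."""
    return math.ceil(word_count / words_per_token)


print(estimate_tokens_for_words(500))
```

Under that assumption a 500-word essay needs roughly 667 tokens, so it makes sense to leave some headroom above that figure.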

Stop sequences

Stop Sequences can be employed to prompt the model to halt at a specific point, such as the conclusion of a sentence or a list. This feature can be used to prevent the "speaker" (AI or human) from speaking twice consecutively or to generate a list with a predetermined number of items.
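The behavior of a stop sequence can be mimicked locally by cutting a piece of text at the first stop sequence found. The helper name `truncate_at_stop` and the sample text are hypothetical; the real model stops generating at that point rather than trimming afterwards, but the visible result is the same.

```python
def truncate_at_stop(text, stop_sequences):
    """Cut text at the earliest stop sequence; the stop text itself is dropped."""
    cut = len(text)
    for stop in stop_sequences:
        if not stop:
            continue  # ignore empty stop strings
        index = text.find(stop)
        if index != -1:
            cut = min(cut, index)
    return text[:cut]


raw = " Sure, here is a summary.\nHuman: thanks\nAI: You're welcome."
print(truncate_at_stop(raw, ["\nHuman:", "\nAI:"]))
```

Using `"\nHuman:"` as a stop sequence keeps the model from writing the human's next line, and a stop sequence such as `"4."` would end a numbered list after three items.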

Top P

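The Top P slider controls nucleus sampling, an alternative to Temperature for managing randomness. At each step, the model considers only the most likely tokens whose combined probability reaches the Top P value. Lowering the slider narrows the choice to the most probable tokens and makes the generated text more focused, while raising it toward 1 admits less likely tokens and makes the text more varied. It is usually best to adjust either Temperature or Top P rather than both; a common approach is to leave Temperature at 1 and tune Top P.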

It's essential to remember that the Temperature and top P settings regulate the level of determinacy (not necessarily creativity or ingenuity) in the model's response generation. If you're seeking a response that has only one correct answer, then these settings should be lower. Conversely, if you're aiming for more diverse responses, then they should be higher.
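Nucleus (Top P) filtering can be sketched in a few lines: keep the most likely tokens until their combined probability reaches the threshold, then renormalize. The distribution below is invented for illustration, and the code is a textbook-style sketch rather than OpenAI's implementation.

```python
def top_p_filter(probabilities, top_p):
    """Keep the smallest set of most likely tokens whose total probability reaches top_p."""
    ranked = sorted(probabilities.items(), key=lambda item: item[1], reverse=True)
    kept, cumulative = [], 0.0
    for token, prob in ranked:
        kept.append((token, prob))
        cumulative += prob
        if cumulative >= top_p:
            break
    # Renormalize so the surviving probabilities sum to 1.
    return {token: prob / cumulative for token, prob in kept}


# Hypothetical next-token distribution.
candidates = {"blue": 0.5, "clear": 0.3, "falling": 0.15, "purple": 0.05}
print(top_p_filter(candidates, 0.5))
print(top_p_filter(candidates, 1.0))
```

With a threshold of 0.5 only the single most likely token survives, whereas a threshold of 1.0 leaves every candidate in play.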

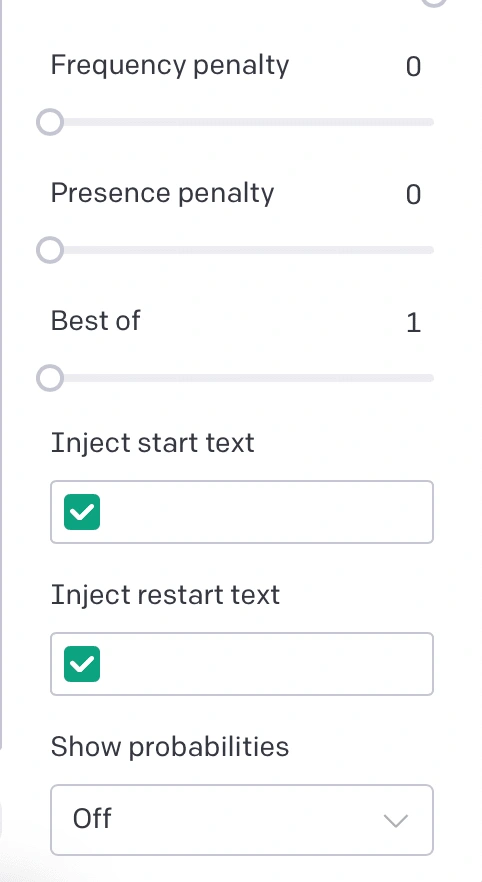

Frequency and Presence penalties

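You can use the Frequency and Presence penalties to reduce the likelihood of the system repeating itself. The Frequency penalty lowers a token's chances in proportion to how many times it has already appeared in the text, which discourages verbatim repetition. The Presence penalty applies a flat reduction to any token that has appeared at least once, which nudges the model toward new words and topics.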
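OpenAI's API documentation describes these penalties as subtracting from a token's score a term proportional to how often the token has appeared (frequency) plus a flat term if it has appeared at all (presence). The sketch below follows that description; the function name, the token scores, and the penalty values are illustrative assumptions.

```python
from collections import Counter


def apply_penalties(logits, generated_tokens, frequency_penalty, presence_penalty):
    """Lower the score of tokens that already appear in the generated text."""
    counts = Counter(generated_tokens)
    adjusted = {}
    for token, score in logits.items():
        count = counts[token]
        seen = 1.0 if count > 0 else 0.0
        # Frequency term grows with every repeat; presence term is flat once seen.
        adjusted[token] = score - count * frequency_penalty - seen * presence_penalty
    return adjusted


# Hypothetical scores and generation history.
logits = {"great": 2.0, "good": 1.5, "fine": 1.0}
history = ["great", "great", "good"]
adjusted = apply_penalties(logits, history, frequency_penalty=0.5, presence_penalty=0.4)
print(adjusted)
```

In this example the unused token "fine" keeps its score and overtakes the two tokens that were already used.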

Best of

The Best of option has the model generate multiple responses to a query behind the scenes. The Playground then selects the most probable one and displays it. Keep in mind that every candidate response consumes tokens, even though only one is shown, so a high Best of value can use up a significant number of tokens. Use it judiciously.
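The selection step can be sketched as picking the candidate with the highest average log probability per token, which is how OpenAI's API documentation describes the `best_of` parameter. The candidate texts and log probabilities below are made up, and `pick_best` is a hypothetical helper.

```python
def pick_best(candidates):
    """Return the completion with the highest average log probability per token."""
    def mean_logprob(candidate):
        logprobs = candidate["token_logprobs"]
        return sum(logprobs) / len(logprobs)

    return max(candidates, key=mean_logprob)


# Hypothetical completions with invented per-token log probabilities.
candidates = [
    {"text": "Paris.", "token_logprobs": [-0.1, -0.2]},
    {"text": "It is Paris, I think.", "token_logprobs": [-0.9, -0.4, -0.3, -1.2, -0.8, -0.5]},
    {"text": "Lyon.", "token_logprobs": [-2.3, -0.2]},
]
best = pick_best(candidates)
tokens_generated = sum(len(c["token_logprobs"]) for c in candidates)
print(best["text"], tokens_generated)
```

Only one completion is returned, but the tokens of all three candidates count toward usage, which is why this setting can be costly.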

Inject start text

When requesting a response from the model, we often need to type the same prefix by hand each time, such as a speaker label. With the Inject start text setting, we can specify text that is automatically appended after our input before it is sent to the model.

Inject restart text

The Inject restart text option in the sidebar automatically inserts some text after the model's response, so we can use it to type the prefix for the next input on our behalf.
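The two inject options can be pictured as string concatenation around each model call. The sketch below simulates one submission offline: `submit_turn` and `fake_model` are hypothetical stand-ins, and the `Human:`/`AI:` labels are just one possible choice of prefixes.

```python
def submit_turn(transcript, user_input, start_text, restart_text, complete):
    """Simulate one Playground submission with injected start and restart text."""
    prompt = transcript + user_input + start_text  # start text is appended before sending
    response = complete(prompt)
    return prompt + response + restart_text  # restart text primes the next turn


def fake_model(prompt):
    # Stand-in for the real model so the sketch runs offline.
    return " Hello! How can I help?"


transcript = "The following is a conversation with an AI assistant.\n\nHuman: "
transcript = submit_turn(transcript, "Hi there", "\nAI:", "\nHuman: ", fake_model)
print(transcript)
```

After the call the transcript ends with a fresh `Human: ` label, so the next message can be typed straight away.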

Show probabilities

The last option in the settings sidebar is Show probabilities, a debugging option that lets you see why certain tokens were selected. The darker the background of a word, the more likely that word was to be chosen. If you click a word, you will see a list of the words that were considered for that position in the text. When you set this option to Least likely, the colorization works in reverse, with the darker backgrounds assigned to words that were selected despite being unlikely choices. If you set the option to Full spectrum, both the most and least likely words are colorized, with green tones for the most likely words and red tones for the least likely.
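Language model APIs commonly report token likelihoods as natural-log probabilities, which can be converted into percentages with an exponential. The sketch below assumes that representation; the tokens and values are invented, and `to_percent` is a hypothetical helper.

```python
import math


def to_percent(logprob):
    """Convert a natural-log probability into a percentage."""
    return 100.0 * math.exp(logprob)


# Hypothetical alternatives for one token position, with made-up log probabilities.
alternatives = {" sunny": -0.22, " cloudy": -1.90, " purple": -6.50}
ranked = sorted(alternatives.items(), key=lambda item: item[1], reverse=True)
for token, logprob in ranked:
    print(f"{token!r}: {to_percent(logprob):.1f}%")
```

A log probability close to zero corresponds to a near-certain choice, and increasingly negative values correspond to increasingly unlikely ones.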

Conclusion

The Playground is one of the easiest ways to try out the latest technology and models OpenAI has to offer. If the model isn't producing the output you want, double-check the settings first, because different settings can produce significantly different outputs.
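One way to double-check settings is to write them down as a request payload and validate the numeric ranges. The parameter names below are those of OpenAI's legacy Completions API, and the ranges are the ones given in its documentation. The `check_settings` helper is a hypothetical sketch, it covers only a few parameters, and nothing here sends a request.

```python
import json

# Documented ranges for a few legacy Completions API parameters (not exhaustive).
RANGES = {
    "temperature": (0.0, 2.0),
    "top_p": (0.0, 1.0),
    "frequency_penalty": (-2.0, 2.0),
    "presence_penalty": (-2.0, 2.0),
}


def check_settings(settings):
    """Return a list of human-readable problems found in a settings dict."""
    problems = []
    for name, (low, high) in RANGES.items():
        value = settings.get(name)
        if value is not None and not (low <= value <= high):
            problems.append(f"{name}={value} is outside [{low}, {high}]")
    if settings.get("max_tokens", 1) < 1:
        problems.append("max_tokens must be at least 1")
    return problems


settings = {
    "model": "text-davinci-003",
    "prompt": "Explain what a stop sequence is.",
    "temperature": 0.7,
    "max_tokens": 256,
    "top_p": 1,
    "frequency_penalty": 0,
    "presence_penalty": 0,
    "best_of": 1,
    "stop": ["\nHuman:"],
}
print(check_settings(settings) or "settings look plausible")
print(json.dumps(settings, indent=2))
```

Keeping a record like this next to each experiment makes it easier to see which setting changed when two runs produce very different outputs.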


Posted Apr 7, 2023

A comprehensive guide to the OpenAI Playground