Technical Article on Role Prompting in Prompt Engineering

AI Automated CI/CD Error Handling Pipeline

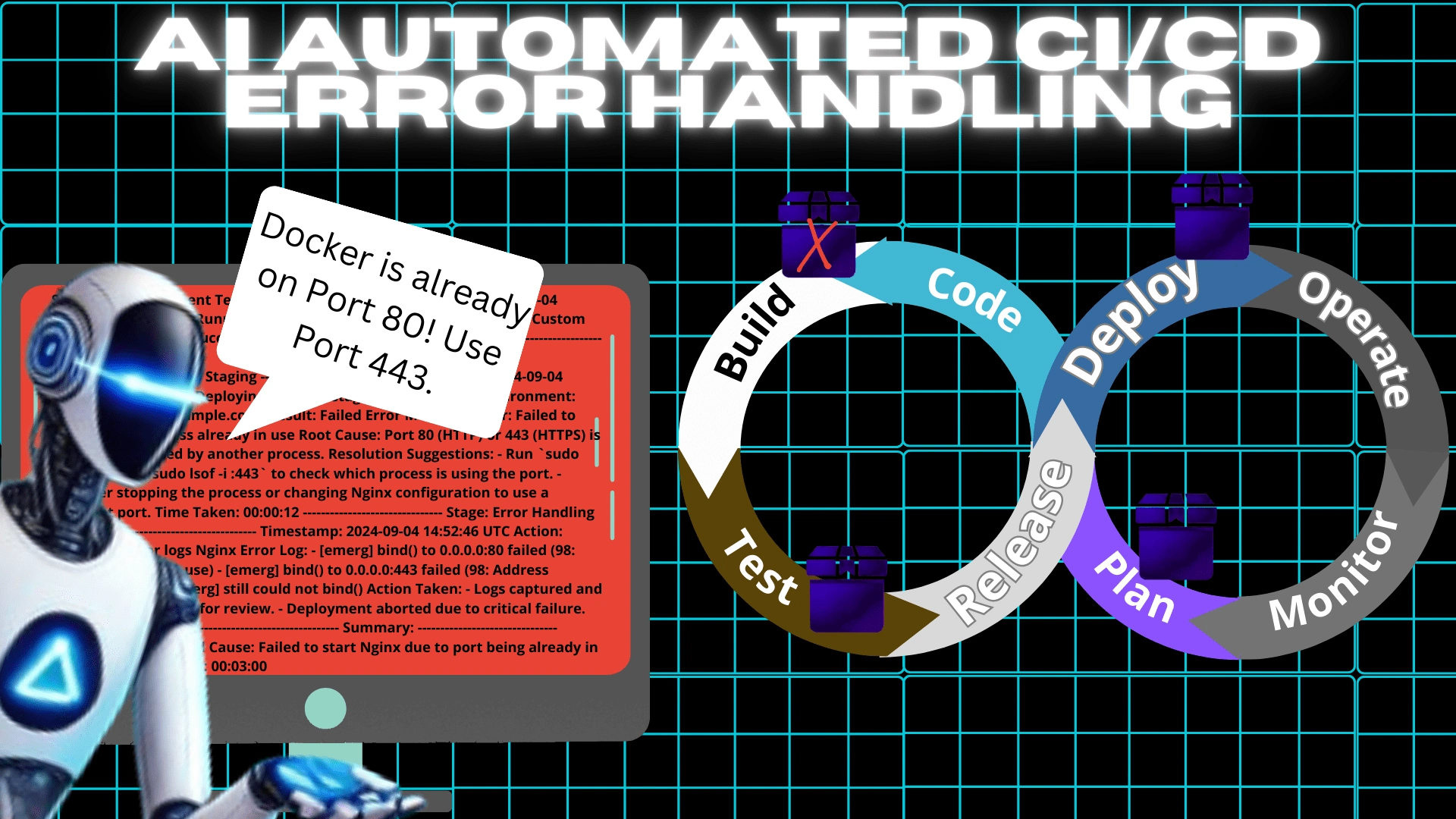

We're living through a once-in-a-lifetime shift as artificial intelligence restructures industries. The ability to fine-tune AI interactions through prompt engineering is a mission-critical skill for developers and technologists, and its impact on your day-to-day DevOps functions will only grow.

How do we use AI to make our jobs easier? The answer is surprisingly methodical, and as reproducible as other aspects of the development cycle. This multi-part guide outlines specific steps for getting effective results when working with LLMs (Large Language Models). Strategic communication with AI can dramatically enhance the functionality of LLM-driven CI/CD automation, by up to 295%.

The Role of AI in CI/CD Automation

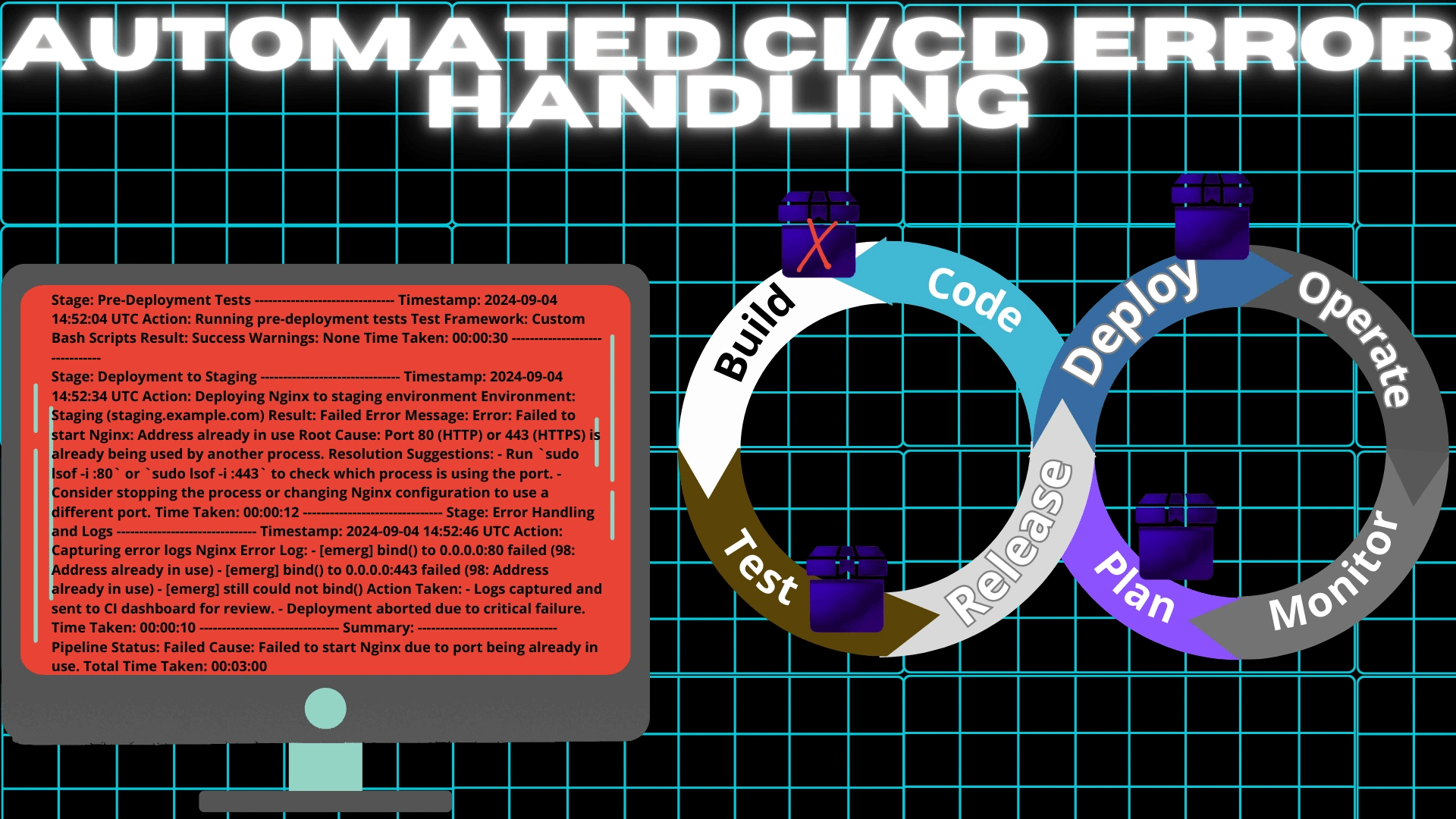

For this series, we present the use case of automated error handling in Continuous Integration (CI) pipelines, an application that can save you significant time.

Use Case

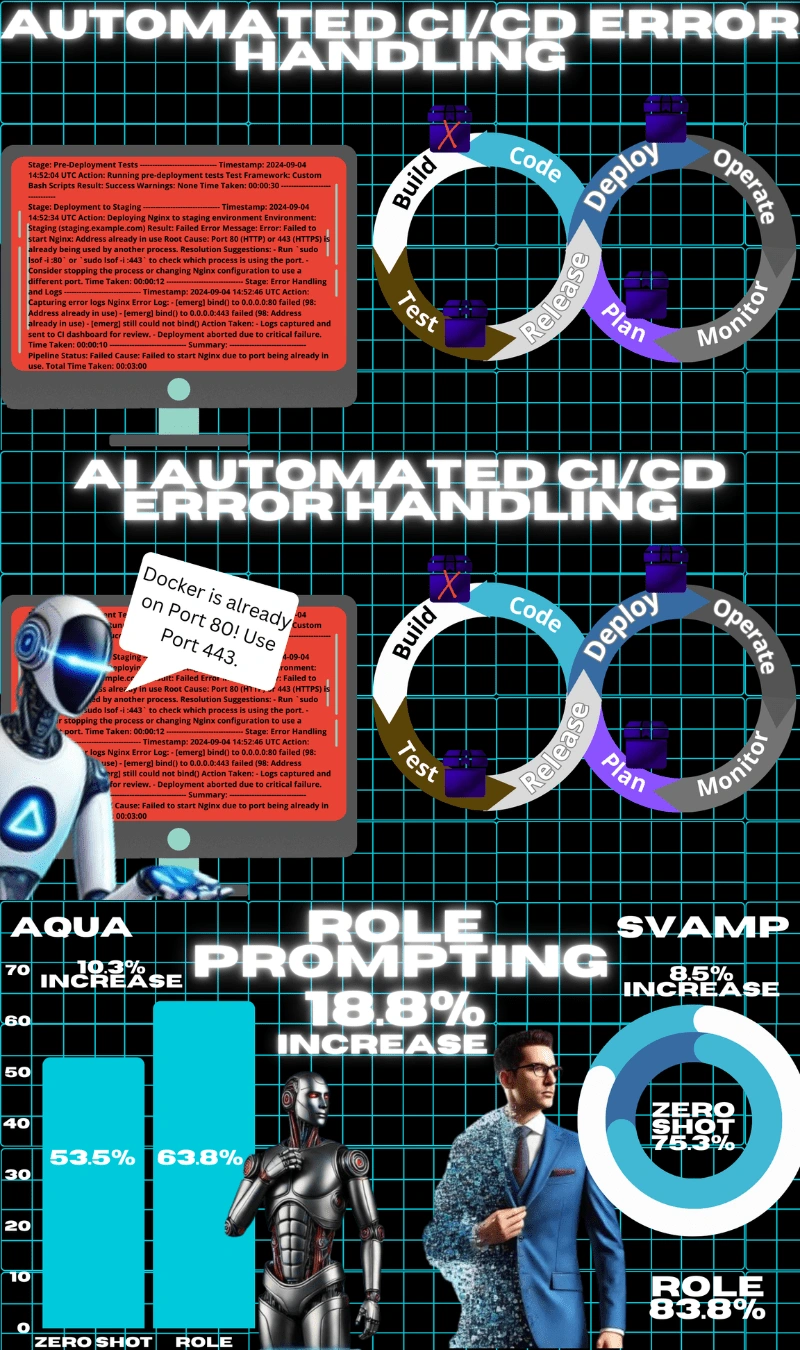

Automated CI/CD Error Handling Pipeline

This approach could speed up development cycles through more efficient error handling and improve scalability and manageability in complex pipelines.

We will use this example as we explore the different methods to increase your accuracy. It is important to note that a truly autonomous, AI-driven error-handling process has some drawbacks.

Human oversight remains integral and involves:

Proper planning

Strategic execution

Ongoing project management

However, integrating AI into your error-handling process can:

Aid in pattern recognition

Allow you to work with large amounts of data

Help you detect the root cause of errors much quicker
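One way to picture the pattern-recognition step is a small pre-filter that pulls likely error lines out of a CI log before anything is sent to an LLM. The sketch below is illustrative only: the regular expression, the `extract_error_lines` helper, and the sample log are assumptions made for this example and do not come from any specific CI tool.

```python
import re
from typing import List

# Assumption: which keywords mark an "error" line is a choice made for this sketch.
ERROR_PATTERN = re.compile(r"\b(error|failed|exception|traceback)\b", re.IGNORECASE)


def extract_error_lines(log_text: str, context: int = 1) -> List[str]:
    """Return lines matching ERROR_PATTERN plus `context` lines on each side."""
    lines = log_text.splitlines()
    keep = set()
    for i, line in enumerate(lines):
        if ERROR_PATTERN.search(line):
            start = max(0, i - context)
            end = min(len(lines), i + context + 1)
            keep.update(range(start, end))
    # Preserve the original order of the log.
    return [lines[i] for i in sorted(keep)]


# Hypothetical CI log used only for illustration.
sample_log = """\
Step 1/4: checkout ... ok
Step 2/4: install dependencies ... ok
Step 3/4: run tests
ERROR: test_login failed with AssertionError
Step 4/4: deploy skipped
"""

snippet = extract_error_lines(sample_log)
print("\n".join(snippet))
```

Trimming the log this way keeps the prompt short and focused, while a human reviewer can still open the full log when the model's diagnosis looks doubtful.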

Introduction to Prompt Engineering

McKinsey defines prompt engineering as "the practice of designing inputs for AI tools that will produce optimal outputs." [1] With generative AI, prompt engineering uses natural language to communicate with an LLM and produce increasingly predictable and reproducible results. The more effective you are at prompt engineering, the more accurately your program will run.

Zero-Shot Prompting - Baseline Metric

If you've sat down to play with ChatGPT, typed in questions the way you would type them into Google, and received responses, you have done the baseline form of prompt engineering. When you treat the AI like a search engine and get an answer, that is called zero-shot prompting. Zero-shot prompting is when an AI is given a task with neither topic-specific training nor output examples.

Example:

"Analyze incoming error messages."

Zero-shot prompting is less accurate than the more advanced methods covered throughout this series. I use zero-shot prompting as the foundational benchmark for evaluating the accuracy of other prompting techniques like role prompting.
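As a rough illustration of the baseline, a zero-shot request can be represented as a single user message with no role and no examples. The role/content message layout below follows a shape many chat-style LLM APIs accept, but the `build_zero_shot_messages` helper and the sample log line are assumptions made for this sketch, and no particular provider is implied.

```python
from typing import Dict, List


def build_zero_shot_messages(error_log: str) -> List[Dict[str, str]]:
    """Baseline: the bare task instruction plus the raw log, nothing else."""
    prompt = f"Analyze incoming error messages.\n\n{error_log}"
    return [{"role": "user", "content": prompt}]


# Hypothetical log line used only for illustration.
messages = build_zero_shot_messages("ERROR: test_login failed with AssertionError")
for message in messages:
    print(message["role"], "->", message["content"])
```

Keeping the baseline this plain makes it easier to attribute any later change in accuracy to the prompting technique being tested.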

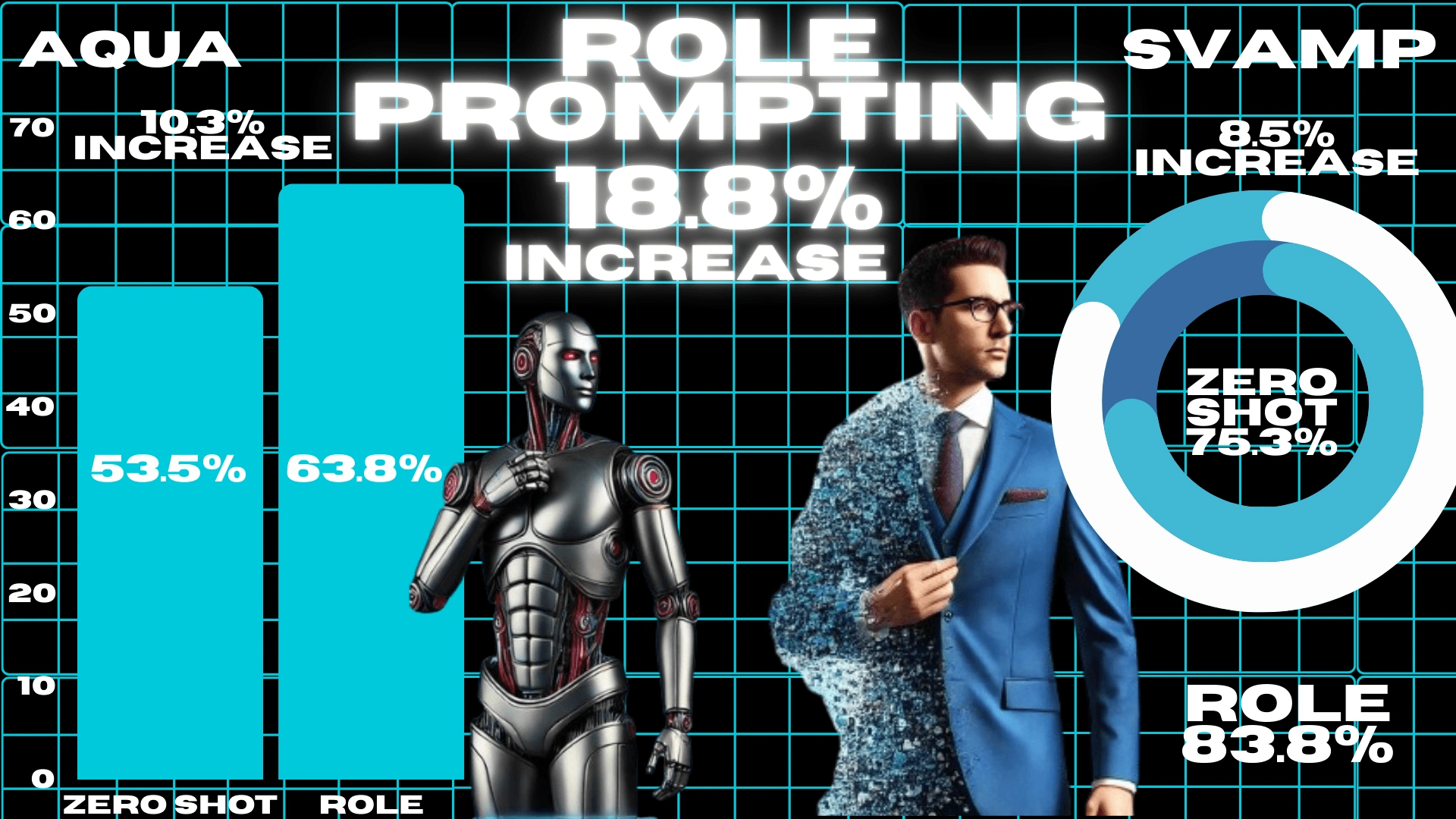

Role Prompting - 18.8% Increase

Role Prompting for Prompt Engineering increases accuracy by 18.8%

An easy way to boost your accuracy is role prompting, which can help you see an increase of up to 18.8%. In reviewing the findings of Better Zero-Shot Reasoning with Role-Play Prompting [2], published in March of 2024, it is important to examine two benchmarks as they pertain to DevOps environments:

Algebraic Question and Answering (AQuA)

Simple Variations on Arithmetic Math Word Problems (SVAMP)

AQuA

AQuA [3] is a benchmark and dataset developed by Google DeepMind that is used to evaluate mathematical reasoning in LLMs. The dataset consists of 100 000 algebraic word problems, each with 3 components:

A question statement

A correct answer

A step-by-step solution

AQuA uses 2 performance metrics:

Whether the model answers correctly

Whether the model generates a correct step-by-step solution
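The first of these metrics, answer correctness, reduces to a simple accuracy calculation. The sketch below assumes answers are compared as option letters after trimming whitespace and ignoring case; the `answer_accuracy` helper and the sample answers are hypothetical, and checking the step-by-step solution is not covered here.

```python
from typing import List


def answer_accuracy(predictions: List[str], gold: List[str]) -> float:
    """Fraction of predictions that match the gold answers."""
    if len(predictions) != len(gold):
        raise ValueError("predictions and gold must have the same length")
    if not gold:
        return 0.0
    correct = sum(
        p.strip().upper() == g.strip().upper()
        for p, g in zip(predictions, gold)
    )
    return correct / len(gold)


# Hypothetical answers used only for illustration.
gold = ["A", "C", "E", "B"]
predictions = ["A", "c", "D", "B"]
print(answer_accuracy(predictions, gold))  # 0.75
```

Running the same function over a zero-shot run and a role-prompted run gives two numbers that can be compared directly.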

While the impact of AQuA-style reasoning on CI/CD automation is an emerging area still to be explored and researched, we can draw one main connection between this benchmark and our example scenario: an AI that can reason step by step through 100 000 algebraic word problems is plausibly better equipped to understand an error message and work toward the problem behind it.

SVAMP

SVAMP [4] is a challenge set developed to improve upon the ASDiv-A and MAWPS datasets, whose results could be inflated because models relied on shallow heuristics.

SVAMP shows how well language models can handle slight variations in problem formulation, going deeper than pattern matching to assess their true understanding of mathematical concepts. SVAMP has 3 characteristics:

Contains 1000 word-math problems

Built as variations of 100 seed problems from ASDiv-A

Changed subtly from the original problems

SVAMP is a benchmark similar to AQuA that measures how LLMs handle slight variations and how well they understand mathematical concepts. Let's examine how these 2 benchmarks are affected by role prompting.

Results

Role prompting was tested against these benchmarks, with significant results:

AQuA shows an increase of 10.3% in accuracy

SVAMP shows an increase of 8.5% in accuracy

Combined increase of 18.8% in accuracy across both benchmarks [2]
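The 18.8% figure is the sum of the two per-benchmark gains listed above. A short sketch makes the arithmetic explicit; the per-benchmark average is included only for comparison and is not a figure quoted in this article.

```python
# Per-benchmark accuracy gains as quoted in this article.
gains = {"AQuA": 10.3, "SVAMP": 8.5}

# Combined gain: the simple sum of the two figures.
combined = round(sum(gains.values()), 1)

# Average gain per benchmark, shown for comparison only.
average = round(combined / len(gains), 1)

print(combined)  # 18.8
print(average)   # 9.4
```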

Assigning your LLM a specific, advantageous role tailored to these tasks enhances its accuracy and significantly boosts its performance in critical areas, which translates directly into a more reliable CI/CD pipeline.

With an 18.8% increase in accuracy, AI is better equipped to analyze error messages logically and think through root causes autonomously, reducing the need for manual intervention. This leads to faster, more efficient deployment cycles and a more streamlined, error-resistant DevOps pipeline.

How to Create a Role Prompt

Example:

You are a highly sought-after DevOps automation engineer with 20 years of experience adept at optimizing continuous integration and delivery pipelines.

When outlining your role:

Go beyond a basic job description

Add details to highlight the role's strengths

Assign the LLM a role that improves the accuracy and relevance of its responses

Match the task's complexity and required expertise

Focusing on the AI's experience and specialization boosts its ability to provide more effective solutions, resulting in a stronger CI/CD process. This approach encourages the LLM to handle tasks at the level of an engineer with 20 years of expertise, in line with industry best practices.
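The role prompt above can be wired into a request by placing it ahead of the task. The sketch below puts the role in a system message and the error log in a user message, using the role/content layout many chat-style LLM APIs accept. That placement, the `build_role_messages` helper, and the sample log line are assumptions made for illustration, and no specific provider's API is implied.

```python
from typing import Dict, List

# The role text is the example prompt from this article.
ROLE_PROMPT = (
    "You are a highly sought-after DevOps automation engineer with 20 years "
    "of experience adept at optimizing continuous integration and delivery "
    "pipelines."
)


def build_role_messages(error_log: str, role: str = ROLE_PROMPT) -> List[Dict[str, str]]:
    """Prepend the role as a system message, then send the task and log."""
    task = f"Analyze incoming error messages.\n\n{error_log}"
    return [
        {"role": "system", "content": role},
        {"role": "user", "content": task},
    ]


# Hypothetical log line used only for illustration.
messages = build_role_messages("ERROR: test_login failed with AssertionError")
for message in messages:
    print(f"[{message['role']}] {message['content']}")
```

Because the task text stays identical to the zero-shot instruction and only the role is added, any change in output quality can be traced back to the role itself.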

Impact of Prompt Engineering

Giving your AI a role in the DevOps pipeline creates more focused error handling and reduces manual intervention. This is just one use case, and AI's role in DevOps continues to evolve. Staying competitive means continuing to learn.

Wrap Up

Role prompting is an important first step to improving your accuracy, but there is still much more that you can do above and beyond the 18.8%. The next part of this series will explore the effectiveness of specific task prompting and how you can greatly improve the error-handling process.

Have you been using prompt engineering in your DevOps pipeline? How has role prompting changed your accuracy and effectiveness?

CI/CD Pipeline Automation and Prompt Engineering for AI

References

[1] "What Is Prompt Engineering?" McKinsey & Company, 22 Mar. 2024. [Online]. Available: www.mckinsey.com/featured-insights/mckinsey-explainers/what-is-prompt-engineering.

[2] A. Kong, S. Zhao, H. Chen, Q. Li, Y. Qin, R. Sun, X. Zhou, E. Wang, and X. Dong, "Better Zero-Shot Reasoning with Role-Play Prompting," 2024.

[3] Google DeepMind, "AQuA: Algebraic Question Answering Dataset," GitHub. [Online]. Available: https://github.com/google-deepmind/AQuA. [Accessed: 9/5/2024].

[4] A. Patel, "SVAMP: Simple Variations on Arithmetic Math Word Problems," GitHub. [Online]. Available: https://github.com/arkilpatel/SVAMP. [Accessed: 9/5/2024].

Posted Sep 13, 2024

Wrote article on the science behind Role Prompting in DevOps through using AI assisted automation of CI/CD pipelines.
