AI Customer Support Bot - MCP Server Development

Posted May 27, 2025

Developed an MCP server for AI-powered customer support using Cursor AI and Glama.ai.

AI Customer Support Bot - MCP Server

A Model Context Protocol (MCP) server that provides AI-powered customer support using Cursor AI and Glama.ai integration.

Features

Real-time context fetching from Glama.ai

AI-powered response generation with Cursor AI

Batch processing support

Priority queuing

Rate limiting

User interaction tracking

Health monitoring

MCP protocol compliance

Prerequisites

Python 3.8+

PostgreSQL database

Glama.ai API key

Cursor AI API key

Installation

Clone the repository:

Create and activate a virtual environment:

Install dependencies:

Create a .env file based on .env.example:

Configure your .env file with your credentials:

Set up the database:

Running the Server

Start the server:

The server will be available at http://localhost:8000

API Endpoints

1. Root Endpoint

Returns basic server information.

2. MCP Version

Returns supported MCP protocol versions.

3. Capabilities

Returns server capabilities and supported features.

4. Process Request

Process a single query with context.

Example request:
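As a rough illustration of a single-query call, the sketch below builds (but does not send) an HTTP request with the standard library. The endpoint path `/mcp/process`, the JSON field names `query` and `mcp_version`, and the helper name `build_process_request` are assumptions made for this example and are not confirmed by the project; only the X-MCP-Auth header and the base URL come from this README.

```python
import json
import urllib.request


def build_process_request(base_url, auth_token, query, mcp_version):
    """Build a POST request for a single query.

    Hypothetical: the path and the payload field names are placeholders,
    not the server's confirmed schema.
    """
    payload = {"query": query, "mcp_version": mcp_version}
    return urllib.request.Request(
        url=base_url.rstrip("/") + "/mcp/process",  # assumed path
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Content-Type": "application/json",
            "X-MCP-Auth": auth_token,  # header named in the Security section
        },
        method="POST",
    )


if __name__ == "__main__":
    req = build_process_request(
        "http://localhost:8000", "your-token", "How do I reset my password?", "1.0"
    )
    print(req.get_method(), req.full_url)
    # To actually send it: urllib.request.urlopen(req)
```

Check the server's capabilities and version endpoints for the real field names and supported versions before relying on this shape.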

5. Batch Processing

Process multiple queries in a single request.

Example request:
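One way a batch could be combined with priority queuing is to order the queries before handing them to the processor. The sketch below is an assumption about how that might work, not the project's implementation: the `priority` field, its default of 5, and the "lower number is more urgent" convention are all hypothetical.

```python
import heapq
import itertools


def order_batch(queries, default_priority=5):
    """Yield batch items from most to least urgent.

    Hypothetical convention: each item is a dict with an optional integer
    "priority", where a lower number means more urgent. Items with equal
    priority keep their arrival order.
    """
    counter = itertools.count()
    # The counter breaks ties, so the dicts themselves are never compared.
    heap = [(q.get("priority", default_priority), next(counter), q) for q in queries]
    heapq.heapify(heap)
    while heap:
        _, _, item = heapq.heappop(heap)
        yield item


if __name__ == "__main__":
    batch = [
        {"query": "Where is my invoice?", "priority": 3},
        {"query": "Site is down!", "priority": 1},
        {"query": "Feature idea"},
    ]
    for item in order_batch(batch):
        print(item["query"])
```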

6. Health Check

Check server health and service status.

Rate Limiting

The server implements rate limiting with the following defaults:

100 requests per 60 seconds

Rate limit information is included in the health check endpoint

Rate limit exceeded responses include reset time
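The defaults above can be illustrated with a small sliding-window limiter. This is a sketch under stated assumptions, not the server's actual code: the class name, the per-client keying, and the in-memory storage are hypothetical, and a real deployment might track limits in PostgreSQL or another shared store instead.

```python
import time
from collections import deque


class SlidingWindowRateLimiter:
    """Allow at most `limit` requests per `window` seconds for each client."""

    def __init__(self, limit=100, window=60.0, clock=time.monotonic):
        self.limit = limit
        self.window = window
        self.clock = clock  # injectable so the limiter can be tested without sleeping
        self._hits = {}  # client id -> deque of accepted request timestamps

    def check(self, client_id):
        """Return (allowed, seconds_until_reset)."""
        now = self.clock()
        hits = self._hits.setdefault(client_id, deque())
        # Drop timestamps that have fallen out of the window.
        while hits and now - hits[0] >= self.window:
            hits.popleft()
        if len(hits) < self.limit:
            hits.append(now)
            return True, 0.0
        # A slot frees up when the oldest accepted request leaves the window.
        return False, self.window - (now - hits[0])


if __name__ == "__main__":
    limiter = SlidingWindowRateLimiter()
    allowed, reset_in = limiter.check("client-1")
    print(allowed, reset_in)
```

The second value returned by `check` is the kind of reset time a rate-limit-exceeded response could report back to the caller.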

Error Handling

The server returns structured error responses in the following format:
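As an illustration only, a structured error could be assembled as in the sketch below. The envelope with `error`, `code`, `message`, and `details` keys is an assumption made for this example and is not the server's confirmed schema; the codes and messages themselves are the ones listed in this section.

```python
# Codes and messages as listed under "Common error codes" in this README.
ERROR_MESSAGES = {
    "RATE_LIMIT_EXCEEDED": "Rate limit exceeded",
    "UNSUPPORTED_MCP_VERSION": "Unsupported MCP version",
    "PROCESSING_ERROR": "Error processing request",
    "CONTEXT_FETCH_ERROR": "Error fetching context from Glama.ai",
    "BATCH_PROCESSING_ERROR": "Error processing batch request",
}


def build_error(code, details=None):
    """Return an error payload for a known code.

    Hypothetical shape: the key names are placeholders for illustration.
    """
    if code not in ERROR_MESSAGES:
        raise ValueError(f"unknown error code: {code}")
    return {
        "error": {
            "code": code,
            "message": ERROR_MESSAGES[code],
            "details": details or {},
        }
    }


if __name__ == "__main__":
    print(build_error("RATE_LIMIT_EXCEEDED", {"reset_in_seconds": 40}))
```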

Common error codes:

RATE_LIMIT_EXCEEDED: Rate limit exceeded

UNSUPPORTED_MCP_VERSION: Unsupported MCP version

PROCESSING_ERROR: Error processing request

CONTEXT_FETCH_ERROR: Error fetching context from Glama.ai

BATCH_PROCESSING_ERROR: Error processing batch request

Development

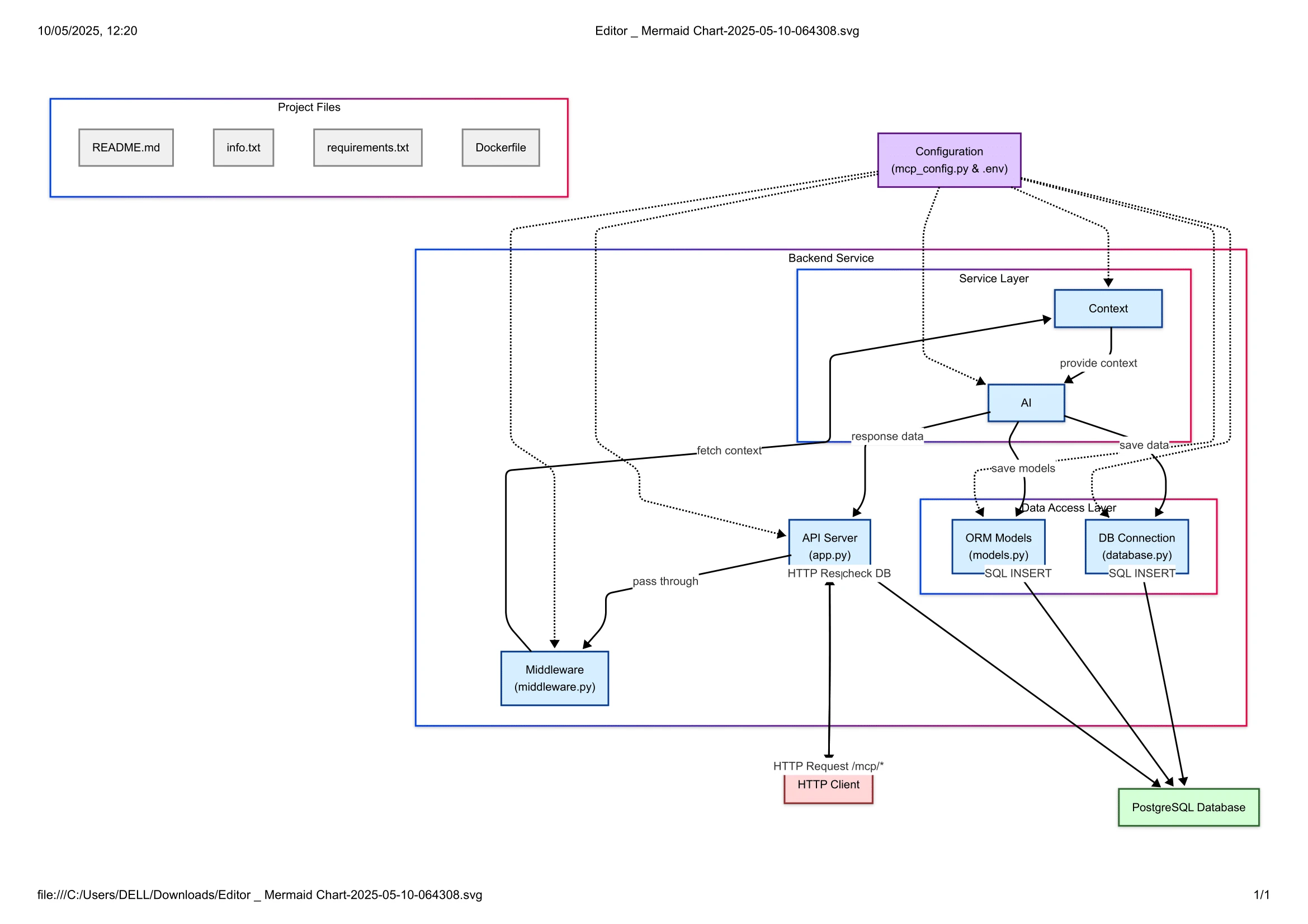

Project Structure

Adding New Features

Update mcp_config.py with new configuration options

Add new models in models.py if needed

Create new endpoints in app.py

Update the capabilities endpoint to reflect new features

Security

All MCP endpoints require authentication via the X-MCP-Auth header

Rate limiting is implemented to prevent abuse

Database credentials should be kept secure

API keys should never be committed to version control
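A minimal sketch of how the X-MCP-Auth check might look, assuming the header carries a shared secret token (the README does not say what the header contains). The function name `is_authorized` is hypothetical; `hmac.compare_digest` is used so the comparison takes constant time and does not leak how many leading characters matched.

```python
import hmac


def is_authorized(headers, expected_token):
    """Return True if the X-MCP-Auth header matches the expected token.

    Assumption: the header value is a plain shared secret. Header names
    are matched case-insensitively, as HTTP requires.
    """
    supplied = ""
    for name, value in headers.items():
        if name.lower() == "x-mcp-auth":
            supplied = value
            break
    if not supplied or not expected_token:
        return False  # reject missing headers and unconfigured servers
    return hmac.compare_digest(supplied.encode("utf-8"), expected_token.encode("utf-8"))


if __name__ == "__main__":
    import os

    # Read the secret from the environment rather than hard-coding it.
    token = os.environ.get("MCP_AUTH_TOKEN", "")  # hypothetical variable name
    print(is_authorized({"X-MCP-Auth": "example"}, token))
```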

Monitoring

The server provides health check endpoints for monitoring:

Service status

Rate limit usage

Connected services

Processing times
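The four items above could be combined into a single health report along these lines. This is a sketch only: the function name, the key names, and the "healthy"/"degraded" labels are assumptions, not the server's actual health check response.

```python
def build_health_report(services, requests_in_window, limit, window_seconds, processing_times_ms):
    """Summarize service status, rate limit usage, and processing times.

    Hypothetical shape. `services` maps a service name to True (reachable)
    or False; `processing_times_ms` is a list of recent request durations.
    """
    all_up = all(services.values())
    if processing_times_ms:
        average_ms = sum(processing_times_ms) / len(processing_times_ms)
    else:
        average_ms = 0.0
    return {
        "status": "healthy" if all_up else "degraded",
        "services": {name: ("up" if ok else "down") for name, ok in services.items()},
        "rate_limit": {
            "used": requests_in_window,
            "limit": limit,
            "window_seconds": window_seconds,
        },
        "avg_processing_ms": round(average_ms, 2),
    }


if __name__ == "__main__":
    report = build_health_report(
        {"database": True, "glama_ai": True, "cursor_ai": False},
        requests_in_window=12,
        limit=100,
        window_seconds=60,
        processing_times_ms=[120.0, 80.0],
    )
    print(report)
```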

Contributing

Fork the repository

Create a feature branch

Commit your changes

Push to the branch

Create a Pull Request

Flowchart

Verification Badge

License

This project is licensed under the MIT License - see the LICENSE file for details.

Support

For support, please create an issue in the repository or contact the development team.