Deploying Payment Processing Infrastructure to AWS: Corefy's Ex…

Hi all! I'm Dmytro Dziubenko, Co-founder & CTO of Corefy, a white-label SaaS platform that empowers clients to launch their own payment systems in a few clicks.

Our platform helps numerous payment providers and companies successfully cover all their payment acceptance needs.

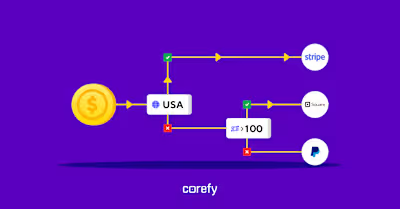

The key value of our platform for clients is that it eliminates the difficulties of payment provider integrations.

After a single integration with us, clients get access to hundreds of ready-made integrations with PSPs and acquirers worldwide, allowing them to connect any payment method easily.

In this article, I will share our experience deploying a payment processing infrastructure to Amazon Web Services (AWS).

The article is based on my 2021 speech at the “AWS Cloud for Financial Services” webinar, so I'll talk about our experience from that time.

2018: Migrating to AWS in a Month

In 2018, our product consisted of the following:

Payment gateway. Back then, we handled card processing with third-party providers' help, as most integrations implied redirecting to another payment provider where users entered their card details. Since our platform supports any payment method, we can integrate mobile wallets, invoicing, crypto processing, etc.

Payout gateway. Its main functionality (amount splitting, P2P payments) has mostly stayed the same since then.

Exchange rates service. Payment providers must work with multiple currencies, so we had an exchange rates service from the beginning. It still works almost unchanged.

These capabilities were enough for some of our clients, so we launched relying on these three pillars in 2018.

When launching, our main priority was to do it quickly and inexpensively while ensuring the system's scalability.

Why quickly? We gained our first client quite unexpectedly. We had been working on our platform for a long time, and finally we got lucky: our first client appeared and had to go live as soon as possible.

Within a month, we had to migrate to Amazon Web Services from our self-made clusters, which were deployed to OVH VPS using Kontena.

At that time, we had minimal experience with AWS, but hiring new team members allowed us to complete the project on time.

Ode to AWS Services

We built the infrastructure by hand in the AWS Management Console (the ClickOps method), without any automation. Since we only had one client at the time, there wasn't much traffic.

However, the load gradually increased as we got new clients.

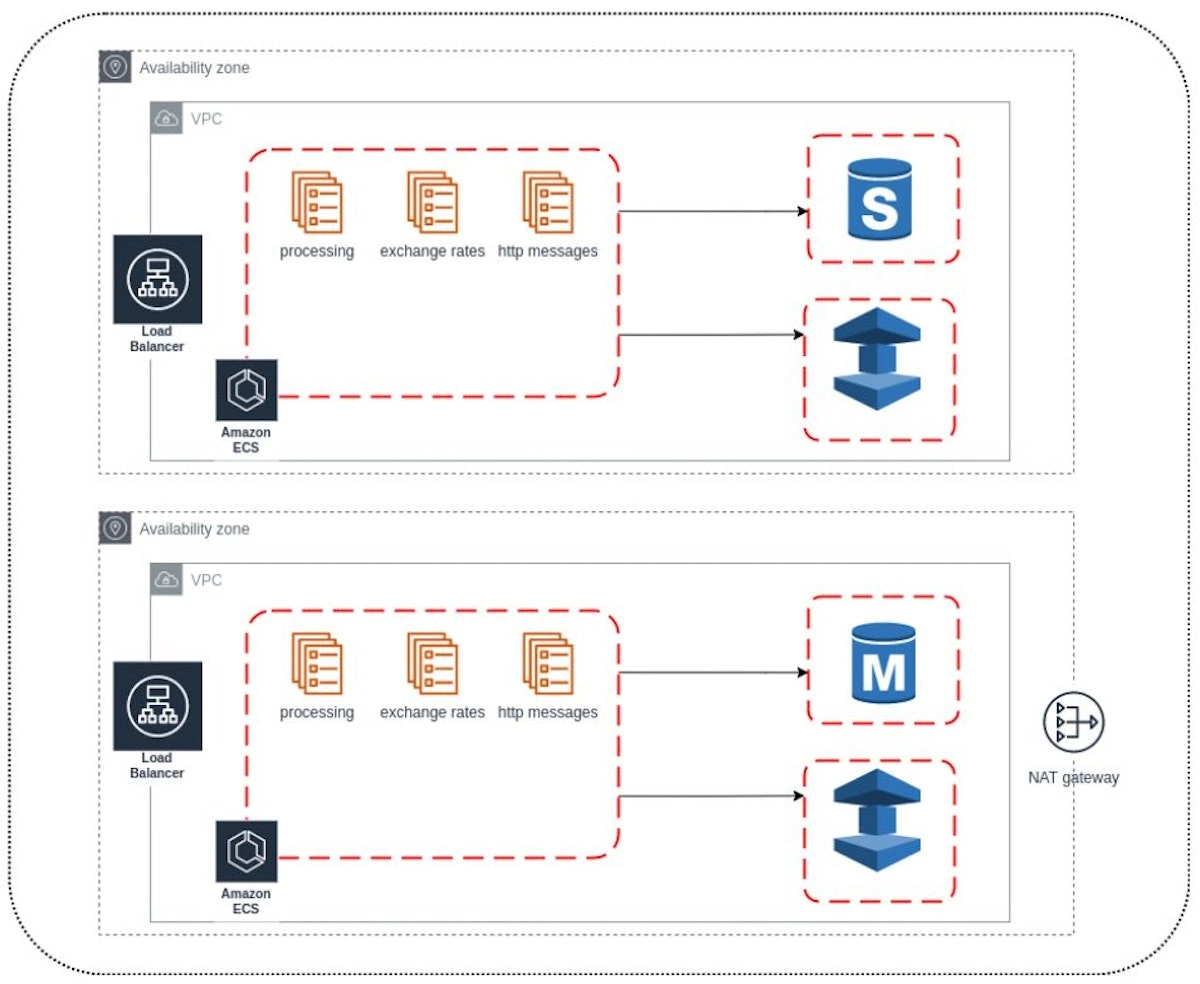

The critical services we used were ElastiCache, RDS, and ECS.

ElastiCache is an excellent service that allows you to work with Memcached and Redis. It supports working with single nodes and clusters and provides the ability to create snapshots.

You can deploy all of this to different availability zones. All the functionality of Redis is also available in the ElastiCache service.

RDS is my favorite service, making it possible to work with various DBMS (MySQL, PostgreSQL, Oracle) without worrying about physical problems. It can be deployed both on Provisioned IOPS storage and on regular (General Purpose) storage.

RDS eliminates the need to organize custom backups because snapshots will be sufficient for most needs. Plus, they don't affect the performance of your DB server.

Another possibility is restoring your database to any point in time within the backup retention period (point-in-time recovery).

ECS is a Docker container orchestration service from AWS. It has been fundamental for us for a long time, and almost all of our resources run in it.

At that time, EKS had just been rolled out on AWS and had a limited toolkit to support it, so we couldn't even get started.

AWS itself invests quite a lot of effort in the development of ECS. However, it's primarily aimed at integrations with internal services – FireLens (logs), App Mesh, Blue-green deployment (CodeDeploy), Container Insights (CloudWatch), SecretManagement (SSM), and Serverless (Fargate).

There are also comical moments. For example, they added the ability to delete a task definition just recently. It's worth noting that ECS, unlike EKS, doesn't require paying for control plane nodes.

As a result, by the end of 2018, we had the following infrastructure: two availability zones, several minor services, a database (primary/secondary), and Redis.

But due to limited time, ClickOps, and lack of experience, we rolled out a single NAT shared by two availability zones.

The main issues we encountered during that time were EBS and T2 instance credits. EBS limits the number of IOPS depending on the volume size, and when the instance is launched, this limit is very small.

If you start using the disk heavily with the new EBS, you will likely reach the limit soon, and all operations will become much slower.
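To make the limit concrete, here is a minimal sketch. It assumes the disks were gp2 volumes (the article only says "default disks") and uses the figures AWS documents for gp2: a baseline of 3 IOPS per GiB with a floor of 100 and a ceiling of 16,000, bursts of up to 3,000 IOPS, and a bucket of 5.4 million I/O credits. The function names are hypothetical.

```python
# Documented gp2 figures; assumed here to apply to the "default disks".
GP2_IOPS_PER_GIB = 3
GP2_MIN_BASELINE_IOPS = 100
GP2_MAX_BASELINE_IOPS = 16_000
GP2_BURST_IOPS = 3_000
GP2_CREDIT_BUCKET = 5_400_000


def gp2_baseline_iops(size_gib: int) -> int:
    """Baseline IOPS a gp2 volume of the given size can sustain indefinitely."""
    scaled = GP2_IOPS_PER_GIB * size_gib
    return min(max(scaled, GP2_MIN_BASELINE_IOPS), GP2_MAX_BASELINE_IOPS)


def gp2_burst_seconds(size_gib: int, credits: int = GP2_CREDIT_BUCKET) -> float:
    """How long the volume can hold the burst rate before falling to baseline."""
    baseline = gp2_baseline_iops(size_gib)
    if baseline >= GP2_BURST_IOPS:
        return float("inf")  # large volumes never depend on burst credits
    # Credits drain at the difference between burst and baseline rates.
    return credits / (GP2_BURST_IOPS - baseline)


if __name__ == "__main__":
    for size in (20, 100, 500):
        print(size, gp2_baseline_iops(size), round(gp2_burst_seconds(size)))
```

Under these assumptions, a 100 GiB volume sustains only 300 IOPS once the burst credits run out, which is why a cache warm-up on a small fresh disk can slow everything down.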

We use the Symfony framework, which warms up its kernel cache before starting. It added complexity – we even tried to switch to tmpfs.

2019: Passing PCI DSS Certification

By the beginning of 2019, we did not yet have our own card processing, but we had designed it and were ready to begin development. We faced a choice: to stay on Amazon, where we had a little more than six months of experience, or to return to OVH.

Since no one on the team had practical experience with the Payment Card Industry Data Security Standard (PCI DSS) certification, choosing an infrastructure provider was crucial for us.

We did an extensive SWOT analysis to make a final decision.

OVH offers various packages to facilitate PCI DSS compliance. They provide a cluster on dedicated servers and take on some of the certification responsibility.

But, as we expected, managing it would have been challenging, as it also required some experience.

Since the whole world is moving to the cloud, moving to a dedicated server didn't feel right, so we decided to stay on AWS. We considered how to get certified and how to scale.

Besides, we wanted to work with popular technology, not something underground like OpenStack 🙂. Moreover, we had to be able to find specialists for support.

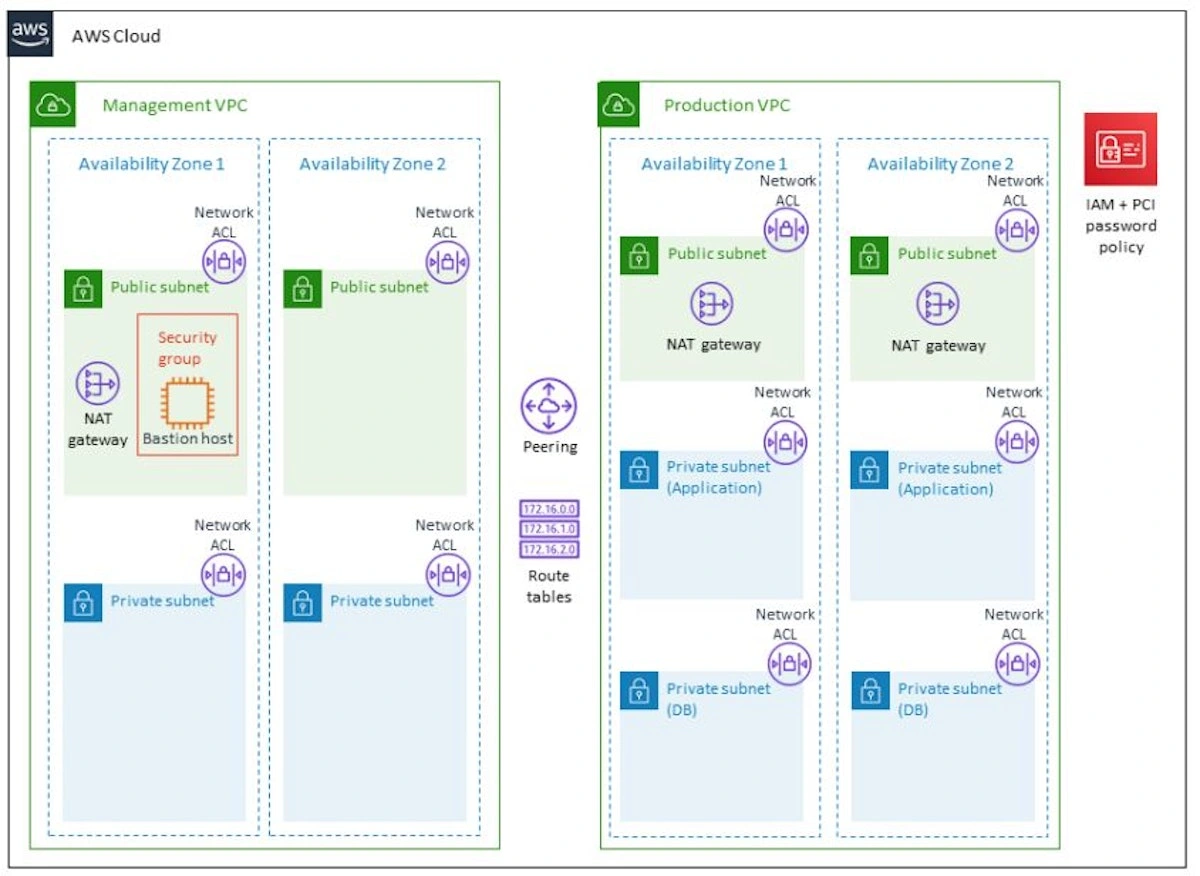

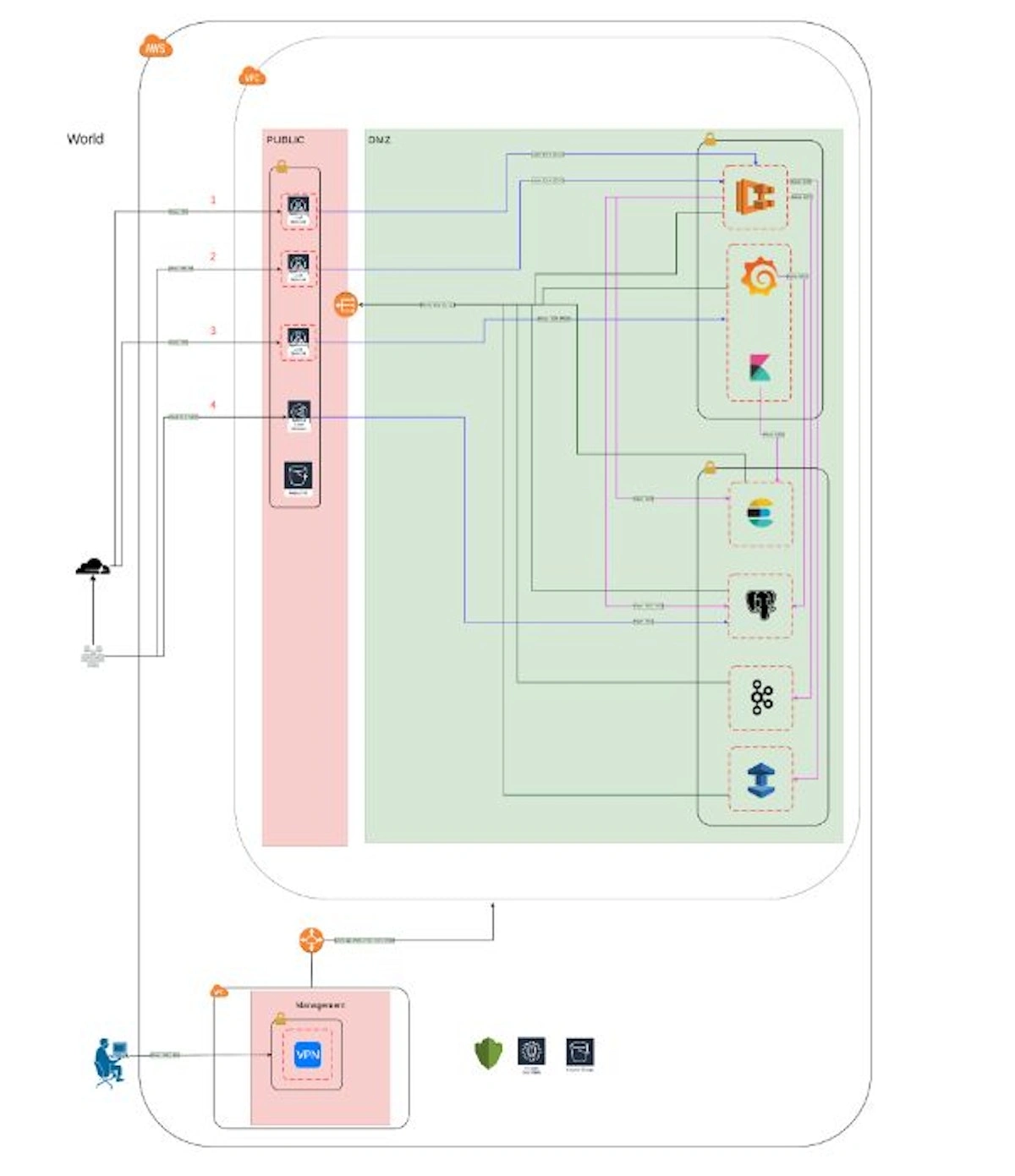

AWS wouldn't be AWS if it didn't have a recipe for deploying a PCI DSS-compliant infrastructure. For this, they made an excellent CloudFormation template.

It is actively supported, with new modules and improvements being added over time. With one person's efforts, we set up the staging environment in a week, which then allowed us to set up production in one day.

We decided to create a separate account for passing PCI DSS certification. As a result, we got two infrastructures. I believe it was the right decision at the time, although it caused us a lot of extra work and pain later.

There were few resources, all described in code, all PCI DSS requirements were met, and only two operators had access to this infrastructure. The infrastructure operated in three availability zones, with its own KMS keys.

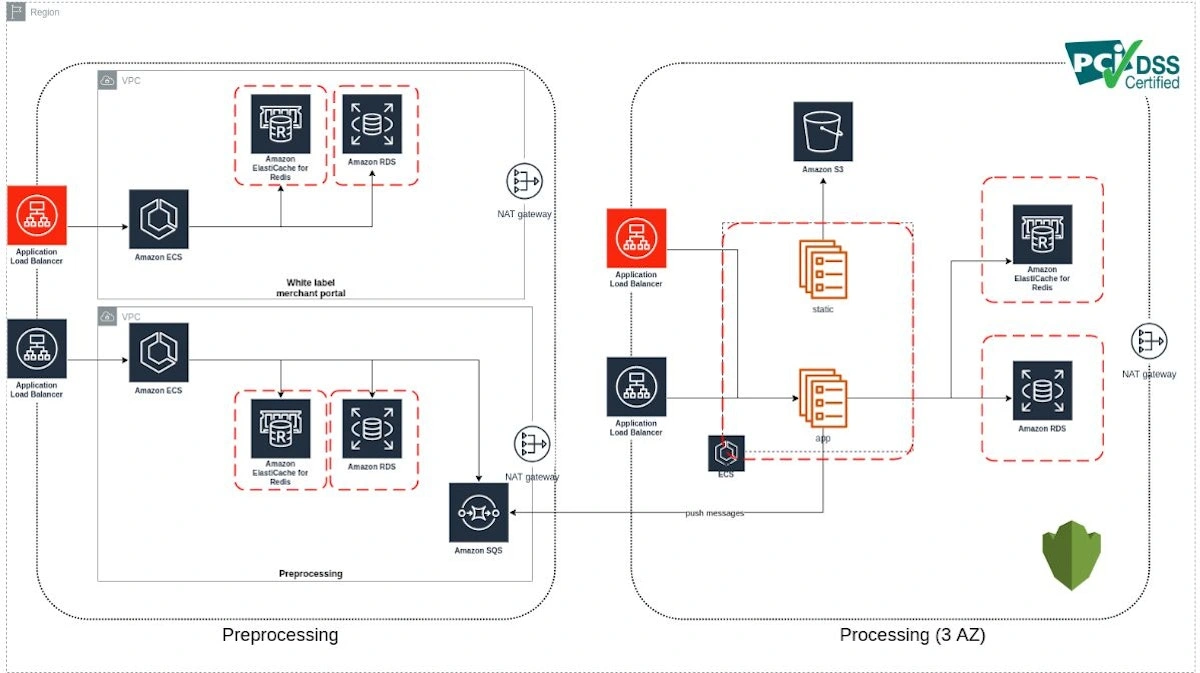

Preprocessing communications were implemented via HTTP API and SQS to avoid complicating our infrastructure with internal peering.

It allowed us to state that card transaction processing is a separate system with its own API, and the only client of that API is our product, Corefy.

During 2019, we gained a lot of new experience. First of all, we passed PCI DSS certification.

We also learned how to work with EBS and realized that capacity planning is mandatory because our partners started sending traffic as soon as we launched the card infrastructure.

The volumes of this traffic were sometimes unexpected. One day we would process 3,000 operations, the next day 30,000, and the day after that 300,000.

Sometimes we learned about such changes through alerts or visual traffic monitoring.

Mistakes to Avoid

There's a pitfall you can avoid as you start working with autoscaling. During 2019, we "killed" our cluster several times with autoscaling because health checks for our backends were set incorrectly.

If a service instance doesn't have enough resources to report that it is ready, and the health check polls it frequently, the load balancer may conclude that the instance cannot accept any traffic and remove it from the traffic distribution.

While a replacement instance is starting and warming up, the traffic to the other instances increases, and the effect can repeat there. Thus, like an avalanche, all cluster services become unavailable.
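The avalanche can be illustrated with a toy model. This is only a sketch under simplifying assumptions that are not from our setup: traffic is spread evenly, an overloaded instance always fails its health check, one instance is removed per round, and no replacement becomes ready in time. The function name and numbers are hypothetical.

```python
from typing import List


def simulate_cascade(instances: int, capacity_rps: float, total_rps: float,
                     failed_first: int = 1) -> List[int]:
    """Return the number of healthy instances after each removal round."""
    healthy = instances - failed_first
    history = [healthy]
    while healthy > 0:
        per_instance = total_rps / healthy
        if per_instance <= capacity_rps:
            break  # the remaining instances can absorb the load
        # Overloaded instances miss health checks, so one more is removed.
        healthy -= 1
        history.append(healthy)
    return history


if __name__ == "__main__":
    # Enough headroom: losing one instance out of four is survivable.
    print(simulate_cascade(4, capacity_rps=100, total_rps=280))
    # Too little headroom: the same single failure takes the cluster down.
    print(simulate_cascade(4, capacity_rps=100, total_rps=330))
```

The point of the model is that the outcome depends on spare capacity per instance, so health-check thresholds and timeouts need to be set with the load after a failure in mind, not the load before it.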

An interesting story happened with the database. At the time, it lived on instances of the T family with default disks.

When you see that EBS credits have already dropped below 100 and you don't expect a decrease in traffic, upgrading the T-type instance is a poor decision.

As soon as you do this, you will have no stock of EBS credits, because they start from zero. The database will "devour" the burst rate at the start and won't be able to work stably. Simply put, it will overload.

It was our first serious database incident, and it taught us to ensure enough capacity.

We have come to understand that, in our case, instances of the T family are suitable only for the PoC infrastructure.

2020: Migrating From RDS to Dedicated Servers

Application performance started to degrade due to increased traffic and DB data volumes.

Sometimes we got unexpected behavior from the app when there was a lot of traffic or when, for example, some payment provider was unavailable.

We learned to handle significant traffic changes (x3-x5) and store and process dozens of gigabytes of data daily. Operating at such loads, we faced new types of problems.

For example, some processes had no reconnects to Redis, or incorrect reconnection occurred when working with the database. Sometimes this created parasitic traffic, and we "clogged" the Postgres connection pool.
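One common way to keep reconnects from turning into parasitic traffic is exponential backoff with jitter. The sketch below is a generic illustration, not the code we run: `connect_with_backoff`, its parameters, and the default delays are hypothetical, and `connect` stands for whatever function opens a Redis or PostgreSQL connection in your client library.

```python
import random
import time
from typing import Callable, TypeVar

T = TypeVar("T")


def connect_with_backoff(connect: Callable[[], T],
                         max_attempts: int = 5,
                         base_delay: float = 0.1,
                         max_delay: float = 5.0,
                         sleep: Callable[[float], None] = time.sleep) -> T:
    """Call connect() until it succeeds, waiting longer after each failure."""
    for attempt in range(max_attempts):
        try:
            return connect()
        except ConnectionError:
            if attempt == max_attempts - 1:
                raise  # give up and let the caller decide what to do
            # Full jitter: a random wait up to the capped exponential delay,
            # so many workers do not reconnect at the same instant.
            cap = min(max_delay, base_delay * 2 ** attempt)
            sleep(random.uniform(0, cap))
    raise ValueError("max_attempts must be at least 1")


if __name__ == "__main__":
    attempts = {"n": 0}

    def flaky_connect() -> str:
        attempts["n"] += 1
        if attempts["n"] < 3:
            raise ConnectionError("server not ready")
        return "connected"

    print(connect_with_backoff(flaky_connect))
```

Capping the number of attempts matters as much as the delay: a worker that retries forever keeps holding or requesting connections and can still exhaust the pool.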

We decided to migrate from RDS to dedicated servers because of infrastructure expenses, the frequency of minor incidents, and our poor understanding of how RDS instance capacity related to our processing performance.

Over several months, we mastered new tools and planned the migration.

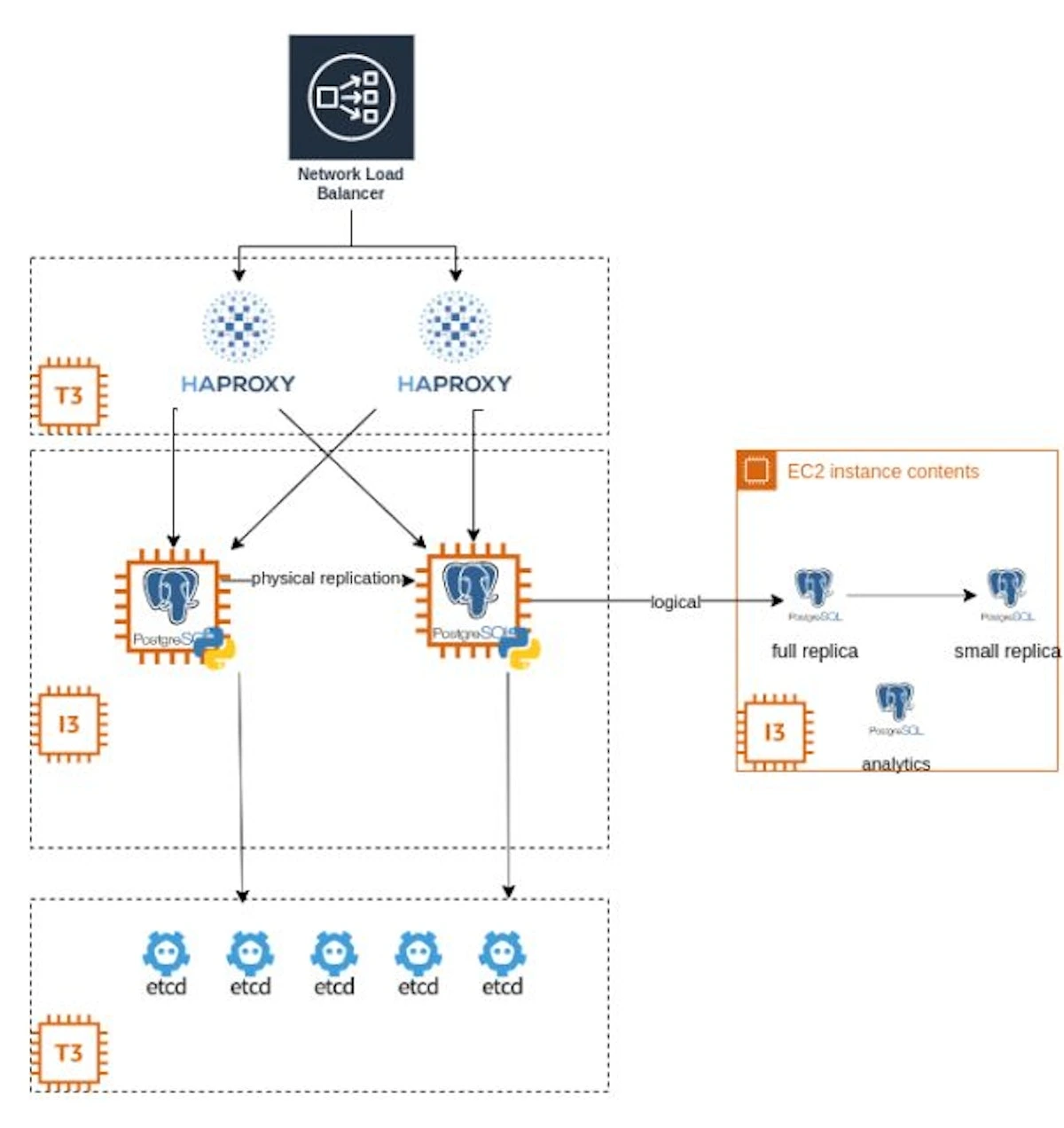

The choice fell on Patroni, with which we built an almost classic scheme: we had HAProxy, and traffic went through NLB to instances with PostgreSQL and Patroni.

We had five etcd instances in three availability zones, so if one of them went down, we had a quorum. Running on three servers, this solution allowed us to process more data for less money.

Isn't that happiness for business? To begin with, we took i3en instances and then upgraded them to i3en.2xlarge.
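The reasoning behind five etcd instances in three availability zones comes down to majority arithmetic. Here is a minimal sketch; the 2/2/1 placement across zones is an assumption for illustration (the exact layout isn't stated above), and the function names are hypothetical.

```python
from typing import Dict


def quorum(members: int) -> int:
    """Smallest majority of a cluster with the given number of members."""
    return members // 2 + 1


def survives_zone_loss(members_per_zone: Dict[str, int]) -> bool:
    """True if losing the most populated zone still leaves a majority."""
    total = sum(members_per_zone.values())
    worst_loss = max(members_per_zone.values())
    return total - worst_loss >= quorum(total)


if __name__ == "__main__":
    print(quorum(5))                                         # majority of five
    print(survives_zone_loss({"az-a": 2, "az-b": 2, "az-c": 1}))
    print(survives_zone_loss({"az-a": 3, "az-b": 1, "az-c": 1}))
```

With five members the majority is three, so a 2/2/1 spread tolerates the loss of any single zone, while putting three members in one zone does not.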

Problems We Faced

1. First, the etcd cluster collapsed, and because of this, the Patroni cluster collapsed. Quorum didn't help. The master switched over on its own, and the cause wasn't immediately apparent.

Eventually, we discovered that the issue's root was related to time synchronization. We resolved the problem by implementing chrony.

2. Incorrect configuration. The parameters didn't match the capabilities of the machines running the services, mainly because the configuration wasn't reviewed during migrations.

3. NLB reset connections on port 5432 whenever we updated the target for port 5433. In our architecture, one balancer served separate writer and reader ports.

We reported this to support and were asked to send our entire setup so they could reproduce the issue. It required a hell of an effort, so we decided to let it go.

Keep in mind that communication with support can be less efficient than you'd expect.

4. Transaction ID (XID) wraparound. This is a problem I wouldn't wish on anyone. On November 2, 2020, at 08:58 AM, our server started rejecting all write attempts. It was the beginning of our biggest DB incident of all time.

We had physical copies in backups and urgently had to restore the DB from a backup, but it didn't work because of the underlying problem with the XID counters.

We decided to truncate the top tables, make a dump of the minimum amount of data, roll out processing from it, and then add data in the runtime.
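Wraparound of this kind can be watched for in advance. PostgreSQL transaction IDs are 32-bit, so a database has roughly 2^31 (about 2.1 billion) transactions of headroom before old rows must be frozen, and `age(datfrozenxid)` reports how much of that has been used. The sketch below is not our monitoring code: the helper names and the 50% warning threshold are hypothetical, and the rows are assumed to come from running the query through any PostgreSQL client.

```python
from typing import Iterable, List, Tuple

# Standard catalog query; run it with your PostgreSQL client of choice.
XID_AGE_QUERY = (
    "SELECT datname, age(datfrozenxid) AS xid_age "
    "FROM pg_database ORDER BY xid_age DESC"
)

XID_WRAPAROUND_HORIZON = 2 ** 31  # about 2.1 billion transactions


def xid_headroom(xid_age: int) -> int:
    """Transactions left before the database reaches the wraparound horizon."""
    return max(XID_WRAPAROUND_HORIZON - xid_age, 0)


def databases_at_risk(rows: Iterable[Tuple[str, int]],
                      warn_fraction: float = 0.5) -> List[str]:
    """Names of databases that have used more than warn_fraction of the horizon."""
    threshold = XID_WRAPAROUND_HORIZON * warn_fraction
    return [name for name, xid_age in rows if xid_age >= threshold]


if __name__ == "__main__":
    sample_rows = [("processing", 1_500_000_000), ("postgres", 10_000_000)]
    print(databases_at_risk(sample_rows))
    print(xid_headroom(1_500_000_000))
```

An alert on this value gives time to run vacuum with freezing during a quiet period instead of discovering the limit when writes are already being rejected.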

It is not an exhaustive list of the issues we faced, but most occurred due to inadequate resource segregation. The first line of division was by workload type: for example, we had background processes and the web running on one ECS cluster.

The second line of division was by domain: communication with different payment providers could take place in different queues and clusters.

By the end of the year, we had over 10 ECS clusters and 100 queues. We also had 4 PostgreSQL clusters, preprocessing, card processing, and a merchant portal (a separate application with its own database cluster that we provide to clients – payment systems – for reselling our channels).

There were also four public load balancers for just one product. We also completed the merging of two AWS accounts into one.

More than 10 million transactions passed through the system per month, so we had 2.5 TB in two DBs.

We already understood the load profile and could plan it for long periods. We took one-year Savings Plans on EC2 i3en instances for our databases and saved about 35%.

Tuning cluster scaling and filling clusters with Spot instances also allowed us to save significantly and purchase Savings Plans for critical clusters on C5.

2021: Migrating to AWS Aurora

The number of incidents, on-calls, and instability in the service operation led us to move our card processing to AWS Aurora. We decided to try a smaller project and then migrate others if everything went smoothly.

A gradual approach was necessary to estimate the cost of operation because it was difficult to predict the number of read and write IOPS.

We also actively started describing all our infrastructure in Terraform. It's not the most pleasant thing to do, as you have to make many imports and work with production that isn't fully described in the code.

Retrospective

As a result of the experience gained, I want to draw certain conclusions retrospectively, through the prism of the value we could have received:

Describe the entire infrastructure in code from day one. It will help create documentation, let others join your project with minimal involvement from you, conduct audits, and deploy similar infrastructures. We didn't do it, so now we're making up for it.

Prioritize and review goals for the period. For example, it's one thing if you want to save money, but quite another if downtime is costly. Our path from AWS RDS to Aurora went through our own self-managed dedicated instances. In 2020, we received a lot of negative feedback and delivered a bad user experience precisely because of the database. Of course, we've grown as a team in terms of architecture and infrastructure. But if we compare the financial and reputation losses with the amount we could have spent on Aurora, it would have been more rational to choose Aurora. There's a mental trap: when you calculate the cost of the service, sometimes it seems easier to build it yourself. But you must also consider the cost of system maintenance, people's work, monitoring tools, backups, and everything else that Amazon provides "out of the box".

Do cost planning to avoid unpleasant surprises. Growth planning is closely related to cost planning. In particular, you can opt for Savings Plans, which let you grow more organically and more cheaply. Take advantage of this opportunity.

Design your applications so that they work on spot clusters. An instance can "die" at any moment, and your application must be able to handle it.

Avoid having all resources in one account. We didn't plan how our accounts would develop, so for some time, we had development, production, and other company resources unrelated to processing in one account. That's why, when it came time to prepare our infrastructure for PCI DSS certification, we did it in a separate account. We just couldn't do it in our main account filled with various unrelated resources. Now we have come to the point where it is essential to separate accounts. We are moving them under another root account to enable SSO, dividing workloads by type (development, production), and allocating separate accounts for resources, logs, and everything unrelated to processing.

A Little Thanks to AWS

By deciding to work with AWS in 2018, we switched focus from solving infrastructure problems to product development. It's worth saying that AWS support comes with a fee.

With the growth of your infrastructure costs, the price you'll have to pay for support will increase respectively, even if you don't use it.

Speaking of the positive aspects, I'd like to highlight the efforts of the account managers and architects automatically assigned to projects with a budget of $5k or more.

We worked closely with them to optimize our usage of AWS services and lower the overall cost.

There are tons of programs out there that aim to get you hooked and increase your AWS bill. However, they offer credits for mastering certain services, allowing you to save money.

For example, if you develop a Machine Learning product, it's an excellent opportunity to get several hundred or even thousands of dollars for your PoC.

Plans

We strategically decided to switch to K8s, build a data warehouse, and reorganize our AWS accounts. I will tell you about all this in detail in the following articles.

Posted Feb 13, 2024

Ghostwriting piece for DevOps based on the CTO's conference speech recording in Ukrainian. We collaborated to update the content and ensure technical accuracy.