CME Group Trading Hours Scraper

CME Group Trading Hours Scraper

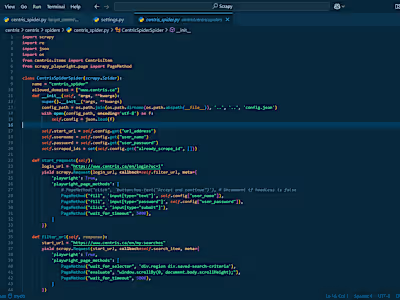

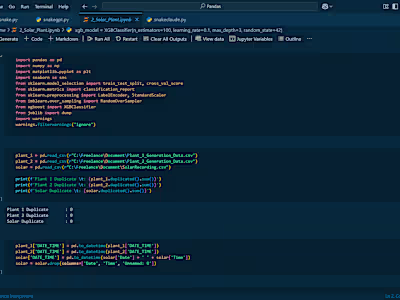

This Python script

CME.py automates the process of scraping trading hours data from the CME Group Trading Hours page using the playwright library. It extracts product names and their associated trading hours, then saves the data into a timestamped CSV file for easy analysis or record-keeping.Overview

Purpose: Scrape trading hours data for various financial products listed on the CME Group website.

Tools:

playwright.async_api: Automates a Chromium browser to interact with dynamic web content.csv: Writes the scraped data to a CSV file.datetime: Generates timestamps for unique CSV filenames.asyncio: Manages asynchronous operations for efficient web scraping.Output: A CSV file (e.g., trading_hours_20231024_153022.csv) containing columns: Product Name, Trade Group, and Trade Group Text.

How It Works

1. Setup and Browser Initialization

The script launches a Chromium browser in non-headless mode (visible for debugging) using

playwright.It navigates to the target URL:

https://www.cmegroup.com/trading-hours.html.2. CSV File Creation

A timestamp is generated using

datetime to ensure each CSV file has a unique name (e.g., trading_hours_20231024_153022.csv).The CSV file is opened with headers:

Product Name, Trade Group, and Trade Group Text.3. Interacting with Filters

The script clicks a dropdown menu on the webpage to reveal filter options (e.g., categories of trading products).

It iterates through each filter, clicking one at a time to load the corresponding trading hours data.

4. Data Extraction

The

get_data function extracts data from a table displayed after each filter is applied.For each row in the table:

Product Name: Extracted from the

product-code column.Trade Groups: Extracted from multiple

events-data columns, which contain trading hours details.Each trade group’s text is written to the CSV file alongside the product name and a trade group identifier (e.g., "Trade Group 1").

5. Asynchronous Execution

The script uses asyncio to handle asynchronous browser operations, such as waiting for page elements to load or actions to complete.

The main function start_url is executed with asyncio.run().

Key Features

Dynamic Web Scraping: Handles dynamically loaded content by automating browser interactions.

Structured Output: Saves data in a CSV format, making it easy to analyze or share.

Console Feedback: Prints the number of filters, rows, and extracted data for monitoring progress.

Like this project

Posted Apr 13, 2025

Automated scraping of CME Group trading hours using Python and Playwright.

Likes

0

Views

3