
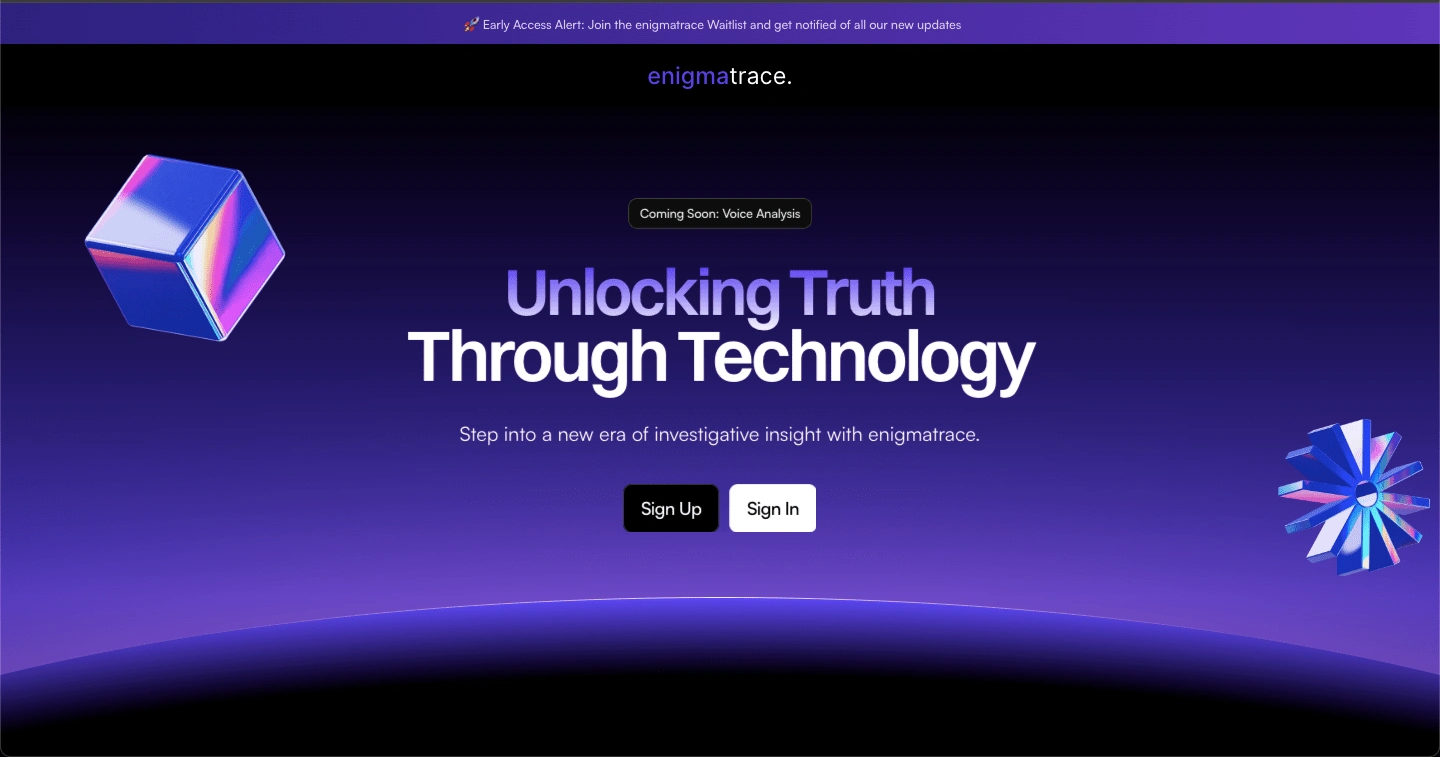

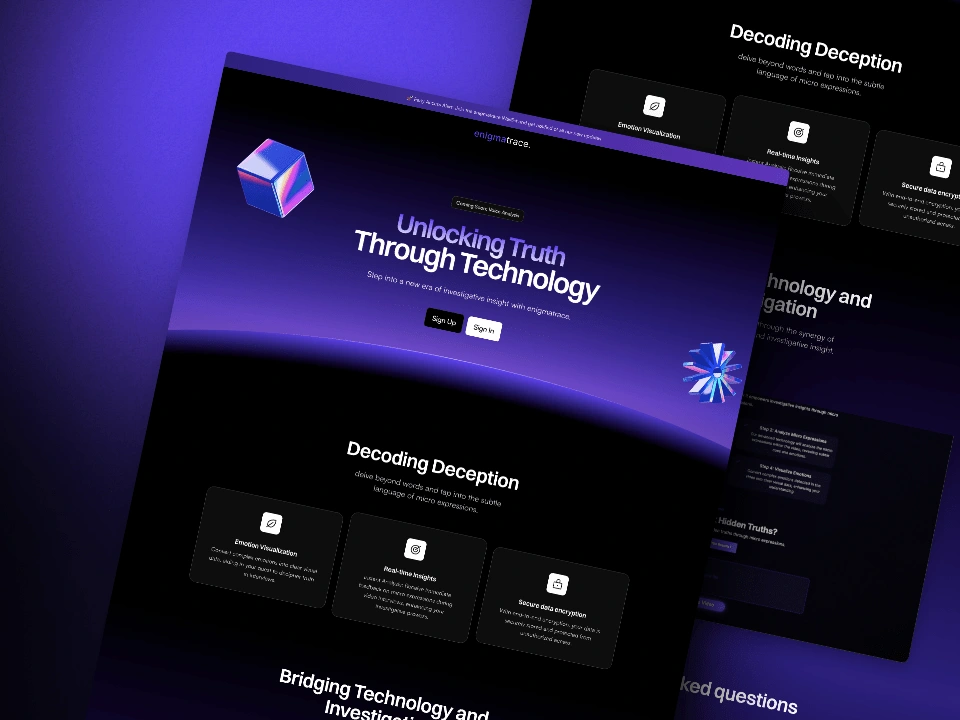

enigmatrace - AI Deception Detector

enigmatrace - Hero section

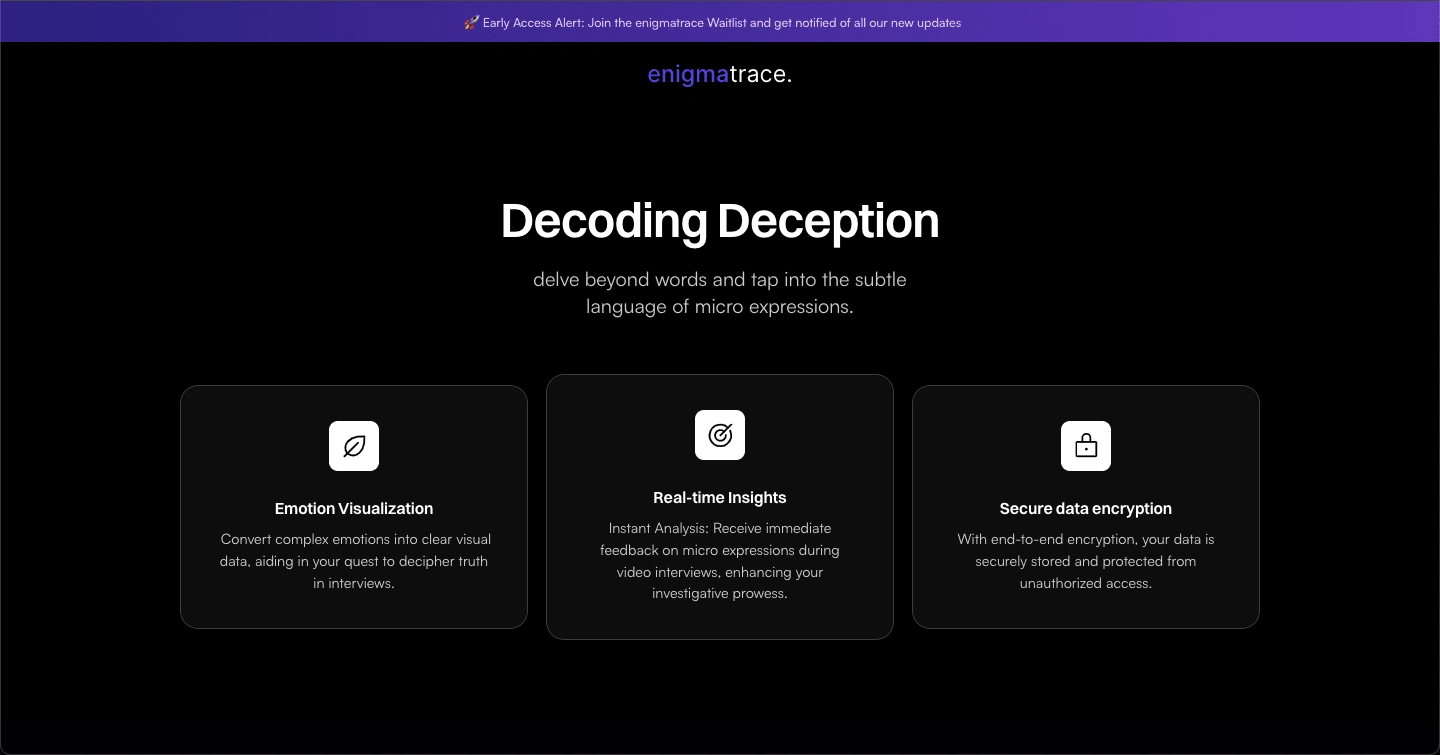

Features

Promo

The story ✨:

As part of buildspace's nights & weekends S4, I got the idea to create enigmatrace...

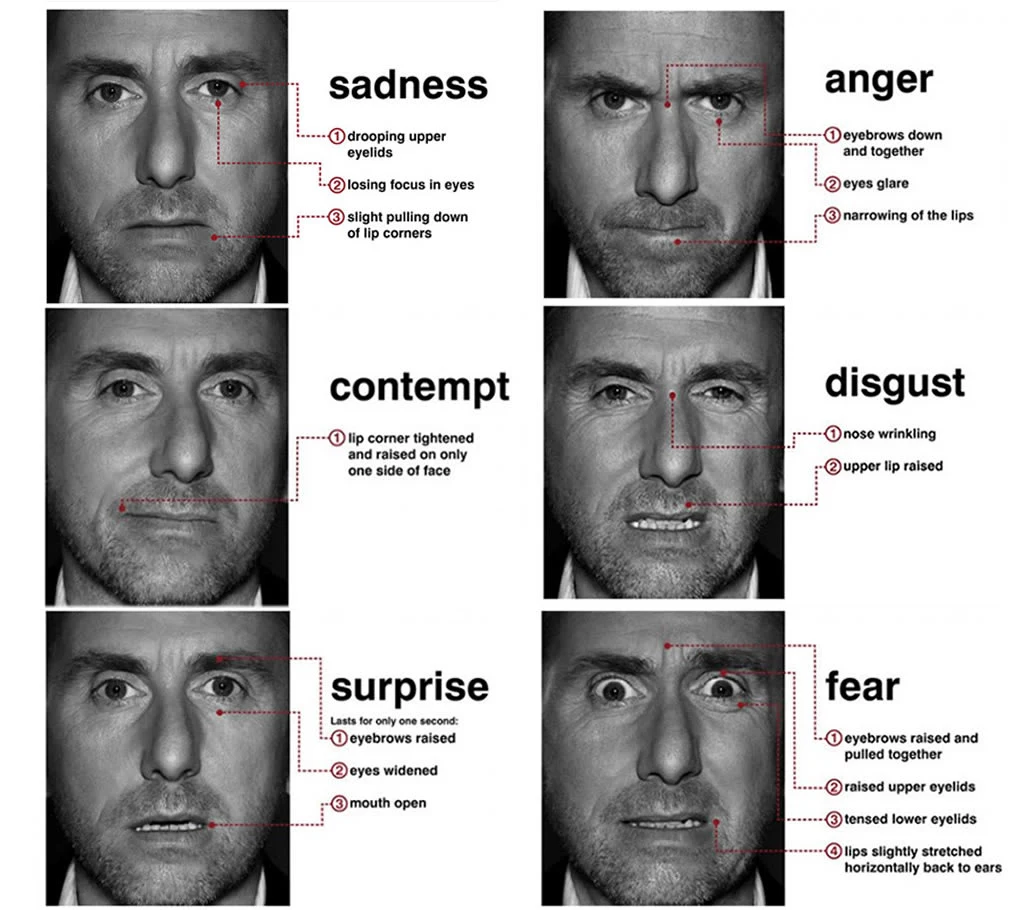

The idea came to me while I was reading a book by Paul Ekman on micro-expressions.

Universal Micro Expressions

I instantly realized that a computer could do this far more efficiently. At that point I had the idea; it was just a matter of seeing whether I could build it.

Validating the concept 🧪:

Before going any further, I had to see for myself whether this could work and, if so, how accurate it would be.

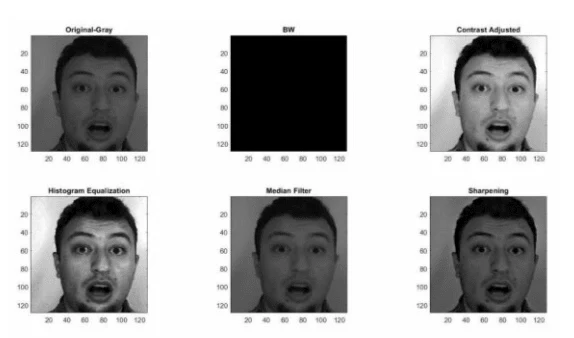

So I started with the backend. The initial goal was to map the micro-expressions to the frames of the video. This was, of course, much easier in theory than in practice, but it worked nonetheless.

Figure 2 Effect of basic pre-processing in the spatial domain (color data)
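To make the frame-mapping step concrete, here is a minimal sketch of how per-frame expression labels could be turned into timed segments. It is not the actual enigmatrace backend: the `Segment` type, the function names, the label strings, and the 0.5-second cutoff for what counts as a micro-expression are all assumptions made for illustration, and the per-frame labels are assumed to come from some separate classifier.

```python
from dataclasses import dataclass
from itertools import groupby


@dataclass
class Segment:
    """A run of consecutive frames that share one expression label (hypothetical type)."""
    label: str
    start_s: float
    end_s: float

    @property
    def duration_s(self) -> float:
        return self.end_s - self.start_s


def frames_to_segments(frame_labels, fps):
    """Collapse per-frame labels into timed segments using the video's frame rate."""
    segments = []
    index = 0
    for label, run in groupby(frame_labels):
        length = len(list(run))
        segments.append(Segment(label, index / fps, (index + length) / fps))
        index += length
    return segments


def micro_expressions(segments, max_duration_s=0.5, neutral="neutral"):
    """Keep only short, non-neutral segments (the cutoff is an assumed threshold)."""
    return [
        s for s in segments
        if s.label != neutral and s.duration_s <= max_duration_s
    ]


# Made-up labels for a 25 fps clip: a brief flash of fear, then a long smile.
labels = ["neutral"] * 10 + ["fear"] * 5 + ["neutral"] * 10 + ["happy"] * 30
segments = frames_to_segments(labels, fps=25)
flashes = micro_expressions(segments)
```

Grouping frames into segments first keeps the timestamps available, which matters later when a contradiction has to be pinned to a specific moment in the video.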

The Key To The Operation 🗝:

At this point, my program could recognize the micro-expressions shown by the person in the video, but that in itself was not enough.

The goal is to create a deception detector. To do that, I need to compare what the individual is saying at a given moment with the micro-expressions they show at that same moment. The higher the percentage of contradiction between the two, the more likely it is that they're not telling the truth.

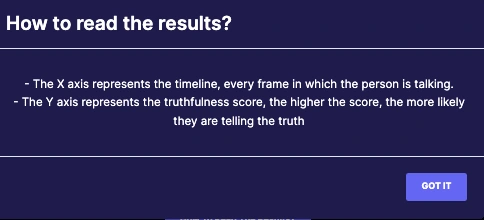

To make this easier, I created what I called the "Truthfulness score":

Guide to reading the results
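The post does not give the exact formula behind the score, so the following is only a sketch of one way a contradiction percentage could be turned into a single number. Everything here is an assumption: the `Window` type, the idea of labelling each time window with one emotion from the speech and one from the face, and the simple "100 minus percent of contradictions" rule.

```python
from dataclasses import dataclass


@dataclass
class Window:
    """One time window of the video (hypothetical structure)."""
    start_s: float
    spoken_emotion: str   # emotion implied by what is being said (assumed input)
    facial_emotion: str   # emotion read from the micro-expression (assumed input)


def truthfulness_score(windows):
    """Return (score in 0..100, start times of contradicting windows).

    A window counts as a contradiction when speech and face disagree.
    The score is the share of windows without a contradiction, in percent.
    """
    if not windows:
        raise ValueError("need at least one window")
    contradictions = [w for w in windows if w.spoken_emotion != w.facial_emotion]
    score = 100.0 * (1 - len(contradictions) / len(windows))
    return score, [w.start_s for w in contradictions]


# Made-up example: one of four windows shows a mismatch.
windows = [
    Window(0.0, "happy", "happy"),
    Window(2.0, "happy", "happy"),
    Window(4.0, "happy", "fear"),
    Window(6.0, "neutral", "neutral"),
]
score, suspicious_times = truthfulness_score(windows)
```

Returning the timestamps alongside the score means a result can point at the moment a possible lie occurred rather than only rating the video as a whole.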

Getting Off Localhost 🌍:

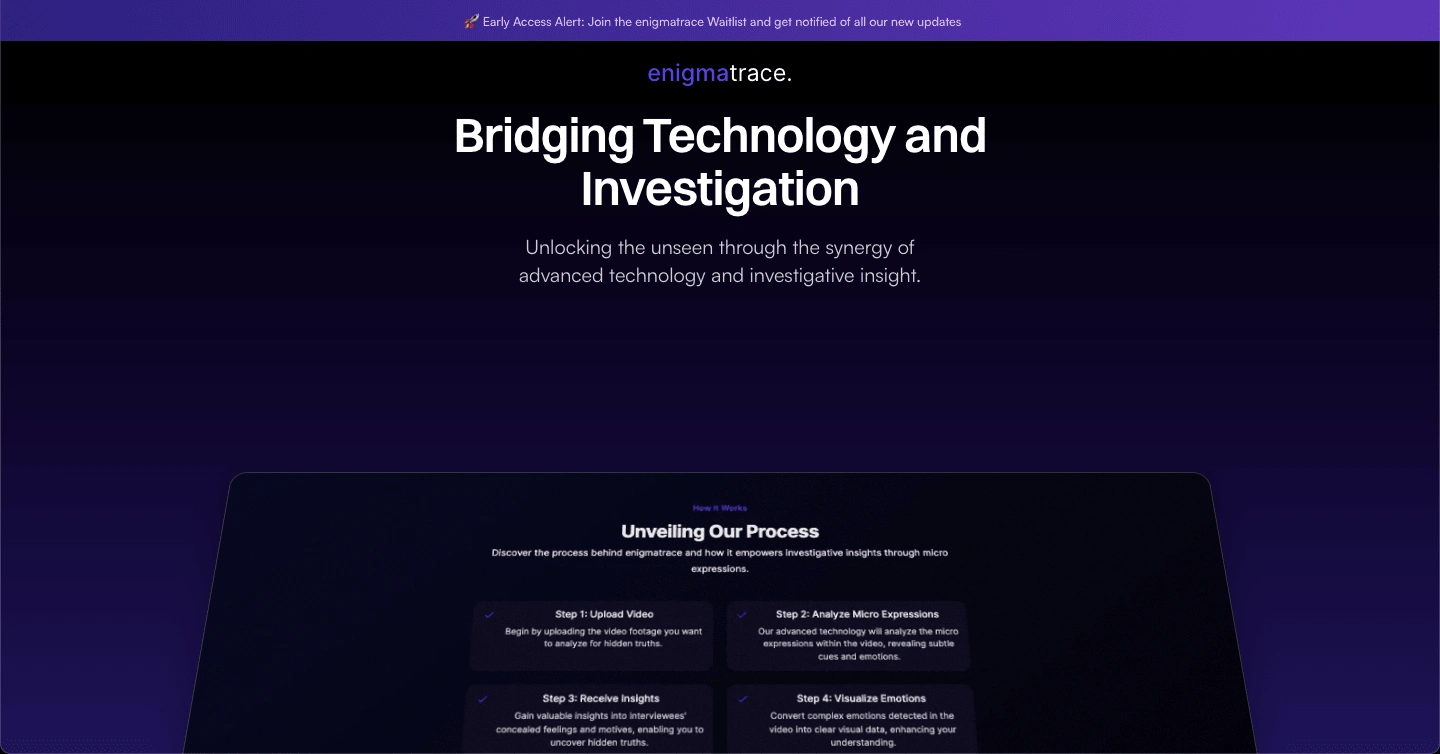

After the validation process, I decided it was finally time to take my project online. For the landing page I chose Framer, which gave me a visually appealing and interactive introduction to enigmatrace.

The intuitive drag-and-drop interface of Framer allowed me to design a professional-looking landing page without writing extensive code.

For the application's dashboard, I opted for Next.js, a powerful React framework that offers server-side rendering and static site generation.

Next.js enabled me to create a fast, scalable, and SEO-friendly dashboard where users can interact with the AI-powered micro-expression analysis tool. More importantly, it gave me more flexibility in how the results are displayed.

The Testing Phase 🧪:

Once my application was live, I designed an experiment to see how far its capabilities extend.

To do this, I asked a couple of my friends to do the same thing: record a video in which they tell a story that happened to them and that I don't know about. If the story was real, meaning it actually happened, they were to throw a small lie into it.

The goal was to see whether my application could accurately judge if the story was real and, if it was, spot the exact time where the lie happened.

After that, we read the results together to see for ourselves whether it performed well or not.

Long story short, it did! 💫


Posted Sep 22, 2023

enigmatrace is a cutting-edge platform designed to uncover emotions & detect deception through facial micro-expressions