ECG Monitor design

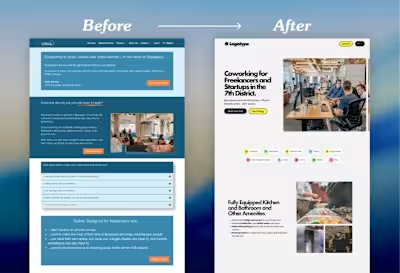

Nobody cares about animations on a hospital bed. Behind the scenes of this hyper-technological event, the embedded world Exhibition&Conference, there is still UX. While the spotlight shines on chipsets, boards, AI, 3D images, and all the buzzwords, the user is someone who just needs help, especially when you're designing an ECG monitor.

I'm not here to show off my designs and say, "Look how cool I am, look at this shiny effect I made." But that makes topics like this hard to post about. That's why at #LVGL we started this project with research. And what's better than #AI for investigating and gathering data quickly? Yeah, I know designers like to jump on Pinterest and get "inspired," but this case felt like it deserved a more serious approach.

So I used Perplexity.ai and ChatGPT for in-depth technical and UX research, to shed some light on these questions:

1. What are the UX best practices for medical devices, according to the Nielsen Norman Group or similar authorities? The answer was that some data must always remain visible, which gave me material for the next question.
2. Which types of information must always remain visible, and which can change without compromising safety?

And so on, until I arrived at more detailed questions, like:

3. What are the accepted color semantics in medical UIs?

That's how we avoided starting from a blank canvas or simply imitating previous designs. Before jumping into Figma, I just dropped these insights into v0.dev... and boom! I had a basic functional prototype to build on.


Posted Jan 22, 2026



Client: LVGL