Geo-Niche Web Directory Development

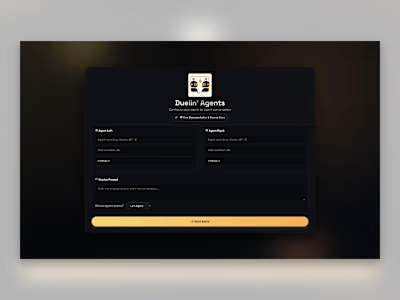

Building a Geo-Niche Web Directory With AI (10k+ high-performing webpages in one click)

Role: Product Designer & Developer

Timeline: 2025

Tools: Vanilla JavaScript, Replit, Python, HTML, CSS

Genesis: Building a Geo-Niche Thrift Directory That Actually Gets Found

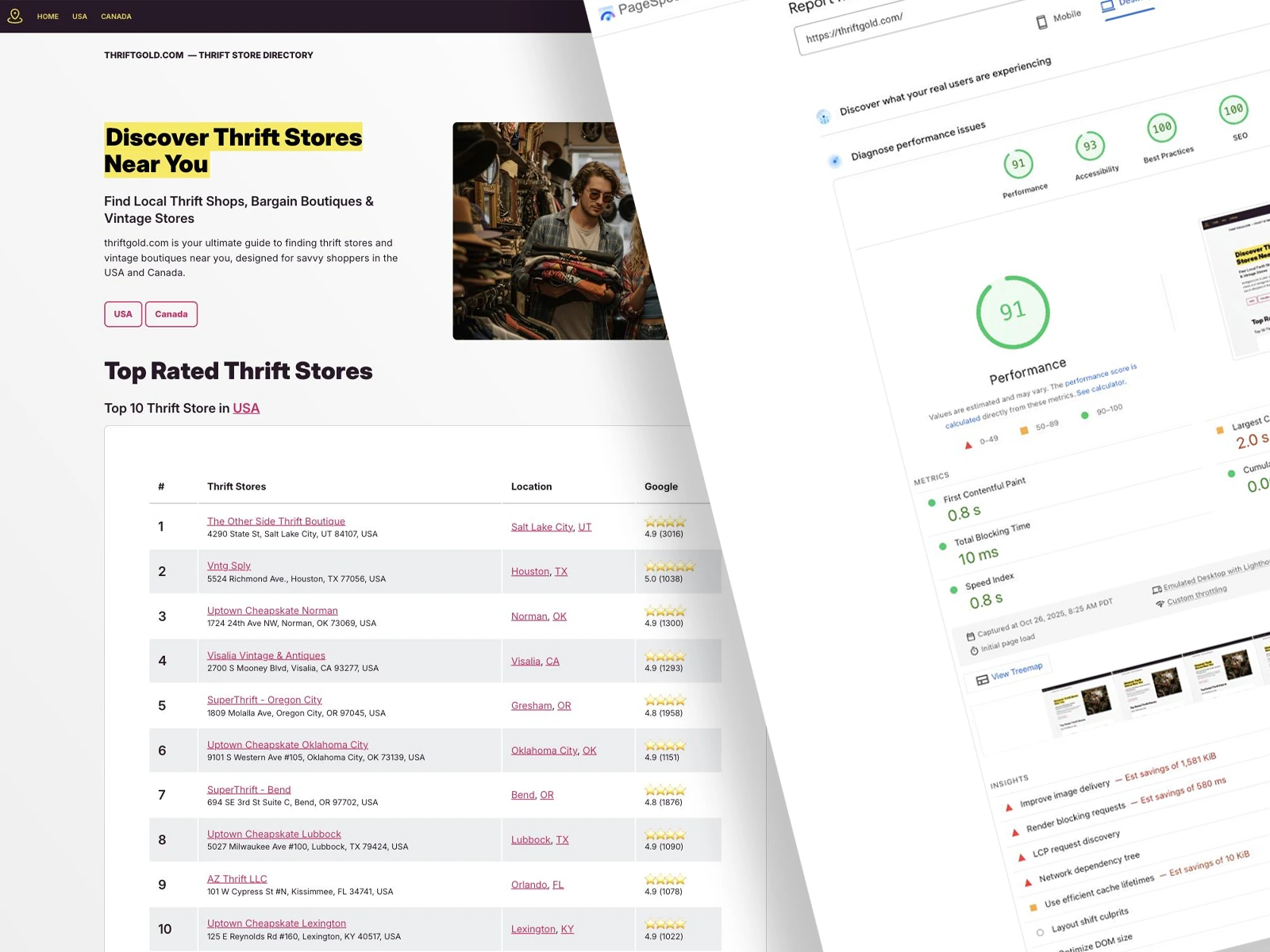

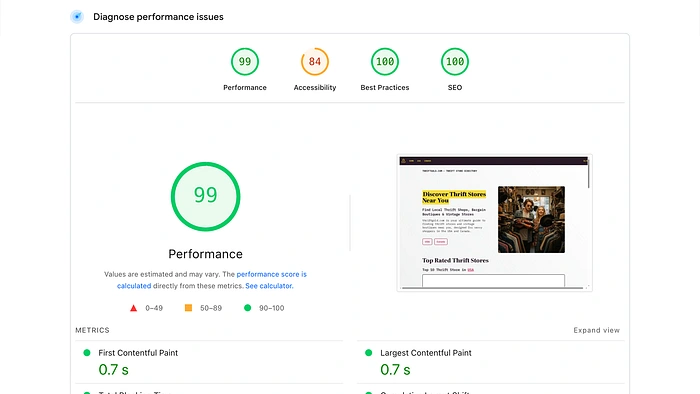

I mapped every North American thrift store and bargain shop into 9,000 flat-HTML pages served from Netlify’s edge CDN. Zero backend, instant global caching, and a single `_redirects` file handling legacy paths in one hop.

Why build this when AI summaries are eating everyone’s clicks? Because first-party location data still enjoys scarcity, especially now that Cloudflare blocks unapproved AI crawlers by default, forcing LLM vendors to pay or stay out.

The Stack (And Why I Chose Each Piece)

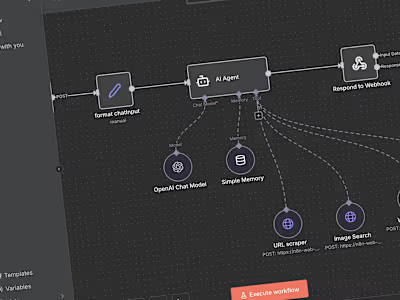

Generator: Custom Python script with Jinja templates

Why: Faster than wrestling with Pelican; Real Python’s Jinja primer was my guide

Config UI: Tiny Flask app

Why: Lets non-devs tweak YAML and hit Build — pattern borrowed from Real Python’s Flask guides

Hosting: Netlify

Why: Free SSL + easy 301s via `_redirects`

AI Assist: Replit Agent (Claude Sonnet 4) for live refactors; Gemini Pro for PRD scaffolding

Collaboration: ChatGPT for rubber-duck debugging; no Figma — HTML/CSS spec came from chat with Replit’s agent

Deadpan aside: Skipping Figma felt like wearing mismatched socks to a design review — technically fine, stylistically liberating.

The Duplicate-Name Nightmare

New York City alone hosts multiple Value Village outlets and a constellation of Salvation Army stores. My first slug scheme (`/value-village/`) generated duplicate pages, and Google promptly chose its own canonical while sidelining the rest.

The fix: Embed geo segments (`/ny/new-york/value-village-w48th/`) and emit absolute self-canonicals on every page. When two stores still collide, I append the street number. Ugly, but indexable.

URL Roulette & SEO Triage
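Before the redirect saga, here is a minimal Python sketch of the geo-segmented slug scheme from the duplicate-name fix. It is an illustration, not the generator's actual code: the store fields (`state`, `city`, `name`, `street`, `number`), the helper names, and the `example.com` base URL are all assumptions.

```python
import re
import unicodedata
from collections import Counter

BASE_URL = "https://example.com"  # placeholder domain, not the real site


def slugify(text: str) -> str:
    """Lowercase, strip accents, and collapse non-alphanumerics into hyphens."""
    ascii_text = (
        unicodedata.normalize("NFKD", text).encode("ascii", "ignore").decode("ascii")
    )
    return re.sub(r"[^a-z0-9]+", "-", ascii_text.lower()).strip("-")


def store_path(store: dict, with_number: bool = False) -> str:
    """Build /state/city/name-street/, optionally appending the street number."""
    leaf = f"{store['name']} {store['street']}"
    if with_number:
        leaf += f" {store['number']}"
    return f"/{slugify(store['state'])}/{slugify(store['city'])}/{slugify(leaf)}/"


def assign_paths(stores: list[dict]) -> list[str]:
    """Give each store a path, adding the street number only where paths collide."""
    first_pass = [store_path(s) for s in stores]
    counts = Counter(first_pass)
    return [
        store_path(s, with_number=True) if counts[p] > 1 else p
        for s, p in zip(stores, first_pass)
    ]


def canonical_tag(path: str) -> str:
    """Absolute, self-referencing canonical tag for the page at `path`."""
    return f'<link rel="canonical" href="{BASE_URL}{path}">'
```

Two stores on the same street still collide until the number is appended; two stores at the same number would need a further tie-breaker, which this sketch does not attempt.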

I renamed slugs three times in one week, and each change required a permanent 301 mapped in `_redirects` to preserve equity. Google Search Console's Page Indexing report quickly flagged redirect chains and "Duplicate, Google chose different canonical" issues.

Key lesson: Code refactors are cheap; crawl budget isn’t.
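One way to keep every legacy path a single hop from its final home is to replay the rename history and collapse the chains before writing the file. The sketch below assumes a chronological list of `(old, new)` renames; the function names and sample paths are hypothetical, and the output uses Netlify's plain `from to status` line format.

```python
def flatten_redirects(renames: list[tuple[str, str]]) -> dict[str, str]:
    """Collapse a chronological rename log into single-hop redirects."""
    flat: dict[str, str] = {}
    for old, new in renames:
        # Repoint every earlier redirect that ended at `old` straight to `new`.
        for source in list(flat):
            if flat[source] == old:
                flat[source] = new
        flat[old] = new
        # `new` is a live page now, so it must not redirect anywhere itself.
        flat.pop(new, None)
    return flat


def render_redirects(flat: dict[str, str]) -> str:
    """Emit one `from to 301` line per legacy path, sorted for stable diffs."""
    lines = [f"{source} {dest} 301" for source, dest in sorted(flat.items())]
    return "\n".join(lines) + "\n"
```

Fed three successive renames of the same store page, `flatten_redirects` returns three entries that all point at the newest slug, so no crawler has to follow a chain.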

I also added structured data love — LocalBusiness schema per store for richer local SERP cards.
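For reference, a per-store LocalBusiness block could be generated roughly like this. It is a sketch under assumptions: the store fields (`name`, `street_address`, `city`, `state`, `country`) and the function name are hypothetical, and only a handful of schema.org properties are shown.

```python
import json


def local_business_jsonld(store: dict, page_url: str) -> str:
    """Return a JSON-LD <script> tag describing one store as a LocalBusiness."""
    data = {
        "@context": "https://schema.org",
        "@type": "LocalBusiness",
        "name": store["name"],
        "url": page_url,
        "address": {
            "@type": "PostalAddress",
            "streetAddress": store["street_address"],
            "addressLocality": store["city"],
            "addressRegion": store["state"],
            "addressCountry": store["country"],
        },
    }
    # Escape "</" so a stray "</script>" in the data cannot close the tag early.
    payload = json.dumps(data, indent=2).replace("</", "<\\/")
    return f'<script type="application/ld+json">\n{payload}\n</script>'
```

The returned string can be dropped into the page template's `<head>`; passing the page's own canonical URL as `page_url` keeps the markup and the canonical tag in agreement.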

From Thrift to Vinyl: FindVinylRecords.com

Armed with battle scars, I repurposed the same generator to launch FindVinylRecords.com — a sister directory exclusively for record stores. The swap was 95% YAML edits and a new CSS palette. Launch-to-index happened in half the time because the redirect playbook was already baked in.

Early Results & What’s Next

The wins:

Index coverage now trends up

Single-hop 301s reduced “Excluded” rows by ~40%

Organic clicks remain modest — content beats scaffolding

Next sprint: Beefy blog posts and local-store spotlights.

Long-term goal: Prove that curated location data still carries resale value, even as AI abstracts the web.

Deadpan footnote: Static sites are like thrift-store vinyl — lightweight, charming, and full of pops if you mishandle the grooves.

Tool Stack in One Breath

Netlify, Replit (Agent v2), ChatGPT, Claude Sonnet 4, Gemini Pro, Python+Jinja, a stubborn `_redirects` file, and a generous pour of caffeine.

Have you built location-based directories? What challenges did you face with duplicate listings and SEO? I’d love to hear your war stories and any tricks you’ve discovered.


Posted Oct 25, 2025

Fast loading geo-niche thrift directory with 10k+ static pages using AI, HTML and Python. Made w/ Replit.
