AWS Lambda Deployment with CloudFormation and GitHub Actions


Posted Nov 17, 2025

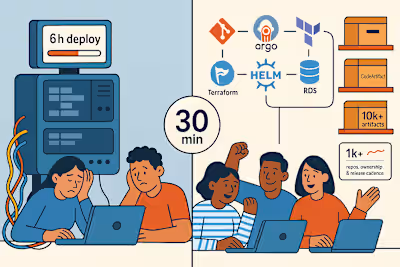

Deployed AWS Lambda using CloudFormation and GitHub Actions for a quick POC.

Hey there, if you've landed here, you're probably a developer interested in deploying an AWS Lambda as quickly and simply as possible. ⚡

You’ve come to the right place! Let me share some of my thoughts on Lambda and serverless. Many tutorials out there involve a lot of manual steps and can be hard to maintain or even set up if you just want to try out AWS services as quickly as possible.

Before Everything

This example uses CloudFormation because, although it isn't the best IaC tool, it's the quickest option for a one-click deployment POC. Terraform isn't ideal for this, but CDK or SAM may be easier to maintain in the long run.

The resources involved are minimal:

- a bucket where we'll store our code as a zip file;
- a helper Lambda that creates an empty zip as a placeholder and then empties the bucket when the stack is deleted;
- our actual Lambda, with a public endpoint URL.

There is no API Gateway here, since it adds too much complexity for a simple POC. Of course, each Lambda also has its own IAM role.
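The helper Lambda's code isn't shown in the post. The following is a rough, dependency-free Java sketch of the lifecycle described above, assuming the helper runs as a CloudFormation custom resource:

- On `Create`, it writes an empty placeholder `my-lambda.zip`.
- On `Delete`, it removes every object so the bucket itself can be deleted.

The `Bucket` interface, `InMemoryBucket` and `HelperS3Objects` are hypothetical stand-ins for the real S3 calls. A real custom resource must also report success or failure to CloudFormation's pre-signed response URL, which this sketch leaves out.

```java
import java.io.ByteArrayOutputStream;
import java.io.IOException;
import java.io.UncheckedIOException;
import java.util.ArrayList;
import java.util.List;
import java.util.Map;
import java.util.TreeMap;
import java.util.zip.ZipOutputStream;

// Hypothetical abstraction over the S3 calls a real helper would make.
interface Bucket {
    void put(String key, byte[] body);
    List<String> keys();
    void delete(String key);
}

// In-memory stand-in so the sketch runs without AWS credentials.
class InMemoryBucket implements Bucket {
    final Map<String, byte[]> objects = new TreeMap<>();

    public void put(String key, byte[] body) { objects.put(key, body); }

    // Returns a copy so callers can delete objects while iterating.
    public List<String> keys() { return new ArrayList<>(objects.keySet()); }

    public void delete(String key) { objects.remove(key); }
}

class HelperS3Objects {
    static final String PLACEHOLDER_KEY = "my-lambda.zip";

    // Builds a ZIP archive with no entries: only the 22-byte end-of-central-directory record.
    static byte[] emptyZip() {
        ByteArrayOutputStream bytes = new ByteArrayOutputStream();
        try (ZipOutputStream zip = new ZipOutputStream(bytes)) {
            zip.finish(); // no entries added
        } catch (IOException e) {
            throw new UncheckedIOException(e); // not expected with an in-memory stream
        }
        return bytes.toByteArray();
    }

    // Reacts to the RequestType CloudFormation sends to a custom resource.
    static void handle(String requestType, Bucket bucket) {
        switch (requestType) {
            case "Create" -> bucket.put(PLACEHOLDER_KEY, emptyZip());
            case "Delete" -> bucket.keys().forEach(bucket::delete); // empty the bucket
            default -> { } // "Update": nothing to do in this sketch
        }
    }

    public static void main(String[] args) {
        InMemoryBucket bucket = new InMemoryBucket();
        handle("Create", bucket);
        System.out.println(bucket.objects.keySet() + ", placeholder size: "
                + bucket.objects.get(PLACEHOLDER_KEY).length + " bytes");
        handle("Delete", bucket);
        System.out.println(bucket.objects.keySet());
    }
}
```

The empty-bucket step on `Delete` matters because CloudFormation cannot delete an S3 bucket that still contains objects.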


Architecture Boring Stuff

User Interaction

A user sends an HTTP request to the Lambda function URL (LambdaFunctionUrl).

The LambdaFunction handles the HTTP request, assuming the LambdaExecutionRole IAM role for the necessary permissions.

The function URL resource provides the public URL. The function's code is deployed from an S3 bucket (codebucket), which stores the .NET code.

S3 and Helper Lambda

The S3 bucket (codebucket) stores the .NET code.

A helper Lambda function (HelperS3ObjectsFunction) manages S3 objects within this bucket, such as creating and deleting objects.

The helper Lambda function assumes the HelperS3ObjectsWriteRole IAM role to manage these S3 operations.

IAM Roles

LambdaExecutionRole: Provides the necessary permissions for the primary Lambda function.

HelperS3ObjectsWriteRole: Provides permissions for the helper Lambda function to manage S3 objects.

Pipeline User for GitHub Actions

The GitHub Actions workflow uses an IAM user (PipelineUser) to deploy updates to the Lambda function and upload the packaged code to the S3 bucket. The workflow includes steps for:

Checking out the code

Setting up .NET

Installing dependencies

Building the project

Zipping the Lambda package

Configuring AWS credentials

Uploading the package to S3

Updating the Lambda function code
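The last two steps are the ones that actually ship code. Here is a sketch of the equivalent AWS CLI calls, built in Java so they can be inspected or handed to `ProcessBuilder`. It assumes a few things:

- `my-lambda.zip` sits in the working directory.
- The bucket name defaults to the example used later in this post.
- `PipelineDeploy` is a hypothetical class name.

The function name and zip key are the ones the post says are predefined in the stack.

```java
import java.util.List;

class PipelineDeploy {
    static final String FUNCTION_NAME = "aws-lambda-code"; // predefined in the CloudFormation stack
    static final String ZIP_KEY = "my-lambda.zip";        // same key as the placeholder, so it gets overwritten

    // "Uploading the package to S3": copy the local zip over the placeholder object.
    static List<String> uploadCommand(String bucket) {
        return List.of("aws", "s3", "cp", ZIP_KEY, "s3://" + bucket + "/" + ZIP_KEY);
    }

    // "Updating the Lambda function code": point the function at the freshly uploaded object.
    static List<String> updateCodeCommand(String bucket) {
        return List.of("aws", "lambda", "update-function-code",
                "--function-name", FUNCTION_NAME,
                "--s3-bucket", bucket,
                "--s3-key", ZIP_KEY);
    }

    public static void main(String[] args) {
        String bucket = args.length > 0 ? args[0] : "blazing-lambda-code-bucket";
        for (List<String> command : List.of(uploadCommand(bucket), updateCodeCommand(bucket))) {
            System.out.println(String.join(" ", command));
        }
    }
}
```

The second call is not optional. Lambda keeps its own copy of the package from the last create or update, so overwriting the object in S3 alone doesn't change the running code.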

Flow

User -> Lambda Function URL -> Lambda Function -> S3 Bucket

Helper Lambda -> S3 Bucket (for management tasks)

Pipeline User -> GitHub Actions -> S3 Bucket and Lambda Function (for deployment)

cloudformation-template.yaml

LET’S START!

If you already have an AWS account and the AWS CLI installed, let's get going (jq is also handy, but not mandatory).

Step-01: 🚀 Deploy CloudFormation Stack for Code
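The deploy command itself isn't reproduced in the post. Here is a minimal sketch that builds the AWS CLI arguments for `aws cloudformation deploy` and for reading the stack outputs afterwards. It assumes the template is saved as cloudformation-template.yaml, and it uses a hypothetical stack name, `blazing-lambda-stack`. `CAPABILITY_NAMED_IAM` acknowledges that the template creates IAM roles, whether or not they have custom names. By default `main` only prints the commands; passing `--run` executes them through `ProcessBuilder`.

```java
import java.io.IOException;
import java.util.List;

class DeployStack {
    // Hypothetical stack name; the post does not show the one it uses.
    static final String STACK_NAME = "blazing-lambda-stack";
    static final String TEMPLATE_FILE = "cloudformation-template.yaml";

    static List<String> deployCommand() {
        return List.of("aws", "cloudformation", "deploy",
                "--template-file", TEMPLATE_FILE,
                "--stack-name", STACK_NAME,
                // Required because the template creates IAM roles.
                "--capabilities", "CAPABILITY_NAMED_IAM");
    }

    // Lists the stack outputs, where the post says the Lambda URL can be found.
    static List<String> outputsCommand() {
        return List.of("aws", "cloudformation", "describe-stacks",
                "--stack-name", STACK_NAME,
                "--query", "Stacks[0].Outputs");
    }

    // Runs a command without a shell, streaming the CLI output to this console.
    static int run(List<String> command) throws IOException, InterruptedException {
        return new ProcessBuilder(command).inheritIO().start().waitFor();
    }

    public static void main(String[] args) throws IOException, InterruptedException {
        boolean execute = args.length > 0 && args[0].equals("--run");
        for (List<String> command : List.of(deployCommand(), outputsCommand())) {
            System.out.println(String.join(" ", command));
            if (execute && run(command) != 0) {
                System.exit(1); // stop at the first failing step
            }
        }
    }
}
```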

Note: The Lambda has a predefined name, aws-lambda-code, for easy identification in the pipeline. The Lambda's zip file is called my-lambda.zip. This is important because it serves as the placeholder used to deploy our empty Lambda.

Step-02: 🛠️ Create AWS IAM User for Pipeline

In this step, we'll create a Pipeline User that GitHub Actions will use. This user will have the necessary policies attached to copy files to our bucket and update our Lambda function.
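The exact policies aren't listed in the post. Here is a minimal least-privilege sketch. It assumes the pipeline only needs to:

- upload my-lambda.zip to the code bucket;
- call `UpdateFunctionCode` on aws-lambda-code.

`s3:GetObject` is included as a precaution, since the update reads the package from S3. The region and account ID in `main` are placeholders, and `PipelinePolicy` is a hypothetical name.

```java
class PipelinePolicy {
    // Builds an IAM policy document (JSON) for the pipeline user.
    static String policyJson(String bucket, String region, String accountId, String functionName) {
        String objectsArn = "arn:aws:s3:::" + bucket + "/*";
        String functionArn = "arn:aws:lambda:" + region + ":" + accountId + ":function:" + functionName;
        return """
                {
                  "Version": "2012-10-17",
                  "Statement": [
                    {
                      "Effect": "Allow",
                      "Action": ["s3:PutObject", "s3:GetObject"],
                      "Resource": "%s"
                    },
                    {
                      "Effect": "Allow",
                      "Action": "lambda:UpdateFunctionCode",
                      "Resource": "%s"
                    }
                  ]
                }
                """.formatted(objectsArn, functionArn);
    }

    public static void main(String[] args) {
        // Placeholder region and account ID: replace with your own.
        System.out.println(policyJson("blazing-lambda-code-bucket", "us-east-1", "123456789012", "aws-lambda-code"));
    }
}
```

Save the output as policy.json. One way to wire it up is with the following commands (the policy name is arbitrary):

- `aws iam create-user --user-name PipelineUser`
- `aws iam put-user-policy --user-name PipelineUser --policy-name pipeline-deploy --policy-document file://policy.json`
- `aws iam create-access-key --user-name PipelineUser`, which returns the AccessKeyId and SecretAccessKey used in the next step.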

Step-03: 🔒 Add Secrets to GitHub Secrets

After creating the IAM user and generating the access keys, follow these steps to add these credentials to your GitHub repository secrets for use in GitHub Actions:

Navigate to Secrets in Your GitHub Repository:

Go to the main page of your repository on GitHub.

Click on the Settings tab at the top of the repository page.

In the left sidebar, click on Secrets and variables > Actions.

Add New Repository Secret:

Click the New repository secret button, and repeat this for each of the following secrets:

AWS_ACCESS_KEY_ID:

Name: AWS_ACCESS_KEY_ID

Value: Enter the AccessKeyId value you obtained.

Click Add secret.

AWS_SECRET_ACCESS_KEY:

Name: AWS_SECRET_ACCESS_KEY

Value: Enter the SecretAccessKey value you obtained.

Click Add secret.

S3_BUCKET_NAME:

Name: S3_BUCKET_NAME

Value: Enter your S3 bucket name (e.g., blazing-lambda-code-bucket).

Click Add secret.

Following these steps, you'll securely add the credentials to your GitHub repository for use in your GitHub Actions workflow.

Step-04: 📦 Dependencies

Some compiled languages, like C# in this example, need a few dependencies:

And a small entry point:

For Java, it's similar:
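The original Java dependencies and entry point aren't reproduced here. As a stand-in, here is a minimal handler sketch that needs no extra libraries. It assumes three things:

- The Lambda Java runtime deserializes the function URL event (payload format 2.0) into a `Map`.
- A handler method taking only the input is accepted.
- Returning `statusCode`/`headers`/`body` shapes the HTTP response.

The class name, the `name` query parameter and the handler string `HelloHandler::handleRequest` are hypothetical. Real projects usually add aws-lambda-java-core and aws-lambda-java-events to get typed events instead.

```java
import java.util.HashMap;
import java.util.Map;

// Hypothetical handler class for a function URL.
class HelloHandler {

    // event: the function URL request, deserialized into nested Maps.
    @SuppressWarnings("unchecked")
    public Map<String, Object> handleRequest(Map<String, Object> event) {
        // queryStringParameters is absent when the URL has no query string.
        Map<String, Object> query = (Map<String, Object>) event.get("queryStringParameters");
        String name = (query != null && query.get("name") != null)
                ? String.valueOf(query.get("name"))
                : "world";

        // In payload format 2.0 the HTTP method lives under requestContext.http.method.
        Map<String, Object> requestContext =
                (Map<String, Object>) event.getOrDefault("requestContext", Map.of());
        Map<String, Object> http = (Map<String, Object>) requestContext.getOrDefault("http", Map.of());
        String method = String.valueOf(http.getOrDefault("method", "GET"));

        // The function URL turns this shape into the HTTP response.
        Map<String, Object> response = new HashMap<>();
        response.put("statusCode", 200);
        response.put("headers", Map.of("Content-Type", "text/plain"));
        response.put("body", "Hello, " + name + " (" + method + ")");
        return response;
    }

    // Local smoke test with a hand-built event; no AWS involved.
    public static void main(String[] args) {
        Map<String, Object> event = Map.of(
                "queryStringParameters", Map.of("name", "Lambda"),
                "requestContext", Map.of("http", Map.of("method", "GET")));
        System.out.println(new HelloHandler().handleRequest(event));
    }
}
```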

Step-05: 🤖 Pipeline Magic

Copy the pipeline into .github/workflows/deploy.yml:

Important: The package is named my-lambda.zip so that it overwrites the placeholder zip with our actual code. aws-lambda-code is the function name predefined in the CloudFormation stack.

Cleaning Up

To delete the CloudFormation stack, use the following command:
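The command itself isn't reproduced in the post. Here is a minimal sketch using a hypothetical stack name, `blazing-lambda-stack`:

- `delete-stack` starts the deletion.
- `wait stack-delete-complete` blocks until the deletion finishes.

The deletion can succeed even though the bucket held code, because the helper Lambda empties the bucket during stack deletion. `CleanUp` is a hypothetical class name.

```java
import java.util.List;

class CleanUp {
    static List<List<String>> deleteCommands(String stackName) {
        return List.of(
                // Starts deleting every resource in the stack.
                List.of("aws", "cloudformation", "delete-stack", "--stack-name", stackName),
                // Waits until the deletion finishes; the CLI returns an error if it fails.
                List.of("aws", "cloudformation", "wait", "stack-delete-complete", "--stack-name", stackName));
    }

    public static void main(String[] args) {
        String stackName = args.length > 0 ? args[0] : "blazing-lambda-stack"; // hypothetical default
        deleteCommands(stackName).forEach(command -> System.out.println(String.join(" ", command)));
    }
}
```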

BLAZINGGG ENJOYY 🎉🔥

You can see your pipeline triggered, the code zipped, uploaded to S3, and the Lambda updated. This workflow will trigger on each commit to the main branch. 🚀

Grab the Lambda URL from the stack output, or directly from the Lambda console, and make an HTTP request to it. 🌐
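To make that request from Java instead of a browser or curl, here's a sketch using the JDK's built-in `java.net.http.HttpClient` (Java 11+). The function URL is passed on the command line. The `name` query parameter is a hypothetical example, so adjust it to whatever your handler reads. Because the function URL is public, the request needs no signing.

```java
import java.io.IOException;
import java.net.URI;
import java.net.URLEncoder;
import java.net.http.HttpClient;
import java.net.http.HttpRequest;
import java.net.http.HttpResponse;
import java.nio.charset.StandardCharsets;
import java.time.Duration;

class CallFunctionUrl {
    // Function URLs look like https://<url-id>.lambda-url.<region>.on.aws/
    static HttpRequest buildRequest(String functionUrl, String name) {
        String query = "name=" + URLEncoder.encode(name, StandardCharsets.UTF_8);
        return HttpRequest.newBuilder(URI.create(functionUrl + "?" + query))
                .timeout(Duration.ofSeconds(10))
                .GET()
                .build();
    }

    public static void main(String[] args) throws IOException, InterruptedException {
        if (args.length == 0) {
            System.err.println("usage: java CallFunctionUrl <function-url>");
            return;
        }
        HttpClient client = HttpClient.newHttpClient();
        HttpResponse<String> response =
                client.send(buildRequest(args[0], "Lambda"), HttpResponse.BodyHandlers.ofString());
        System.out.println(response.statusCode() + " " + response.body());
    }
}
```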

The best part? If you want to try out S3 or DynamoDB from your Lambda, go to the LambdaExecutionRole and add more permissions. You can do it in IAM, or update the template and redeploy the CloudFormation stack if you're brave enough.

And there you have it! A blazing fast, simple way to deploy AWS Lambdas. Enjoy!

Happy deploying :)