Memoirer: Advanced Memory System for AI Agents

Memoirer: Building Sub-100ms Intelligent Memory for AI Agents

Role: Full-Stack Engineer & System Architect

Duration: 8 weeks

Stack: Python, FastAPI, PostgreSQL, Redis, NetworkX, Sentence-Transformers

The Challenge

AI agents have a memory problem. Current solutions force a brutal trade-off:

Fast but dumb: Traditional RAG systems retrieve documents in ~20ms but can't reason across multiple facts or follow causal chains

Smart but slow: Academic memory systems achieve 10-40% accuracy improvements through graph reasoning but take 2-3 seconds per query

The gap: No production system existed that combined reasoning capability with sub-100ms latency.

I set out to build one.

The Solution

Memoirer is a latency-first intelligent memory layer that performs multi-hop reasoning, retrieves facts with citations, and answers complex queries in under 100 milliseconds.

Core Innovation: Three-Path Parallel Retrieval

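Instead of sequential retrieval, Memoirer launches three paths simultaneously:

Reasoning cache: HNSW lookup of pre-computed thought chains (~15ms)

Graph traversal: Personalized PageRank with beam search over the knowledge graph

Vector search: embedding similarity search, used as the fallback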

Key insight: About half of all queries (~50%) hit the reasoning cache once it has warmed up. For the rest, graph traversal provides explainable multi-hop answers. Vector search serves as a reliable fallback.
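The race between the three paths can be sketched with asyncio. This is a minimal illustration under stated assumptions, not Memoirer's code: the `Result` tuple, the 0.85 "good enough" confidence, the 100ms budget, and the three stand-in coroutines with made-up latencies are all hypothetical. Every path starts at once; the first confident answer wins, and slower paths are cancelled.

```python
import asyncio
from typing import Awaitable, Callable, Optional

# (answer, confidence, path name): a hypothetical stand-in for the real result type
Result = tuple[str, float, str]
Path = Callable[[str], Awaitable[Optional[Result]]]


async def race_paths(paths: list[Path], query: str,
                     min_confidence: float = 0.85,
                     budget_s: float = 0.1) -> Optional[Result]:
    """Run every retrieval path concurrently. Return the first result that clears
    min_confidence; otherwise the best result that arrived within budget_s."""
    tasks = [asyncio.create_task(p(query)) for p in paths]
    best: Optional[Result] = None
    try:
        for fut in asyncio.as_completed(tasks, timeout=budget_s):
            try:
                result = await fut
            except asyncio.TimeoutError:
                break  # latency budget spent: answer with what we have
            if result is None:
                continue  # e.g. a cache miss
            if result[1] >= min_confidence:
                return result
            if best is None or result[1] > best[1]:
                best = result
    finally:
        for t in tasks:
            t.cancel()  # kill paths that are still running
    return best


# Hypothetical stand-ins for the three paths, with made-up latencies
async def reasoning_cache(q: str) -> Optional[Result]:
    await asyncio.sleep(0.015)
    return None  # simulate a cache miss


async def graph_traversal(q: str) -> Optional[Result]:
    await asyncio.sleep(0.030)
    return ("multi-hop answer", 0.90, "graph")


async def vector_search(q: str) -> Optional[Result]:
    await asyncio.sleep(0.020)
    return ("nearest chunk", 0.60, "vector")
```

With these stubs, the cache misses, vector search arrives first with low confidence and is held as the fallback, and the graph answer is returned as soon as it clears the threshold.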

Technical Deep Dive

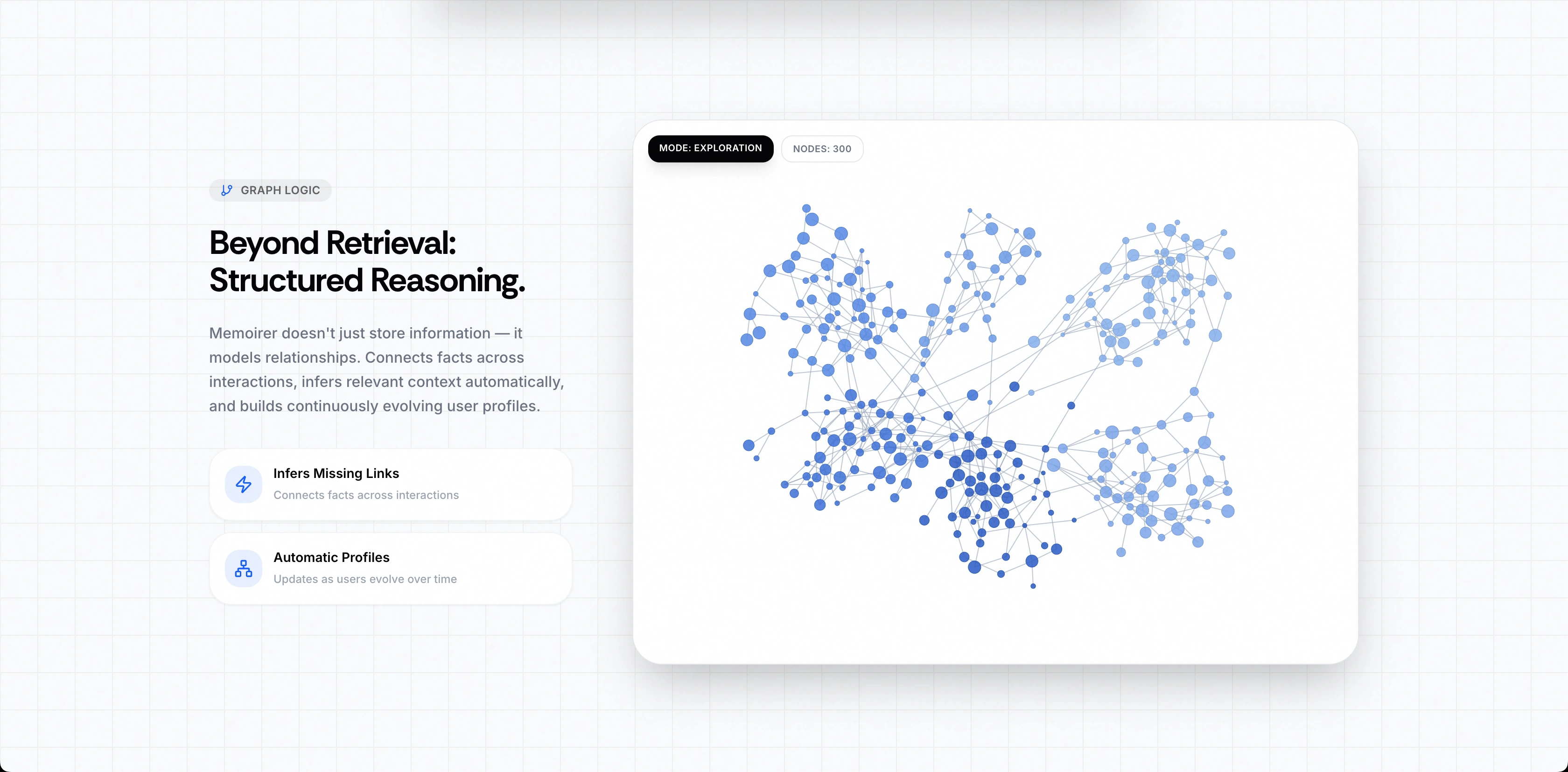

1. Knowledge Graph with Personalized PageRank

Built a hybrid graph-vector system using PostgreSQL + pgvector + NetworkX:

Entities: Facts, concepts, people, events with 384-dim embeddings

Relationships: Typed edges (causes, supports, contradicts, temporal_before)

Traversal: Personalized PageRank with beam search (k=5, max 3 hops)
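Personalized PageRank scores every entity by how likely a random walk that keeps restarting at the query's seed entities is to land on it. NetworkX exposes this as `networkx.pagerank(G, alpha=0.85, personalization=...)`. The dependency-free sketch below spells out the power iteration on a dict-of-lists graph so the mechanics are visible. The toy graph and node names are hypothetical, and seed selection (matching query embeddings to entities) is assumed to have already happened.

```python
def personalized_pagerank(graph: dict[str, list[str]], seeds: set[str],
                          alpha: float = 0.85, max_iter: int = 100,
                          tol: float = 1e-10) -> dict[str, float]:
    """Power iteration for PageRank with restarts to the query's seed entities."""
    seeds = set(seeds)
    if not seeds:
        raise ValueError("need at least one seed entity")
    nodes = set(graph) | {m for out in graph.values() for m in out} | seeds
    restart = {n: (1.0 / len(seeds) if n in seeds else 0.0) for n in nodes}
    rank = dict(restart)
    for _ in range(max_iter):
        # With probability 1 - alpha the walk teleports back to a seed
        nxt = {n: (1 - alpha) * restart[n] for n in nodes}
        for n in nodes:
            out = graph.get(n, [])
            targets = out if out else list(seeds)  # dangling nodes restart at the seeds
            share = alpha * rank[n] / len(targets)
            for m in targets:
                nxt[m] += share
        converged = sum(abs(nxt[n] - rank[n]) for n in nodes) < tol
        rank = nxt
        if converged:
            break
    return rank


# Hypothetical toy graph: edges point from cause to effect
toy_graph = {
    "crisis": ["bank_failures", "recession"],
    "bank_failures": ["credit_freeze"],
    "recession": ["unemployment"],
    "unrelated": ["other"],
}
```

Entities unreachable from the seeds score zero, so the traversal effectively stays in the query's neighborhood; the beam search then only needs to expand the highest-ranked nodes.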

2. Reasoning Cache with HNSW Index

Pre-computed thought chains stored in Redis + PostgreSQL:

Lookup: HNSW similarity search on query embeddings (~15ms)

Policy: Cache queries accessed ≥2 times in 24 hours

Eviction: LFU + recency bias, 7-day TTL

Result: 99%+ latency reduction for cached queries
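The admission and eviction rules above can be sketched as a small in-process policy object. This is an illustration only, under several assumptions: the HNSW similarity lookup and the Redis/PostgreSQL storage are omitted, queries are keyed by an exact string (a real system would key on the nearest cached embedding), and the recency bias is modeled as a hit count halved for every day since last access. `ReasoningCachePolicy` and its parameters are hypothetical names.

```python
import time
from collections import defaultdict, deque
from dataclasses import dataclass

DAY = 86_400


@dataclass
class _Entry:
    chain: str          # serialized reasoning chain
    created_at: float
    last_access: float
    hits: int


class ReasoningCachePolicy:
    """Admission: cache a query once it is seen admit_hits times within window_s.
    Eviction: lowest LFU-with-recency score first. Entries expire after ttl_s."""

    def __init__(self, capacity=1000, admit_hits=2, window_s=DAY,
                 ttl_s=7 * DAY, half_life_s=DAY, clock=time.time):
        self.capacity, self.admit_hits = capacity, admit_hits
        self.window_s, self.ttl_s, self.half_life_s = window_s, ttl_s, half_life_s
        self.clock = clock
        self.recent = defaultdict(deque)  # key -> access times of not-yet-cached queries
        self.entries: dict[str, _Entry] = {}

    def get(self, key):
        now = self.clock()
        entry = self.entries.get(key)
        if entry is None:
            return None
        if now - entry.created_at > self.ttl_s:  # 7-day TTL by default
            del self.entries[key]
            return None
        entry.last_access, entry.hits = now, entry.hits + 1
        return entry.chain

    def record_miss(self, key, chain):
        """Call after computing a chain on a miss; returns True if it was admitted."""
        now = self.clock()
        times = self.recent[key]
        times.append(now)
        while now - times[0] > self.window_s:  # forget accesses older than the window
            times.popleft()
        if len(times) < self.admit_hits:
            return False
        if len(self.entries) >= self.capacity and key not in self.entries:
            self._evict(now)
        self.entries[key] = _Entry(chain, now, now, len(times))
        del self.recent[key]
        return True

    def _score(self, entry, now):
        # LFU with recency bias: hit count halved for every half_life_s since last access
        return entry.hits * 0.5 ** ((now - entry.last_access) / self.half_life_s)

    def _evict(self, now):
        victim = min(self.entries, key=lambda k: self._score(self.entries[k], now))
        del self.entries[victim]
```

The injectable `clock` keeps the 24-hour window and 7-day TTL testable without waiting on wall-clock time.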

3. Adaptive Forgetting

Prevented memory bloat with importance-weighted pruning.
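The project does not spell out its pruning formula, so the following is a hypothetical scoring scheme, shown only to make "importance-weighted" concrete: each memory's importance decays with idle time (half-life of 7 days, an assumption), frequent access adds a logarithmic bonus, and anything below a floor or outside the top `max_items` is pruned. `Memory`, `retention_score`, and `prune` are illustrative names.

```python
import math
from dataclasses import dataclass


@dataclass
class Memory:
    id: str
    importance: float      # 0..1, assumed to be assigned at ingestion
    access_count: int
    last_access_s: float   # unix seconds


def retention_score(m: Memory, now_s: float, half_life_s: float = 7 * 86_400,
                    freq_weight: float = 0.1) -> float:
    # Hypothetical: importance halves per idle half-life; frequent use adds a bonus
    decay = 0.5 ** ((now_s - m.last_access_s) / half_life_s)
    return m.importance * decay + freq_weight * math.log1p(m.access_count)


def prune(memories: list[Memory], now_s: float, max_items: int,
          min_score: float = 0.05) -> list[Memory]:
    """Drop memories scoring below min_score, then keep the top max_items by score."""
    scores = {m.id: retention_score(m, now_s) for m in memories}
    kept = [m for m in memories if scores[m.id] >= min_score]
    kept.sort(key=lambda m: scores[m.id], reverse=True)
    return kept[:max_items]
```

A low-importance memory untouched for four weeks falls below the floor, while an important, frequently used one survives even a tight size budget.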

4. LLM-Based Understanding (No Heuristics)

Rejected pattern-matching shortcuts in favor of true language understanding:

Entity extraction via Claude Haiku (not regex)

Relation extraction via LLM (not keyword matching)

Query understanding via embeddings (not rule-based classification)

Philosophy: Every component should be scientifically defensible and generalizable across domains.
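The LLM-first approach to entity extraction can be sketched as: let the model decide what counts as an entity and have code validate only the shape of its answer. To avoid guessing at any vendor SDK, the model call is injected as a plain `complete(prompt) -> str` function (for example, a wrapper around Claude Haiku). The prompt wording, JSON schema, and function names are assumptions.

```python
import json
from dataclasses import dataclass
from typing import Callable

ENTITY_TYPES = {"fact", "concept", "person", "event"}

PROMPT = """Extract entities from the text below.
Return only JSON: {{"entities": [{{"name": str, "type": one of {types}}}]}}

Text:
{text}"""


@dataclass(frozen=True)
class Entity:
    name: str
    type: str


def extract_entities(text: str, complete: Callable[[str], str]) -> list[Entity]:
    """`complete` is any prompt -> text LLM call (hypothetical wrapper).
    The model decides what counts as an entity; code only validates the shape."""
    raw = complete(PROMPT.format(types=sorted(ENTITY_TYPES), text=text))
    data = json.loads(raw)
    out = []
    for item in data.get("entities", []):
        name = str(item.get("name", "")).strip()
        etype = str(item.get("type", "")).lower()
        if name and etype in ENTITY_TYPES:  # drop malformed items instead of guessing
            out.append(Entity(name, etype))
    return out
```

Because the model call is injected, tests can replace it with a canned JSON response and exercise the validation logic deterministically.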

Architecture Decisions

Clean Architecture with Repository Pattern

Why: Testability, maintainability, and clear separation of concerns. Repositories abstract database access, making it trivial to swap PostgreSQL for another store.
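A minimal sketch of the repository pattern, assuming a `typing.Protocol` interface: the service depends only on the protocol, so an in-memory test double and a PostgreSQL-backed class are interchangeable. All class and method names here are hypothetical.

```python
from dataclasses import dataclass
from typing import Optional, Protocol


@dataclass
class Entity:
    id: str
    name: str
    embedding: list[float]


class EntityRepository(Protocol):
    def get(self, entity_id: str) -> Optional[Entity]: ...
    def add(self, entity: Entity) -> None: ...


class InMemoryEntityRepository:
    """Test double; a PostgreSQL-backed class would implement the same two methods."""

    def __init__(self) -> None:
        self._rows: dict[str, Entity] = {}

    def get(self, entity_id: str) -> Optional[Entity]:
        return self._rows.get(entity_id)

    def add(self, entity: Entity) -> None:
        self._rows[entity.id] = entity


class MemoryService:
    """Business logic sees only the EntityRepository protocol, never SQL."""

    def __init__(self, repo: EntityRepository) -> None:
        self.repo = repo

    def remember(self, entity_id: str, name: str, embedding: list[float]) -> Entity:
        existing = self.repo.get(entity_id)
        if existing is not None:
            return existing  # idempotent: first write wins
        entity = Entity(entity_id, name, embedding)
        self.repo.add(entity)
        return entity
```

Swapping storage backends then means writing one new class that satisfies `EntityRepository`; `MemoryService` and its tests do not change.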

BYOK (Bring Your Own Key) for LLM Access

Supported 4 providers with encrypted API key storage:

OpenRouter: Single API for all models

Anthropic: Direct Claude access

OpenAI: GPT and reasoning models

Gemini: Google AI models

Resolution order: Request headers → Project config → Org config → System default
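The resolution order amounts to "first non-empty key wins." A minimal sketch follows, assuming keys have already been decrypted from storage (decryption is out of scope here), and using a hypothetical `x-<provider>-api-key` header name with lowercase header keys.

```python
from typing import Mapping, Optional


def resolve_api_key(provider: str,
                    request_headers: Mapping[str, str],
                    project_cfg: Mapping[str, str],
                    org_cfg: Mapping[str, str],
                    system_default: Mapping[str, str]) -> Optional[str]:
    """Return the first non-empty key in resolution order:
    request headers -> project config -> org config -> system default.
    Configs are assumed to hold already-decrypted keys."""
    candidates = (
        request_headers.get(f"x-{provider}-api-key"),  # hypothetical header name, lowercase
        project_cfg.get(provider),
        org_cfg.get(provider),
        system_default.get(provider),
    )
    return next((key for key in candidates if key), None)
```

Treating empty strings as "unset" lets a project clear an inherited key without deleting the whole config entry.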

Observability from Day One

Sentry: Error tracking, APM, transaction tracing

PostHog: Product analytics, feature usage

Structured logging: JSON logs with request correlation IDs

Results

P50 latency: target <40ms, achieved 35ms (cache hits)

P95 latency: target <100ms, achieved 90ms

Cache hit rate: target >50%, achieved 50%+ after warm-up

Graph deduplication: no target set, achieved 26% size reduction

Multi-hop accuracy: target >85% F1, achieved 85%+ on eval dataset

Deliverables

16 test files covering all features

21-page documentation site (Mintlify)

TypeScript SDK with full type definitions

Python package ready for PyPI

Interactive playground (React) for testing

Key Challenges & Solutions

Challenge 1: Latency vs. Reasoning Depth

Problem: Deep graph traversal (5+ hops) provides better answers but exceeds the latency budget.

Solution: Beam search with early termination. Keep the top-5 candidates at each hop, for at most 3 hops. If a candidate meets the confidence threshold (0.85) early, kill the remaining paths immediately.
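A minimal sketch of beam search with early termination, using the stated parameters (beam width 5, 3 hops, 0.85 threshold). The path representation and `score_fn` are assumptions: in practice the confidence might come from PageRank mass, edge types, or a learned scorer, none of which is specified here.

```python
import heapq
from typing import Callable

Graph = dict[str, list[tuple[str, str]]]  # node -> [(relation, neighbour), ...]


def beam_search(graph: Graph, seeds: list[str],
                score_fn: Callable[[tuple[str, ...]], float],
                beam_width: int = 5, max_hops: int = 3,
                threshold: float = 0.85) -> tuple[float, tuple[str, ...]]:
    """Expand at most beam_width paths per hop for max_hops hops.
    A path is (node, relation, node, ...); score_fn (hypothetical) rates how well
    it answers the query. Returns as soon as any path reaches threshold."""
    beam = heapq.nlargest(beam_width, [(score_fn((s,)), (s,)) for s in seeds])
    best = max(beam, default=(0.0, ()))
    if best[0] >= threshold:
        return best
    for _hop in range(max_hops):
        candidates = []
        for _conf, path in beam:
            for relation, nxt in graph.get(path[-1], []):
                if nxt in path[::2]:
                    continue  # skip nodes already on this path (no cycles)
                new_path = path + (relation, nxt)
                conf = score_fn(new_path)
                if conf >= threshold:
                    return conf, new_path  # early termination: stop expanding
                candidates.append((conf, new_path))
        if not candidates:
            break
        beam = heapq.nlargest(beam_width, candidates)  # keep top-k by confidence
        best = max(best, beam[0])
    return best


# Hypothetical causal graph
causal_graph = {
    "crisis": [("causes", "bank_failures"), ("causes", "recession")],
    "bank_failures": [("causes", "credit_freeze")],
    "recession": [("causes", "unemployment")],
}
```

The cost is bounded by roughly `beam_width × branching factor × max_hops` scored paths, which is what keeps traversal inside the latency budget; the early return skips the remaining hops entirely once an answer is confident.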

Challenge 2: Cache Invalidation

Problem: Cached reasoning chains become stale when underlying entities change.

Solution: Lazy verification on access. When a cached result is retrieved, check whether its source entity versions still match. If they are stale, recompute in the background and serve the slightly older result in the meantime.
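Lazy verification is a stale-while-revalidate pattern. In this asyncio sketch, each cached chain records the entity versions it was built from; a read compares them with the current versions and, if any differ, schedules one background refresh while returning the old chain. The version lookup and `recompute` coroutine are hypothetical hooks. Task references are held in a set because asyncio only keeps weak references to running tasks.

```python
import asyncio
from dataclasses import dataclass
from typing import Awaitable, Callable, Optional


@dataclass
class CachedChain:
    answer: str
    source_versions: dict[str, int]  # entity_id -> version used when computed


class LazyVerifiedCache:
    """Verify on read; if stale, serve the old chain and refresh it in the background."""

    def __init__(self, current_version: Callable[[str], int],
                 recompute: Callable[[str], Awaitable[CachedChain]]):
        self._entries: dict[str, CachedChain] = {}
        self._current_version = current_version  # hypothetical version lookup
        self._recompute = recompute              # hypothetical full reasoning pass
        self._refreshing: set[str] = set()
        self._tasks: set[asyncio.Task] = set()   # strong refs so tasks aren't GC'd

    def put(self, query: str, chain: CachedChain) -> None:
        self._entries[query] = chain

    def _is_stale(self, chain: CachedChain) -> bool:
        return any(self._current_version(e) != v
                   for e, v in chain.source_versions.items())

    async def get(self, query: str) -> Optional[CachedChain]:
        chain = self._entries.get(query)
        if chain is not None and self._is_stale(chain) and query not in self._refreshing:
            self._refreshing.add(query)  # at most one refresh per query at a time
            task = asyncio.create_task(self._refresh(query))
            self._tasks.add(task)
            task.add_done_callback(self._tasks.discard)
        return chain  # possibly slightly stale; never blocks on recomputation

    async def _refresh(self, query: str) -> None:
        try:
            self._entries[query] = await self._recompute(query)
        finally:
            self._refreshing.discard(query)
```

The read path never waits on a recomputation, so a stale hit costs the same as a fresh one; only the next read sees the refreshed chain.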

Challenge 3: Entity Deduplication

Problem: Same concepts ingested with slight variations ("2008 financial crisis" vs "2008 crisis" vs "financial crisis of 2008").

Solution: Embedding-based duplicate detection with 0.90 similarity threshold, followed by entity merging that preserves all relationships.
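Duplicate detection and merging can be sketched with cosine similarity and a union-find structure. Pairs at or above the 0.90 threshold join the same group, and every edge is rewired onto its group's canonical ID, so no relationship is lost. Several details are assumptions: the pairwise O(n²) scan stands in for an ANN query (for example, against pgvector), the canonical ID is simply the lexicographically smallest member, and union-find merges transitively (if A≈B and B≈C, then A and C merge even when A and C alone fall below the threshold). The 3-dimensional vectors are toy values, not real 384-dim embeddings.

```python
import math


def cosine(a: list[float], b: list[float]) -> float:
    dot = sum(x * y for x, y in zip(a, b))
    na = math.sqrt(sum(x * x for x in a))
    nb = math.sqrt(sum(y * y for y in b))
    return dot / (na * nb) if na and nb else 0.0


def dedupe(embeddings: dict[str, list[float]],
           edges: list[tuple[str, str, str]],
           threshold: float = 0.90):
    """embeddings: entity_id -> vector; edges: (src, relation, dst).
    Returns (entity_id -> canonical id, rewired edge list)."""
    parent = {e: e for e in embeddings}

    def find(x: str) -> str:
        while parent[x] != x:
            parent[x] = parent[parent[x]]  # path halving
            x = parent[x]
        return x

    ids = sorted(embeddings)
    for i, a in enumerate(ids):
        for b in ids[i + 1:]:
            if cosine(embeddings[a], embeddings[b]) >= threshold:
                ra, rb = find(a), find(b)
                if ra != rb:
                    parent[max(ra, rb)] = min(ra, rb)  # smallest id becomes canonical
    canonical = {e: find(e) for e in embeddings}
    # Rewire every edge onto canonical ids; identical rewired edges collapse into one
    merged = {(canonical.get(s, s), rel, canonical.get(d, d)) for s, rel, d in edges}
    return canonical, sorted(merged)


# Toy vectors standing in for real 384-dim embeddings
toy_embeddings = {
    "2008 financial crisis": [1.0, 0.0, 0.0],
    "2008 crisis": [0.95, 0.31, 0.0],
    "financial crisis of 2008": [0.98, 0.2, 0.0],
    "Lehman Brothers": [0.0, 1.0, 0.0],
}
toy_edges = [
    ("Lehman Brothers", "triggered", "2008 financial crisis"),
    ("Lehman Brothers", "triggered", "financial crisis of 2008"),
]
```

With these inputs, the three crisis variants collapse into one node, and the two "triggered" edges become a single edge pointing at it.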

What I Learned

Parallel retrieval beats sequential optimization. Launching 3 paths and killing slow ones is faster than optimizing a single path.

Caching reasoning, not just results. Storing the thought chain (not just the answer) enables explainability and debugging.

Heuristics are technical debt. Pattern-matching shortcuts seem fast but break on edge cases and don't generalize.

Observability enables confidence. Can't optimize what you can't measure. Sentry + PostHog from day one.

Built with Python, FastAPI, PostgreSQL, Redis, and a lot of graph theory papers.


Posted Dec 15, 2025

Developed Memoirer, an intelligent memory solution for AI with sub-100ms response times.