Explainable-AI-XAI-for-pothole-detection

Posted Jul 25, 2024

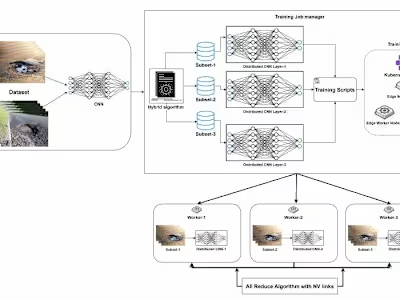

This repository contains an XAI (Explainable Artificial Intelligence) project that classifies road surfaces into two categories, 'Pothole' and 'No Pothole'. It uses LIME (Local Interpretable Model-agnostic Explanations) to show which parts of the input drove each prediction, so the model's decisions are transparent and can be checked by a human.

Key Features:

- Deep learning techniques tailored to binary pothole classification.
- Integration of LIME for interpretable explanations of individual model predictions.
- Datasets covering diverse road scenarios, used to improve classification accuracy.
- Interactive visualizations of feature importance that help users understand the model's decision boundary.
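LIME treats the classifier as a black box. It switches parts of the input on and off, records how the predicted probability changes, and fits a weighted linear model whose coefficients serve as the explanation. The following is a minimal NumPy sketch of that idea. It is not the project's code or the `lime` package's API. It makes several assumptions: square grid patches stand in for the image-segmentation step that `lime` performs, hidden patches are blacked out, and a hypothetical `toy_model` replaces the trained pothole classifier. All function names are illustrative.

```python
import numpy as np


def grid_segments(height, width, grid=4):
    """Label each pixel with the id of the grid cell ("superpixel") it falls in."""
    rows = np.arange(height) * grid // height
    cols = np.arange(width) * grid // width
    return rows[:, None] * grid + cols[None, :]


def explain_instance(image, predict_proba, target=1, grid=4,
                     num_samples=500, kernel_width=0.25, alpha=1.0, seed=0):
    """Return one importance score per grid patch for class `target`."""
    rng = np.random.default_rng(seed)
    segments = grid_segments(image.shape[0], image.shape[1], grid)
    n_seg = grid * grid

    # Each row switches patches on (1) or off (0); row 0 is the original image.
    z = rng.integers(0, 2, size=(num_samples, n_seg))
    z[0] = 1

    # Build the perturbed images by blacking out the switched-off patches.
    batch = []
    for row in z:
        keep = row[segments].astype(bool)
        if image.ndim == 3:  # broadcast the mask over colour channels
            keep = keep[..., None]
        batch.append(np.where(keep, image, 0.0))
    y = predict_proba(np.stack(batch))[:, target]

    # Cosine distance between each binary mask and the all-ones mask, then an
    # exponential kernel so samples close to the original image count more.
    dist = 1.0 - np.sqrt(z.sum(axis=1) / n_seg)
    w = np.exp(-(dist ** 2) / kernel_width ** 2)

    # Weighted ridge regression; the patch coefficients are the explanation.
    X = np.hstack([np.ones((num_samples, 1)), z.astype(float)])
    reg = alpha * np.eye(n_seg + 1)
    reg[0, 0] = 0.0  # leave the intercept unpenalised
    coef = np.linalg.solve(X.T @ (w[:, None] * X) + reg, X.T @ (w * y))
    return coef[1:]


# Hypothetical stand-in for the trained model: the "Pothole" probability is
# the mean brightness of the top-left 2x2 patch of an 8x8 grayscale image.
def toy_model(batch):
    p = batch[:, :2, :2].mean(axis=(1, 2))
    return np.stack([1.0 - p, p], axis=1)  # columns: [No Pothole, Pothole]


scores = explain_instance(np.ones((8, 8)), toy_model)
print(scores.argmax())  # 0
```

`toy_model` only looks at the top-left patch, so the surrogate assigns nearly all of the importance to patch 0. With a real pothole classifier, you would hope to see a similar concentration on the patches that contain the pothole.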

Fairness in AI:

Fairness in model predictions is a priority for this project. LIME explains individual predictions. By inspecting which features drive each decision, we aim to uncover latent biases in the model and the dataset and to ensure that the pothole detector does not systematically favor any particular subset of the data. The project follows the principles of responsible AI: every prediction should be fair, justifiable, and understandable.
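LIME explains one prediction at a time, so a dataset-level check is a useful complement when looking for bias. One common approach, which the original project does not describe, is to compare error rates across subgroups of the evaluation data. The sketch below assumes that each image carries a hypothetical group tag (here, the lighting condition). It reports two rates per group: the false-negative rate (missed potholes) and the false-positive rate. The labels, predictions, and tags are made up for illustration.

```python
from collections import defaultdict


def error_rates_by_group(y_true, y_pred, groups, positive="Pothole"):
    """Per-group false-negative rate (missed potholes) and false-positive rate."""
    counts = defaultdict(lambda: {"fn": 0, "pos": 0, "fp": 0, "neg": 0})
    for truth, pred, group in zip(y_true, y_pred, groups):
        c = counts[group]
        if truth == positive:
            c["pos"] += 1
            c["fn"] += int(pred != positive)
        else:
            c["neg"] += 1
            c["fp"] += int(pred == positive)
    nan = float("nan")  # reported when a group has no examples of a class
    return {
        group: {
            "fnr": c["fn"] / c["pos"] if c["pos"] else nan,
            "fpr": c["fp"] / c["neg"] if c["neg"] else nan,
        }
        for group, c in counts.items()
    }


# Hypothetical labels, predictions and lighting tags for six images.
y_true = ["Pothole", "Pothole", "No Pothole", "Pothole", "Pothole", "No Pothole"]
y_pred = ["Pothole", "Pothole", "No Pothole", "No Pothole", "Pothole", "No Pothole"]
groups = ["day", "day", "day", "night", "night", "night"]
rates = error_rates_by_group(y_true, y_pred, groups)
print(rates)  # {'day': {'fnr': 0.0, 'fpr': 0.0}, 'night': {'fnr': 0.5, 'fpr': 0.0}}
```

In this made-up example, `night` images have a higher miss rate. A gap like that would be a cue to inspect the LIME explanations for those images.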

Objective:

Our aim is to create a solution that doesn't just classify potholes, but also offers stakeholders a clear understanding of the reasons behind each classification, bridging the gap between AI predictions and actionable insights.

How to Use:

1. Clone the repository.
2. Install the required dependencies (specified in the code).
3. Provide your own road-surface data or use the sample dataset included with the project.
4. Run the model and examine its decision-making through the LIME visualizations.
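Viewing LIME visualizations usually means highlighting the regions that push a prediction toward the target class. The `lime` package provides `get_image_and_mask` for this purpose. The following NumPy sketch illustrates the same idea independently of that package. It assumes per-patch importance scores on a regular grid, such as those produced by a LIME-style surrogate. It keeps only the top `num_features` patches with positive scores and blends a highlight colour into them. The function names, parameters, and scores are hypothetical.

```python
import numpy as np


def top_patch_mask(scores, image_shape, grid=4, num_features=3):
    """Return an H x W boolean mask over the highest positive-scoring grid patches."""
    height, width = image_shape[:2]
    rows = np.arange(height) * grid // height
    cols = np.arange(width) * grid // width
    segments = rows[:, None] * grid + cols[None, :]  # patch id for every pixel
    top = np.argsort(scores)[::-1][:num_features]
    # Keep only patches that argue *for* the class (positive weights).
    chosen = [int(s) for s in top if scores[s] > 0]
    return np.isin(segments, chosen)


def overlay(image, mask, color=(1.0, 0.0, 0.0), alpha=0.5):
    """Blend `color` into the masked pixels of an H x W x 3 float image."""
    out = np.array(image, dtype=float)  # copy so the input is untouched
    out[mask] = (1 - alpha) * out[mask] + alpha * np.asarray(color)
    return out


# Hypothetical per-patch scores for a 4x4 grid over an 8x8 RGB image.
scores = np.zeros(16)
scores[0], scores[5], scores[3] = 0.9, 0.4, -0.2
mask = top_patch_mask(scores, (8, 8, 3))
highlighted = overlay(np.zeros((8, 8, 3)), mask)
print(mask.sum())  # 8 (two 2x2 patches)
```

Dropping negative-scoring patches mirrors the common choice of showing only the evidence *for* 'Pothole'. To show evidence against it as well, you could give negatively weighted patches a second colour.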

Contributions:

We welcome contributions that improve classification precision, extend the scope of the XAI applications, or optimize the underlying algorithms. Efforts to further improve fairness are particularly appreciated.