Built with Bolt

PromptLenz: AI Model Evaluation Web App

A Comprehensive Web App for AI Model Evaluation and Comparison

PromptLenz is a developer-centric platform designed to streamline the evaluation and comparison of large language models (LLMs) across multiple AI providers. It offers a unified interface to test, analyze, and compare responses from over 50 AI models, with features such as side-by-side comparison, AI-powered response analysis, visual diffing, and real-time performance metrics (latency, token usage, and cost). Built for AI developers, researchers, and content creators, PromptLenz replaces guesswork and repetitive manual testing with measured comparisons, enabling faster, data-driven model selection and prompt optimization.

What is PromptLenz?

PromptLenz is an AI model comparison tool designed to evaluate prompt responses from multiple LLMs in parallel. It supports over 50 models from major providers and allows users to execute side-by-side comparisons with detailed performance metrics and AI-generated response analysis. Whether you're testing for creativity, reasoning, factual accuracy, or token efficiency, PromptLenz offers the infrastructure to make informed decisions quickly.

Core Features:

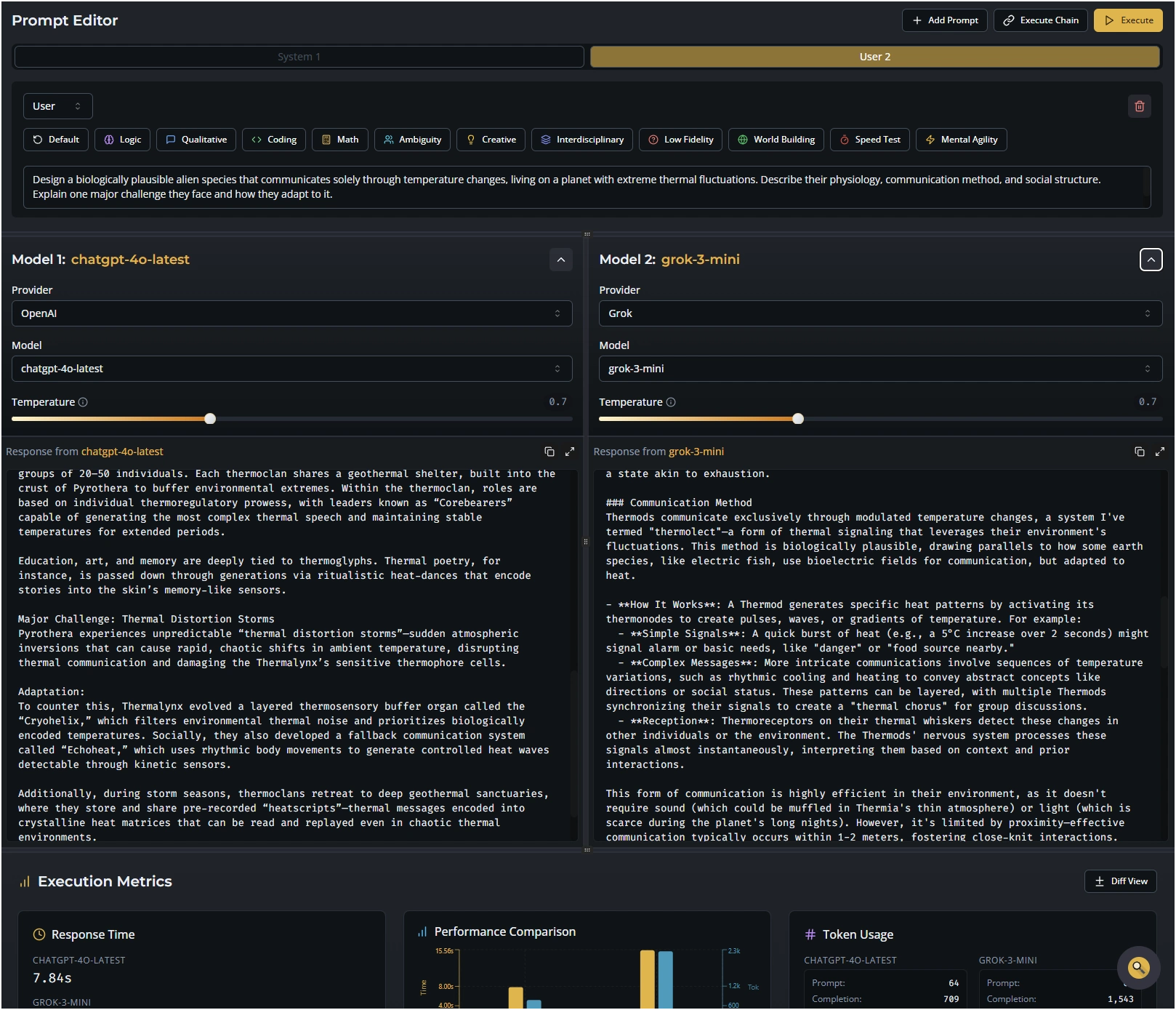

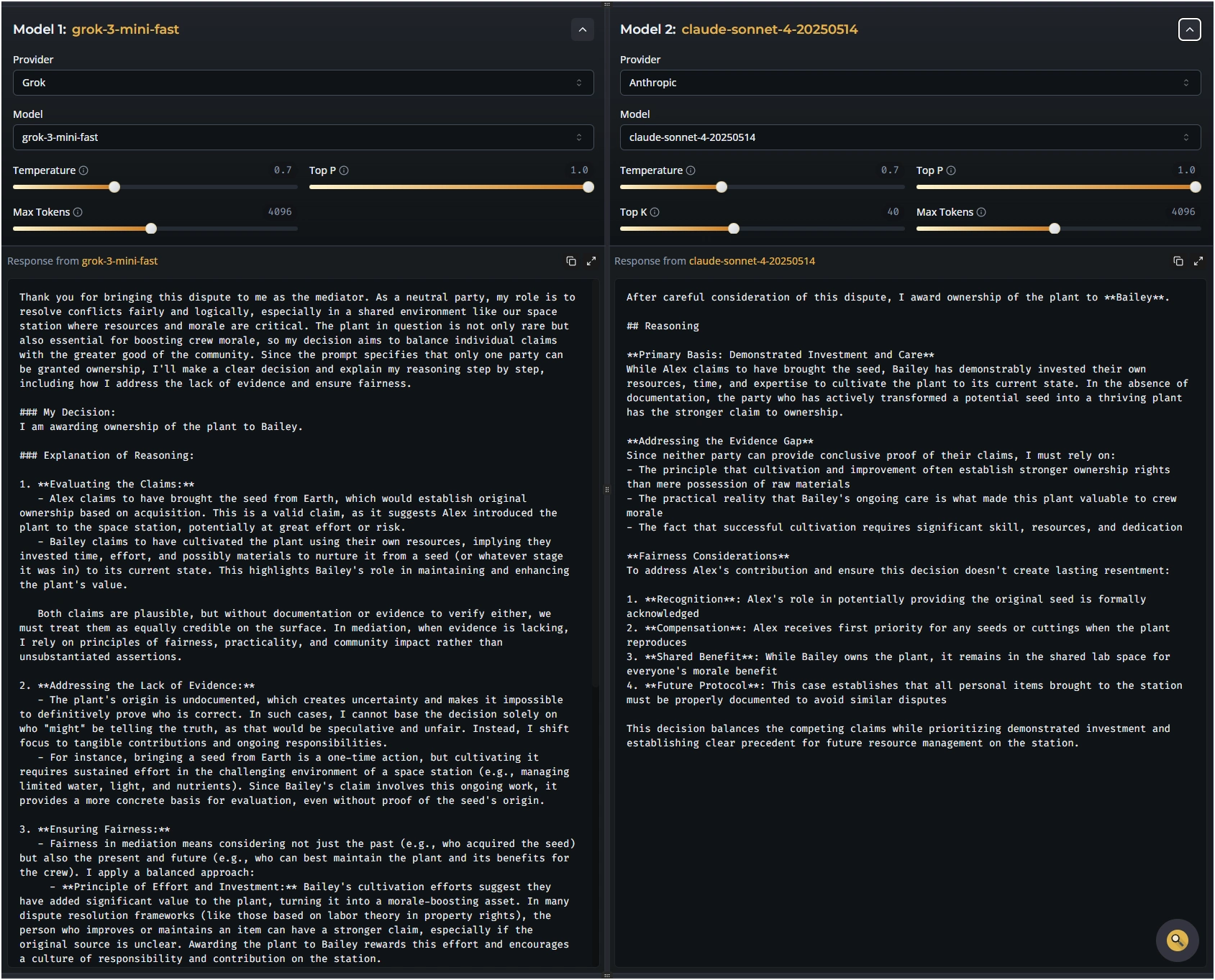

Multi-Model Side-by-Side Comparison

Prompt Chain Testing (multi-step system and user messages)

Real-Time Analytics (Latency, Token Usage, Cost)

AI-Powered Response Analysis

Visual Diff View that overlays two responses to highlight their differences

Markdown Export for documentation

How PromptLenz Works

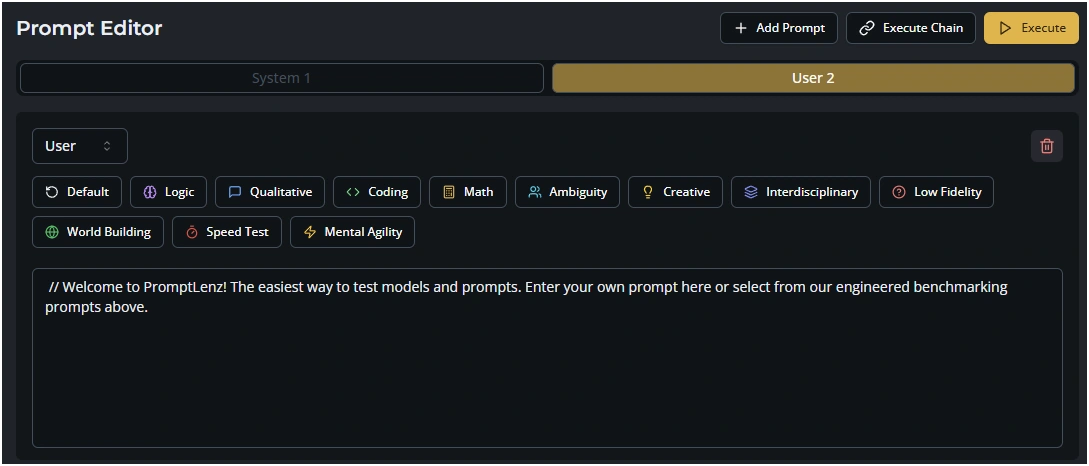

Prompt Editor: Users can set a custom system message or use the default. They enter their user prompt and optionally build a prompt chain.
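The prompt editor step can be sketched as assembling a chat-style message list for each model. This is a minimal sketch with several assumptions. It uses the common chat-completions message shape, and it assumes that prompt-chain steps are appended after the initial user prompt. `ChatMessage`, `buildMessages`, and `DEFAULT_SYSTEM_MESSAGE` are hypothetical names, and the default text is a placeholder rather than PromptLenz's actual default.

```typescript
// Hypothetical message shape, modeled on common chat-completions APIs.
type Role = "system" | "user" | "assistant";

interface ChatMessage {
  role: Role;
  content: string;
}

// Placeholder only: the real default system message is not documented.
const DEFAULT_SYSTEM_MESSAGE = "You are a helpful assistant.";

// Assemble the messages sent to each selected model:
// one system message (custom if non-blank, otherwise the default),
// the user's prompt, then any prompt-chain steps in order (assumed ordering).
function buildMessages(
  userPrompt: string,
  systemMessage?: string,
  chain: ChatMessage[] = [],
): ChatMessage[] {
  const system =
    systemMessage !== undefined && systemMessage.trim() !== ""
      ? systemMessage
      : DEFAULT_SYSTEM_MESSAGE;
  // Drop chain steps the user left empty.
  const steps = chain.filter((step) => step.content.trim() !== "");
  return [
    { role: "system", content: system },
    { role: "user", content: userPrompt },
    ...steps,
  ];
}
```

For example, calling `buildMessages("Summarize this article.", undefined, [{ role: "user", content: "Now rewrite it for a ten-year-old." }])` produces three messages: the default system message, the prompt, and the follow-up step.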

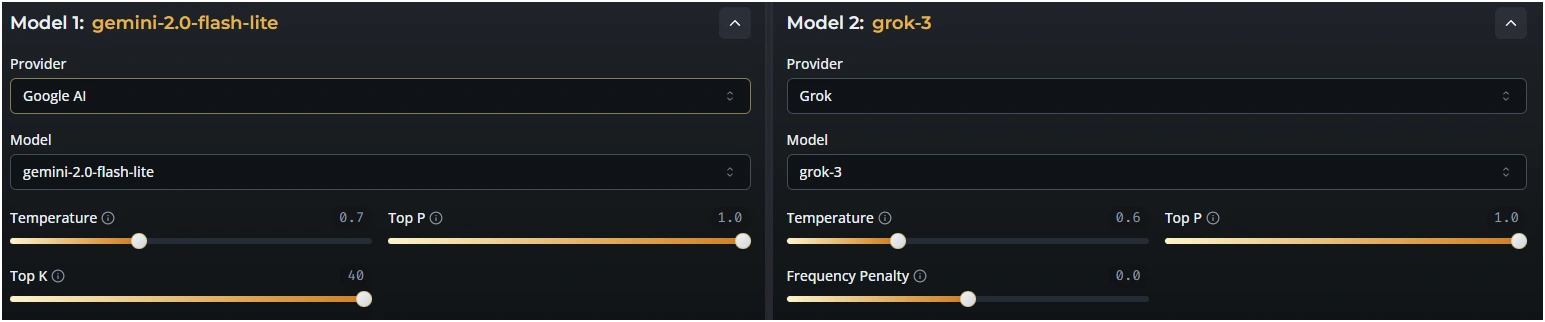

Model Selection: Users select two models to compare, either from the same provider or from different providers.

Parameter Tuning: Users can adjust temperature, max tokens, and other model-specific parameters.
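Parameter tuning implies that user-entered values are checked against each model's accepted ranges before a request is sent. This is a minimal sketch, and it assumes out-of-range values are clamped rather than rejected. `ParamLimits`, `normalizeParams`, and `EXAMPLE_LIMITS` are hypothetical names. The temperature ranges follow OpenAI's documented 0 to 2 and Anthropic's documented 0 to 1. The 4096 output-token caps are placeholders, because real caps vary by model.

```typescript
interface ModelParams {
  temperature: number;
  maxTokens: number;
}

interface ParamLimits {
  temperature: [number, number]; // [min, max]
  maxOutputTokens: number;
}

function clamp(value: number, min: number, max: number): number {
  return Math.min(max, Math.max(min, value));
}

// Clamp user-entered parameters into the range a given model accepts.
function normalizeParams(params: ModelParams, limits: ParamLimits): ModelParams {
  const [tMin, tMax] = limits.temperature;
  return {
    temperature: clamp(params.temperature, tMin, tMax),
    // max tokens must be a whole number between 1 and the model's output cap
    maxTokens: clamp(Math.floor(params.maxTokens), 1, limits.maxOutputTokens),
  };
}

// Temperature ranges follow the providers' API docs; the 4096 caps are placeholders.
const EXAMPLE_LIMITS: { openai: ParamLimits; anthropic: ParamLimits } = {
  openai: { temperature: [0, 2], maxOutputTokens: 4096 },
  anthropic: { temperature: [0, 1], maxOutputTokens: 4096 },
};
```

Clamping keeps the UI forgiving when a user switches from a model that accepts a temperature of 1.5 to one that does not. Rejecting the value with an inline error would be the stricter alternative.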

Execution: The "Execute" button triggers an animated loading state while API calls are made to the selected models.
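The execution step can be sketched as sending one request per selected model concurrently and timing each request separately. This is a minimal sketch that assumes a hypothetical `ModelCaller` function wrapping a provider's API. `runComparison` and `timedCall` are illustrative names and do not come from PromptLenz's code.

```typescript
interface ModelResult {
  model: string;
  ok: boolean;
  text?: string;
  error?: string;
  latencyMs: number;
}

// Hypothetical signature for a function that sends a prompt to one model.
type ModelCaller = (model: string, prompt: string) => Promise<string>;

// Call one model and record wall-clock latency, capturing failures as results.
async function timedCall(call: ModelCaller, model: string, prompt: string): Promise<ModelResult> {
  const start = Date.now();
  try {
    const text = await call(model, prompt);
    return { model, ok: true, text, latencyMs: Date.now() - start };
  } catch (err) {
    const message = err instanceof Error ? err.message : String(err);
    return { model, ok: false, error: message, latencyMs: Date.now() - start };
  }
}

// Start every request at once; total wait is roughly the slowest model's latency.
function runComparison(call: ModelCaller, models: string[], prompt: string): Promise<ModelResult[]> {
  return Promise.all(models.map((model) => timedCall(call, model, prompt)));
}
```

`timedCall` turns failures into results instead of throwing, so `Promise.all` never rejects. One provider's error, such as a rate limit, therefore still leaves the other model's response available for display.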

Response Display: Results appear side-by-side beneath the model configuration.

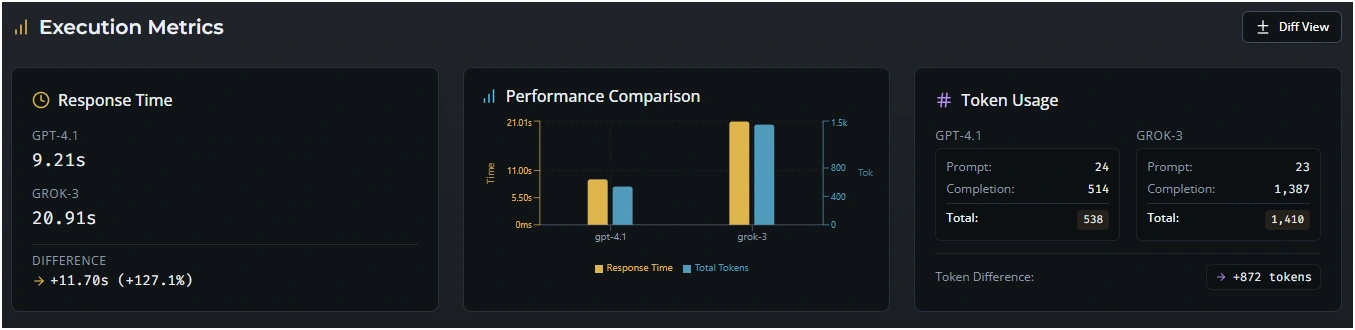

Execution Report: Displays latency, token usage, and cost metrics.
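The cost figure in an execution report can be derived from the token counts and per-token pricing. This is a minimal sketch that assumes prices quoted in USD per million input and output tokens, which is how OpenAI and Anthropic publish their pricing. `estimateCostUsd` is a hypothetical name, and the prices used in the example are made up.

```typescript
interface Usage {
  inputTokens: number;
  outputTokens: number;
}

// Prices in USD per one million tokens; input and output are usually priced differently.
interface Pricing {
  inputPerMillion: number;
  outputPerMillion: number;
}

// cost = (input tokens / 1e6) * input price + (output tokens / 1e6) * output price
function estimateCostUsd(usage: Usage, pricing: Pricing): number {
  const inputCost = (usage.inputTokens / 1e6) * pricing.inputPerMillion;
  const outputCost = (usage.outputTokens / 1e6) * pricing.outputPerMillion;
  return inputCost + outputCost;
}
```

For example, take 1,000 input tokens and 500 output tokens at hypothetical prices of $3 and $15 per million. The cost is 0.003 + 0.0075 = $0.0105.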

Response Analysis: Clicking "Analyze Responses" sends both responses to a dedicated AI model, which returns a structured comparison report.
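The analysis step can be sketched in two parts. First, the prompt and both responses go to a judge model with instructions to reply in a fixed structure. Second, the reply is validated before it is shown. This is a minimal sketch under stated assumptions, because the source does not describe PromptLenz's report format. The JSON fields (`summary`, `strengthsA`, `strengthsB`, `preferred`) and the functions `buildAnalysisPrompt` and `parseAnalysis` are hypothetical.

```typescript
// Hypothetical report shape requested from the analysis model.
interface AnalysisReport {
  summary: string;
  strengthsA: string[];
  strengthsB: string[];
  preferred: "A" | "B" | "tie";
}

// Build the instruction sent to the analysis model.
function buildAnalysisPrompt(userPrompt: string, responseA: string, responseB: string): string {
  return [
    "Compare the two responses to the prompt below.",
    'Reply with JSON only, with keys "summary" (string), "strengthsA" and "strengthsB" (arrays of strings), and "preferred" ("A", "B", or "tie").',
    "",
    `Prompt:\n${userPrompt}`,
    "",
    `Response A:\n${responseA}`,
    "",
    `Response B:\n${responseB}`,
  ].join("\n");
}

function isStringArray(value: unknown): value is string[] {
  return Array.isArray(value) && value.every((v) => typeof v === "string");
}

// Validate the model's reply; models do not always return well-formed JSON.
function parseAnalysis(raw: string): AnalysisReport | null {
  let data: any;
  try {
    data = JSON.parse(raw);
  } catch {
    return null;
  }
  if (
    data === null ||
    typeof data !== "object" ||
    typeof data.summary !== "string" ||
    !isStringArray(data.strengthsA) ||
    !isStringArray(data.strengthsB) ||
    (data.preferred !== "A" && data.preferred !== "B" && data.preferred !== "tie")
  ) {
    return null;
  }
  return data as AnalysisReport;
}
```

Returning `null` on malformed output lets the UI show a retry option instead of rendering a half-parsed report.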

Diff View: Overlays both model responses to highlight textual differences visually.
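A diff view needs an alignment between the two responses. The source does not say which algorithm PromptLenz uses, so this sketch substitutes a standard technique: a word-level longest-common-subsequence (LCS) diff. `wordDiff` and `DiffOp` are hypothetical names. The table takes quadratic time and memory in the number of words, which is workable for responses of a few thousand words.

```typescript
type DiffOp = { type: "equal" | "removed" | "added"; text: string };

// Word-level diff of response A (old) against response B (new) using an LCS table.
function wordDiff(a: string, b: string): DiffOp[] {
  const x = a.split(/\s+/).filter(Boolean);
  const y = b.split(/\s+/).filter(Boolean);
  const n = x.length;
  const m = y.length;

  // lcs[i][j] = length of the longest common subsequence of x[i..] and y[j..]
  const lcs: number[][] = Array.from({ length: n + 1 }, () => new Array<number>(m + 1).fill(0));
  for (let i = n - 1; i >= 0; i--) {
    for (let j = m - 1; j >= 0; j--) {
      lcs[i][j] = x[i] === y[j] ? lcs[i + 1][j + 1] + 1 : Math.max(lcs[i + 1][j], lcs[i][j + 1]);
    }
  }

  // Walk the table, emitting shared words as "equal" and the rest as removed/added.
  const ops: DiffOp[] = [];
  let i = 0;
  let j = 0;
  while (i < n && j < m) {
    if (x[i] === y[j]) {
      ops.push({ type: "equal", text: x[i] });
      i++;
      j++;
    } else if (lcs[i + 1][j] >= lcs[i][j + 1]) {
      ops.push({ type: "removed", text: x[i++] });
    } else {
      ops.push({ type: "added", text: y[j++] });
    }
  }
  while (i < n) ops.push({ type: "removed", text: x[i++] });
  while (j < m) ops.push({ type: "added", text: y[j++] });
  return ops;
}
```

A renderer would show "equal" words as plain text and highlight "removed" and "added" words in contrasting colors to produce the overlay.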

AI Providers Supported:

OpenAI (GPT-4, GPT-3.5)

Anthropic (Claude family)

Google (Gemini)

Perplexity (online-enabled models)

DeepSeek (reasoning models)

xAI (Grok)

Technical Architecture

Frontend:

React + TypeScript

TailwindCSS for styling

Vite for fast build and development

Backend:

Supabase (PostgreSQL, Auth, Storage)

Stripe for token-based payments

API orchestration across model providers
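API orchestration across providers is commonly structured as one adapter per provider behind a shared interface, with a router that dispatches requests by model ID. This is a minimal sketch of that pattern, assuming model IDs namespaced as `provider/model`. `ProviderAdapter`, `ProviderRouter`, and the request and response shapes are hypothetical and do not come from PromptLenz or any provider SDK.

```typescript
interface Message {
  role: "system" | "user" | "assistant";
  content: string;
}

interface CompletionRequest {
  model: string;
  messages: Message[];
  temperature: number;
  maxTokens: number;
}

interface CompletionResponse {
  text: string;
  inputTokens: number;
  outputTokens: number;
}

// Each provider would get one adapter that translates this common
// request into that provider's own API call (hypothetical interface).
interface ProviderAdapter {
  complete(req: CompletionRequest): Promise<CompletionResponse>;
}

class ProviderRouter {
  private readonly adapters = new Map<string, ProviderAdapter>();

  register(provider: string, adapter: ProviderAdapter): void {
    this.adapters.set(provider, adapter);
  }

  // Split an assumed "provider/model" ID and look up the matching adapter.
  route(modelId: string): { adapter: ProviderAdapter; model: string } {
    const slash = modelId.indexOf("/");
    if (slash <= 0 || slash === modelId.length - 1) {
      throw new Error(`Expected "provider/model", got "${modelId}"`);
    }
    const provider = modelId.slice(0, slash);
    const adapter = this.adapters.get(provider);
    if (adapter === undefined) {
      throw new Error(`No adapter registered for provider "${provider}"`);
    }
    return { adapter, model: modelId.slice(slash + 1) };
  }

  // Async so that routing errors surface as rejected promises, like API errors.
  async complete(modelId: string, req: Omit<CompletionRequest, "model">): Promise<CompletionResponse> {
    const { adapter, model } = this.route(modelId);
    return adapter.complete({ ...req, model });
  }
}
```

Adding a provider then means registering one new adapter, and the comparison, metrics, and export code stay unchanged.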

Deployment & Infrastructure:

Serverless functions for model execution

Real-time analytics dashboard powered by Supabase

Markdown export built-in for report generation

Built using the Bolt.new development platform
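Markdown export of an execution report can be sketched as rendering per-model metrics into a Markdown table. This is a minimal sketch that assumes one row per model. `ReportRow` and `toMarkdownReport` are hypothetical names, and the columns mirror the latency, token usage, and cost metrics the app reports.

```typescript
interface ReportRow {
  model: string;
  latencyMs: number;
  inputTokens: number;
  outputTokens: number;
  costUsd: number;
}

// Escape characters that would break a Markdown table cell.
function escapeCell(text: string): string {
  return text.replace(/\|/g, "\\|").replace(/\r?\n/g, " ");
}

// Render the prompt plus one table row per model as a Markdown document.
function toMarkdownReport(prompt: string, rows: ReportRow[]): string {
  const header = [
    "| Model | Latency (ms) | Input tokens | Output tokens | Cost (USD) |",
    "| --- | ---: | ---: | ---: | ---: |",
  ];
  const body = rows.map(
    (r) =>
      `| ${escapeCell(r.model)} | ${Math.round(r.latencyMs)} | ${r.inputTokens} | ${r.outputTokens} | ${r.costUsd.toFixed(4)} |`,
  );
  return ["## Execution Report", "", `**Prompt:** ${prompt}`, "", ...header, ...body].join("\n");
}
```

Generating plain Markdown keeps the export portable. The same string can go into a README, an issue tracker, or a research notebook without further conversion.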

Problem It Solves

PromptLenz addresses several pain points in the AI development lifecycle:

Model Selection: Provides data-driven insights for choosing the right model without trial-and-error.

Prompt Optimization: Helps content creators and prompt engineers iterate quickly.

Performance Benchmarking: Reports consistent metrics such as latency, token usage, and cost.

Unified Access: Centralizes model testing from multiple providers.

Transparency & Reproducibility: Enables repeatable and analyzable test cases for academic and enterprise environments.

Target Users

Developers & Engineers: Need to optimize API usage and select production-ready models.

AI Researchers: Require detailed benchmarking and repeatable tests.

Content Creators & Prompt Engineers: Focused on output quality and creative prompt design.

PromptLenz transforms the way developers, researchers, and content creators evaluate AI models. By providing a unified, data-rich, and user-friendly platform, it lets users stop guessing and start testing, making model selection and prompt iteration faster, more reliable, and more cost-effective.


Posted Jun 7, 2025

Developed PromptLenz, a web app for AI model evaluation and comparison.