I built a production-ready serverless LLM API on GCP

Posted Feb 2, 2026

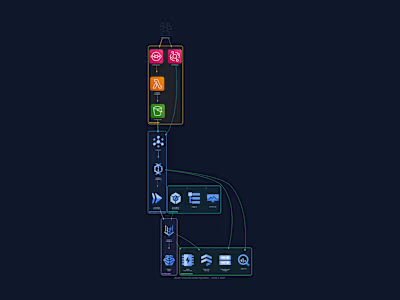

I built a production-ready serverless LLM API on GCP designed for low cost, strong security, and fast inference. Requests flow through CDN, load balancing, WAF, and API management before hitting a Cloud Run FastAPI service that handles prompts, session history, caching, and model routing. The system switches between Gemini 2.5 Pro for deep reasoning and Gemini Flash for fast responses, with RAG support using Vector Search over 768-dim embeddings. Data is stored in Firestore, cached in Redis, and logged to BigQuery. Everything is secured with VPC Service Controls, Workload Identity, KMS, Secret Manager, and DLP. CI/CD is fully automated with Terraform and Cloud Build using canary rollouts and auto-rollback on SLO violations. At around 50K requests per day, the platform runs at about $1K/month and scales to zero when idle.
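To make the model routing and the cost figure concrete, here is a minimal Python sketch. It is not the service's actual code: the post does not say how requests are classified, so the keyword hints, the `max_fast_chars` threshold, the model identifier strings, and the function names `route_model` and `cost_per_request` are all assumptions chosen for illustration.

```python
from dataclasses import dataclass

# Hypothetical identifiers: the post names the models but not the exact API strings.
DEEP_MODEL = "gemini-2.5-pro"
FAST_MODEL = "gemini-flash"

# Hypothetical markers of a "deep reasoning" request; the real criteria are not given.
REASONING_HINTS = ("prove", "step by step", "analyze", "compare", "debug")


@dataclass(frozen=True)
class RoutingDecision:
    model: str
    reason: str


def route_model(prompt: str, max_fast_chars: int = 2000) -> RoutingDecision:
    """Pick the deep model for long or reasoning-heavy prompts, else the fast one."""
    if len(prompt) > max_fast_chars:
        return RoutingDecision(DEEP_MODEL, "long prompt")
    text = prompt.lower()
    for hint in REASONING_HINTS:
        if hint in text:
            return RoutingDecision(DEEP_MODEL, f"reasoning hint: {hint}")
    return RoutingDecision(FAST_MODEL, "default fast path")


def cost_per_request(monthly_cost_usd: float, requests_per_day: int, days: int = 30) -> float:
    """Average cost of one request, assuming a month of `days` days at steady traffic."""
    return monthly_cost_usd / (requests_per_day * days)


if __name__ == "__main__":
    print(route_model("What is the capital of France?"))
    print(route_model("Analyze the tradeoffs between these two designs"))
    print(f"${cost_per_request(1000, 50_000):.5f} per request")
```

With the figures quoted above ($1K/month at 50K requests per day) and an assumed 30-day month, the average works out to roughly $0.00067 per request. A real router would more likely rely on token counts, an explicit client flag, or a lightweight classifier than on keyword matching.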