Research: An AI-Enabled Talking Robot 🤖

Overview 🔎

This is a project I carried out with a team to implement end-to-end vision-to-grasp functionality on a conversational humanoid robot.

Check out the paper here 🕵️‍♀️.

The paper describes the techniques used in the following video, which presents an interaction scenario realised with the Neuro-Inspired Companion (NICO) robot.

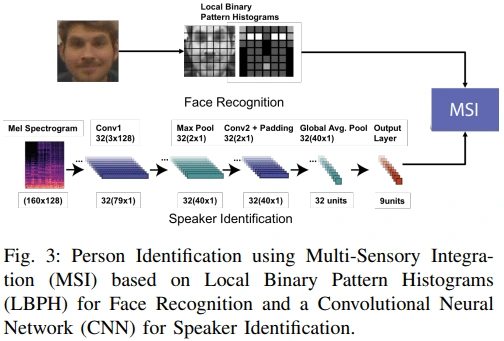

NICO engages users in a personalised conversation in which the robot continuously tracks their faces, remembers them, and interacts with them in natural language.

NICO can also learn to perform tasks such as remembering and recalling objects, and can thus assist users in their daily chores. The interaction system lets users interact with the robot as naturally as possible, which makes the interaction more interesting and engaging.

What I Did with the Robot 🤖

I contributed to the humanoid project in three different phases:

First, I built an interface to control an IoT haptic sensor (start, stop, record). We wanted to mount a haptic sensor on the robot's hand, so we ran an experiment in which the sensor was stroked across surfaces of multiple materials. I applied a self-organizing map (SOM) to cluster material properties from the sensor data. The model grouped readings from the same material into the same cluster and placed similar materials close to each other.

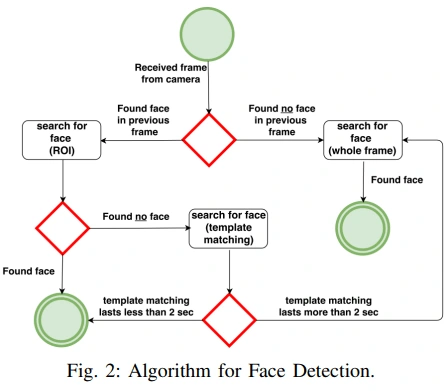

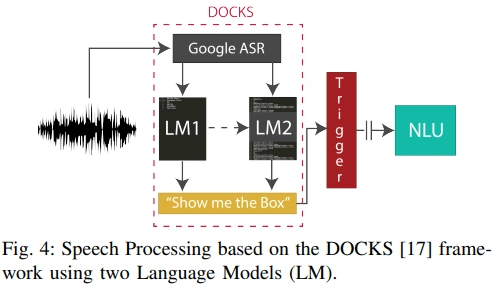

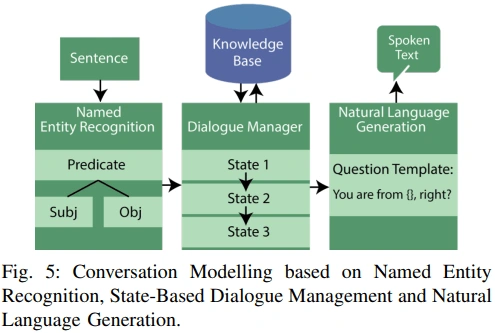
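
As a rough illustration of the SOM clustering described above (not the code used in the project), here is a minimal NumPy sketch. The three "materials", their 3-D feature vectors, the grid size, and the learning schedule are all assumptions made up for the example; the real sensor features are not shown here.

```python
import numpy as np


def best_matching_unit(weights, x):
    """Return the (row, col) of the node whose weight vector is closest to x."""
    dist2 = np.sum((weights - x) ** 2, axis=-1)
    return tuple(int(i) for i in np.unravel_index(np.argmin(dist2), dist2.shape))


def train_som(data, grid_shape=(4, 4), n_iters=2000, lr0=0.5, sigma0=1.5, seed=0):
    """Train a small SOM; returns weights of shape (rows, cols, n_features)."""
    rng = np.random.default_rng(seed)
    rows, cols = grid_shape
    # Initialise every node from a randomly chosen training sample.
    idx = rng.integers(0, len(data), size=rows * cols)
    weights = data[idx].reshape(rows, cols, -1).astype(float)
    # Grid coordinates of each node, used for neighbourhood distances.
    coords = np.stack(
        np.meshgrid(np.arange(rows), np.arange(cols), indexing="ij"), axis=-1
    )
    for t in range(n_iters):
        frac = t / n_iters
        lr = lr0 * (1.0 - frac)                 # learning rate decays linearly
        sigma = sigma0 * (1.0 - frac) + 0.1     # neighbourhood shrinks, stays > 0
        x = data[rng.integers(0, len(data))]
        bmu = np.array(best_matching_unit(weights, x))
        grid_dist2 = np.sum((coords - bmu) ** 2, axis=-1)
        h = np.exp(-grid_dist2 / (2.0 * sigma ** 2))
        # Pull the winning node and its grid neighbours towards the sample.
        weights += lr * h[..., None] * (x - weights)
    return weights


# Synthetic stand-in for haptic features: three well-separated "materials".
rng = np.random.default_rng(42)
centres = np.array([[0.0, 0.0, 0.0], [5.0, 5.0, 5.0], [10.0, 10.0, 10.0]])
data = np.concatenate([c + 0.3 * rng.standard_normal((50, 3)) for c in centres])

weights = train_som(data)
for name, centre in zip(("material A", "material B", "material C"), centres):
    print(name, "maps to node", best_matching_unit(weights, centre))
```

Each sample is assigned to its best-matching node, so readings from one material end up on the same or neighbouring nodes of the grid, which is the grouping behaviour described above.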

Second, in a group project, I helped build a computer vision module that detects faces in the camera image and makes the robot's head follow them. More work went into designing a dialogue system for conversations between the humanoid robot and a human assistant using ROS-SMACH. The dialogue system enabled the robot to respond to questions, derive capital cities from country names, and learn object names and their respective positions as taught by the human subject.
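
To give a feel for how such a dialogue can be organised as a state machine, here is a small sketch in plain Python. It only mimics the SMACH idea of states that return outcomes which are mapped to the next state; it does not use the ROS or SMACH APIs, and the state names, phrases, and capital list are hypothetical.

```python
# Illustrative subset; the real system's knowledge source is not shown here.
CAPITALS = {"germany": "Berlin", "france": "Paris", "japan": "Tokyo"}


def listen(ctx):
    """Classify the utterance and return an outcome label."""
    text = ctx["utterance"].lower().strip(" ?.!")
    if text.startswith("what is the capital of "):
        ctx["country"] = text[len("what is the capital of "):]
        return "capital_question"
    if text.startswith("this is the ") and " at " in text:
        name, position = text[len("this is the "):].split(" at ", 1)
        ctx["object"], ctx["position"] = name, position
        return "teach_object"
    if text.startswith("where is the "):
        ctx["object"] = text[len("where is the "):]
        return "recall_object"
    return "unknown"


def answer_capital(ctx):
    country = ctx["country"]
    capital = CAPITALS.get(country)
    if capital:
        ctx["reply"] = f"The capital of {country.title()} is {capital}."
    else:
        ctx["reply"] = f"I do not know the capital of {country.title()}."
    return "done"


def learn_object(ctx):
    ctx["memory"][ctx["object"]] = ctx["position"]
    ctx["reply"] = f"Okay, the {ctx['object']} is at {ctx['position']}."
    return "done"


def recall_object(ctx):
    position = ctx["memory"].get(ctx["object"])
    if position:
        ctx["reply"] = f"The {ctx['object']} is at {position}."
    else:
        ctx["reply"] = f"I have not learned where the {ctx['object']} is."
    return "done"


def fallback(ctx):
    ctx["reply"] = "Sorry, I did not understand that."
    return "done"


# state name -> (handler, {outcome: next state})
STATES = {
    "LISTEN": (listen, {
        "capital_question": "ANSWER_CAPITAL",
        "teach_object": "LEARN_OBJECT",
        "recall_object": "RECALL_OBJECT",
        "unknown": "FALLBACK",
    }),
    "ANSWER_CAPITAL": (answer_capital, {"done": "END"}),
    "LEARN_OBJECT": (learn_object, {"done": "END"}),
    "RECALL_OBJECT": (recall_object, {"done": "END"}),
    "FALLBACK": (fallback, {"done": "END"}),
}


def respond(utterance, memory):
    """Run one dialogue turn through the state machine and return the reply."""
    ctx = {"utterance": utterance, "memory": memory, "reply": ""}
    state = "LISTEN"
    while state != "END":
        handler, transitions = STATES[state]
        state = transitions[handler(ctx)]
    return ctx["reply"]


memory = {}  # object name -> taught position, kept across turns
print(respond("What is the capital of Japan?", memory))
print(respond("This is the cup at the left side of the table.", memory))
print(respond("Where is the cup?", memory))
```

Keeping the transitions in a table separates what each state does from where the conversation goes next, so new question types can be added without touching the existing states.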

Third, for my Master's thesis, I enhanced the vision-to-grasp functionality of the humanoid robot. I extended the robot from single-object detection to recognizing multiple objects in a scene by applying Grad-CAM and CNN methods. The implementation provides an end-to-end process in which the robot recognizes the objects, asks the user to choose one to pick up, identifies the chosen object, and grasps it using predicted arm and hand joint values.
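
For readers unfamiliar with Grad-CAM, the sketch below shows the standard heat-map computation on synthetic arrays: the gradients of a class score are averaged per feature-map channel, used to weight the activations, and passed through a ReLU. The toy activations, the class names, and the step that thresholds the heat map into a bounding box are assumptions for illustration, not a description of the thesis implementation.

```python
import numpy as np


def grad_cam(activations, gradients):
    """Grad-CAM heat map for one class.

    activations, gradients: arrays of shape (K, H, W) holding the feature maps
    of one conv layer and the gradient of the class score w.r.t. those maps.
    """
    channel_weights = gradients.mean(axis=(1, 2))              # one weight per channel
    cam = np.tensordot(channel_weights, activations, axes=1)   # weighted sum -> (H, W)
    cam = np.maximum(cam, 0.0)                                 # ReLU keeps positive evidence
    peak = cam.max()
    return cam / peak if peak > 0 else cam                     # scale to [0, 1]


def heatmap_to_box(cam, threshold=0.5):
    """Bounding box (x_min, y_min, x_max, y_max) of cells at or above threshold."""
    ys, xs = np.nonzero(cam >= threshold)
    if ys.size == 0:
        return None
    return int(xs.min()), int(ys.min()), int(xs.max()), int(ys.max())


# Toy scene: two feature-map channels, each firing on a different object.
activations = np.zeros((2, 6, 6))
activations[0, 1:3, 1:3] = 2.0   # channel 0 responds to the first object
activations[1, 3:5, 3:6] = 2.0   # channel 1 responds to the second object

# Hypothetical gradients: each class score rises with "its" channel only.
grads_cup = np.stack([np.ones((6, 6)), -np.ones((6, 6))])
grads_ball = -grads_cup

cam_cup = grad_cam(activations, grads_cup)
cam_ball = grad_cam(activations, grads_ball)
print("cup box:", heatmap_to_box(cam_cup))
print("ball box:", heatmap_to_box(cam_ball))
```

Computing one heat map per class is what lets a single image yield separate locations for several objects, so the object the user chooses can be singled out before grasping.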

Implemented Functionalities 🦾

Below are some figures taken from the work to showcase the main functionalities the team and I implemented on the NICO robot:

👋 Thanks for checking out my work. If this is similar or related to something you need to get done, I'd love to help you reach your goals. Feel free to reach out; I'm curious to know more about your use case! 🤩

Posted Oct 15, 2022
