Scalable Diffusion Models with Transformers (DiT)

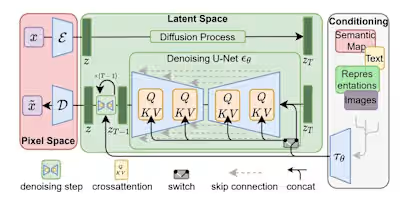

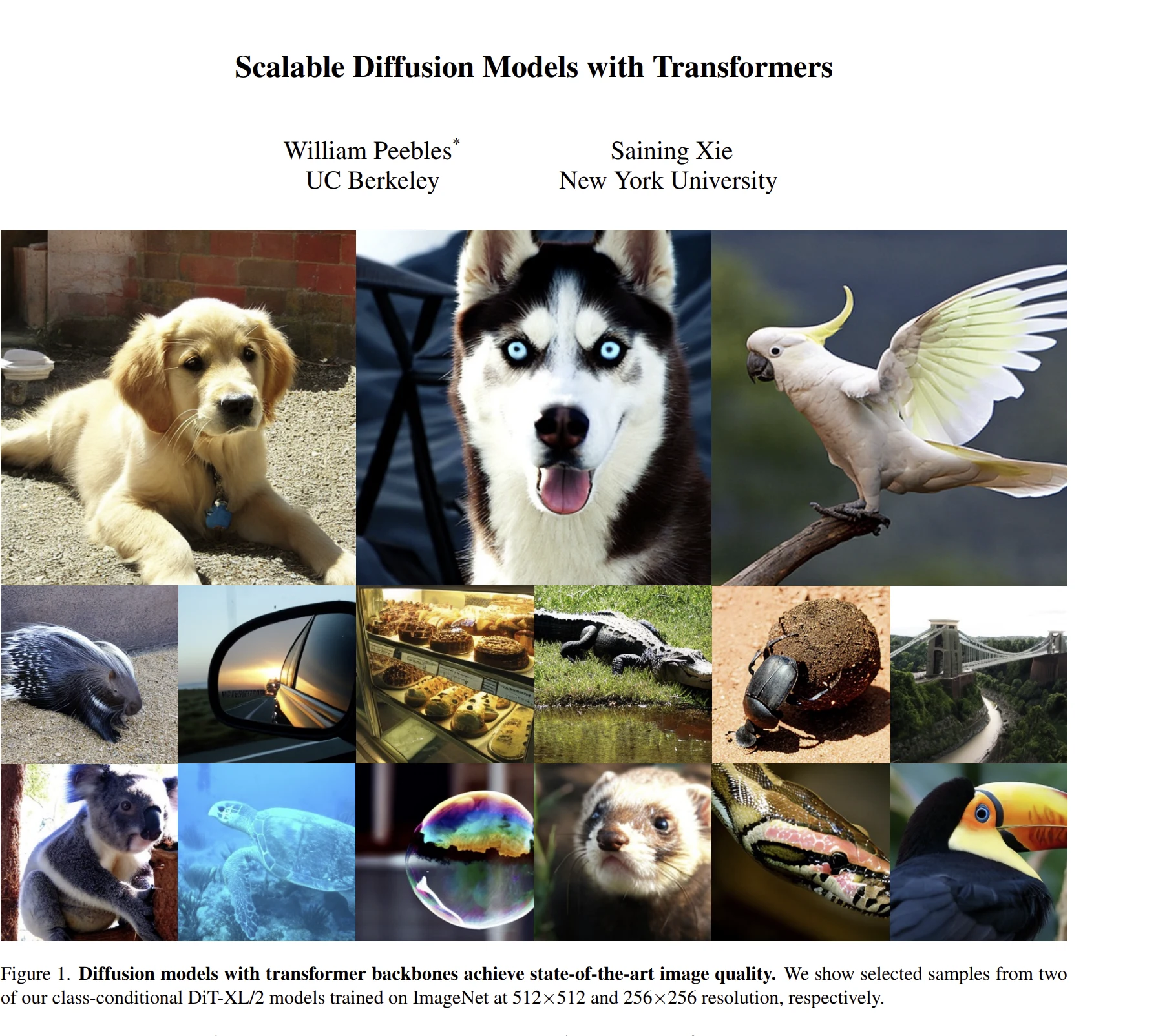

This project investigated the potential of the recently introduced DiT (Diffusion models with Transformers) architecture for text-to-image generation. DiT's inherent scalability made it an attractive choice, particularly considering the subsequent success of Stable Diffusion 3, which adopted a similar approach.

The core architecture leveraged a series of DiT blocks, meticulously constructed from individual text encoder and decoder components. This custom architecture aimed to bridge the semantic gap between textual descriptions and corresponding visual outputs.

Training was conducted on a dedicated cluster environment, utilizing a meticulously curated text-to-image dataset. While the resulting model demonstrated promising capabilities in generating coherent images, budgetary constraints limited the size of the training cluster. This, in turn, restricted the model's ability to fully exploit the potential of the DiT architecture.

Like this project

Posted Aug 3, 2024

This project investigated the potential of the recently introduced DiT architecture for text-to-image generation.

Likes

0

Views

20