Data Analytics Platform

Posted Feb 29, 2024

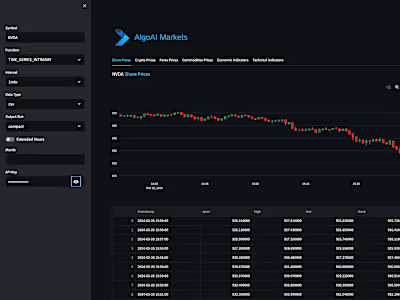

Built and implemented an NLP capability to support business decisions using AI

Clients

TAL

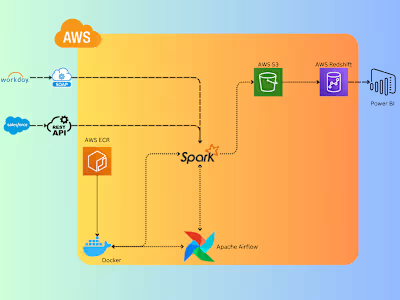

Designed an on-prem, microservice-driven architecture using Docker as a POC.

Successfully implemented the POC and migrated it to Azure Cloud.

Contributed to the implementation of an NLP algorithm, developing a dedicated API to serve and feed data to the NLP model.
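To illustrate what a dedicated API in front of an NLP model can look like, here is a minimal sketch using only the Python standard library. It is not the production code: the `score_text` function is a hypothetical stand-in for the model, and the JSON field names (`text`, `score`) are assumptions made for this example.

```python
import io
import json


def score_text(text):
    """Hypothetical stand-in for the NLP model: counts flagged terms."""
    flagged = {"smoker", "diabetes"}
    words = {w.strip(".,;").lower() for w in text.split()}
    return len(words & flagged)


def app(environ, start_response):
    """WSGI endpoint: reads a JSON body with a 'text' field, returns a JSON score."""
    try:
        length = int(environ.get("CONTENT_LENGTH") or 0)
        payload = json.loads(environ["wsgi.input"].read(length) or b"{}")
        text = payload["text"]
    except (ValueError, KeyError, TypeError):
        start_response("400 Bad Request", [("Content-Type", "application/json")])
        return [json.dumps({"error": "expected JSON body with a 'text' field"}).encode()]
    start_response("200 OK", [("Content-Type", "application/json")])
    return [json.dumps({"score": score_text(text)}).encode()]


# Call the app directly with a hand-built request, without starting a server.
body = json.dumps({"text": "Applicant is a smoker with diabetes."}).encode()
environ = {
    "REQUEST_METHOD": "POST",
    "CONTENT_LENGTH": str(len(body)),
    "wsgi.input": io.BytesIO(body),
}
statuses = []
response = app(environ, lambda status, headers: statuses.append(status))
result = json.loads(response[0])
```

Keeping the model behind a single function makes it possible to swap the placeholder for a real model without changing the request handling.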

Assisted in constructing ETL pipelines to support the API, ensuring efficient data integration and management. Successfully deployed these solutions to the corporate production system, optimising underwriting decisions for life insurance policies.
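The extract, transform, and load stages of such a pipeline can be sketched as follows. This is an illustrative example only: the CSV content, the column names (`applicant_id`, `notes`), and the in-memory SQLite target are all assumptions, not the actual data sources or schema.

```python
import csv
import io
import sqlite3

# Hypothetical raw extract; the fields and values are made up for illustration.
RAW_CSV = """applicant_id,notes
1,  Non-smoker; no prior claims.
2,
3,History of ASTHMA noted
"""


def extract(text):
    """Parse CSV text into a list of dict rows."""
    return list(csv.DictReader(io.StringIO(text)))


def transform(rows):
    """Drop rows with empty notes; normalise whitespace and case."""
    cleaned = []
    for row in rows:
        notes = " ".join(row["notes"].split()).lower()
        if notes:
            cleaned.append((int(row["applicant_id"]), notes))
    return cleaned


def load(records, conn):
    """Write cleaned records to a table that an API could read from."""
    conn.execute(
        "CREATE TABLE IF NOT EXISTS notes (applicant_id INTEGER PRIMARY KEY, notes TEXT)"
    )
    conn.executemany("INSERT INTO notes VALUES (?, ?)", records)
    conn.commit()


conn = sqlite3.connect(":memory:")
load(transform(extract(RAW_CSV)), conn)
rows = conn.execute(
    "SELECT applicant_id, notes FROM notes ORDER BY applicant_id"
).fetchall()
```

Separating the three stages into functions keeps each one testable on its own, which matters once the cleaned data feeds a model.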

The tasks described above involve natural language processing (NLP), feeding data to models, and supporting decisions such as underwriting for life insurance. Different types of neural networks (NNs) can be employed depending on the specific requirements and characteristics of each task. Here are some of the ways I used NNs:

Recurrent Neural Networks (RNNs): These are particularly suited for sequential data like text. RNNs can process inputs of varying lengths and are commonly used in NLP tasks. However, they might struggle with long-term dependencies.
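As a rough illustration of how an RNN handles inputs of varying lengths, the sketch below runs a simple (Elman-style) recurrent layer in plain Python. The weights are random and the token embeddings are made-up numbers, so it shows only the mechanics, not a trained model.

```python
import math
import random


def rnn_forward(sequence, W_xh, W_hh, b_h):
    """Run a simple RNN over a sequence and return the final hidden state."""
    hidden_size = len(b_h)
    h = [0.0] * hidden_size
    for x in sequence:
        # The new state depends on the current input and the previous state.
        h = [
            math.tanh(
                sum(W_xh[i][j] * x[j] for j in range(len(x)))
                + sum(W_hh[i][k] * h[k] for k in range(hidden_size))
                + b_h[i]
            )
            for i in range(hidden_size)
        ]
    return h


random.seed(0)
embed_dim, hidden_size = 4, 3
W_xh = [[random.uniform(-0.5, 0.5) for _ in range(embed_dim)] for _ in range(hidden_size)]
W_hh = [[random.uniform(-0.5, 0.5) for _ in range(hidden_size)] for _ in range(hidden_size)]
b_h = [0.0] * hidden_size

# Two "texts" of different lengths, each token a random embedding vector.
short_text = [[random.random() for _ in range(embed_dim)] for _ in range(3)]
long_text = [[random.random() for _ in range(embed_dim)] for _ in range(7)]

h_short = rnn_forward(short_text, W_xh, W_hh, b_h)
h_long = rnn_forward(long_text, W_xh, W_hh, b_h)
```

Both calls return a hidden state of the same size regardless of sequence length, because the same weights are reused at every step.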

Long Short-Term Memory (LSTM) networks: A special kind of RNN, LSTMs are designed to mitigate the long-term dependency problem. They work well for tasks that require learning from important information that appeared many steps back in the sequence, which could be beneficial for understanding the context in underwriting decisions.

Gated Recurrent Units (GRUs): GRUs are a variation of LSTMs that are simpler and can be more efficient computationally. They are also effective for sequential data and can be used for similar NLP tasks.

Convolutional Neural Networks (CNNs): While primarily used for image processing, CNNs have also been successfully applied to NLP tasks. They can be used to extract features from text data, although they are less common for purely text-based applications compared to RNNs and LSTMs.
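Feature extraction from text with a CNN can be sketched as one filter sliding over windows of consecutive token embeddings, followed by max-over-time pooling. The embeddings and filter values below are made up for illustration, and the function assumes the sentence has at least as many tokens as the filter is wide.

```python
def conv1d_max_pool(embeddings, kernel, bias=0.0):
    """Slide one filter over token windows, apply ReLU, then take the max."""
    width = len(kernel)
    activations = []
    for start in range(len(embeddings) - width + 1):
        window = embeddings[start:start + width]
        total = bias + sum(
            kernel[i][d] * window[i][d]
            for i in range(width)
            for d in range(len(window[i]))
        )
        activations.append(max(0.0, total))  # ReLU
    return max(activations)  # max-over-time pooling


# Toy 2-dimensional embeddings for a 4-token sentence.
sentence = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0], [0.0, 0.0]]
# One filter spanning 2 consecutive tokens.
kernel = [[1.0, 0.0], [0.0, 1.0]]
feature = conv1d_max_pool(sentence, kernel)
```

Each filter yields one feature per sentence. A real model would learn many filters of different widths and feed the pooled features to a classifier.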

Transformer models: This is a newer architecture that has become very popular for NLP tasks. Transformers avoid recurrence and instead use attention mechanisms to process all positions of a sequence in parallel. This makes them faster to train and more scalable than RNNs and LSTMs. Models like BERT and others based on the Transformer architecture have set new standards in NLP for a variety of tasks, including text classification, translation, and question-answering.
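The attention mechanism mentioned above can be sketched in plain Python as scaled dot-product attention. This is a simplified illustration: the token vectors are made up, and the learned query, key, and value projections that a real Transformer applies are omitted, so the same vectors play all three roles.

```python
import math


def softmax(scores):
    """Numerically stable softmax over a list of scores."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def attention(queries, keys, values):
    """Scaled dot-product attention: softmax(Q K^T / sqrt(d_k)) V."""
    d_k = len(keys[0])
    outputs, all_weights = [], []
    for q in queries:
        # Each query is scored against every key; no step depends on another query.
        scores = [
            sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d_k) for k in keys
        ]
        weights = softmax(scores)
        out = [
            sum(w * v[d] for w, v in zip(weights, values))
            for d in range(len(values[0]))
        ]
        outputs.append(out)
        all_weights.append(weights)
    return outputs, all_weights


# Toy 2-dimensional vectors for a 3-token sequence (self-attention).
tokens = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
outputs, weights = attention(tokens, tokens, tokens)
```

Because each query's output is computed independently of the others, the loop over queries can run in parallel, which is the property that lets Transformers avoid step-by-step recurrence.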