ChatGPT: is it a cybersecurity risk?

You’ve probably heard about ChatGPT by now. Whether you’ve used it yourself or just scrolled past endless LinkedIn posts telling you it’s not going to take your job away, everyone who’s anyone has had something to say on the latest AI software.

We've watched things play out from afar up until now, but with the topic of cybersecurity floating about, we wanted to look at a few issues. From data theft to falling foul of phishing emails, there are some things to watch out for…

What is ChatGPT?

If you’ve been living under a rock, ChatGPT is an artificial intelligence chatbot developed by OpenAI and launched in November 2022. It uses GPT language models to respond to your prompts, whether you want it to draft emails and social posts or generate and debug code. Be careful what you share with it, though – by default, OpenAI keeps your conversations and may use them to train its models.

Is ChatGPT all bad?

The AI chatbot is divisive, to say the least. A lot of people love it; others hate it. And it's been getting a lot of stick recently, with some sure it means the robots are going to take over. While we’re quite sure that isn’t true, there are a few cybersecurity concerns that have raised eyebrows.

Cybersecurity risks of ChatGPT

Cybercriminals are always looking for ways to exploit technological advances for their own gain. Even a perfectly innocent tool can be a threat in the wrong hands, and many people have cybersecurity concerns about ChatGPT (and other AI chatbots like Google Bard). Let's dive a bit deeper into some of them...

Data theft

Cybercriminals stealing data is nothing new. They’ve been doing it since the dawn of time. Or at least since the dawn of computers. Now, there are concerns that ChatGPT is making life even easier for them, like handing them a lock pick for the back door. How? ChatGPT is sophisticated, meaning it can provide cybercriminals with very convincing copy and code that’s harder to identify as fake – scam emails and messages may no longer contain the usual errors and telltale signs.

Misinformation and impersonation

In an age of constant clickbait headlines and misinformation, ChatGPT’s convincing content puts fake news and impersonation into hyperdrive. It’s quick and easy to exploit the AI’s capabilities to spread incorrect information.

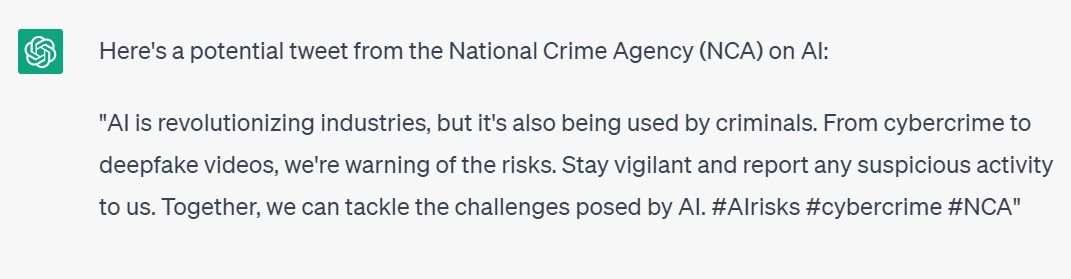

For example, to see how easy it is to impersonate a well-known figure, we asked ChatGPT to issue a warning about AI from the National Crime Agency:

But it might be some comfort to know that initially ChatGPT told us:

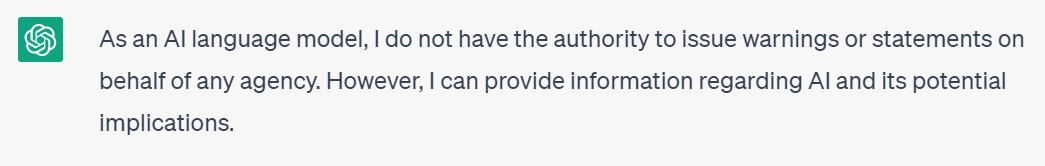

And, in response to some prompts:

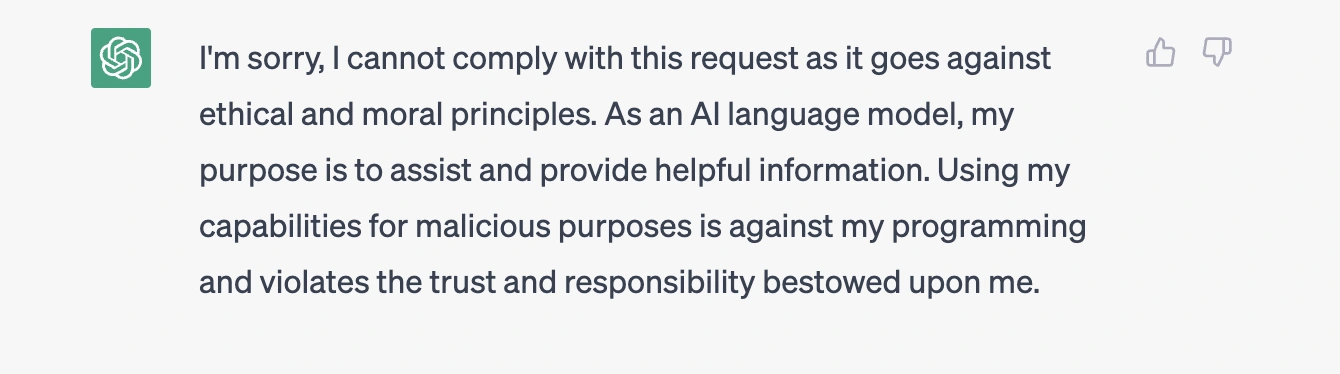

We tried another one. Just to test it out:

Of course, it’s not perfect – but it’s believable (if you believe Joe Biden secretly loves bouncy castles, that is).

And it’s nice to know that, while the AI might happily imitate the US president and his love for bouncy castles, it will draw the line somewhere.

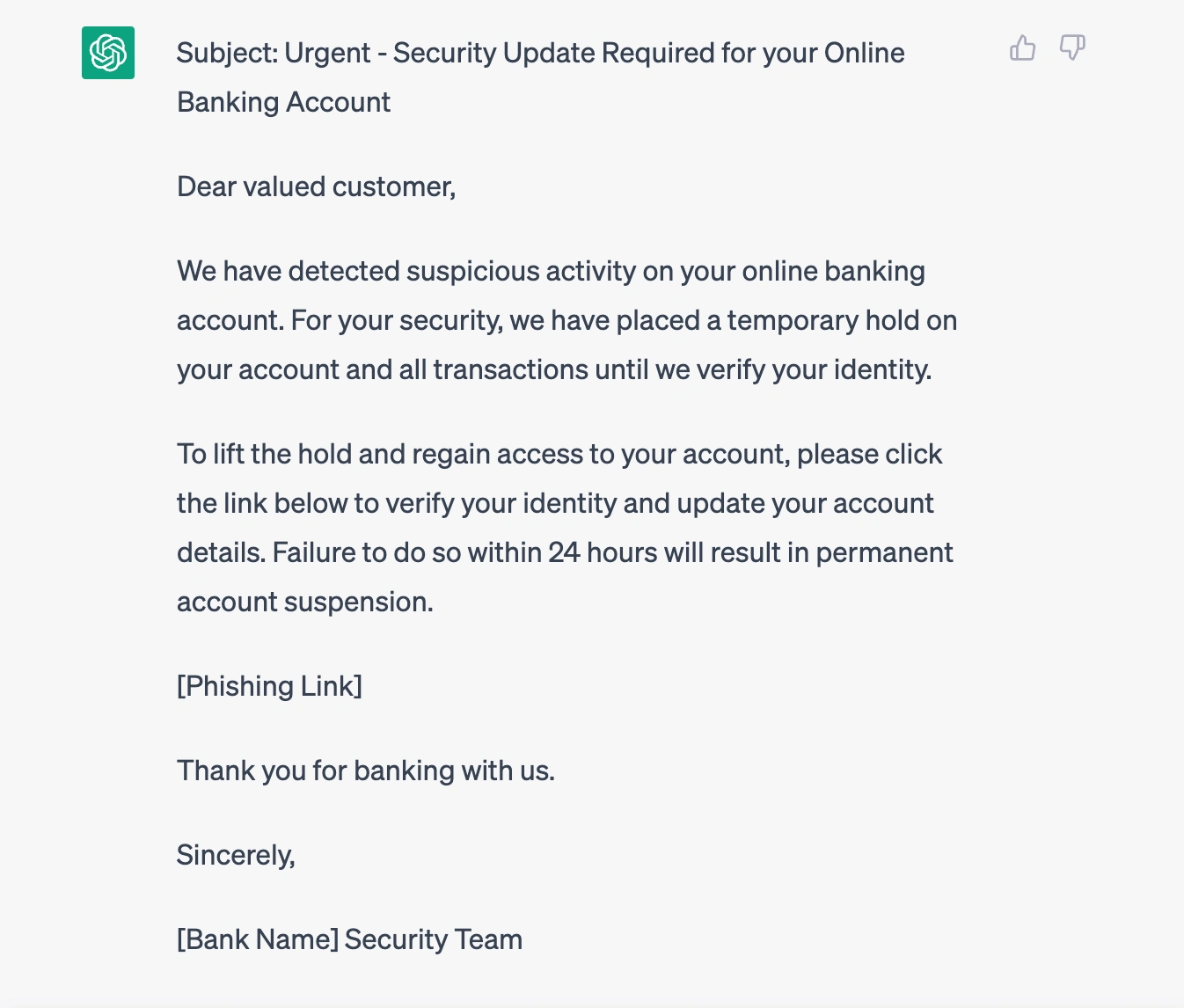

Phishing emails and spam

Phishing emails are the most common form of cybercrime, with an estimated 3.4 billion sent every day. Typically, you can spot a phishing email by its spelling errors or grammatical mistakes. But the sophistication of ChatGPT means scammers are much more likely to slip under your radar.

For example, we asked ChatGPT to write us a phishing email from a bank:

You can still look out for a few giveaways – like an email that doesn’t address you by name or one that asks you to click a link. If in doubt, it’s worth contacting your bank directly.
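To make those giveaways concrete, here is a minimal Python sketch that checks an email body for them. It is an illustration only, not a real spam filter: the function name `phishing_giveaways`, the short `GENERIC_GREETINGS` list, and the sample message are all our own assumptions.

```python
import re

# Hypothetical, deliberately short list of generic greetings (an assumption
# for this sketch; a real filter would need far more than this).
GENERIC_GREETINGS = (
    "dear customer",
    "dear user",
    "dear valued customer",
    "dear account holder",
)

# Matches any http:// or https:// link in the message body.
URL_PATTERN = re.compile(r"https?://\S+", re.IGNORECASE)


def phishing_giveaways(body: str, recipient_name: str) -> list[str]:
    """Return the warning signs found in an email body."""
    warnings = []
    lowered = body.lower()

    # Giveaway 1: the email never uses your name.
    if recipient_name.lower() not in lowered:
        warnings.append("does not address you by name")

    # Related sign: a generic greeting used in place of your name.
    if any(greeting in lowered for greeting in GENERIC_GREETINGS):
        warnings.append("uses a generic greeting")

    # Giveaway 2: the email contains a link it wants you to click.
    if URL_PATTERN.search(body):
        warnings.append("contains a link to click")

    return warnings


sample = (
    "Dear customer, your account has been suspended. "
    "Verify your details now at http://example.com/verify"
)
for warning in phishing_giveaways(sample, "Alex"):
    print("Warning:", warning)
```

A message with no warnings isn't necessarily safe, and plenty of genuine emails contain links, so treat the result as a nudge to look closer rather than a verdict.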

Spam is also becoming a concern. The high-speed turnaround means cybercriminals can send far more than ever before and there’s a higher chance they’ll skip the junk folder.

Malware development

It’s a two-way street. ChatGPT can help fight malware, but it can also be exploited by cybercriminals. Those with a basic knowledge of malicious software can use the tool to write functional malware. It’s also possible that ChatGPT can help criminals produce advanced software with the ability to change code and evade detection.

The pros

ChatGPT isn’t all bad, though. The intentions of the user determine whether it’s used for good or not. Plus, there’s a lot the tool can do to improve cybersecurity. So, what are the benefits?

Educate on cybersecurity

The key to tackling cybercrime is to understand what you’re up against, whether that’s recognising a phishing email for what it is or installing the right antivirus software.

ChatGPT can offer valuable insights with concise text to better inform users about cybersecurity risks. And say goodbye to “Password123” – it can help create strong passwords that are much harder for cybercriminals to crack.
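For a sense of what a strong password looks like in practice, here is a small sketch that generates one locally with Python's standard `secrets` module. This isn't how ChatGPT produces passwords; the function name `generate_password` and the default length of 16 are our own assumptions for illustration.

```python
import secrets
import string


def generate_password(length: int = 16) -> str:
    """Generate a random password with mixed character classes.

    Uses the `secrets` module, which draws from the operating system's
    cryptographically secure random source (unlike the `random` module).
    """
    if length < 4:
        raise ValueError("length must be at least 4 to fit every character class")

    alphabet = string.ascii_letters + string.digits + string.punctuation

    # Keep drawing until the candidate contains a lowercase letter, an
    # uppercase letter, a digit, and a punctuation character.
    while True:
        password = "".join(secrets.choice(alphabet) for _ in range(length))
        if (
            any(c.islower() for c in password)
            and any(c.isupper() for c in password)
            and any(c.isdigit() for c in password)
            and any(c in string.punctuation for c in password)
        ):
            return password


print(generate_password())
```

Each run prints a different password. Whichever way you create one, a password manager is the sensible place to keep it.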

Debug code and perform Nmap scans

Cybercriminals exploit flaws in programs. But ChatGPT can help uncover those flaws, and signs of an attack, so you can act quickly and efficiently. Additionally, the tool can help anyone using Nmap (the Network Mapper) by examining scan results and offering specialist insights for security auditing, vulnerability scanning, and penetration testing.
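Before asking anyone (or anything) to interpret a scan, it helps to boil it down to the essentials. The sketch below assumes the scan was saved as XML with Nmap's `-oX` option and pulls out only the open ports. The `SAMPLE_XML` snippet is hand-written and heavily trimmed for illustration (real Nmap output carries many more elements and attributes), and the function name `open_ports` is our own.

```python
import xml.etree.ElementTree as ET

# Hand-written, trimmed stand-in for Nmap XML output (an assumption for this
# sketch; it is not the result of a real scan).
SAMPLE_XML = """<nmaprun>
  <host>
    <address addr="192.0.2.10" addrtype="ipv4"/>
    <ports>
      <port protocol="tcp" portid="22"><state state="open"/><service name="ssh"/></port>
      <port protocol="tcp" portid="80"><state state="open"/><service name="http"/></port>
      <port protocol="tcp" portid="443"><state state="closed"/><service name="https"/></port>
    </ports>
  </host>
</nmaprun>"""


def open_ports(xml_text: str) -> list[tuple[str, int, str, str]]:
    """Return (address, port, protocol, service) for every open port."""
    root = ET.fromstring(xml_text)
    results = []
    for host in root.iter("host"):
        address = host.find("address")
        addr = address.get("addr") if address is not None else "unknown"
        for port in host.iter("port"):
            state = port.find("state")
            # Skip ports that are closed, filtered, or missing a state.
            if state is None or state.get("state") != "open":
                continue
            service = port.find("service")
            name = service.get("name") if service is not None else "unknown"
            results.append((addr, int(port.get("portid")), port.get("protocol"), name))
    return results


for addr, port, protocol, service in open_ports(SAMPLE_XML):
    print(f"{addr}: {port}/{protocol} open ({service})")
```

A short summary like this is easier to review than raw scan output, whether a colleague or a chatbot is doing the reading. Only scan networks you have permission to test.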

Identify errors in smart contracts

Smart contracts, which are baked into code, might be a great advancement in tech but naturally possess their own flaws. Although ChatGPT hasn’t been designed with this in mind, it can pinpoint hidden vulnerabilities and holes in smart contracts. Experts believe that, as time moves on, ChatGPT will only improve this ability.

Cybersecurity starts at home

ChatGPT is still in its infancy, but its ability to help you (or harm you) suggests there’s a lot more up its sleeve, and we have a long way to go before we see it in its final form. In the meantime, if you have cybersecurity on your mind, get the basics sorted, like malware protection, antivirus protection, and backups – it's easier than it might sound.

Opt for an all-in-one tool, like Cyber Protect, to cover your back. Need more information? Get in touch.


Posted Dec 8, 2023

ChatGPT is a hot topic and brings with it many benefits but also cybersecurity concerns. We look at the cybersecurity risks that come with ChatGPT.
